Prompt engineering focuses on crafting precise input queries to optimize the performance of AI models, enhancing their response relevance and accuracy. Model evaluation involves systematically assessing an AI model's effectiveness through metrics such as accuracy, precision, recall, and F1 score to ensure reliability and robustness. Explore these essential facets to deepen your understanding of AI development and application.

Why it is important

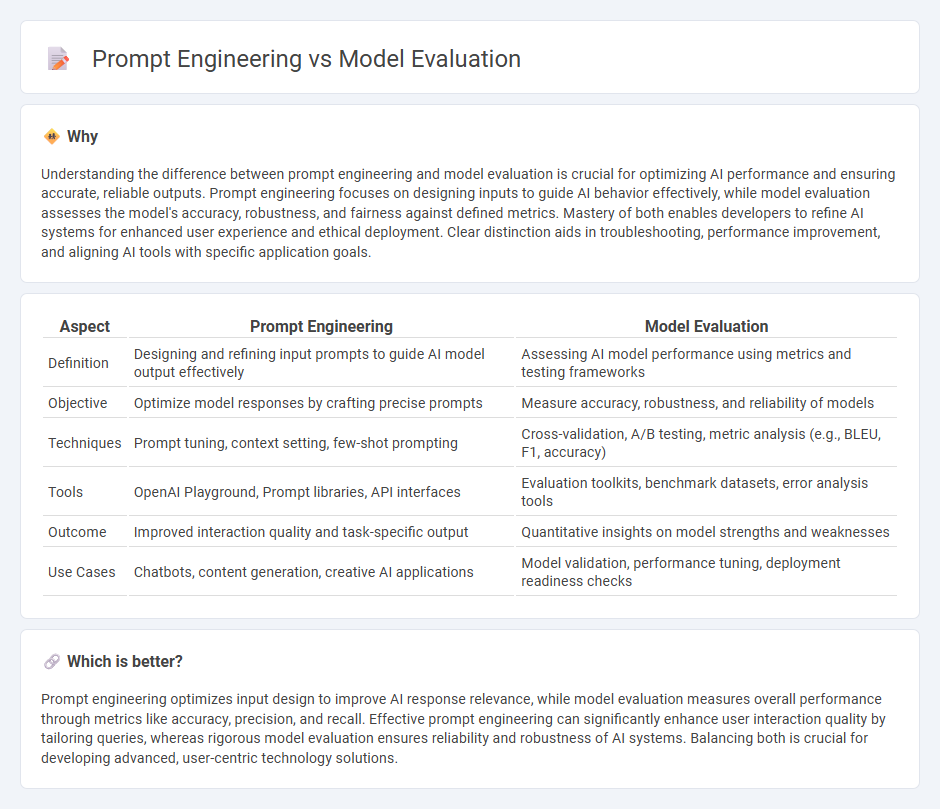

Understanding the difference between prompt engineering and model evaluation is crucial for optimizing AI performance and ensuring accurate, reliable outputs. Prompt engineering focuses on designing inputs to guide AI behavior effectively, while model evaluation assesses the model's accuracy, robustness, and fairness against defined metrics. Mastery of both enables developers to refine AI systems for enhanced user experience and ethical deployment. Clear distinction aids in troubleshooting, performance improvement, and aligning AI tools with specific application goals.

Comparison Table

| Aspect | Prompt Engineering | Model Evaluation |

|---|---|---|

| Definition | Designing and refining input prompts to guide AI model output effectively | Assessing AI model performance using metrics and testing frameworks |

| Objective | Optimize model responses by crafting precise prompts | Measure accuracy, robustness, and reliability of models |

| Techniques | Prompt tuning, context setting, few-shot prompting | Cross-validation, A/B testing, metric analysis (e.g., BLEU, F1, accuracy) |

| Tools | OpenAI Playground, Prompt libraries, API interfaces | Evaluation toolkits, benchmark datasets, error analysis tools |

| Outcome | Improved interaction quality and task-specific output | Quantitative insights on model strengths and weaknesses |

| Use Cases | Chatbots, content generation, creative AI applications | Model validation, performance tuning, deployment readiness checks |

Which is better?

Prompt engineering optimizes input design to improve AI response relevance, while model evaluation measures overall performance through metrics like accuracy, precision, and recall. Effective prompt engineering can significantly enhance user interaction quality by tailoring queries, whereas rigorous model evaluation ensures reliability and robustness of AI systems. Balancing both is crucial for developing advanced, user-centric technology solutions.

Connection

Prompt engineering directly influences the effectiveness of natural language models by optimizing input queries to generate precise and relevant outputs. Model evaluation measures the performance of these models using metrics like accuracy, F1 score, and human-in-the-loop feedback to ensure that the prompts yield consistent and meaningful responses. This iterative connection between prompt engineering and model evaluation drives continuous improvements in AI systems such as GPT, BERT, and other transformer-based architectures.

Key Terms

Accuracy

Model evaluation measures a model's accuracy by comparing its predictions to actual outcomes using metrics like precision, recall, and F1 score, ensuring reliability in real-world applications. Prompt engineering optimizes input queries to enhance model performance and increase accuracy by crafting effective prompts that guide the model's responses. Explore methods to boost accuracy through targeted prompt design and comprehensive evaluation techniques.

Prompt Design

Prompt design in prompt engineering centers on crafting precise and effective inputs that guide AI models to generate desired outputs, enhancing task-specific performance. In contrast, model evaluation assesses the accuracy, reliability, and relevance of AI responses using metrics like BLEU, ROUGE, or human judgment. Explore how refined prompt design strategies can significantly improve AI model outputs and streamline evaluation processes.

Metrics

Model evaluation centers on metrics such as accuracy, precision, recall, F1 score, and confusion matrices to quantify a model's performance and reliability. Prompt engineering influences output quality by optimizing input queries to improve relevance and reduce bias, assessed through user satisfaction, coherence scores, and response diversity. Explore detailed metric comparisons to enhance both model evaluation and prompt engineering strategies.

Source and External Links

Model Evaluation - Model evaluation assesses a machine learning model's performance using metrics like accuracy, precision, and confusion matrices to understand its strengths and weaknesses.

What Is Model Evaluation in Machine Learning? - Model evaluation is the process of determining how well a model performs its task by using metrics-driven analysis, which varies based on the data, algorithm, and use case.

What is model performance evaluation? - Model performance evaluation uses monitoring to assess how well a model performs its specific task, often using metrics like classification and regression methods.

dowidth.com

dowidth.com