Retrieval-augmented generation (RAG) supplements a generative model with information retrieved from external knowledge sources, which can improve the accuracy and relevance of generated content. Supervised learning, by contrast, trains predictive models on pre-labeled datasets. Because a RAG system retrieves pertinent information during generation, it can draw on knowledge that was not fixed in its training data. The sections below compare RAG with traditional supervised learning.

Why it is important

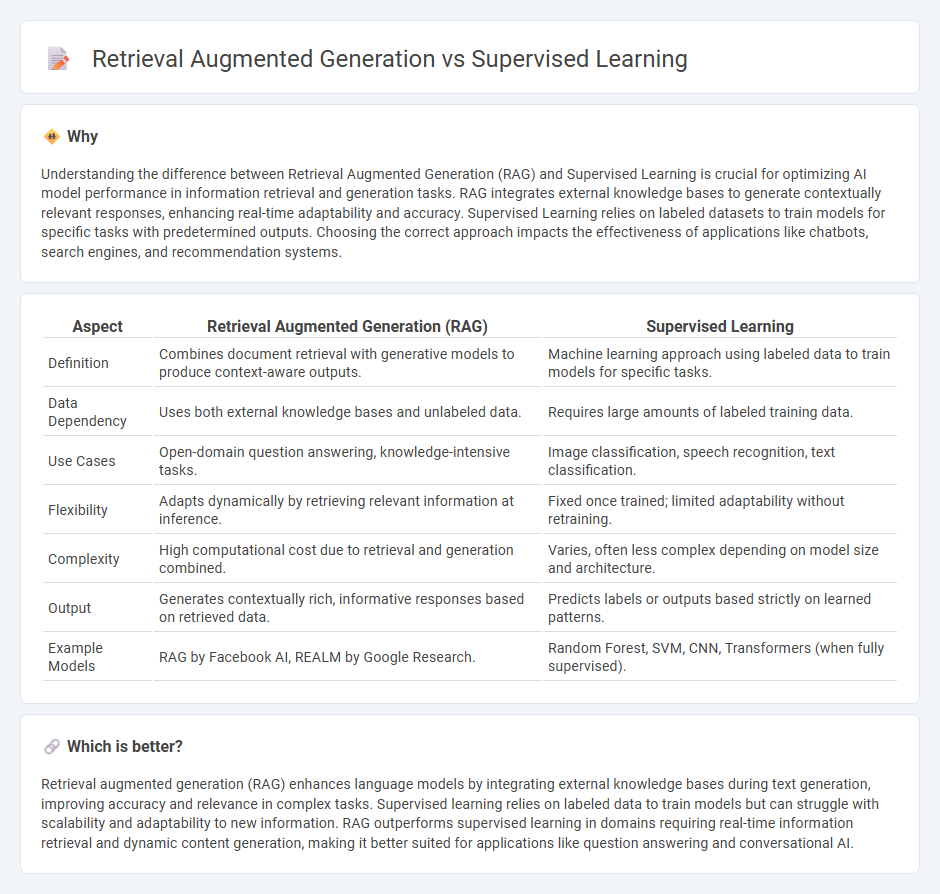

Understanding the difference between retrieval-augmented generation (RAG) and supervised learning helps in choosing the right approach for information retrieval and generation tasks. RAG retrieves passages from external knowledge bases at inference time and uses them to generate contextually relevant responses, so its answers can reflect information added after training. Supervised learning uses labeled datasets to train models for specific tasks with predetermined outputs. The choice between them affects how well applications such as chatbots, search engines, and recommendation systems perform.

Comparison Table

| Aspect | Retrieval Augmented Generation (RAG) | Supervised Learning |
|---|---|---|
| Definition | Combines document retrieval with generative models to produce context-aware outputs. | Machine learning approach using labeled data to train models for specific tasks. |
| Data Dependency | Uses both external knowledge bases and unlabeled data. | Requires large amounts of labeled training data. |
| Use Cases | Open-domain question answering, knowledge-intensive tasks. | Image classification, speech recognition, text classification. |
| Flexibility | Adapts dynamically by retrieving relevant information at inference. | Fixed once trained; limited adaptability without retraining. |
| Complexity | High computational cost due to retrieval and generation combined. | Varies, often less complex depending on model size and architecture. |
| Output | Generates contextually rich, informative responses based on retrieved data. | Predicts labels or outputs based strictly on learned patterns. |
| Example Models | RAG by Facebook AI, REALM by Google Research. | Random Forest, SVM, CNN, Transformers (when fully supervised). |

Which is better?

Neither approach is better in every case; the right choice depends on the task. Retrieval-augmented generation (RAG) integrates external knowledge bases during text generation, which improves accuracy and relevance in knowledge-intensive tasks. Supervised learning trains models on labeled data, and a trained model cannot take in new information without retraining, which limits its scalability and adaptability. RAG is therefore the better fit where answers depend on current or frequently changing information, as in question answering and conversational AI, while supervised learning remains well suited to fixed prediction tasks such as classification.

Connection

RAG and supervised learning are not mutually exclusive. The retriever and generator inside a RAG system are themselves trained models, and supervised training on labeled datasets is one way they learn to find relevant passages and to use retrieved information during generation. RAG then adds external knowledge retrieval on top of those trained components. This hybrid approach draws on the strengths of both methods to produce more context-aware and better-informed responses in natural language processing tasks.
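To make the link between labeled data and retrieval concrete, the toy sketch below tunes a keyword retriever from labeled triples of (query, relevant passage, irrelevant passage). It uses a perceptron-style pairwise update, chosen here only for illustration; it is not the training procedure of any particular RAG system, and the data, function names, and hyperparameters are all hypothetical.

```python
from collections import defaultdict


def shared_terms(query, doc):
    """Words that appear in both the query and the document."""
    return set(query.lower().split()) & set(doc.lower().split())


def score(weights, query, doc):
    """Relevance score: sum of the learned weights of the shared words."""
    return sum(weights[term] for term in shared_terms(query, doc))


def train(triples, epochs=5, lr=0.5):
    """Perceptron-style update: whenever the relevant passage does not
    outscore the irrelevant one, raise the weights of its matching words
    and lower the weights of the irrelevant passage's matching words."""
    weights = defaultdict(lambda: 1.0)  # every word starts with weight 1.0
    for _ in range(epochs):
        for query, relevant, irrelevant in triples:
            if score(weights, query, relevant) <= score(weights, query, irrelevant):
                for term in shared_terms(query, relevant):
                    weights[term] += lr
                for term in shared_terms(query, irrelevant):
                    weights[term] -= lr
    return weights


# Hypothetical labeled supervision: (query, relevant passage, irrelevant passage).
TRIPLES = [("apple fruit", "fruit nutrition facts", "apple phone store")]

weights = train(TRIPLES)
relevant_score = score(weights, "apple fruit", "fruit nutrition facts")
irrelevant_score = score(weights, "apple fruit", "apple phone store")
```

Before training, both passages tie because each shares one word with the query. After training, the labeled preference has shifted the weights so that the relevant passage ranks first.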

Key Terms

Labeled Data

Labeled data consists of inputs paired with their correct outputs. Supervised learning relies on large volumes of it to train models that map inputs to outputs in tasks such as classification and regression, and model quality depends heavily on how much labeled data is available and how accurate the labels are. Retrieval-augmented generation (RAG) combines pre-trained language models with external knowledge bases, which reduces the dependency on labeled data because relevant information is retrieved dynamically during inference. Integrating the two approaches can help in data-scarce environments, where retrieval supplies knowledge that the available labels do not cover.
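As a small illustration of learning an input-to-output mapping from labeled data, the sketch below trains a nearest-centroid text classifier using only the Python standard library. The four training sentences and their labels are made up for the example, and nearest-centroid is just one simple stand-in for supervised methods in general.

```python
from collections import Counter, defaultdict
import math


def tokenize(text):
    return text.lower().split()


def train(labeled_examples):
    """Build one bag-of-words centroid per label by summing word counts."""
    centroids = defaultdict(Counter)
    for text, label in labeled_examples:
        centroids[label].update(tokenize(text))
    return dict(centroids)


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def predict(centroids, text):
    """Return the label whose centroid is most similar to the input text."""
    vec = Counter(tokenize(text))
    return max(centroids, key=lambda label: cosine(vec, centroids[label]))


# Hypothetical labeled training data: (input text, output label).
TRAIN = [
    ("great product works well", "positive"),
    ("really love this great phone", "positive"),
    ("terrible quality broke quickly", "negative"),
    ("awful battery terrible screen", "negative"),
]

model = train(TRAIN)
prediction = predict(model, "great phone love it")
```

The model only knows words that appeared in its labeled examples. An input made entirely of unseen words scores zero against every centroid, which is the static-training-data limitation the article describes.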

Grounded Retrieval

Grounded retrieval means that generated output is tied to documents fetched at inference time rather than only to patterns learned during training. In retrieval-augmented generation (RAG), a search over an external knowledge base returns passages relevant to the query, and the model generates its response conditioned on those passages. Because the knowledge base can hold up-to-date or domain-specific information, the output is more likely to be contextually accurate and fact-based, and it can be checked against the retrieved evidence. A supervised model, by contrast, answers only from what its labeled training data taught it.
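The retrieval step can be sketched with a minimal keyword retriever. The example below ranks passages by cosine similarity over word counts and returns document ids with their scores, so a downstream generator could cite its evidence. Production systems typically use dense embeddings or an index such as BM25 instead; the document store, ids, and function names here are hypothetical.

```python
from collections import Counter
import math


def tokenize(text):
    """Lowercase, split on whitespace, and strip surrounding punctuation."""
    words = (w.strip(".,?!").lower() for w in text.split())
    return [w for w in words if w]


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, k=2):
    """Return the k (doc_id, score) pairs most similar to the query."""
    query_vec = Counter(tokenize(query))
    scored = [
        (doc_id, cosine(query_vec, Counter(tokenize(text))))
        for doc_id, text in documents.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical knowledge base keyed by document id.
DOCS = {
    "doc-1": "RAG retrieves documents at inference time.",
    "doc-2": "Supervised learning trains on labeled examples.",
    "doc-3": "Convolutional networks classify images.",
}

hits = retrieve("When does RAG retrieve documents?", DOCS, k=1)
top_id, top_score = hits[0]
```

Returning the id along with the score is what keeps the answer grounded: the generator can quote or link the passage it relied on.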

Contextual Augmentation

Contextual augmentation is the step in retrieval-augmented generation (RAG) where retrieved documents are added to the model's input, so the generator sees relevant context alongside the query. Because retrieval happens at inference time, that context changes whenever the knowledge source changes, whereas a supervised model is limited to the static dataset it was trained on. Combining the two approaches can improve accuracy and adaptability in knowledge-based applications.
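A rough sketch of the augmentation step follows: pick the best-matching passage from a store and prepend it to the question as context. The prompt layout, the word-overlap ranking, and the example passages are assumptions made for illustration, and the language model call that would consume the prompt is left out.

```python
def overlap(question, passage):
    """Number of distinct words shared by the question and the passage."""
    return len(set(question.lower().split()) & set(passage.lower().split()))


def build_prompt(question, store, k=1):
    """Prepend the k best-matching passages to the question as context."""
    ranked = sorted(store, key=lambda p: overlap(question, p), reverse=True)
    context = "\n".join(f"- {passage}" for passage in ranked[:k])
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"


# Hypothetical knowledge store that can change between requests.
store = ["the office opens at 9am"]
question = "what time does the office close"

before = build_prompt(question, store)

# New knowledge is added to the store; no model is retrained.
store.append("the office will close at 6pm")
after = build_prompt(question, store)
```

The same question yields a different prompt once the store is updated, which is how the context can stay current without touching any trained model.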
