Neural Radiance Fields (NeRF) use a neural network to represent a scene as a continuous volumetric field of density and view-dependent color, and render photorealistic novel views from a comparatively sparse set of posed input images. Light field capture, on the other hand, records the intensity and direction of light rays with camera arrays or microlens-based (plenoptic) cameras. Views can then be rendered interactively right after capture, but spatial resolution is often lower and the raw data volume is high. The sections below compare the two approaches and their applications in immersive imaging.

Why it is important

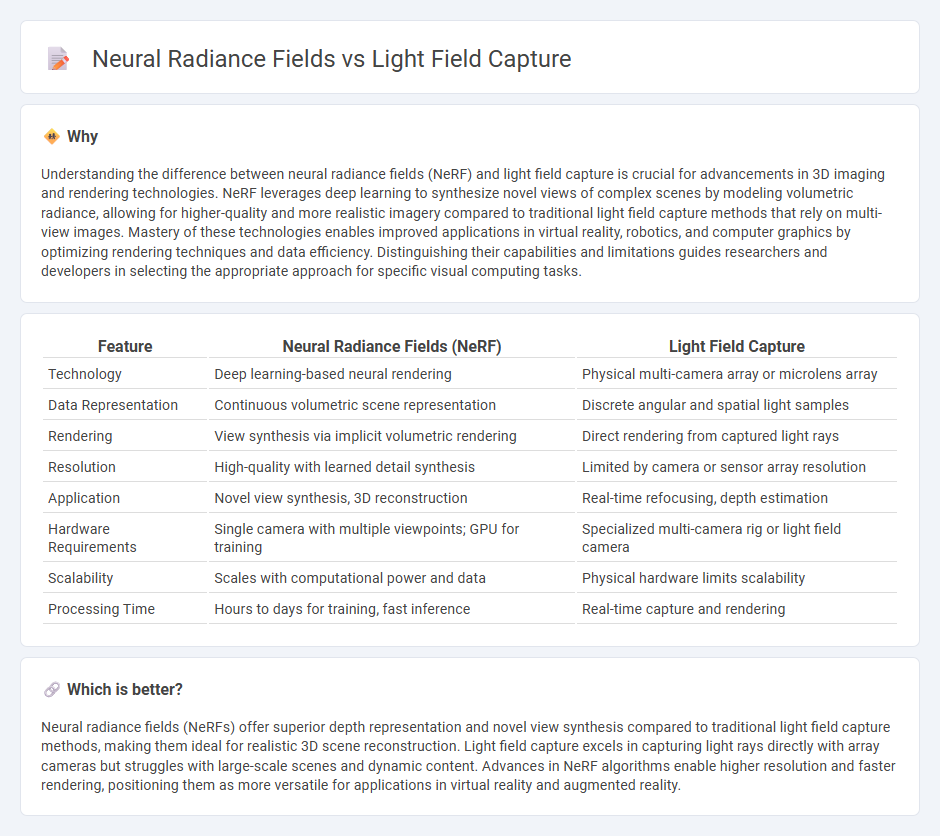

Understanding the difference between neural radiance fields (NeRF) and light field capture matters for anyone working on 3D imaging and rendering. NeRF synthesizes novel views of complex scenes by fitting a volumetric radiance model to multi-view images, which can give smoother and more realistic view interpolation than classic light field rendering achieves from the same number of views. Light field capture skips the fitting step and renders directly from the recorded rays, provided the rays were sampled densely enough. Knowing these capabilities and limitations helps researchers and developers in virtual reality, robotics, and computer graphics pick the approach that fits their quality, latency, and data budgets.

Comparison Table

| Feature | Neural Radiance Fields (NeRF) | Light Field Capture |
|---|---|---|
| Technology | Deep learning-based neural rendering | Physical multi-camera array or microlens array |
| Data Representation | Continuous volumetric scene representation | Discrete angular and spatial light samples |
| Rendering | View synthesis via implicit volumetric rendering | Direct rendering from captured light rays |
| Resolution | High-quality with learned detail synthesis | Limited by camera or sensor array resolution |
| Application | Novel view synthesis, 3D reconstruction | Real-time refocusing, depth estimation |
| Hardware Requirements | Single camera with multiple viewpoints; GPU for training | Specialized multi-camera rig or light field camera |
| Scalability | Scales with computational power and data | Physical hardware limits scalability |
| Processing Time | Hours to days of training in the original formulation; rendering speed depends on the variant | Real-time capture and rendering |
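
The "Data Representation" and "Scalability" rows can be made concrete with some back-of-the-envelope arithmetic. The sketch below compares the raw size of an uncompressed light field with the size of a small fully connected network. The 17 x 17 view grid, the 1024 x 1024 image size, and the layer widths are illustrative assumptions, not figures from a specific camera or NeRF implementation, and real light fields are usually stored compressed.

```python
def light_field_bytes(views_u, views_v, width, height,
                      channels=3, bytes_per_sample=1):
    """Raw size of an uncompressed light field stored as a grid of images."""
    return views_u * views_v * width * height * channels * bytes_per_sample


def mlp_bytes(layer_widths, bytes_per_weight=4):
    """Size of a fully connected network: weights plus biases for each layer."""
    params = 0
    for fan_in, fan_out in zip(layer_widths, layer_widths[1:]):
        params += fan_in * fan_out + fan_out
    return params * bytes_per_weight


# Hypothetical capture: 17 x 17 views, 1024 x 1024 pixels, 8-bit RGB.
lf = light_field_bytes(17, 17, 1024, 1024)

# Hypothetical network: 5 inputs (position + direction), eight hidden
# layers of 256 units, 4 outputs (RGB + density), 32-bit floats.
net = mlp_bytes([5] + [256] * 8 + [4])

print(f"light field: {lf / 2**20:.0f} MiB")
print(f"network:     {net / 2**20:.2f} MiB")
```

The gap in storage is what the table means by a compact continuous representation. The price is paid in compute: the network has to be trained before it can render anything.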

Which is better?

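Neither approach wins in every setting. Neural radiance fields (NeRFs) offer stronger depth representation and novel view synthesis than traditional light field capture, which makes them well suited to realistic 3D scene reconstruction. Light field capture records rays directly with array or plenoptic cameras and needs no training, but its viewing range is bounded by the physical extent of the rig, so large-scale scenes are difficult, and dynamic content multiplies an already large data volume by the number of frames. Later NeRF variants have raised resolution and rendering speed, which positions NeRF as the more versatile option for many virtual reality and augmented reality applications, while light field capture remains attractive when results are needed at capture time.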

Connection

Neural Radiance Fields (NeRF) and light field capture both describe a scene by the light travelling through it, and both are used to synthesize new views from 2D images. NeRF uses deep learning to optimize a continuous 5D function of spatial position and viewing direction that returns density and color. Integrating that function along a camera ray gives the radiance of the ray, which is the quantity a light field samples and stores directly. The two can also be combined, for example by fitting a neural scene representation to captured light field data, which pairs computational imaging with neural rendering for novel view synthesis.
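
Written out, the relationship looks roughly as follows. The notation is the one commonly used in the NeRF literature and is an addition here, not part of the original text: $F_\Theta$ is the network with weights $\Theta$, $\mathbf{r}(t) = \mathbf{o} + t\,\mathbf{d}$ is a camera ray, and $t_n$, $t_f$ are assumed near and far bounds.

$$
F_\Theta : (x, y, z, \theta, \phi) \mapsto (\mathbf{c}, \sigma)
$$

$$
C(\mathbf{r}) = \int_{t_n}^{t_f} T(t)\,\sigma(\mathbf{r}(t))\,\mathbf{c}(\mathbf{r}(t), \mathbf{d})\,dt,
\qquad
T(t) = \exp\!\left(-\int_{t_n}^{t} \sigma(\mathbf{r}(s))\,ds\right)
$$

Here $\sigma$ is volume density, $\mathbf{c}$ is emitted color, and $T(t)$ is the fraction of light that survives from $t_n$ to $t$. A light field stores values of $C(\mathbf{r})$ for a discrete set of rays, whereas NeRF stores $F_\Theta$ and computes $C(\mathbf{r})$ for any requested ray.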

Key Terms

Plenoptic Function

The plenoptic function describes the light arriving at every point in space from every direction. Light field capture samples it directly: in free space, where radiance stays constant along a ray, the function reduces to four dimensions, and a camera array or plenoptic camera records a discrete grid of those rays with both angular and spatial information, enough to reconstruct views from different perspectives. Neural Radiance Fields (NeRF) model the plenoptic function implicitly, as a continuous volumetric scene representation held in a deep neural network, and evaluate it on demand for high-fidelity novel view synthesis.
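
One common way to index the rays of a light field is the two-plane parameterization: each ray is identified by where it crosses two parallel planes. The sketch below assumes planes at z = 0 and z = 1 and uses a hypothetical helper name, `ray_to_two_plane`; neither comes from a particular library.

```python
def ray_to_two_plane(origin, direction, z_uv=0.0, z_st=1.0):
    """Map a 3D ray to 4D light field coordinates (u, v, s, t).

    (u, v) is where the ray crosses the plane z = z_uv,
    (s, t) is where it crosses the plane z = z_st.
    """
    ox, oy, oz = origin
    dx, dy, dz = direction
    if dz == 0.0:
        raise ValueError("ray is parallel to the two planes")
    a = (z_uv - oz) / dz  # ray parameter at the first plane
    b = (z_st - oz) / dz  # ray parameter at the second plane
    return (ox + a * dx, oy + a * dy, ox + b * dx, oy + b * dy)


# Two points on the same ray map to the same 4D coordinates, which is
# why four numbers are enough to index radiance in free space.
print(ray_to_two_plane((0.0, 0.0, -1.0), (1.0, 0.0, 1.0)))
print(ray_to_two_plane((0.5, 0.0, -0.5), (1.0, 0.0, 1.0)))
```

A captured light field is then a table of colors indexed by quantized (u, v, s, t), and rendering a new view amounts to looking up and interpolating the stored rays nearest to each requested ray.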

View Synthesis

Light field capture samples rays in many directions, recording intensity and direction, and synthesizes a new view by interpolating between the recorded rays. Accuracy therefore depends on dense angular sampling. Neural Radiance Fields (NeRF) instead model the scene volumetrically with deep learning: the network predicts color and density at continuous 3D coordinates, and a novel view is rendered by accumulating those predictions along each camera ray, which also captures view-dependent effects such as specular highlights. The trade-off is efficiency against quality: light field rendering is cheap per view but needs many samples, while NeRF needs fewer input views but far more computation.
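
The accumulation step on the NeRF side can be sketched in a few lines. This is a minimal version of the standard volume rendering quadrature, assuming the per-sample densities and colors have already been produced by some model; the function name `composite_ray` is made up for this example.

```python
import math


def composite_ray(sigmas, colors, deltas):
    """Accumulate per-sample density and color into one pixel color.

    sigmas: density at each sample along the ray
    colors: (r, g, b) at each sample
    deltas: distance between consecutive samples
    """
    transmittance = 1.0  # fraction of light not yet absorbed
    pixel = [0.0, 0.0, 0.0]
    weights = []
    for sigma, color, delta in zip(sigmas, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this segment
        weight = transmittance * alpha          # contribution to the pixel
        weights.append(weight)
        for k in range(3):
            pixel[k] += weight * color[k]
        transmittance *= 1.0 - alpha
    return pixel, weights


# Empty space, then a thin translucent red layer, then a dense blue one.
pixel, weights = composite_ray(
    sigmas=[0.0, 0.5, 50.0],
    colors=[(1.0, 1.0, 1.0), (1.0, 0.0, 0.0), (0.0, 0.0, 1.0)],
    deltas=[0.1, 0.1, 0.1],
)
print(pixel, weights)
```

The weights never sum to more than one, and samples behind an opaque region receive a weight close to zero, which is how occlusion emerges without an explicit surface.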

Implicit Scene Representation

Light field capture records the angular distribution of light rays and so provides explicit multi-view data for scene reconstruction. Neural radiance fields (NeRFs) instead encode the scene as an implicit function inside a neural network, which gives a continuous 3D representation with fine detail and view-dependent effects. The network is fitted by differentiable optimization: views are rendered with volumetric rendering, compared with the input photographs, and the error is propagated back to the network weights. This lets NeRF synthesize novel views from relatively sparse inputs and often handle complex geometry and lighting better than traditional light field interpolation.
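
To see what differentiable optimization means here, consider a deliberately tiny case: one ray segment with a single unknown density, fitted so that its rendered opacity matches an observed value. Everything in the sketch is an illustrative assumption (the function name, the learning rate, the hand-derived gradient). Real NeRF training optimizes millions of network weights with automatic differentiation, but the loop has the same shape.

```python
import math


def fit_density(target_alpha, delta=1.0, sigma=0.1, lr=1.0, steps=200):
    """Gradient descent on one density so rendered opacity matches a target.

    Rendered opacity: alpha(sigma) = 1 - exp(-sigma * delta)
    Loss:             (alpha(sigma) - target_alpha) ** 2
    """
    for _ in range(steps):
        alpha = 1.0 - math.exp(-sigma * delta)
        d_alpha = delta * math.exp(-sigma * delta)  # d alpha / d sigma
        grad = 2.0 * (alpha - target_alpha) * d_alpha
        sigma -= lr * grad
    return sigma


# A segment of length 1 that should block half of the light.
sigma = fit_density(target_alpha=0.5)
print(sigma, math.log(2.0))  # the exact solution is ln 2
```

Because rendering is a smooth function of density, the error in the rendered value says in which direction to adjust the scene. A captured light field has no such step: its samples are measurements, not parameters to be fitted.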


dowidth.com
