Liquid neural networks process inputs in continuous time, so their internal dynamics keep adapting as the input changes, which suits real-time learning and adaptation. Spiking neural networks emulate biological neural signaling with discrete spikes, which improves energy efficiency and temporal precision. This comparison covers the advantages and applications of both architectures and their likely impact on AI technology.

Why it is important

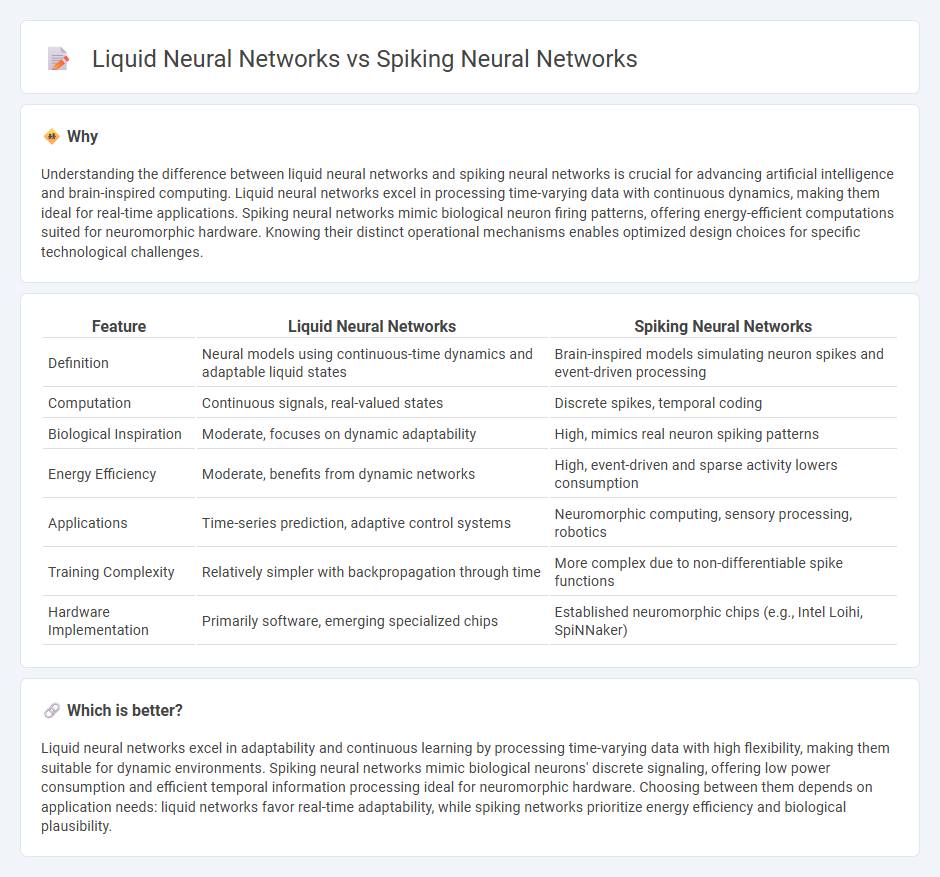

Understanding the difference between liquid neural networks and spiking neural networks is crucial for advancing artificial intelligence and brain-inspired computing. Liquid neural networks excel in processing time-varying data with continuous dynamics, making them ideal for real-time applications. Spiking neural networks mimic biological neuron firing patterns, offering energy-efficient computations suited for neuromorphic hardware. Knowing their distinct operational mechanisms enables optimized design choices for specific technological challenges.

Comparison Table

| Feature | Liquid Neural Networks | Spiking Neural Networks |
|---|---|---|
| Definition | Neural models using continuous-time dynamics and adaptable liquid states | Brain-inspired models simulating neuron spikes and event-driven processing |
| Computation | Continuous signals, real-valued states | Discrete spikes, temporal coding |
| Biological Inspiration | Moderate, focuses on dynamic adaptability | High, mimics real neuron spiking patterns |
| Energy Efficiency | Moderate, benefits from dynamic networks | High, event-driven and sparse activity lowers consumption |
| Applications | Time-series prediction, adaptive control systems | Neuromorphic computing, sensory processing, robotics |
| Training Complexity | Relatively simpler with backpropagation through time | More complex due to non-differentiable spike functions |
| Hardware Implementation | Primarily software, emerging specialized chips | Established neuromorphic chips (e.g., Intel Loihi, SpiNNaker) |
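The "Computation" row can be made concrete with a small sketch. The code below is illustrative only: `ltc_unit` is a hypothetical single-unit simplification of a liquid time-constant style update (the gate `f` here depends only on the input), and `lif_neuron` is a textbook leaky integrate-and-fire model. All parameter values are assumptions chosen for the demonstration.

```python
import math

def ltc_unit(inputs, dt=0.01, tau=0.5, a=1.0, w=1.0, b=0.0):
    """Euler-integrate dx/dt = -(1/tau + f) * x + f * a, with f = sigmoid(w*u + b).

    Returns a real-valued state for every time step.
    """
    x = 0.0
    trace = []
    for u in inputs:
        f = 1.0 / (1.0 + math.exp(-(w * u + b)))  # input-dependent gate
        x += dt * (-(1.0 / tau + f) * x + f * a)
        trace.append(x)
    return trace

def lif_neuron(inputs, dt=0.01, tau=0.1, v_th=1.0, v_reset=0.0):
    """Leaky integrate-and-fire: integrate the input, emit 1 on threshold crossing."""
    v = 0.0
    spikes = []
    for u in inputs:
        v += dt * (-v + u) / tau
        if v >= v_th:
            spikes.append(1)
            v = v_reset  # reset the membrane potential after a spike
        else:
            spikes.append(0)
    return spikes

inputs = [2.0] * 100          # constant drive, hypothetical units
states = ltc_unit(inputs)     # smooth, real-valued trajectory
spikes = lif_neuron(inputs)   # sparse train of 0/1 events
```

Given the same constant input, the first function yields a smooth trajectory of real numbers, while the second yields mostly zeros with occasional ones. That difference is what the table summarizes as continuous signals versus discrete spikes.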

Which is better?

Liquid neural networks excel in adaptability and continuous learning by processing time-varying data with high flexibility, making them suitable for dynamic environments. Spiking neural networks mimic biological neurons' discrete signaling, offering low power consumption and efficient temporal information processing ideal for neuromorphic hardware. Choosing between them depends on application needs: liquid networks favor real-time adaptability, while spiking networks prioritize energy efficiency and biological plausibility.
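One way to picture the adaptability of liquid networks is through an input-dependent time constant. The sketch below assumes a liquid time-constant style unit of the form dx/dt = -(1/tau + f) x + f A, whose effective time constant is tau / (1 + tau * f). The function name, the sigmoid gate, and the parameter values are illustrative assumptions, and the gate here depends only on the input rather than on the full state.

```python
import math

def effective_time_constant(u, tau=1.0, w=4.0, b=0.0):
    """Effective time constant tau / (1 + tau * f) for a gate f = sigmoid(w*u + b)."""
    f = 1.0 / (1.0 + math.exp(-(w * u + b)))
    return tau / (1.0 + tau * f)

# Stronger input opens the gate and shortens the time constant,
# so the unit reacts faster when the input is large.
for u in (-2.0, 0.0, 2.0):
    print(f"input={u:+.1f}  tau_eff={effective_time_constant(u):.3f}")
```

Because the gate stays between 0 and 1, the effective time constant stays between tau / (1 + tau) and tau, so the unit speeds up or slows down with the input without its dynamics becoming unbounded.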

Connection

Liquid neural networks and spiking neural networks share an inspiration from biological neural systems, and both aim to improve adaptability and efficiency in artificial intelligence. Both rely on dynamic, time-dependent processing: liquid neural networks focus on continuous-time dynamics, while spiking neural networks emphasize discrete, event-driven spikes. Combining the two approaches targets real-time learning with energy-efficient processing in robotics and on neuromorphic hardware.

Key Terms

Temporal Coding

Spiking Neural Networks (SNNs) use discrete spikes for temporal coding: information is carried by when a neuron fires, not only by how often it fires, which mirrors biological neuron behavior and allows time-dependent information to be processed efficiently. Liquid Neural Networks use continuous-time dynamics within recurrent structures to capture temporal patterns, which makes them robust when handling sequential data.
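As an illustration of temporal coding, the sketch below uses latency (time-to-first-spike) encoding, one common scheme in which stronger inputs fire earlier. The function names, the linear mapping, and the 100-step window are assumptions made for the example, not a standard API.

```python
def latency_encode(intensities, t_max=100):
    """Map each intensity in [0, 1] to a spike time step; stronger input fires earlier."""
    times = []
    for x in intensities:
        x = min(max(x, 0.0), 1.0)  # clamp to the valid range
        times.append(round((1.0 - x) * (t_max - 1)))
    return times

def latency_decode(times, t_max=100):
    """Recover an approximate intensity from the spike time alone."""
    return [1.0 - t / (t_max - 1) for t in times]

intensities = [0.2, 0.6, 1.0]
spike_times = latency_encode(intensities)
recovered = latency_decode(spike_times)
```

Each input is represented by a single spike, and the value is read from its timing. The reconstruction is accurate up to the time-step resolution, which is why finer time steps give higher temporal precision.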

Dynamic Reservoir

A dynamic reservoir is a recurrent network whose internal state retains a fading trace of recent inputs, so that a simple readout can decode temporal patterns from it. Spiking Neural Networks (SNNs) build the reservoir from spiking neurons that emulate biological activity, and they process temporal information efficiently by exploiting precise spike timing. Liquid Neural Networks create the reservoir from continuous-time dynamics with adaptive, liquid-like internal states, and they use continuous state changes to model complex time-varying functions robustly.
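The reservoir idea can be sketched with a small rate-based example. This is not a spiking or liquid time-constant model: it is a generic leaky-tanh reservoir with fixed random weights and a linear readout fitted by ridge regression, written in plain Python. The sizes, weight ranges, leak rate, and the recall-the-previous-input task are all assumptions chosen to keep the example short.

```python
import math
import random

def solve_linear(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x

def run_reservoir(inputs, w_res, w_in, leak=0.3):
    """Drive a fixed leaky-tanh reservoir and return its state at every step."""
    n = len(w_in)
    state = [0.0] * n
    states = []
    for u in inputs:
        pre = [sum(w_res[i][j] * state[j] for j in range(n)) + w_in[i] * u
               for i in range(n)]
        state = [(1 - leak) * state[i] + leak * math.tanh(pre[i]) for i in range(n)]
        states.append(state)
    return states

def train_readout(states, targets, ridge=1e-3):
    """Fit only the linear readout; the reservoir weights are never changed."""
    n = len(states[0])
    gram = [[sum(s[i] * s[j] for s in states) + (ridge if i == j else 0.0)
             for j in range(n)] for i in range(n)]
    rhs = [sum(s[i] * y for s, y in zip(states, targets)) for i in range(n)]
    return solve_linear(gram, rhs)

def mse(states, targets, w_out):
    preds = [sum(w * x for w, x in zip(w_out, s)) for s in states]
    return sum((p - y) ** 2 for p, y in zip(preds, targets)) / len(targets)

rng = random.Random(0)
N = 10
w_res = [[rng.uniform(-0.3, 0.3) for _ in range(N)] for _ in range(N)]
w_in = [rng.uniform(-1.0, 1.0) for _ in range(N)]
inputs = [rng.uniform(-1.0, 1.0) for _ in range(300)]
targets = [0.0] + inputs[:-1]  # task: recall the previous input

states = run_reservoir(inputs, w_res, w_in)
w_out = train_readout(states, targets)
baseline_mse = mse(states, targets, [0.0] * N)  # error of an untrained (zero) readout
trained_mse = mse(states, targets, w_out)
```

The design point is that memory of past inputs lives in the reservoir state, so only the readout weights need training. A spiking reservoir would replace the tanh units with spiking neurons and read out from filtered spike trains instead.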

Event-Driven Processing

Spiking Neural Networks (SNNs) process information as discrete spike events, as biological neurons do. Computation is triggered only when a spike arrives, so sparse activity translates into low power consumption. Liquid Neural Networks (LNNs) instead rely on continuous-time dynamics and adaptable internal states, which enable robust temporal pattern recognition in streaming data.
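The efficiency argument for event-driven processing can be illustrated by counting synaptic operations. In the sketch below, the layer sizes, the five active inputs, and the function names are hypothetical. The dense update touches every weight, while the event-driven update touches only the rows of neurons that spiked.

```python
import random

def dense_update(weights, activations):
    """Frame-based layer: every input contributes a multiply-accumulate."""
    n_out = len(weights[0])
    out = [0.0] * n_out
    ops = 0
    for i, a in enumerate(activations):
        for j in range(n_out):
            out[j] += weights[i][j] * a
            ops += 1
    return out, ops

def event_driven_update(weights, spike_indices):
    """Event-driven layer: only inputs that spiked propagate, each as a plain add."""
    n_out = len(weights[0])
    out = [0.0] * n_out
    ops = 0
    for i in spike_indices:
        for j in range(n_out):
            out[j] += weights[i][j]
            ops += 1
    return out, ops

rng = random.Random(0)
n_in, n_out = 100, 10
weights = [[rng.uniform(-1, 1) for _ in range(n_out)] for _ in range(n_in)]
spike_indices = sorted(rng.sample(range(n_in), 5))  # 5% of inputs are active
activations = [1.0 if i in spike_indices else 0.0 for i in range(n_in)]

dense_out, dense_ops = dense_update(weights, activations)
event_out, event_ops = event_driven_update(weights, spike_indices)
print(dense_ops, event_ops)  # 1000 50
```

Both updates produce the same output for binary activity, but the event-driven version performs work proportional to the number of spikes. Real energy savings depend on the hardware, so the operation count is only a rough proxy.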

Source and External Links

Deep Learning in Spiking Neural Networks - arXiv - Spiking neural networks (SNNs) are biologically realistic artificial networks that model neurons firing discrete spikes, used in various pattern recognition tasks with learning methods such as STDP and stochastic gradient descent.

SpikeGPT: researcher releases code for largest-ever spiking neural ... - SpikeGPT demonstrates a large language model implemented with spiking neural networks, which are more efficient by transmitting neural signals only when neurons spike, introducing a temporal dimension; training methods adapt deep learning optimization techniques to this framework.

Spiking neural network - Wikipedia - SNNs mimic biological neurons more closely by using spike timing and dynamics like Spike Frequency Adaptation, offering computational efficiency and accuracy comparable to traditional ANNs while reducing power consumption and processing needs.

dowidth.com
