Generative Adversarial Networks (GANs) use a dual-network system: a generator creates data while a discriminator evaluates whether that data is real or generated, which enables high-quality synthetic data generation. Autoencoders compress input data into a latent space and reconstruct it, focusing on efficient data representation and noise reduction. The sections below compare how the two approaches are used in machine learning applications.

Why it is important

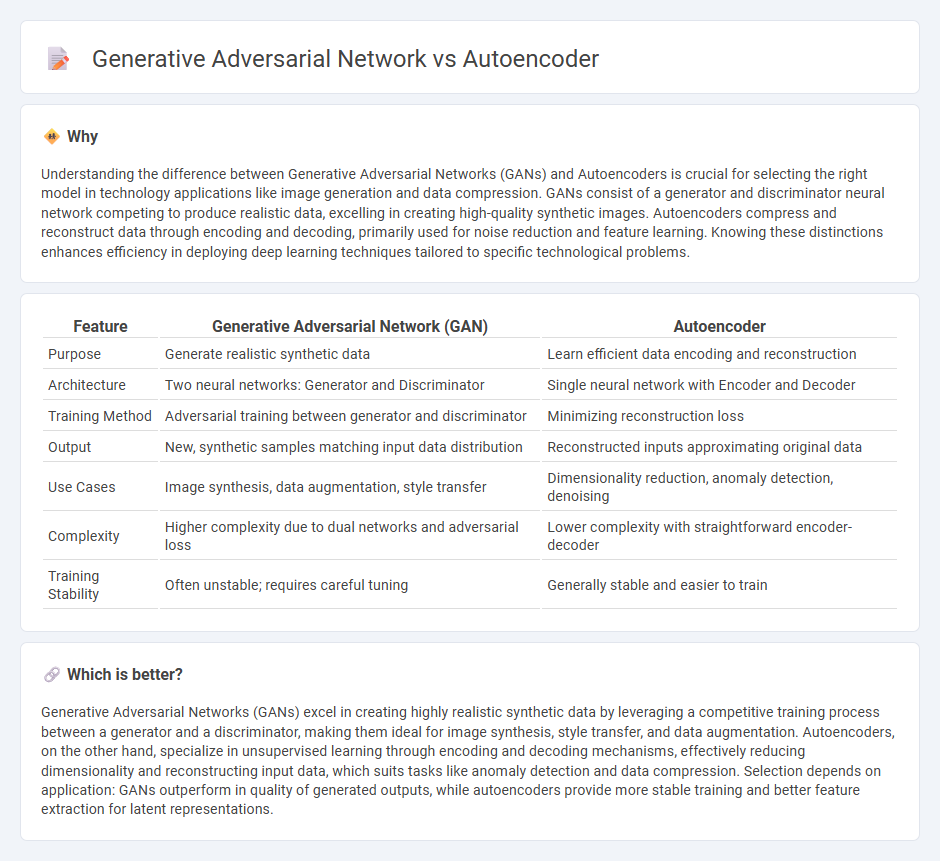

Understanding the difference between Generative Adversarial Networks (GANs) and autoencoders is crucial for selecting the right model for applications such as image generation and data compression. A GAN consists of a generator network and a discriminator network that compete during training, and it excels at producing realistic synthetic images. An autoencoder compresses data with an encoder and reconstructs it with a decoder, and it is used primarily for noise reduction and feature learning. Knowing these distinctions helps you match the technique to the problem: generating new samples on one side, compressing, denoising, or representing existing data on the other.

Comparison Table

| Feature | Generative Adversarial Network (GAN) | Autoencoder |
|---|---|---|
| Purpose | Generate realistic synthetic data | Learn efficient data encoding and reconstruction |
| Architecture | Two neural networks: a generator and a discriminator | Single neural network with an encoder and a decoder |
| Training Method | Adversarial training between generator and discriminator | Minimizing reconstruction loss |
| Output | New synthetic samples matching the input data distribution | Reconstructed inputs approximating the original data |
| Use Cases | Image synthesis, data augmentation, style transfer | Dimensionality reduction, anomaly detection, denoising |
| Complexity | Higher, due to dual networks and an adversarial loss | Lower, with a straightforward encoder-decoder |
| Training Stability | Often unstable; requires careful tuning | Generally stable and easier to train |

Which is better?

GANs excel at creating highly realistic synthetic data through a competitive training process between a generator and a discriminator, which makes them a strong fit for image synthesis, style transfer, and data augmentation. Autoencoders instead learn to encode and decode their input, which reduces dimensionality and supports tasks such as anomaly detection and data compression. Neither is better in general; the choice depends on the application. GANs typically produce sharper, more realistic samples, while autoencoders train more stably and provide an explicit encoder that maps inputs to latent representations, which a standard GAN lacks.
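Autoencoder-based anomaly detection commonly flags inputs whose reconstruction error is unusually high, because the model only learns to reconstruct data that resembles its training set. The sketch below illustrates the idea under stated assumptions: the hypothetical `encode`/`decode` functions stand in for a trained linear autoencoder that projects 2-D points onto the line y = x (a 1-D latent space), and the threshold of 0.5 is an arbitrary choice for illustration.

```python
import math

# Hypothetical stand-in for a trained linear autoencoder: the encoder maps a
# 2-D point to one latent value along the unit direction (1, 1)/sqrt(2), and
# the decoder maps that value back to 2-D. Points near y = x reconstruct well.
DIRECTION = (1 / math.sqrt(2), 1 / math.sqrt(2))


def encode(x):
    """Compress a 2-D point into a single latent value (projection onto DIRECTION)."""
    return x[0] * DIRECTION[0] + x[1] * DIRECTION[1]


def decode(z):
    """Reconstruct a 2-D point from its latent value."""
    return (z * DIRECTION[0], z * DIRECTION[1])


def reconstruction_error(x):
    """Squared Euclidean distance between the input and its reconstruction."""
    x_hat = decode(encode(x))
    return (x[0] - x_hat[0]) ** 2 + (x[1] - x_hat[1]) ** 2


def flag_anomalies(points, threshold):
    """Return the points whose reconstruction error exceeds the threshold."""
    return [p for p in points if reconstruction_error(p) > threshold]


points = [(1.0, 1.1), (2.0, 1.9), (-0.5, -0.4), (3.0, -3.0)]
print(flag_anomalies(points, threshold=0.5))  # → [(3.0, -3.0)]
```

The three points near y = x reconstruct with an error of about 0.005, while (3.0, -3.0) lies off the learned direction, encodes to 0, and reconstructs with an error of 18, so it is the only one flagged. In practice the threshold would be chosen from the error distribution on normal validation data.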

Connection

GANs and autoencoders are connected through a shared goal: unsupervised learning for data representation and generation. Autoencoders compress input data into a latent space and then reconstruct it, while GANs pit a generator against a discriminator to produce realistic synthetic data. The two ideas are often combined. Hybrid models such as adversarial autoencoders and VAE-GANs use a discriminator to shape an autoencoder's latent space or outputs, and the latent representations learned by an autoencoder can be used to improve GAN training, enhancing the quality and stability of generated outputs in applications such as image synthesis and anomaly detection.

Key Terms

Encoder-Decoder (Autoencoder)

Autoencoders use an encoder-decoder architecture: the encoder compresses input data into a lower-dimensional latent representation, and the decoder reconstructs the original input from it, which enables efficient data encoding and noise reduction. GANs, by contrast, generate new data samples by pitting a generator against a discriminator; they focus on realistic data synthesis and, in their standard form, have no encoder that maps inputs to latent codes. The encoder-decoder framework therefore suits compression and denoising, while the adversarial mechanism suits generating new samples.
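To make the encoder-decoder idea concrete, here is a minimal sketch of a linear autoencoder trained with plain gradient descent on mean squared reconstruction error. It assumes toy 2-D data lying on the line x2 = 2 * x1, so a 1-D latent code is enough; the initial weights, learning rate, and step count are illustrative choices, and real autoencoders add nonlinear layers and biases and are trained with a deep learning framework.

```python
# Minimal linear autoencoder: 2-D input -> 1-D latent code -> 2-D reconstruction.
# Assumptions: toy data on the line x2 = 2 * x1, no biases, gradient descent on MSE.


def make_data():
    """Toy 2-D points on the line x2 = 2 * x1, so one latent value suffices."""
    return [(t, 2 * t) for t in (-1.0, -0.5, 0.0, 0.5, 1.0)]


def loss(data, enc, dec):
    """Mean squared reconstruction error over the dataset."""
    total = 0.0
    for x1, x2 in data:
        z = enc[0] * x1 + enc[1] * x2               # encoder: compress to one number
        r1, r2 = dec[0] * z - x1, dec[1] * z - x2   # decoder residuals
        total += r1 * r1 + r2 * r2
    return total / len(data)


def train(data, steps=500, lr=0.05):
    enc = [0.1, 0.1]  # encoder weights (illustrative initial values)
    dec = [0.1, 0.1]  # decoder weights (illustrative initial values)
    n = len(data)
    for _ in range(steps):
        g_enc = [0.0, 0.0]
        g_dec = [0.0, 0.0]
        for x1, x2 in data:
            z = enc[0] * x1 + enc[1] * x2
            r1, r2 = dec[0] * z - x1, dec[1] * z - x2
            # Chain rule: gradients w.r.t. the decoder weights and the latent z
            g_dec[0] += 2 * r1 * z / n
            g_dec[1] += 2 * r2 * z / n
            dz = 2 * (r1 * dec[0] + r2 * dec[1])
            g_enc[0] += dz * x1 / n
            g_enc[1] += dz * x2 / n
        # Update all weights using gradients computed from the old weights
        for i in range(2):
            enc[i] -= lr * g_enc[i]
            dec[i] -= lr * g_dec[i]
    return enc, dec


data = make_data()
enc, dec = train(data)
print(loss(data, [0.1, 0.1], [0.1, 0.1]), loss(data, enc, dec))
```

The reconstruction loss starts at about 2.41 and falls close to zero, because the encoder learns to project onto the direction of the data and the decoder learns to map the single latent value back to 2-D.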

Generator-Discriminator (GAN)

In a GAN, the generator creates synthetic data samples and the discriminator tries to distinguish real data from generated data; the discriminator's feedback drives the generator to improve through adversarial training. By learning the data distribution this way, GANs can produce high-quality outputs, although training can suffer from mode collapse, where the generator covers only part of the data's variety. Autoencoders, by comparison, compress input data into a latent space and reconstruct it, optimizing for fidelity to the input, so they tend to focus on accurate reconstruction and denoising. The interplay between the generator and the discriminator is what made GANs one of the most influential approaches to synthetic data generation.
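The adversarial interplay is usually expressed as two binary cross-entropy losses computed from the discriminator's output probabilities. The sketch below writes them out for a batch, assuming the discriminator outputs are already probabilities in (0, 1). The generator loss shown is the common "non-saturating" variant, -log D(G(z)), which the original GAN paper suggests in place of minimizing log(1 - D(G(z))) because it gives stronger gradients early in training.

```python
import math

# GAN losses for one batch of discriminator outputs (probabilities in (0, 1)).
# d_real: D(x) on real samples; d_fake: D(G(z)) on generated samples.


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: push D(x) toward 1 and D(G(z)) toward 0."""
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1 - p) for p in d_fake) / len(d_fake)
    return -(real_term + fake_term)


def generator_loss(d_fake):
    """Non-saturating generator loss: push D(G(z)) toward 1."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)


# A discriminator that confidently separates real from fake samples:
print(round(discriminator_loss([0.9, 0.95], [0.05, 0.1]), 4))  # 0.1567
print(round(generator_loss([0.05, 0.1]), 4))                   # 2.6492

# A discriminator that cannot tell them apart (outputs 0.5 everywhere):
print(round(discriminator_loss([0.5, 0.5], [0.5, 0.5]), 4))    # 1.3863
print(round(generator_loss([0.5, 0.5]), 4))                    # 0.6931
```

When the discriminator wins, its loss is low and the generator's loss is high, so the generator receives a strong signal to improve. At the theoretical equilibrium the discriminator outputs 0.5 for every sample and its loss settles at 2 ln 2.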

Unsupervised Learning

Autoencoders are well suited to unsupervised learning: they encode input data into a compressed latent space and are trained to reconstruct it with minimal loss, which yields efficient dimensionality reduction and feature extraction. GANs use a dual-network system, a generator and a discriminator playing a minimax game, to produce highly realistic synthetic data and to learn representations without labeled data. Both architectures learn from unlabeled data, but they optimize different objectives, serve different applications, and pose different training challenges.
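The two training objectives can be written side by side. This is the standard formulation; the notation is introduced here for illustration, with $f_\phi$ as the encoder, $g_\theta$ as the decoder, $p_z$ as the generator's noise distribution, and squared error assumed as the autoencoder's reconstruction loss (other losses, such as cross-entropy, are also common).

$$
\min_{\phi,\,\theta}\; \mathbb{E}_{x \sim p_{\text{data}}}\Big[\, \big\lVert x - g_\theta\big(f_\phi(x)\big) \big\rVert_2^2 \,\Big]
$$

$$
\min_{G}\,\max_{D}\; \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

Neither objective uses labels: the autoencoder's target is its own input, and the only "labels" in the GAN objective are whether a sample came from the data or from the generator.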

dowidth.com