Generative audio uses artificial intelligence to create realistic, dynamic soundscapes for gaming, virtual reality, and multimedia content. Sound localization identifies the spatial origin of sounds, which enables the precise audio positioning that applications such as augmented reality and hearing aids depend on. This article compares the two technologies and shows how they work together.

Why it is important

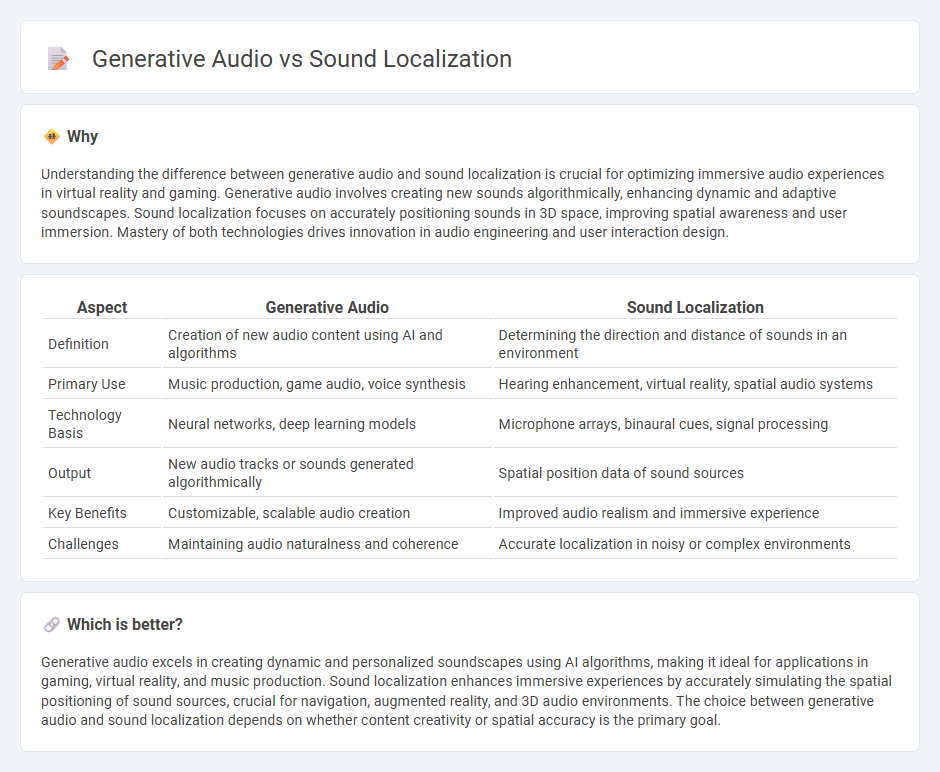

Understanding the difference between generative audio and sound localization helps when designing immersive audio for virtual reality and gaming. Generative audio creates new sounds algorithmically, which supports dynamic and adaptive soundscapes. Sound localization determines where sounds sit in 3D space, which improves spatial awareness and user immersion. Using both well opens up new options in audio engineering and user interaction design.

Comparison Table

| Aspect | Generative Audio | Sound Localization |
|---|---|---|
| Definition | Creation of new audio content using AI and algorithms | Determining the direction and distance of sounds in an environment |
| Primary Use | Music production, game audio, voice synthesis | Hearing enhancement, virtual reality, spatial audio systems |
| Technology Basis | Neural networks, deep learning models | Microphone arrays, binaural cues, signal processing |
| Output | New audio tracks or sounds generated algorithmically | Spatial position data of sound sources |
| Key Benefits | Customizable, scalable audio creation | Improved audio realism and immersive experience |
| Challenges | Maintaining audio naturalness and coherence | Accurate localization in noisy or complex environments |

Which is better?

Neither is better in general, because the two solve different problems. Generative audio excels at creating dynamic, personalized soundscapes with AI algorithms, which suits gaming, virtual reality, and music production. Sound localization supplies accurate spatial positions for sound sources, which matters for navigation, augmented reality, and 3D audio environments. The choice between them depends on whether content creativity or spatial accuracy is the primary goal.

Connection

Generative audio leverages advanced algorithms to create dynamic soundscapes, while sound localization techniques enable precise spatial positioning of these sounds in a 3D environment. Combining these technologies enhances immersive experiences in virtual reality, gaming, and augmented reality by accurately simulating how audio sources move and interact within a space. This integration improves user engagement through more realistic auditory cues and spatial awareness.
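The combination described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a production pipeline: a plain sine generator stands in for a real generative model, and the spatial step uses constant-power panning plus a small far-ear delay instead of measured head-related transfer functions. The function names `synth_tone` and `spatialize`, the 8 kHz sample rate, and the 0.66 ms maximum delay are all hypothetical choices made for this example.

```python
import math

def synth_tone(freq_hz, duration_s, sample_rate=8000):
    """Algorithmically generate a mono sine tone (a stand-in for a generative model)."""
    n = int(duration_s * sample_rate)
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]

def spatialize(mono, azimuth_deg, sample_rate=8000, max_itd_s=0.00066):
    """Place a mono signal at an azimuth (-90 = left, 0 = front, 90 = right).

    Returns (left, right) sample lists of equal length. The level difference
    comes from constant-power panning, and the time difference is a whole-sample
    delay on the far ear, scaled by sin(azimuth). Both are simplifying assumptions.
    """
    az = max(-90.0, min(90.0, azimuth_deg))
    pan = (az + 90.0) / 180.0 * (math.pi / 2)      # map azimuth to [0, pi/2]
    gain_l, gain_r = math.cos(pan), math.sin(pan)  # gain_l^2 + gain_r^2 == 1
    delay = round(abs(math.sin(math.radians(az))) * max_itd_s * sample_rate)
    pad = [0.0] * delay
    scaled_l = [gain_l * s for s in mono]
    scaled_r = [gain_r * s for s in mono]
    if az >= 0:
        # Source on the right: the left ear hears it later.
        return pad + scaled_l, scaled_r + pad
    # Source on the left: the right ear hears it later.
    return scaled_l + pad, pad + scaled_r

tone = synth_tone(440.0, 0.01)
left, right = spatialize(tone, 45.0)
```

In a real system the generator would be a trained model and the spatial step would typically use measured or modeled head-related filters, but the division of labor is the same: one stage produces the sound and the other decides where it appears to come from.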

Key Terms

Spatial Audio

Sound localization enhances spatial audio by modeling how humans perceive the direction and distance of sounds, using binaural cues such as interaural time difference (ITD) and interaural level difference (ILD). Generative audio uses AI-driven synthesis to create dynamic, immersive soundscapes that adapt in real time to listener movement and environment. Together, these technologies underpin spatial audio applications in virtual reality and gaming.
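To make the ITD cue concrete, the sketch below estimates it with Woodworth's spherical-head approximation, ITD = (a / c)(θ + sin θ), where a is the head radius, c the speed of sound, and θ the source azimuth. The head radius of 8.75 cm and the speed of sound of 343 m/s are assumed typical values, and the function name `woodworth_itd` is chosen for this example. ILD is not modeled here because it depends strongly on frequency.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed value for room-temperature air
HEAD_RADIUS = 0.0875    # m, assumed average adult head radius

def woodworth_itd(azimuth_deg):
    """Approximate ITD in seconds for a far-field source.

    azimuth_deg: 0 is straight ahead, 90 is directly to one side.
    Negative azimuths return a negative ITD (source on the other side).
    """
    if not -90.0 <= azimuth_deg <= 90.0:
        raise ValueError("azimuth_deg must be within [-90, 90]")
    theta = math.radians(azimuth_deg)
    return (HEAD_RADIUS / SPEED_OF_SOUND) * (theta + math.sin(theta))

for az in (0, 30, 60, 90):
    print(f"{az:>2} deg -> {woodworth_itd(az) * 1e6:.0f} microseconds")
```

With these assumed constants, the ITD grows from zero for a source straight ahead to about 0.66 ms for a source directly to one side.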

Source Separation

Sound localization identifies the origin of audio signals in a spatial environment, using techniques such as beamforming and cues such as interaural time difference (ITD) and interaural level difference (ILD), and this spatial information can improve source separation accuracy. Generative audio approaches use deep learning models, such as variational autoencoders, to synthesize or isolate individual sound sources from complex auditory scenes, improving clarity and separation quality. Sound source separation has applications in immersive audio, hearing aids, and augmented reality systems.
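One building block behind microphone-array localization and beamforming is estimating the arrival-time difference between two microphones and converting it to a direction. The sketch below does this with a brute-force cross-correlation and the far-field relation sin θ = c·τ / d. It is an illustration under simplifying assumptions (two microphones, a single source, no reverberation), and the function names, the 343 m/s speed of sound, and the example spacing are hypothetical choices.

```python
import math

def estimate_delay(ref, delayed, max_lag):
    """Return the lag in samples, within [-max_lag, max_lag], that maximizes
    the cross-correlation. A positive lag means `delayed` arrives after `ref`."""
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i, value in enumerate(ref):
            j = i + lag
            if 0 <= j < len(delayed):
                score += value * delayed[j]
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag

def lag_to_angle(lag_samples, sample_rate, mic_spacing_m, speed_of_sound=343.0):
    """Convert a lag to an arrival angle in degrees (0 = broadside to the array)."""
    sin_theta = speed_of_sound * lag_samples / (sample_rate * mic_spacing_m)
    sin_theta = max(-1.0, min(1.0, sin_theta))  # guard against rounding past +/-1
    return math.degrees(math.asin(sin_theta))

# A short pulse reaching the second microphone three samples later.
mic_a = [0.0] * 40
for k, v in enumerate([1.0, 2.0, 3.0, 2.0, 1.0]):
    mic_a[10 + k] = v
mic_b = [0.0] * 3 + mic_a[:-3]

lag = estimate_delay(mic_a, mic_b, max_lag=8)
angle = lag_to_angle(lag, sample_rate=48000, mic_spacing_m=0.2)
```

A delay-and-sum beamformer would then shift each channel by its estimated lag and sum them, which reinforces the source from that direction relative to sounds arriving from elsewhere.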

Neural Audio Synthesis

Neural audio synthesis uses deep learning models to create realistic sounds by capturing complex acoustic patterns. It addresses a different problem from sound localization, which identifies where sources are in space rather than producing audio. Sound localization algorithms focus on spatial accuracy using binaural cues such as interaural time and level differences, while neural audio synthesis generates nuanced audio textures and environments for immersive experiences, including AI-assisted audio production and virtual soundscapes.

Source and External Links

Auditory localization: a comprehensive practical review - Auditory localization is the human ability to perceive the spatial location of a sound source using binaural cues (interaural time and level differences), monaural spectral cues, and environmental factors like reverberation and relative motion.

Sound localization - Sound localization is the process by which the brain determines the direction, elevation, and distance of a sound source by analyzing subtle differences in timing, intensity, and spectral content between the two ears, as well as the impact of the listener's head and body on sound waves.

Chapter 12: Sound Localization and the Auditory Scene - People localize sounds most accurately in front of them, use interaural time differences (ITD) to judge azimuth for low frequencies, and rely on interaural level differences (ILD) for high frequencies, while elevation judgments often require head movement or spectral cues.
