Diffusion models generate high-quality images by iteratively refining random noise through a learned probabilistic process, while ResNets use skip connections so that very deep convolutional networks can be trained efficiently. Both techniques have transformed machine learning applications, from image synthesis to classification, and they offer complementary strengths in handling complex data patterns. Explore the advances in diffusion models and ResNets to understand their impact on modern AI solutions.

Why it is important

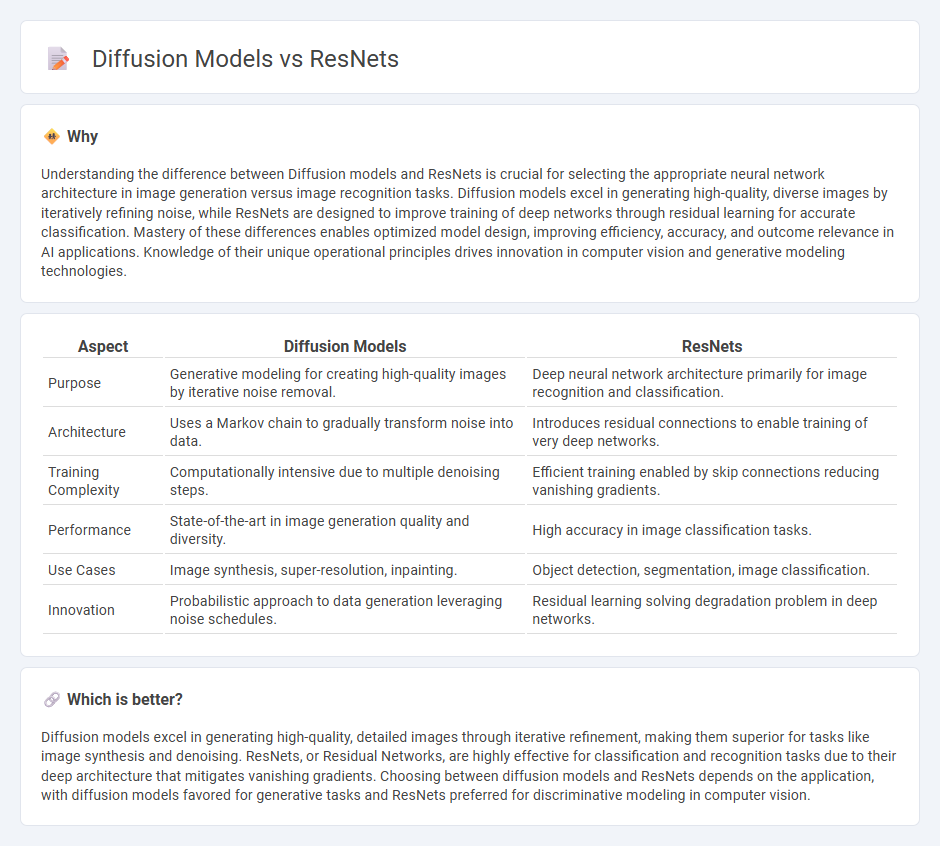

Understanding the difference between diffusion models and ResNets is crucial for choosing the right architecture, because diffusion models target image generation while ResNets target image recognition. Diffusion models excel at generating high-quality, diverse images by iteratively refining noise, whereas ResNets use residual learning to make deep networks trainable for accurate classification. Knowing these differences helps in designing models that are efficient, accurate, and suited to the task at hand, and understanding how each one works drives innovation in computer vision and generative modeling.

Comparison Table

| Aspect | Diffusion Models | ResNets |
|---|---|---|
| Purpose | Generative modeling: creating high-quality images by iteratively removing noise. | Deep neural network architecture, primarily for image recognition and classification. |
| Architecture | A forward Markov chain gradually adds noise to data; a trained network (often a U-Net) learns to reverse the chain, turning noise into data. | Residual (skip) connections that enable training of very deep networks. |
| Computational Cost | Computationally intensive; sampling in particular requires many sequential denoising steps. | Efficient training, since skip connections mitigate vanishing gradients; inference is a single forward pass. |
| Performance | State of the art in image generation quality and diversity. | High accuracy in image classification tasks. |
| Use Cases | Image synthesis, super-resolution, inpainting. | Image classification, and as a backbone for object detection and segmentation. |
| Innovation | Probabilistic approach to data generation driven by a noise schedule. | Residual learning, which addresses the degradation problem in deep networks. |

Which is better?

Neither is better in general, because they solve different problems. Diffusion models excel at generating high-quality, detailed images through iterative refinement, which makes them the stronger choice for image synthesis and denoising. ResNets (Residual Networks) are highly effective for classification and recognition because their residual connections mitigate vanishing gradients, allowing very deep networks to train. The choice depends on the application: diffusion models for generative tasks, ResNets for discriminative modeling in computer vision.

Connection

The two are closely linked in practice. The denoising network inside a diffusion model is commonly built from residual blocks (the U-Net used in DDPM, for example, stacks ResNet-style blocks), so the skip connections that stabilize ResNet training also provide stable training and good gradient flow for diffusion models. Conceptually, both apply a sequence of small corrections: a ResNet adds a learned residual at each layer, while a diffusion model removes a little predicted noise at each step. Combining the two ideas improves robustness and performance in image synthesis and denoising.
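To make the link concrete, here is a hypothetical, heavily simplified residual block of the kind a diffusion denoiser might use, written in plain Python. It is a sketch under stated assumptions, not DDPM's actual block: a single dense layer stands in for the convolutions, the sinusoidal step embedding is simply added to the block input (real implementations usually project it and inject it inside the block), and all names and weights are illustrative.

```python
import math

def timestep_embedding(t, dim):
    """Sinusoidal embedding of the diffusion step t (sin/cos at geometrically spaced frequencies)."""
    half = dim // 2
    freqs = [math.exp(-math.log(10000.0) * i / half) for i in range(half)]
    return [math.sin(t * f) for f in freqs] + [math.cos(t * f) for f in freqs]

def dense(x, weight, bias):
    """Fully connected layer; weight is a list of rows, one row per output unit."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weight, bias)]

def relu(v):
    return [max(0.0, a) for a in v]

def residual_block(x, t, weight, bias):
    """Return x + F(x, t).

    The residual branch F sees the features plus the step embedding (a simplifying
    assumption); the skip path carries x through unchanged, as in a ResNet block.
    Assumes len(x) is even so the embedding has the same size as x.
    """
    emb = timestep_embedding(t, len(x))
    branch = relu(dense([xi + ei for xi, ei in zip(x, emb)], weight, bias))
    return [xi + bi for xi, bi in zip(x, branch)]

# Usage with hypothetical 4-dimensional features and hand-picked weights.
x = [0.5, -1.0, 2.0, 0.0]
identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
zero_w = [[0.0] * 4 for _ in range(4)]
zeros4 = [0.0] * 4

print(residual_block(x, t=10, weight=identity, bias=zeros4))
print(residual_block(x, t=500, weight=identity, bias=zeros4))
# With an all-zero branch the block reduces to the identity mapping.
print(residual_block(x, t=10, weight=zero_w, bias=zeros4) == x)
```

The same weights produce different outputs at different steps, so one network can denoise at every noise level, while the skip path guarantees the block can fall back to passing its input through untouched.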

Key Terms

Residual Connections

Residual connections in ResNets address the degradation problem by making identity mappings easy to represent: a block computes f(x) + x, so gradients can flow directly through the skip path, which improves the training of deep architectures. Diffusion models use residual connections within their denoising networks to capture complex data distributions during iterative noise removal. Explore the design and impact of residual connections in both models to understand their role in advancing deep learning performance.
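Why the identity path helps gradients flow can be seen with a toy stack of scalar linear layers. This is an illustrative sketch with hypothetical weights, not how ResNets are built or trained: each plain layer computes `w * x`, while the residual version computes `x + w * x`, the f(x) + x form. By the chain rule the input gradient of the plain stack is the product of the weights, whereas each residual layer contributes a factor of `1 + w`.

```python
def plain_forward(x, weights):
    """Stack of scalar linear layers without skips: x -> w * x."""
    for w in weights:
        x = w * x
    return x

def residual_forward(x, weights):
    """Same layers wrapped as residual blocks: x -> x + w * x, i.e. f(x) + x."""
    for w in weights:
        x = x + w * x
    return x

def input_gradient(weights, residual):
    """d(output)/d(input) for the stacks above, via the chain rule.

    A plain layer contributes a factor w; a residual layer contributes 1 + w,
    because d/dx [f(x) + x] = f'(x) + 1.
    """
    grad = 1.0
    for w in weights:
        grad *= (1.0 + w) if residual else w
    return grad

# Hypothetical 50-layer stack with small weights alternating between +0.1 and -0.1.
weights = [0.1 if i % 2 == 0 else -0.1 for i in range(50)]

print(f"plain gradient:    {input_gradient(weights, residual=False):.3e}")
print(f"residual gradient: {input_gradient(weights, residual=True):.3e}")
```

With these weights the plain product shrinks to a magnitude of about 1e-50, while the residual product is 0.99^25, roughly 0.78, so a usable signal still reaches the earliest layers.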

Generative Process

ResNets, primarily designed for image recognition, use deep residual learning with skip connections to improve gradient flow and model complex transformations; residual blocks are also widely reused inside generative frameworks such as GANs and VAEs. Diffusion models generate images by iteratively denoising a sample of random noise through a learned reverse Markov chain, learning the data distribution as a sequence of gradual refinements, which yields high-quality image synthesis. Explore the underlying mechanisms and applications of these models to understand their distinct generative capabilities.
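The forward (noising) half of the Markov chain has a closed form, which a short sketch can show. This is a minimal illustration, not a reference implementation: it assumes a single one-dimensional data point and the linear variance schedule from the original DDPM paper (beta rising from 1e-4 to 0.02 over T = 1000 steps), and the function names are hypothetical. Because the Gaussian noise added at each step composes, `x_t` can be drawn directly from `x0` as `sqrt(abar_t) * x0 + sqrt(1 - abar_t) * eps`, where `abar_t` is the cumulative product of `1 - beta`.

```python
import math
import random

def linear_beta_schedule(T=1000, beta_start=1e-4, beta_end=0.02):
    """Linearly spaced noise variances beta_1..beta_T (stored at indices 0..T-1)."""
    return [beta_start + (beta_end - beta_start) * i / (T - 1) for i in range(T)]

def alpha_bars(betas):
    """Cumulative products abar_t = prod over s <= t of (1 - beta_s)."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out

def q_sample(x0, t, abar, rng):
    """Jump straight to step t of the forward chain:
    x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * eps, with eps ~ N(0, 1)."""
    eps = rng.gauss(0.0, 1.0)
    return math.sqrt(abar[t]) * x0 + math.sqrt(1.0 - abar[t]) * eps

betas = linear_beta_schedule()
abar = alpha_bars(betas)
rng = random.Random(0)

x0 = 3.0  # a hypothetical 1-D "data point"
for t in (0, 250, 500, 999):  # index 999 is the final step T
    print(f"t={t:4d}  signal weight={math.sqrt(abar[t]):.4f}  x_t={q_sample(x0, t, abar, rng):+.3f}")
```

Because the signal weight `sqrt(abar_t)` falls well below 0.01 by the last step, `x_T` is essentially standard Gaussian noise, which is why generation can start from pure noise and run the learned reverse chain.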

Denoising

ResNets can be applied to denoising through residual learning: the network predicts the noise component of a corrupted image, and the skip connection lets the clean estimate be formed by subtracting that prediction from the input. Diffusion models tackle denoising through a stochastic process that iteratively refines data from pure noise into structured outputs with high fidelity. Explore the underlying mechanisms and performance benchmarks to understand which model best suits your denoising application.
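The iterative denoising that diffusion models perform can be sketched on a one-dimensional toy problem. This is a minimal sketch, not a production sampler: it assumes Gaussian data N(3, 0.5²) (hypothetical values), uses the DDPM linear schedule, and replaces the trained network with the closed-form optimal noise predictor, which exists only because the toy data are Gaussian. `predict_noise` and `sample` are illustrative names. Note that the "network" predicts the noise rather than the clean sample, the same residual-style parameterization described above.

```python
import math
import random

# Hypothetical toy setup: 1-D data drawn from N(MU, S**2), DDPM linear schedule.
MU, S = 3.0, 0.5
T = 1000
betas = [1e-4 + (0.02 - 1e-4) * i / (T - 1) for i in range(T)]
alphas = [1.0 - b for b in betas]
abar = []
prod = 1.0
for a in alphas:
    prod *= a
    abar.append(prod)

def predict_noise(x_t, t):
    """Stand-in for a trained denoising network.

    x_t = sqrt(abar) * x0 + sqrt(1 - abar) * eps is jointly Gaussian with eps when the
    data are Gaussian, so E[eps | x_t] has a closed form that we use instead of weights.
    """
    a = abar[t]
    var_xt = a * S ** 2 + (1.0 - a)
    return math.sqrt(1.0 - a) * (x_t - math.sqrt(a) * MU) / var_xt

def sample(rng):
    """Ancestral sampling: start from pure noise and apply T denoising steps."""
    x = rng.gauss(0.0, 1.0)
    for t in reversed(range(T)):
        eps_hat = predict_noise(x, t)
        # DDPM reverse-step mean, using the predicted noise in place of the true noise.
        x = (x - betas[t] / math.sqrt(1.0 - abar[t]) * eps_hat) / math.sqrt(alphas[t])
        if t > 0:
            # Fresh noise with variance beta_t (one of the two choices in DDPM); none on the last step.
            x += math.sqrt(betas[t]) * rng.gauss(0.0, 1.0)
    return x

rng = random.Random(0)
xs = [sample(rng) for _ in range(500)]
mean = sum(xs) / len(xs)
std = math.sqrt(sum((x - mean) ** 2 for x in xs) / (len(xs) - 1))
print(f"sample mean {mean:.3f} (target {MU}), sample std {std:.3f} (target {S})")
```

Each step removes only a small amount of predicted noise, yet after all 1000 steps the samples should closely match the target mean and spread; in a real model `predict_noise` would be a trained network such as a residual U-Net.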

Source and External Links

Detailed Guide to Understand and Implement ResNets - ResNet is a powerful deep neural network architecture known for its outstanding performance in image classification challenges such as ILSVRC 2015, with variants such as ResNet-18, ResNet-34, and ResNet-50 named after their number of layers.

Deep Residual Networks (ResNet, ResNet50) A Complete ... - ResNet introduces residual blocks with "skip connections" to overcome training issues like vanishing gradients, enabling very deep networks to train effectively and improve accuracy in computer vision tasks.

Residual neural network - ResNet architecture features residual connections of the form \(x \mapsto f(x) + x\), allowing layers to learn residual functions, which stabilizes the training of very deep neural networks and is widely used beyond vision tasks in models like BERT and GPT.

dowidth.com
