Prompt engineering is the practice of designing inputs (prompts) that steer an AI model toward a desired output without changing the model itself. Fine-tuning continues training a pre-trained model on a specialized dataset so that it performs better on a particular task or domain. The sections below compare the two approaches and where each applies.

Why it is important

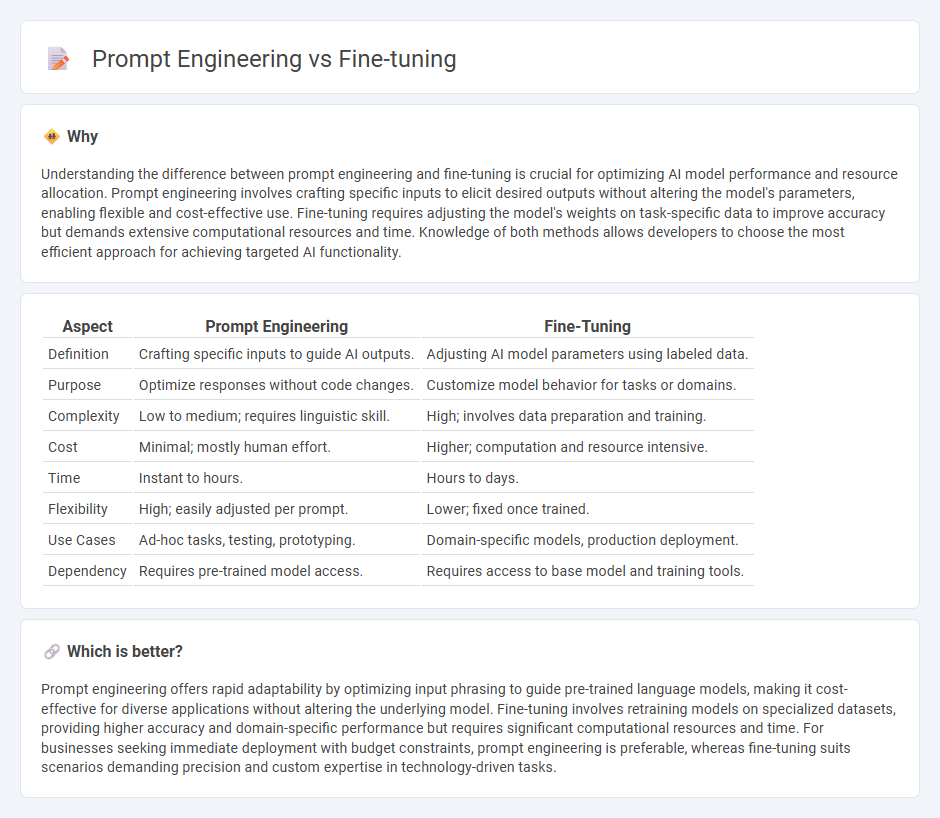

Understanding the difference between prompt engineering and fine-tuning matters for both model performance and resource allocation. Prompt engineering crafts inputs that elicit the desired output without changing the model's parameters, which keeps it flexible and inexpensive. Fine-tuning updates the model's weights on task-specific data, which can improve accuracy on that task but generally costs more compute and time. Knowing both methods lets developers choose the more efficient approach for a given goal.
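As a rough illustration of crafting inputs without touching model parameters, the sketch below assembles a few-shot prompt as plain text. The function name `build_few_shot_prompt`, the `Review:`/`Sentiment:` labels, and the sample reviews are all hypothetical, chosen only for this example; no model is called, since the point is that the only thing being engineered is the input string.

```python
from typing import List, Tuple


def build_few_shot_prompt(
    instruction: str,
    examples: List[Tuple[str, str]],
    query: str,
) -> str:
    """Assemble an instruction, worked examples, and a new input into one prompt."""
    parts = [instruction.strip(), ""]
    for text, label in examples:
        parts.append(f"Review: {text}")
        parts.append(f"Sentiment: {label}")
        parts.append("")
    # Leave the final label blank so the model is nudged to complete it.
    parts.append(f"Review: {query}")
    parts.append("Sentiment:")
    return "\n".join(parts)


# Hypothetical task and examples, used only to show the shape of the prompt.
prompt = build_few_shot_prompt(
    "Classify each review as positive or negative.",
    [
        ("The battery lasts all day.", "positive"),
        ("It broke after a week.", "negative"),
    ],
    "Setup was quick and painless.",
)
print(prompt)
```

Changing the instruction or swapping the examples takes seconds and needs no training run, which is where the flexibility described above comes from.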

Comparison Table

| Aspect | Prompt Engineering | Fine-Tuning |
|---|---|---|
| Definition | Crafting specific inputs to guide AI outputs. | Adjusting AI model parameters using labeled data. |
| Purpose | Improve responses without changing the model. | Customize model behavior for tasks or domains. |
| Complexity | Low to medium; requires linguistic skill. | High; involves data preparation and training. |
| Cost | Minimal; mostly human effort. | Higher; compute and resource intensive. |
| Time | Instant to hours. | Hours to days. |
| Flexibility | High; easily adjusted per prompt. | Lower; changes require retraining. |
| Use Cases | Ad-hoc tasks, testing, prototyping. | Domain-specific models, production deployment. |
| Dependency | Requires access to a pre-trained model. | Requires access to the base model and training tools. |

Which is better?

Neither approach is better in every case. Prompt engineering adapts quickly: rephrasing the input steers a pre-trained language model at low cost and without changing the underlying model. Fine-tuning retrains the model on a specialized dataset, which can yield higher accuracy and stronger domain-specific performance but requires significant compute and time. Prompt engineering is usually preferable for businesses that need to deploy quickly on a limited budget, whereas fine-tuning suits tasks that demand high precision or specialized domain expertise.

Connection

The two techniques are complementary. Prompt engineering shapes the input to get the most out of a model, while fine-tuning adapts the model itself by adjusting its weights on domain-specific data. Both aim to make outputs more accurate and relevant in language understanding and generation tasks, and they can be combined: a fine-tuned model still benefits from well-designed prompts.

Key Terms

Pre-trained Model

A pre-trained model is the common starting point for both approaches. Fine-tuning adjusts its weights on a specific dataset to improve performance on targeted tasks. Prompt engineering relies on the capabilities the model already has, using well-crafted prompts and contextual cues to shape responses without altering its parameters.

Parameter Adjustment

Fine-tuning adjusts the internal parameters of a pre-trained model, updating its weights through additional training to improve performance on specific tasks. Prompt engineering, in contrast, leaves the parameters untouched and shapes responses through the instructions and context supplied in the prompt.
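The difference can be made concrete with a deliberately tiny stand-in for a model. The sketch below is a toy, not how language models are actually trained: it assumes a two-weight linear model, hypothetical "pre-trained" weights, and three made-up task examples. Fine-tuning runs gradient descent and produces new weights; the prompt-style path keeps the original weights and varies only the input, which is a loose analogy at best.

```python
from typing import List, Tuple

Example = Tuple[List[float], float]


def predict(weights: List[float], features: List[float]) -> float:
    """Linear model: weighted sum of the input features."""
    return sum(w * x for w, x in zip(weights, features))


def fine_tune(
    weights: List[float],
    data: List[Example],
    lr: float = 0.1,
    epochs: int = 200,
) -> List[float]:
    """Return updated weights after stochastic gradient descent on squared error."""
    w = list(weights)  # copy, so the pre-trained weights stay untouched
    for _ in range(epochs):
        for features, target in data:
            error = predict(w, features) - target
            # Gradient of 0.5 * error**2 with respect to w_i is error * x_i.
            w = [wi - lr * error * xi for wi, xi in zip(w, features)]
    return w


# Hypothetical "pre-trained" weights and task data generated by y = 1*x1 + 2*x2.
pretrained = [1.0, 0.0]
task_data: List[Example] = [
    ([1.0, 0.0], 1.0),
    ([0.0, 1.0], 2.0),
    ([1.0, 1.0], 3.0),
]

tuned = fine_tune(pretrained, task_data)

# Fine-tuning path: the parameters themselves have moved toward [1.0, 2.0].
print("tuned weights:", [round(w, 3) for w in tuned])

# Prompt-style path: same frozen weights, only the input differs.
print("frozen weights, new input:", predict(pretrained, [3.0, 0.0]))
```

The copy inside `fine_tune` mirrors common practice: the base model is kept as-is and the tuned weights are stored as a separate artifact.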

Instruction Design

Fine-tuning modifies a pre-trained model by updating its weights with task-specific data so that it follows targeted instructions better, whereas prompt engineering designs precise input prompts to elicit the desired response without altering the model itself. Instruction design for fine-tuning typically requires a curated dataset of examples and the compute to train on it, while a well-crafted prompt depends on linguistic strategy and contextual understanding.
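To show how the same instruction can feed either path, the sketch below renders it once as a prompt and once as a training record. Everything here is an assumption made for illustration: the helper names, the prompt layout, and the `instruction`/`input`/`output` field names are not a required format, and real fine-tuning services define their own schemas.

```python
import json
from typing import List


def render_instruction_prompt(
    role: str,
    task: str,
    constraints: List[str],
    output_format: str,
    user_input: str,
) -> str:
    """Prompt-engineering path: spell out role, task, constraints, and format."""
    lines = [f"You are {role}.", f"Task: {task}", "Constraints:"]
    lines += [f"- {c}" for c in constraints]
    lines += [f"Output format: {output_format}", "", f"Input: {user_input}"]
    return "\n".join(lines)


def to_training_record(instruction: str, user_input: str, ideal_output: str) -> str:
    """Fine-tuning path: one supervised example as a JSON line (illustrative schema)."""
    return json.dumps(
        {"instruction": instruction, "input": user_input, "output": ideal_output}
    )


# Hypothetical task used for both paths.
task = "Summarize the support ticket in one sentence."
ticket = "App crashes when I upload a photo larger than 10 MB."

prompt = render_instruction_prompt(
    role="a support analyst",
    task=task,
    constraints=["Use plain language", "Do not speculate about causes"],
    output_format="A single sentence",
    user_input=ticket,
)
record = to_training_record(task, ticket, "The app crashes on photo uploads over 10 MB.")

print(prompt)
print(record)
```

A single prompt can be revised and retried immediately, while a fine-tuning dataset needs many such records before training is worthwhile, which is the cost difference the paragraph above describes.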

Source and External Links

What is Fine-Tuning? - GeeksforGeeks - Fine-tuning is the process of adjusting the weights of a pre-trained model's layers, often freezing earlier layers and updating later ones, to adapt the model for a new, usually smaller or domain-specific task using knowledge from a larger dataset.

Fine-Tuning LLMs: A Guide With Examples - DataCamp - Fine-tuning involves further training a pre-trained model on a domain-specific labeled dataset to enhance performance on specialized tasks such as sentiment analysis or domain-specific language understanding.

What is Fine-Tuning? | IBM - Fine-tuning is a transfer learning technique to adapt a pre-trained neural network, such as large language models or vision models, to niche use cases by reducing computing and data needs through further training on task-specific data.

dowidth.com
