Generative fill uses AI to synthesize realistic image content for missing or selected areas, which supports creative workflows in design and media. Semantic segmentation assigns a class label to every pixel in an image so that objects and regions can be separated, which enables precise analysis in applications such as autonomous driving and medical imaging.

Why it is important

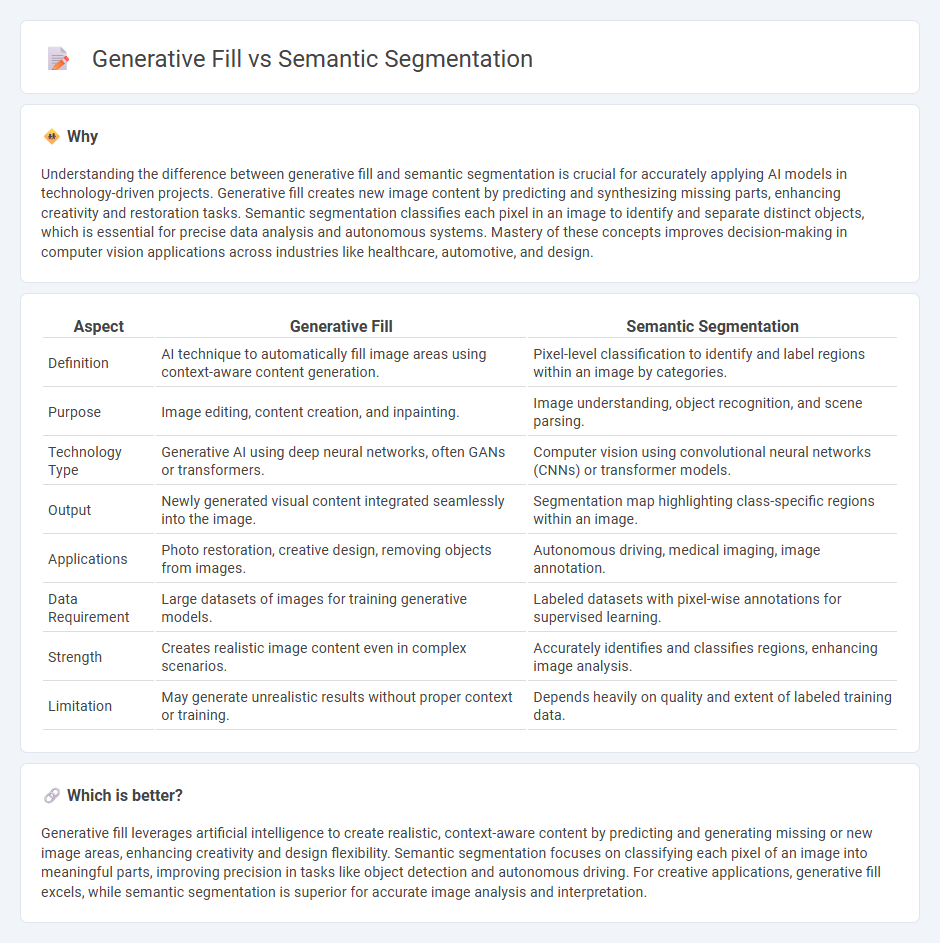

Understanding the difference between generative fill and semantic segmentation matters because the two solve opposite problems: one produces pixels, the other labels them. Generative fill creates new image content by predicting and synthesizing missing parts, which suits creative and restoration tasks. Semantic segmentation classifies each pixel in an image to identify and separate distinct objects, which is essential for data analysis and autonomous systems. Knowing which kind of output a project needs makes it easier to choose the right model in fields such as healthcare, automotive, and design.

Comparison Table

| Aspect | Generative Fill | Semantic Segmentation |
|---|---|---|
| Definition | AI technique to automatically fill image areas using context-aware content generation. | Pixel-level classification to identify and label regions within an image by categories. |
| Purpose | Image editing, content creation, and inpainting. | Image understanding, object recognition, and scene parsing. |
| Technology Type | Generative AI using deep neural networks, often GANs or transformers. | Computer vision using convolutional neural networks (CNNs) or transformer models. |
| Output | Newly generated visual content integrated seamlessly into the image. | Segmentation map highlighting class-specific regions within an image. |
| Applications | Photo restoration, creative design, removing objects from images. | Autonomous driving, medical imaging, image annotation. |
| Data Requirement | Large datasets of images for training generative models. | Labeled datasets with pixel-wise annotations for supervised learning. |
| Strength | Creates realistic image content even in complex scenarios. | Accurately identifies and classifies regions, enhancing image analysis. |
| Limitation | May generate unrealistic results without proper context or training. | Depends heavily on quality and extent of labeled training data. |

Which is better?

Neither technique is better in general, because each solves a different problem. Generative fill uses artificial intelligence to create realistic, context-aware content by predicting and generating missing or new image areas, which adds creative and design flexibility. Semantic segmentation classifies each pixel of an image into a meaningful category, which gives the pixel-accurate region boundaries needed for scene understanding in areas like autonomous driving. For creative applications, generative fill is the right tool; for image analysis and interpretation, semantic segmentation is.

Connection

Generative fill can use semantic segmentation to identify and separate distinct objects or regions within an image, so that content generation targets a specific region and blends with the surrounding context. The pixel-level class labels from segmentation can be turned into a mask that tells the fill algorithm which area to reconstruct, while the unmasked pixels supply the textures and structures it should stay coherent with. Combining the two improves precision and realism in image editing, augmented reality, and other computer vision applications.
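The hand-off from segmentation to fill can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the method of any particular product: the label map, the class id `1`, and the `generated` array (a stand-in for the output of a generative model) are all hypothetical.

```python
import numpy as np

def composite(original: np.ndarray, generated: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Use generated pixels where mask is True and original pixels elsewhere.

    original, generated: (H, W, 3) arrays; mask: (H, W) boolean array.
    """
    # Add a trailing axis so the (H, W) mask broadcasts over the colour channels.
    return np.where(mask[..., None], generated, original)

# Hypothetical segmentation output: class 1 marks the object to replace.
label_map = np.array([[0, 1],
                      [0, 1]])
mask = label_map == 1

original = np.full((2, 2, 3), 10, dtype=np.uint8)    # toy source image
generated = np.full((2, 2, 3), 200, dtype=np.uint8)  # placeholder for model output

result = composite(original, generated, mask)
print(result[:, :, 0])
```

Only the right-hand column, which the label map assigns to class 1, takes the generated values; the left-hand column keeps the original pixels.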

Key Terms

Pixel-wise Classification

Semantic segmentation performs pixel-wise classification: it assigns each pixel a class label, which enables precise object recognition and boundary delineation. Generative fill instead uses the surrounding image context to synthesize new pixel values, so its focus is content creation and restoration rather than classification.
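As a minimal sketch of pixel-wise classification, assume a model has already produced one score per class for every pixel, stored as a `(C, H, W)` array. The label map is then the index of the highest-scoring class at each pixel. The scores and class names below are made up for illustration.

```python
import numpy as np

def pixelwise_classify(scores: np.ndarray) -> np.ndarray:
    """Return an (H, W) label map from (C, H, W) per-class scores."""
    return np.argmax(scores, axis=0)

# Toy scores for three hypothetical classes on a 2x2 image.
scores = np.array([
    [[2.0, 0.1], [0.3, 0.2]],  # class 0, e.g. "background"
    [[0.5, 3.0], [0.1, 0.4]],  # class 1, e.g. "car"
    [[0.1, 0.2], [1.5, 0.9]],  # class 2, e.g. "road"
])

label_map = pixelwise_classify(scores)
print(label_map)
# [[0 1]
#  [2 2]]
```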

Mask Generation

Semantic segmentation generates precise masks by classifying each pixel into predefined categories, which delineates objects in detail. Generative fill works in the other direction: it takes a mask, drawn by a user or derived from a segmentation, as input and synthesizes or completes the masked region based on the surrounding context, which is why it fits naturally into image editing workflows.
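A short sketch of how a fill mask might be derived from a segmentation label map. The helper names `class_mask` and `dilate` are hypothetical, and growing the mask by a pixel or two is a common editing convenience assumed here, not something the techniques require.

```python
import numpy as np

def class_mask(label_map: np.ndarray, class_id: int) -> np.ndarray:
    """Boolean mask that is True wherever the label map equals class_id."""
    return label_map == class_id

def dilate(mask: np.ndarray, iterations: int = 1) -> np.ndarray:
    """Grow a boolean mask by one pixel per iteration (4-neighbourhood)."""
    out = mask.copy()
    for _ in range(iterations):
        p = np.pad(out, 1, mode="constant", constant_values=False)
        # A pixel is set if it, or its up/down/left/right neighbour, was set.
        out = p[1:-1, 1:-1] | p[:-2, 1:-1] | p[2:, 1:-1] | p[1:-1, :-2] | p[1:-1, 2:]
    return out

# Hypothetical 4x4 label map with a single pixel of class 1.
label_map = np.zeros((4, 4), dtype=int)
label_map[1, 1] = 1

mask = class_mask(label_map, 1)
grown = dilate(mask)
print(int(mask.sum()), int(grown.sum()))  # 1 5
```

Dilating the mask gives the fill step a small margin around the object, so edge pixels that the segmentation missed are also regenerated.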

Content Synthesis

Semantic segmentation identifies and classifies image regions to describe scene structure, which makes it a tool for content analysis rather than content synthesis. Generative fill uses deep learning to synthesize realistic content for missing or corrupted areas, which supports image restoration and manipulation tasks.
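To make "filling a missing area from its context" concrete, here is a deliberately simple stand-in. It is a classical diffusion-style fill that repeatedly averages neighbouring pixels, not a generative model, and it only produces smooth content; a learned model would replace this step with synthesized texture and structure. The function name and the toy image are assumptions for illustration, and the sketch assumes at least one unmasked pixel.

```python
import numpy as np

def diffuse_fill(image: np.ndarray, mask: np.ndarray, iterations: int = 200) -> np.ndarray:
    """Fill masked pixels of a grayscale image by repeated 4-neighbour averaging.

    image: (H, W) array; mask: (H, W) boolean array, True where content is missing.
    """
    out = image.astype(float).copy()
    out[mask] = out[~mask].mean()  # neutral starting guess for the hole
    for _ in range(iterations):
        p = np.pad(out, 1, mode="edge")
        avg = (p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:]) / 4.0
        out[mask] = avg[mask]  # only masked pixels are ever updated
    return out

# Toy image: a horizontal gradient with one missing pixel in the centre.
image = np.tile(np.arange(5, dtype=float), (5, 1))
mask = np.zeros((5, 5), dtype=bool)
mask[2, 2] = True

filled = diffuse_fill(image, mask)
print(filled[2, 2])  # 2.0
```

The missing pixel is recovered from its four known neighbours (2, 2, 1, and 3), while every unmasked pixel is left unchanged.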


dowidth.com
