Edge AI processes data locally on devices such as smartphones or IoT sensors, enabling real-time decision-making and reducing reliance on cloud connectivity. Embedded AI integrates artificial intelligence directly into hardware components, optimizing performance for specific functions within devices like smart cameras or wearable technology. The sections below compare the two approaches and their typical applications.

Why it is important

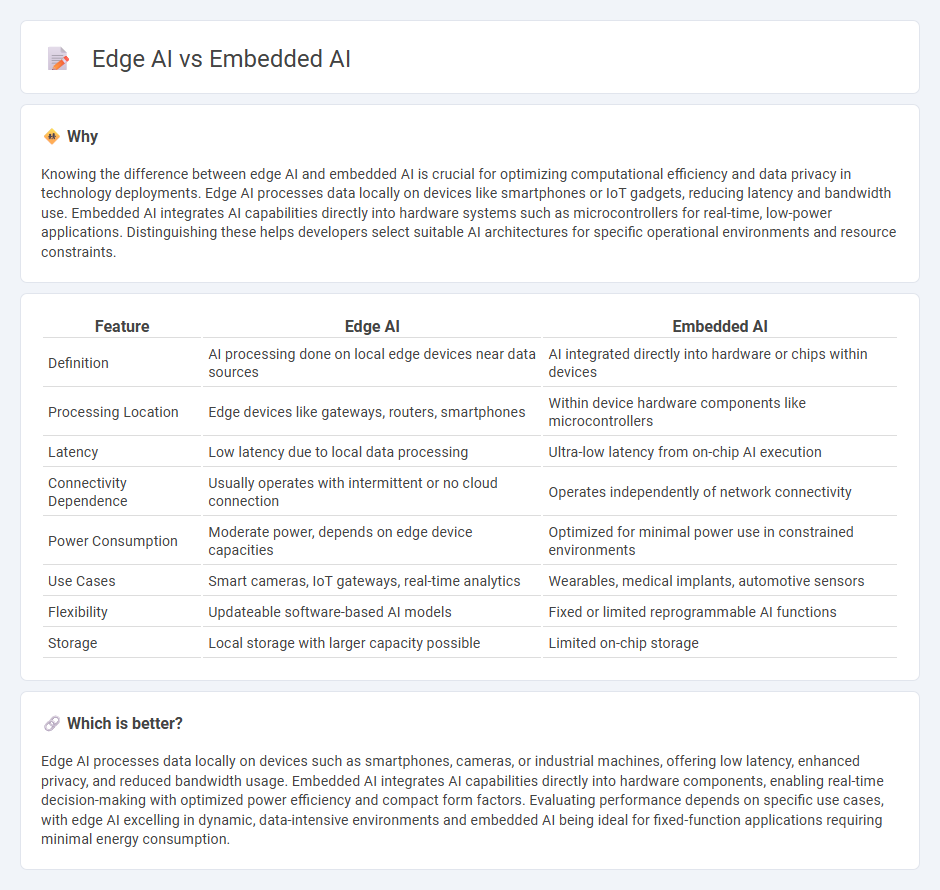

Knowing the difference between edge AI and embedded AI matters for optimizing computational efficiency and data privacy in technology deployments. Edge AI processes data locally on devices like smartphones or IoT gateways, reducing latency and bandwidth use. Embedded AI integrates AI capabilities directly into hardware systems such as microcontrollers for real-time, low-power applications. Distinguishing the two helps developers select a suitable AI architecture for a given operational environment and its resource constraints.

Comparison Table

| Feature | Edge AI | Embedded AI |
|---|---|---|
| Definition | AI processing done on local edge devices near data sources | AI integrated directly into hardware or chips within devices |
| Processing Location | Edge devices like gateways, routers, smartphones | Within device hardware components like microcontrollers |
| Latency | Low latency due to local data processing | Ultra-low latency from on-chip AI execution |
| Connectivity Dependence | Usually operates with intermittent or no cloud connection | Operates independently of network connectivity |
| Power Consumption | Moderate power, depends on edge device capacities | Optimized for minimal power use in constrained environments |
| Use Cases | Smart cameras, IoT gateways, real-time analytics | Wearables, medical implants, automotive sensors |
| Flexibility | Updateable software-based AI models | Fixed or limited reprogrammable AI functions |
| Storage | Local storage with larger capacity possible | Limited on-chip storage |

Which is better?

Edge AI processes data locally on devices such as smartphones, cameras, or industrial machines, offering low latency, enhanced privacy, and reduced bandwidth usage. Embedded AI integrates AI capabilities directly into hardware components, enabling real-time decision-making with optimized power efficiency and compact form factors. Neither is better in general; the choice depends on the use case. Edge AI excels in dynamic, data-intensive environments where models need to be updated, while embedded AI is ideal for fixed-function applications that must run on minimal energy.

Connection

Edge AI and embedded AI both deploy artificial intelligence algorithms directly on local devices rather than relying on cloud-based processing, enabling real-time data analysis and decision-making. Edge AI focuses on processing data at the network's edge, such as on smartphones or IoT devices, reducing latency and bandwidth use, while embedded AI refers to the integration of AI capabilities within hardware components like microcontrollers and sensors. Because both keep computation local, they support efficient, autonomous operation in applications ranging from autonomous vehicles to smart home systems, and they limit how much data leaves the device.

Key Terms

On-device Processing

Embedded AI enables on-device processing by integrating AI capabilities directly within hardware components, allowing real-time data analysis without relying on cloud connectivity. Edge AI processes data locally on edge devices such as smartphones, sensors, or gateways, reducing latency and enhancing privacy by minimizing data transmission. The difference lies in where the computation sits: inside the hardware component itself for embedded AI, or on a nearby device for edge AI.
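To make "minimizing data transmission" concrete, here is a minimal Python sketch of local processing on a device. The function name `summarize_on_device`, the threshold, and the sample readings are all hypothetical and chosen only for illustration; a real deployment would likely run a trained model rather than a fixed threshold.

```python
from statistics import mean

def summarize_on_device(readings, threshold):
    """Process raw sensor readings locally and return only what needs sending.

    Hypothetical helper: instead of uploading every reading, the device
    transmits a small summary plus any readings above the threshold.
    """
    alerts = [(i, r) for i, r in enumerate(readings) if r > threshold]
    return {"count": len(readings), "mean": mean(readings), "alerts": alerts}

# Illustrative temperature readings; only one exceeds the assumed threshold.
raw = [20.1, 20.3, 20.2, 35.7, 20.4, 20.2]
payload = summarize_on_device(raw, threshold=30.0)
print(payload["alerts"])
```

Only `payload` would leave the device in this sketch, so the raw readings stay local. That is the privacy and bandwidth benefit described above.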

Real-time Inference

Embedded AI processes data locally within devices using dedicated hardware for efficient real-time inference, reducing latency and improving responsiveness in applications like autonomous vehicles and smart cameras. Edge AI also performs inference near the data source, but it often relies on more powerful edge servers that handle complex workloads while keeping latency lower than cloud processing.
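The latency argument can be sketched as simple arithmetic: end-to-end latency is inference time plus any network round trip. The Python example below uses made-up numbers purely for illustration; the deadline and every timing value are assumptions, not measurements.

```python
def end_to_end_latency_ms(inference_ms, network_round_trip_ms=0.0):
    """Total response time: compute time plus any network round trip."""
    return inference_ms + network_round_trip_ms

def meets_deadline(latency_ms, deadline_ms):
    """True if the response arrives within the real-time deadline."""
    return latency_ms <= deadline_ms

# All values below are hypothetical and only illustrate the trade-off.
DEADLINE_MS = 50.0
scenarios = {
    "cloud": end_to_end_latency_ms(inference_ms=10.0, network_round_trip_ms=80.0),
    "edge_server": end_to_end_latency_ms(inference_ms=15.0, network_round_trip_ms=5.0),
    "embedded": end_to_end_latency_ms(inference_ms=30.0),  # no network hop
}
results = {name: meets_deadline(ms, DEADLINE_MS) for name, ms in scenarios.items()}
print(results)
```

Under these assumed numbers, the slower embedded processor still meets the deadline because it avoids the network hop entirely, while the faster cloud model does not.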

Resource Constraints

Embedded AI operates within devices with limited computing power and memory, so algorithms must be optimized to run efficiently on microcontrollers and similar low-power processors. Edge AI processes data locally on edge devices such as IoT gateways or smartphones, which offer more substantial resources than embedded systems but still face strict latency and bandwidth constraints.
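One common way to fit a model into limited memory is quantization, which stores weights as 8-bit integers instead of 32-bit floats. The article does not name a specific technique, so the pure-Python sketch below is only an illustration of the idea; the function names and weight values are hypothetical, and production toolchains apply quantization per layer with considerably more care.

```python
def quantize_int8(weights):
    """Symmetric linear quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float values from the int8 representation."""
    return [q * scale for q in quantized]

# Illustrative weights, not taken from any real model.
weights = [0.5, -1.27, 0.03, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# Storage estimate: 4 bytes per float32 weight versus 1 byte per int8 weight.
float32_bytes = len(weights) * 4
int8_bytes = len(weights) * 1
print(q, float32_bytes, int8_bytes)
```

The trade-off is a small rounding error per weight (at most half of `scale` in this scheme) in exchange for roughly a fourfold reduction in weight storage.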

Source and External Links

What is Embedded AI and How Does it Work? - Embedded AI integrates artificial intelligence into embedded systems, enabling devices to process data and make real-time decisions locally without relying on cloud servers, using specialized low-power processors and efficient AI models like TinyML for applications such as robotics and industrial automation.

What Is Embedded AI (EAI)? Why Do We Need EAI? - Embedded AI is a framework built into network devices that manages AI models and performs real-time inference on local data, reducing transmission costs and ensuring data security while enhancing device functions like parameter optimization and fault diagnosis.

Developing Embedded AI Systems | UC Irvine DCE - Embedded AI enables low-power, cost-effective AI solutions at the device edge, requiring knowledge of sensors, signal processing, and TinyML, and is crucial for developing AI applications on constrained hardware like smartphones and drones.

dowidth.com
