Generative Adversarial Networks (GANs) use two models, a generator and a discriminator, trained against each other to produce realistic synthetic data; they are especially strong at image and video generation. Restricted Boltzmann Machines (RBMs) are energy-based models that learn a probability distribution over their inputs without labels and are commonly used for feature extraction and dimensionality reduction. This article compares how the two models work, where each is applied, and what trade-offs they involve.

Why it is important

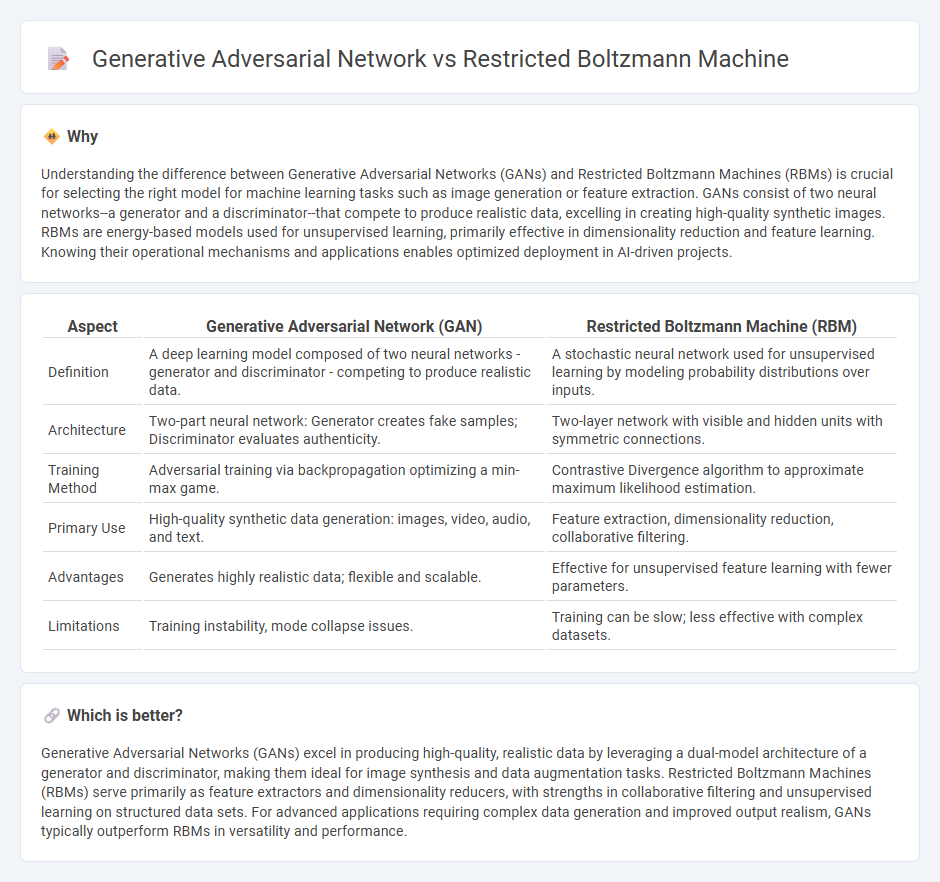

Understanding the difference between Generative Adversarial Networks (GANs) and Restricted Boltzmann Machines (RBMs) is crucial for selecting the right model for machine learning tasks such as image generation or feature extraction. GANs consist of two neural networks, a generator and a discriminator, that compete to produce realistic data, and they excel at creating high-quality synthetic images. RBMs are energy-based models used for unsupervised learning and are most effective for dimensionality reduction and feature learning. Knowing how each model works and where it performs best makes it easier to choose and deploy the right one in an AI project.

Comparison Table

| Aspect | Generative Adversarial Network (GAN) | Restricted Boltzmann Machine (RBM) |
|---|---|---|
| Definition | A deep learning model composed of two neural networks (a generator and a discriminator) that compete to produce realistic data. | A stochastic neural network used for unsupervised learning by modeling a probability distribution over its inputs. |
| Architecture | Two networks: the generator creates fake samples; the discriminator judges whether a sample is real or generated. | Two-layer network of visible and hidden units, with symmetric (undirected) connections between the layers and none within a layer. |
| Training Method | Adversarial training: the generator and discriminator are updated alternately by backpropagation on a min-max objective. | Contrastive Divergence, which approximates the maximum-likelihood gradient using a few steps of Gibbs sampling. |
| Primary Use | High-quality synthetic data generation: images, video, audio, and text. | Feature extraction, dimensionality reduction, collaborative filtering. |
| Advantages | Generates highly realistic data; flexible and scalable. | Simple, shallow model for unsupervised feature learning with relatively few parameters (one weight matrix and two bias vectors); can be stacked into deep belief networks. |
| Limitations | Training instability; mode collapse (the generator covers only part of the data distribution). | Training can be slow; the likelihood is hard to evaluate because the partition function is intractable; a single RBM struggles with complex, high-dimensional data. |
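
The two training methods in the table correspond to standard objectives, written out below as a reference sketch. The GAN line is the original min-max value function over discriminator $D$ and generator $G$; the RBM lines use the common binary-unit energy with visible biases $\mathbf{a}$, hidden biases $\mathbf{b}$, weights $W$, learning rate $\eta$, and $\langle\cdot\rangle_k$ for an average taken after $k$ steps of Gibbs sampling. The symbol names are our notation rather than anything defined elsewhere in this article.

```latex
% GAN: the generator G and discriminator D play a min-max game
\min_G \max_D \; V(D, G) =
  \mathbb{E}_{\mathbf{x} \sim p_{\text{data}}}\big[\log D(\mathbf{x})\big]
  + \mathbb{E}_{\mathbf{z} \sim p_{\mathbf{z}}}\big[\log\big(1 - D(G(\mathbf{z}))\big)\big]

% Binary RBM: energy function and the joint distribution it defines
E(\mathbf{v}, \mathbf{h}) = -\mathbf{a}^{\top}\mathbf{v} - \mathbf{b}^{\top}\mathbf{h} - \mathbf{v}^{\top} W \mathbf{h},
\qquad
p(\mathbf{v}, \mathbf{h}) = \frac{e^{-E(\mathbf{v}, \mathbf{h})}}{Z},
\qquad
Z = \sum_{\mathbf{v}, \mathbf{h}} e^{-E(\mathbf{v}, \mathbf{h})}

% Contrastive Divergence (CD-k) weight update
\Delta W = \eta \left( \langle \mathbf{v}\mathbf{h}^{\top} \rangle_{\text{data}} - \langle \mathbf{v}\mathbf{h}^{\top} \rangle_{k} \right)
```

The GAN objective never writes down a probability for a sample, while the RBM assigns one explicitly through $E$ and $Z$; that difference drives most of the contrasts in the table.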

Which is better?

Generative Adversarial Networks (GANs) excel at producing high-quality, realistic data through the interplay of a generator and a discriminator, which makes them well suited to image synthesis and data augmentation. Restricted Boltzmann Machines (RBMs) serve primarily as feature extractors and dimensionality reducers, with strengths in collaborative filtering and unsupervised learning on structured datasets. For applications that require generating complex, high-dimensional data with high realism, GANs typically outperform RBMs in both versatility and sample quality.

Connection

Generative Adversarial Networks (GANs) and Restricted Boltzmann Machines (RBMs) are connected through their foundation in unsupervised generative modeling. Both learn the distribution underlying a dataset and can generate new samples from it: GANs learn it implicitly through competition between two networks, while RBMs define an explicit energy-based probability distribution and draw samples from it by Gibbs sampling. Together, these approaches have contributed to advances in synthetic data generation, dimensionality reduction, and feature extraction across AI applications.
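
To make the energy-based view concrete, the sketch below computes the exact joint distribution of a toy binary RBM by enumerating every state. The parameter values and the helper names `energy` and `joint_distribution` are made up for illustration. Brute-force enumeration of the partition function is only feasible for a handful of units; practical RBMs never compute it, which is why approximations such as contrastive divergence are used.

```python
import itertools
import math

# Hypothetical toy parameters: 3 visible units, 2 hidden units.
W = [[0.5, -0.3],
     [0.2, 0.8],
     [-0.6, 0.1]]      # W[i][j] couples visible unit i to hidden unit j
a = [0.1, -0.2, 0.0]   # visible biases
b = [0.3, -0.1]        # hidden biases


def energy(v, h):
    """E(v, h) = -a.v - b.h - v^T W h for binary vectors v and h."""
    e = -sum(ai * vi for ai, vi in zip(a, v))
    e -= sum(bj * hj for bj, hj in zip(b, h))
    e -= sum(v[i] * W[i][j] * h[j] for i in range(len(v)) for j in range(len(h)))
    return e


def joint_distribution():
    """Exact p(v, h) = exp(-E(v, h)) / Z, enumerating all 2**(3 + 2) states."""
    states = [(v, h)
              for v in itertools.product((0, 1), repeat=len(a))
              for h in itertools.product((0, 1), repeat=len(b))]
    weights = {s: math.exp(-energy(*s)) for s in states}
    Z = sum(weights.values())  # partition function
    return {s: w / Z for s, w in weights.items()}


p = joint_distribution()

# Marginal over the visible units: p(v) = sum over h of p(v, h).
p_v = {}
for (v, h), prob in p.items():
    p_v[v] = p_v.get(v, 0.0) + prob
```

Because probability is a decreasing function of energy, the lowest-energy configuration is always the most probable one; training an RBM amounts to lowering the energy of configurations that resemble the data.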

Key Terms

Hidden Layers

Restricted Boltzmann Machines (RBMs) use a single hidden layer of stochastic units (binary in the standard formulation) to model the data distribution. The visible and hidden layers form a bipartite graph with no connections within a layer, so the hidden units are conditionally independent given the visible units, and vice versa; this lets an entire layer be sampled at once and makes training with contrastive divergence efficient. Generative Adversarial Networks (GANs) typically use multiple hidden layers in both the generator and the discriminator, relying on deep neural networks to extract features from, and generate, high-dimensional data.
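
The effect of the bipartite structure on training can be sketched with a small, pure-Python binary RBM. The class `BinaryRBM` and its methods are hypothetical names for this illustration. Because there are no within-layer connections, each conditional probability is computed from the opposite layer alone, and one CD-1 update needs only a single block-Gibbs step. Following common practice, hidden probabilities rather than sampled hidden states are used in the weight statistics; that is a design choice, not the only option.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


class BinaryRBM:
    """Hypothetical minimal binary RBM trained with CD-1 (illustrative, not optimized)."""

    def __init__(self, n_visible, n_hidden, seed=0):
        self.rng = random.Random(seed)
        # W[i][j] is the symmetric weight between visible unit i and hidden unit j.
        self.W = [[self.rng.gauss(0.0, 0.1) for _ in range(n_hidden)]
                  for _ in range(n_visible)]
        self.a = [0.0] * n_visible  # visible biases
        self.b = [0.0] * n_hidden   # hidden biases

    def hidden_probs(self, v):
        # Bipartite graph: p(h_j = 1 | v) depends only on v, never on other hidden units.
        return [sigmoid(self.b[j] + sum(v[i] * self.W[i][j] for i in range(len(v))))
                for j in range(len(self.b))]

    def visible_probs(self, h):
        # Symmetrically, p(v_i = 1 | h) depends only on h.
        return [sigmoid(self.a[i] + sum(self.W[i][j] * h[j] for j in range(len(h))))
                for i in range(len(self.a))]

    def sample(self, probs):
        # Draw each binary unit independently from its Bernoulli probability.
        return [1 if self.rng.random() < p else 0 for p in probs]

    def cd1_step(self, v0, lr=0.1):
        """One contrastive-divergence (k = 1) update from a single binary vector v0."""
        ph0 = self.hidden_probs(v0)                # positive (data) phase
        h0 = self.sample(ph0)
        v1 = self.sample(self.visible_probs(h0))   # one block-Gibbs reconstruction
        ph1 = self.hidden_probs(v1)                # negative (model) phase
        for i in range(len(self.a)):
            for j in range(len(self.b)):
                self.W[i][j] += lr * (v0[i] * ph0[j] - v1[i] * ph1[j])
            self.a[i] += lr * (v0[i] - v1[i])
        for j in range(len(self.b)):
            self.b[j] += lr * (ph0[j] - ph1[j])
        return v1


# Usage: two unlabelled binary patterns; hidden probabilities serve as learned features.
rbm = BinaryRBM(n_visible=6, n_hidden=2, seed=42)
data = [[1, 1, 1, 0, 0, 0], [0, 0, 0, 1, 1, 1]]
for epoch in range(200):
    for v in data:
        rbm.cd1_step(v)
features = [rbm.hidden_probs(v) for v in data]
```

A deep GAN has no such shortcut: its layers are trained jointly by backpropagation rather than by sampling one layer given another.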

Unsupervised Learning

Restricted Boltzmann Machines (RBMs) are energy-based models that learn a probability distribution over input data without labels, and they are often used for feature extraction and dimensionality reduction. Generative Adversarial Networks (GANs) consist of a generator and a discriminator trained simultaneously in a competitive framework, learning to produce highly realistic synthetic data without labeled examples.
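
A GAN can be trained on unlabelled data because the only targets its discriminator needs, "real" or "generated", follow from where each sample came from. A minimal sketch of assembling such a batch, assuming a toy one-dimensional dataset and a hypothetical stand-in `generator` (a real GAN would use a neural network here):

```python
import random

rng = random.Random(0)


def generator(z):
    # Hypothetical 1-D generator G(z) = 1.5 * z; in practice this is a neural network.
    return 1.5 * z


def discriminator_batch(unlabeled_data, n_fake):
    """Label a discriminator batch automatically, without human annotation.

    Every example from the unlabelled training set gets target 1 ("real");
    every generator output gets target 0 ("fake"). The target records only
    where a sample came from, not any class or category of the data.
    """
    real = [(x, 1) for x in unlabeled_data]
    fake = [(generator(rng.gauss(0.0, 1.0)), 0) for _ in range(n_fake)]
    batch = real + fake
    rng.shuffle(batch)
    return batch


# Unlabelled observations (assumed toy data).
data = [3.9, 4.2, 5.1, 3.4, 4.8]
batch = discriminator_batch(data, n_fake=5)
```

An RBM needs no targets at all: its training signal comes from comparing statistics of the data with statistics of the model's own samples.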

Generator-Discriminator

Restricted Boltzmann Machines (RBMs) use a stochastic network of visible and hidden layers to model a probability distribution, whereas Generative Adversarial Networks (GANs) pair a generator, which produces synthetic data, with a discriminator, which judges whether each sample is real or generated. During adversarial training the generator is rewarded for fooling the discriminator, which pushes it toward increasingly realistic outputs. RBMs have no such adversarial dynamic; they are trained by fitting an energy-based model directly to the data.
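
The alternating generator-discriminator updates can be sketched for a deliberately tiny one-dimensional GAN, assuming a linear generator `G(z) = wg * z + bg` and a logistic discriminator `D(x) = sigmoid(wd * x + bd)` with hand-derived gradients. All names and values are hypothetical. The discriminator step lowers its binary cross-entropy on real versus generated samples; the generator step then uses the widely used non-saturating loss `-log D(G(z))` in place of minimizing `log(1 - D(G(z)))` directly.

```python
import math
import random


def sigmoid(u):
    return 1.0 / (1.0 + math.exp(-u))


# Hypothetical 1-D GAN: G(z) = wg * z + bg, D(x) = sigmoid(wd * x + bd).
def gen(p, z):
    return [p["wg"] * zi + p["bg"] for zi in z]


def disc(p, xs):
    return [sigmoid(p["wd"] * x + p["bd"]) for x in xs]


def losses(p, real, z):
    """Return (L_D, L_G): discriminator BCE and the non-saturating generator loss."""
    d_real, d_fake = disc(p, real), disc(p, gen(p, z))
    loss_d = (-sum(math.log(d) for d in d_real) / len(real)
              - sum(math.log(1.0 - d) for d in d_fake) / len(z))
    loss_g = -sum(math.log(d) for d in d_fake) / len(z)
    return loss_d, loss_g


def d_step(p, real, z, lr=0.05):
    """Gradient step on L_D with respect to (wd, bd); the generator is held fixed."""
    fake = gen(p, z)
    s_real, s_fake = disc(p, real), disc(p, fake)
    g_wd = (sum((s - 1.0) * x for s, x in zip(s_real, real)) / len(real)
            + sum(s * x for s, x in zip(s_fake, fake)) / len(z))
    g_bd = sum(s - 1.0 for s in s_real) / len(real) + sum(s_fake) / len(z)
    p["wd"] -= lr * g_wd
    p["bd"] -= lr * g_bd


def g_step(p, z, lr=0.05):
    """Gradient step on L_G = -mean log D(G(z)) w.r.t. (wg, bg); D is held fixed."""
    s_fake = disc(p, gen(p, z))
    g_wg = sum((s - 1.0) * p["wd"] * zi for s, zi in zip(s_fake, z)) / len(z)
    g_bg = sum((s - 1.0) * p["wd"] for s in s_fake) / len(z)
    p["wg"] -= lr * g_wg
    p["bg"] -= lr * g_bg


# Usage: real data assumed to be drawn from N(4, 1); noise z from N(0, 1).
rng = random.Random(0)
real = [rng.gauss(4.0, 1.0) for _ in range(16)]
z = [rng.gauss(0.0, 1.0) for _ in range(16)]
params = {"wd": 0.1, "bd": 0.0, "wg": 1.0, "bg": 0.0}

ld_before, _ = losses(params, real, z)
d_step(params, real, z)
ld_after, lg_before = losses(params, real, z)
g_step(params, z)
_, lg_after = losses(params, real, z)
```

In this setup the discriminator step increases `wd`, so the generator step nudges `bg` upward, moving generated samples toward the real data. Repeating the two steps is the adversarial loop; in real GANs both players are deep networks and the gradients come from backpropagation.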


dowidth.com
