Liquid neural networks adapt dynamically to changing data inputs by continuously updating their parameters, offering advantages in real-time learning and energy efficiency. Transformer networks excel in processing sequential data with self-attention mechanisms, enabling powerful natural language understanding and generation. Explore the distinctions between these architectures to discover their unique applications and capabilities.

Why it is important

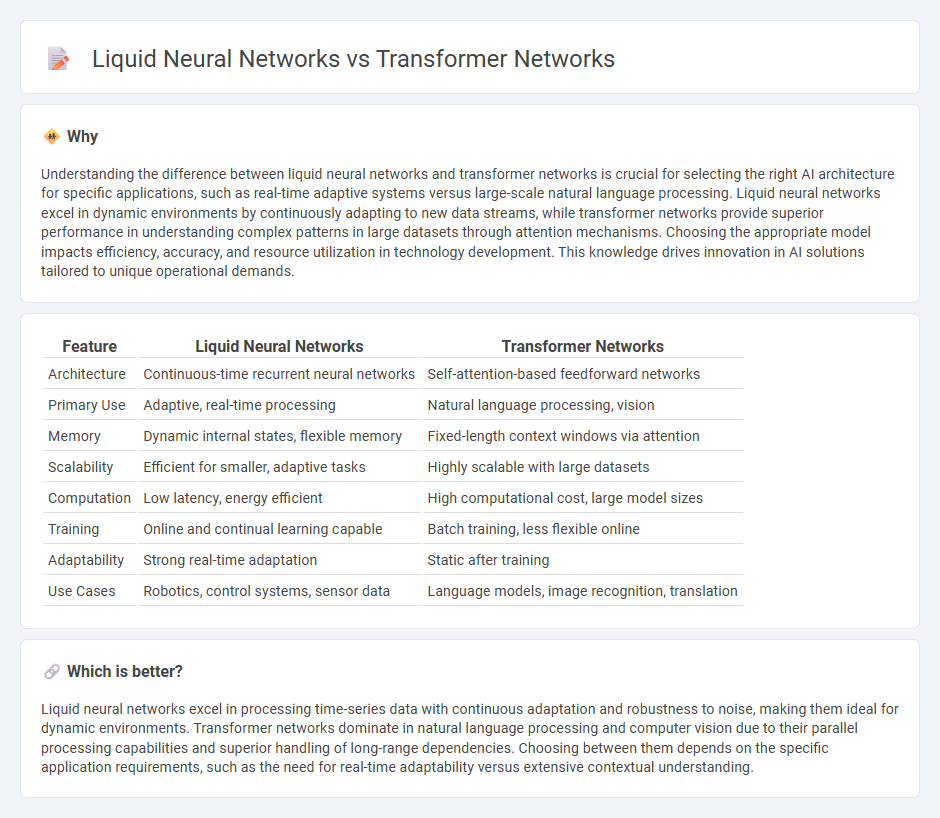

Understanding the difference between liquid neural networks and transformer networks is crucial for selecting the right AI architecture for specific applications, such as real-time adaptive systems versus large-scale natural language processing. Liquid neural networks excel in dynamic environments by continuously adapting to new data streams, while transformer networks provide superior performance in understanding complex patterns in large datasets through attention mechanisms. Choosing the appropriate model impacts efficiency, accuracy, and resource utilization in technology development. This knowledge drives innovation in AI solutions tailored to unique operational demands.

Comparison Table

| Feature | Liquid Neural Networks | Transformer Networks |

|---|---|---|

| Architecture | Continuous-time recurrent neural networks | Self-attention-based feedforward networks |

| Primary Use | Adaptive, real-time processing | Natural language processing, vision |

| Memory | Dynamic internal states, flexible memory | Fixed-length context windows via attention |

| Scalability | Efficient for smaller, adaptive tasks | Highly scalable with large datasets |

| Computation | Low latency, energy efficient | High computational cost, large model sizes |

| Training | Online and continual learning capable | Batch training, less flexible online |

| Adaptability | Strong real-time adaptation | Static after training |

| Use Cases | Robotics, control systems, sensor data | Language models, image recognition, translation |

Which is better?

Liquid neural networks excel in processing time-series data with continuous adaptation and robustness to noise, making them ideal for dynamic environments. Transformer networks dominate in natural language processing and computer vision due to their parallel processing capabilities and superior handling of long-range dependencies. Choosing between them depends on the specific application requirements, such as the need for real-time adaptability versus extensive contextual understanding.

Connection

Liquid neural networks and transformer networks are connected through their advanced architectures designed to improve adaptive learning and sequence processing. Liquid neural networks dynamically adjust their parameters in real-time, enhancing flexibility, while transformer networks utilize self-attention mechanisms to capture long-range dependencies in data. This synergy enables more efficient handling of complex temporal and contextual information in applications like natural language processing and robotics.

Key Terms

Attention (Transformer Networks)

Transformer networks utilize attention mechanisms to dynamically weigh the importance of different input tokens, enabling context-aware processing and improved handling of long-range dependencies in sequential data. Liquid neural networks adapt their internal state continuously over time, but lack explicit attention modules, resulting in different approaches to sequence learning with a focus on temporal adaptability. Explore the nuances of attention in these architectures to understand their impact on natural language processing and time-series prediction.

Memory Cells (Liquid Neural Networks)

Liquid Neural Networks utilize dynamic memory cells that adapt continuously to new information, enabling real-time learning and improved temporal processing compared to Transformer networks, which rely on fixed attention mechanisms without explicit memory cells. These adaptive memory cells in Liquid Neural Networks allow for efficient handling of streaming data and better modeling of time-dependent patterns. Discover how these distinctive memory architectures impact performance across various AI applications.

Sequence Modeling

Transformer networks leverage self-attention mechanisms to capture long-range dependencies in sequence modeling, excelling in natural language processing and time-series analysis with parallel processing capabilities. Liquid neural networks use continuous-time dynamics and adaptable state transitions, enabling robust handling of temporal variability and real-time adaptation in sequential data. Explore deeper insights into how these architectures revolutionize sequence modeling and their respective applications.

Source and External Links

What is a Transformer Model? - Transformer models are neural networks using self-attention mechanisms that excel at processing sequences by detecting relationships between input elements, outperforming earlier RNNs and CNNs, and are widely used in language, vision, and multimodal tasks.

What Is a Transformer Model? - A transformer model learns contextual relationships in sequential data, enabling applications from language understanding to fraud detection, and has largely replaced traditional CNNs and RNNs due to its efficiency and ability for self-supervised learning.

How Transformers Work: A Detailed Exploration of Transformer Architecture - Transformers use an encoder-decoder structure built entirely on self-attention and feedforward layers, allowing parallel processing of sequence data to generate outputs like translations without relying on recurrent or convolutional networks.

dowidth.com

dowidth.com