Neuromorphic computing mimics the brain's neural architecture to improve processing efficiency and reduce energy consumption, using spiking neural networks to interpret data in real time. Reservoir computing passes input signals through a fixed, high-dimensional recurrent network (the reservoir) and trains only a linear readout on the resulting states, which makes learning fast for temporal tasks. The sections below compare the two paradigms, their applications, and their impact on AI.

Why it is important

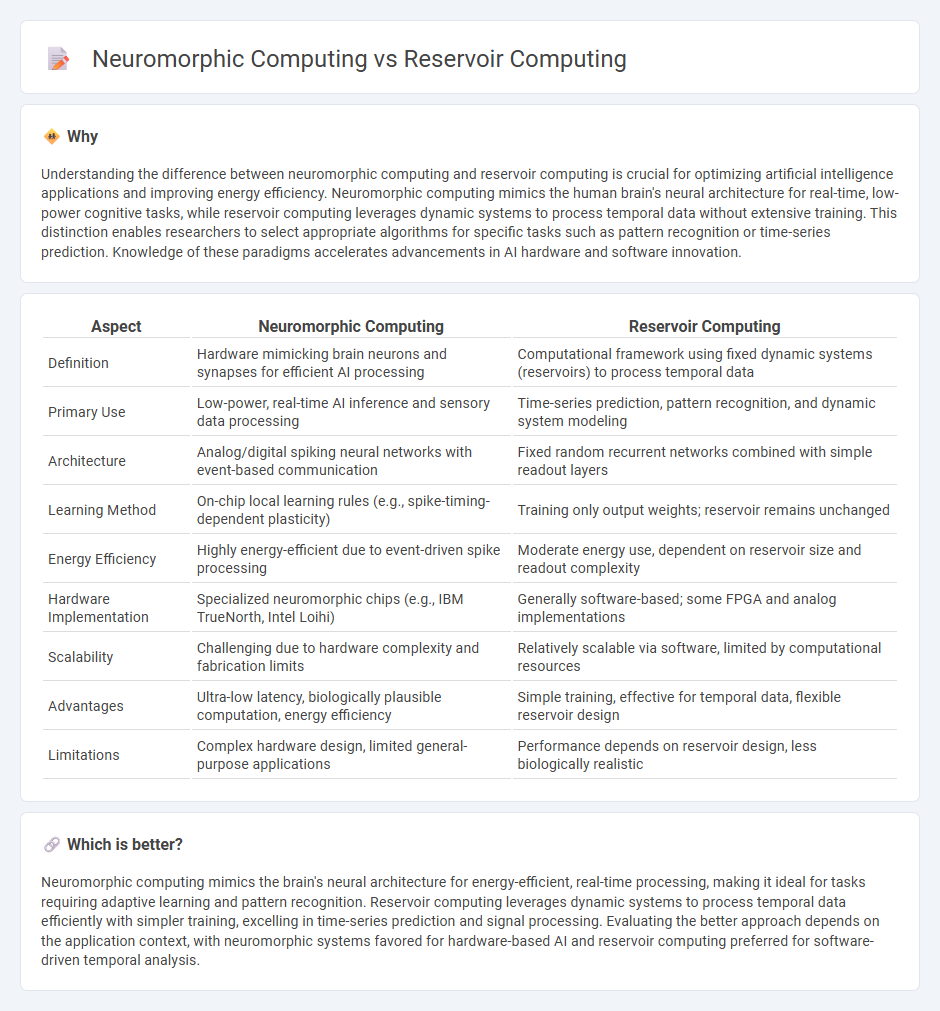

Understanding the difference between neuromorphic computing and reservoir computing helps in choosing the right approach for an artificial intelligence application and in improving its energy efficiency. Neuromorphic computing mimics the human brain's neural architecture for real-time, low-power cognitive tasks, while reservoir computing uses a fixed dynamical system to process temporal data and trains only a readout layer, avoiding extensive training. With this distinction in mind, researchers can select the paradigm that fits a specific task such as pattern recognition or time-series prediction, and can judge whether the work belongs in specialized hardware or in software.

Comparison Table

| Aspect | Neuromorphic Computing | Reservoir Computing |
|---|---|---|
| Definition | Hardware mimicking brain neurons and synapses for efficient AI processing | Computational framework using fixed dynamic systems (reservoirs) to process temporal data |
| Primary Use | Low-power, real-time AI inference and sensory data processing | Time-series prediction, pattern recognition, and dynamic system modeling |
| Architecture | Analog/digital spiking neural networks with event-based communication | Fixed random recurrent networks combined with simple readout layers |
| Learning Method | On-chip local learning rules (e.g., spike-timing-dependent plasticity) | Training only output weights; reservoir remains unchanged |
| Energy Efficiency | Highly energy-efficient due to event-driven spike processing | Moderate energy use, dependent on reservoir size and readout complexity |
| Hardware Implementation | Specialized neuromorphic chips (e.g., IBM TrueNorth, Intel Loihi) | Generally software-based; some FPGA and analog implementations |
| Scalability | Challenging due to hardware complexity and fabrication limits | Relatively scalable via software, limited by computational resources |
| Advantages | Ultra-low latency, biologically plausible computation, energy efficiency | Simple training, effective for temporal data, flexible reservoir design |
| Limitations | Complex hardware design, limited general-purpose applications | Performance depends on reservoir design, less biologically realistic |

Which is better?

Neuromorphic computing mimics the brain's neural architecture for energy-efficient, real-time processing, which suits tasks that require adaptive learning and pattern recognition. Reservoir computing uses a fixed dynamical system to process temporal data and needs only simple training, so it excels in time-series prediction and signal processing. Which approach is better depends on the application: neuromorphic systems are favored for hardware-based, power-constrained AI, while reservoir computing is preferred for software-driven temporal analysis.

Connection

Neuromorphic computing and reservoir computing both draw inspiration from the brain's neural architecture to improve computational efficiency and adaptability. Neuromorphic systems emulate spiking neural networks through hardware that mimics neuronal behavior, while reservoir computing uses the dynamics of a fixed recurrent neural network to process temporal data. The two can be combined: neuromorphic hardware can implement a reservoir computing model, which enables real-time, low-power processing for complex tasks such as speech recognition and sensory data analysis.

Key Terms

Recurrent Neural Networks (Reservoir Computing)

Reservoir computing uses a fixed, high-dimensional recurrent neural network (RNN), known as the reservoir, and trains only the readout layer, which allows temporal data to be processed at a lower computational cost than training the whole network. Neuromorphic computing, inspired by the brain's architecture, instead employs spiking neural networks and hardware designed to mimic neural dynamics, offering energy-efficient real-time learning and adaptation. Both rely on recurrent dynamics, but they differ in which parts are trained and in whether the network typically runs in software or on dedicated hardware.
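As a rough illustration of training only the readout, the following Python sketch builds a small echo state network, one common form of reservoir computing. The reservoir size, spectral radius, ridge penalty, washout length, and the toy sine-wave task are all assumptions chosen for illustration, not values from any particular system.

```python
import numpy as np

def make_reservoir(n_inputs, n_units, spectral_radius=0.9, seed=0):
    """Draw fixed random input and recurrent weights (these are never trained)."""
    rng = np.random.default_rng(seed)
    w_in = rng.uniform(-0.5, 0.5, size=(n_units, n_inputs))
    w = rng.uniform(-0.5, 0.5, size=(n_units, n_units))
    # Rescale so the largest eigenvalue magnitude equals spectral_radius,
    # a common heuristic for keeping the reservoir dynamics stable.
    w *= spectral_radius / np.max(np.abs(np.linalg.eigvals(w)))
    return w_in, w

def run_reservoir(w_in, w, inputs):
    """Drive the reservoir with inputs of shape (T, n_inputs); return states (T, n_units)."""
    state = np.zeros(w.shape[0])
    states = np.empty((len(inputs), w.shape[0]))
    for t, u in enumerate(inputs):
        state = np.tanh(w_in @ u + w @ state)
        states[t] = state
    return states

def train_readout(states, targets, ridge=1e-6):
    """Fit the linear readout with ridge regression (the only trained part)."""
    gram = states.T @ states + ridge * np.eye(states.shape[1])
    return np.linalg.solve(gram, states.T @ targets)

# Toy task (assumed for illustration): predict the next sample of a sine wave.
signal = np.sin(0.2 * np.arange(1001))
inputs, targets = signal[:-1, None], signal[1:, None]

w_in, w = make_reservoir(n_inputs=1, n_units=50)
states = run_reservoir(w_in, w, inputs)

washout = 100  # discard the initial transient before fitting
w_out = train_readout(states[washout:800], targets[washout:800])
pred = states[800:] @ w_out
mse = float(np.mean((pred - targets[800:]) ** 2))
print(f"test MSE: {mse:.2e}")
```

Only `w_out` is fitted, and the fit is a single linear solve. `w_in` and `w` stay exactly as they were drawn, which is what keeps training cheap compared with backpropagating through a recurrent network.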

Spiking Neural Networks (Neuromorphic Computing)

Spiking Neural Networks (SNNs), the core model of neuromorphic computing, emulate the spiking behavior of biological neurons to encode temporal information and process it energy-efficiently, whereas reservoir computing relies on fixed, high-dimensional dynamical systems to process time-series data. SNNs use event-driven architectures and synaptic plasticity mechanisms: neurons compute and communicate only when spikes occur, which reduces power consumption and latency and makes them well suited to real-time sensory applications.
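To make the event-driven behavior concrete, here is a minimal Python sketch of a leaky integrate-and-fire (LIF) neuron, a simple and widely used spiking neuron model. The function name, time constant, threshold, and input currents are illustrative assumptions, and real neuromorphic chips implement their own neuron models.

```python
def simulate_lif(input_current, dt=1.0, tau=10.0, v_rest=0.0,
                 v_thresh=1.0, v_reset=0.0):
    """Return (spike_steps, voltage_trace) for a leaky integrate-and-fire neuron."""
    v = v_rest
    spikes, trace = [], []
    for step, current in enumerate(input_current):
        # Euler step of dv/dt = (-(v - v_rest) + current) / tau
        v += dt * (-(v - v_rest) + current) / tau
        if v >= v_thresh:
            spikes.append(step)  # an event is emitted only at a threshold crossing
            v = v_reset
        trace.append(v)
    return spikes, trace

# A constant drive above threshold yields regular spiking.
spikes, trace = simulate_lif([1.5] * 100)
# A drive that settles below threshold, or no drive at all, yields no events.
weak, _ = simulate_lif([0.8] * 100)
silent, _ = simulate_lif([0.0] * 100)

print(spikes[:3], len(weak), len(silent))
```

The neuron produces output only when its membrane potential crosses the threshold, so downstream work is triggered by spikes rather than by every time step. This is the property that event-driven hardware exploits to save power.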

Analog Hardware Emulation

In reservoir computing, the reservoir can itself be a physical analog system, because all the method requires is a fixed, high-dimensional dynamical system whose states feed a trained readout. Neuromorphic computing emulates neural architectures at the hardware level, often using memristors or spiking-neuron circuits to mimic synaptic plasticity and enable low-power, real-time processing. Both approaches can therefore exploit the physical dynamics of analog hardware instead of simulating those dynamics on a conventional digital processor.
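The synaptic plasticity that such hardware mimics is often modeled with spike-timing-dependent plasticity (STDP), the local learning rule named in the comparison table. The Python sketch below shows a pair-based form of that rule in software. The amplitudes, time constants, and weight bounds are hypothetical values picked for illustration, and an analog device would realize a comparable update through its own physics rather than through code like this.

```python
import math

def stdp_delta_w(t_pre, t_post, a_plus=0.01, a_minus=0.012,
                 tau_plus=20.0, tau_minus=20.0):
    """Pair-based STDP weight change for one pre/post spike pair (times in ms)."""
    dt = t_post - t_pre
    if dt > 0:
        # Presynaptic spike precedes postsynaptic spike: strengthen the synapse.
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:
        # Postsynaptic spike precedes presynaptic spike: weaken the synapse.
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0

def apply_stdp(weight, pre_spikes, post_spikes, w_min=0.0, w_max=1.0):
    """Sum the updates over all spike pairs, then clip the weight to its bounds."""
    for t_pre in pre_spikes:
        for t_post in post_spikes:
            weight += stdp_delta_w(t_pre, t_post)
    return min(w_max, max(w_min, weight))

# Pre fires 5 ms before post: the weight grows.
strengthened = apply_stdp(0.5, pre_spikes=[10.0], post_spikes=[15.0])
# Post fires 5 ms before pre: the weight shrinks.
weakened = apply_stdp(0.5, pre_spikes=[15.0], post_spikes=[10.0])
print(strengthened > 0.5, weakened < 0.5)
```

The update depends only on the spike times of the two neurons a synapse connects, so it can be computed locally at each synapse without a global error signal.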


dowidth.com
