Liquid neural networks are continuous-time recurrent models whose dynamics adjust to incoming data streams, which makes them well suited to real-time applications and environments with changing conditions. Graph neural networks process relational data by passing information along the edges of a graph, which supports tasks such as social network analysis and molecular modeling. This article compares the two architectures, their strengths, and where each is applied.

Why it is important

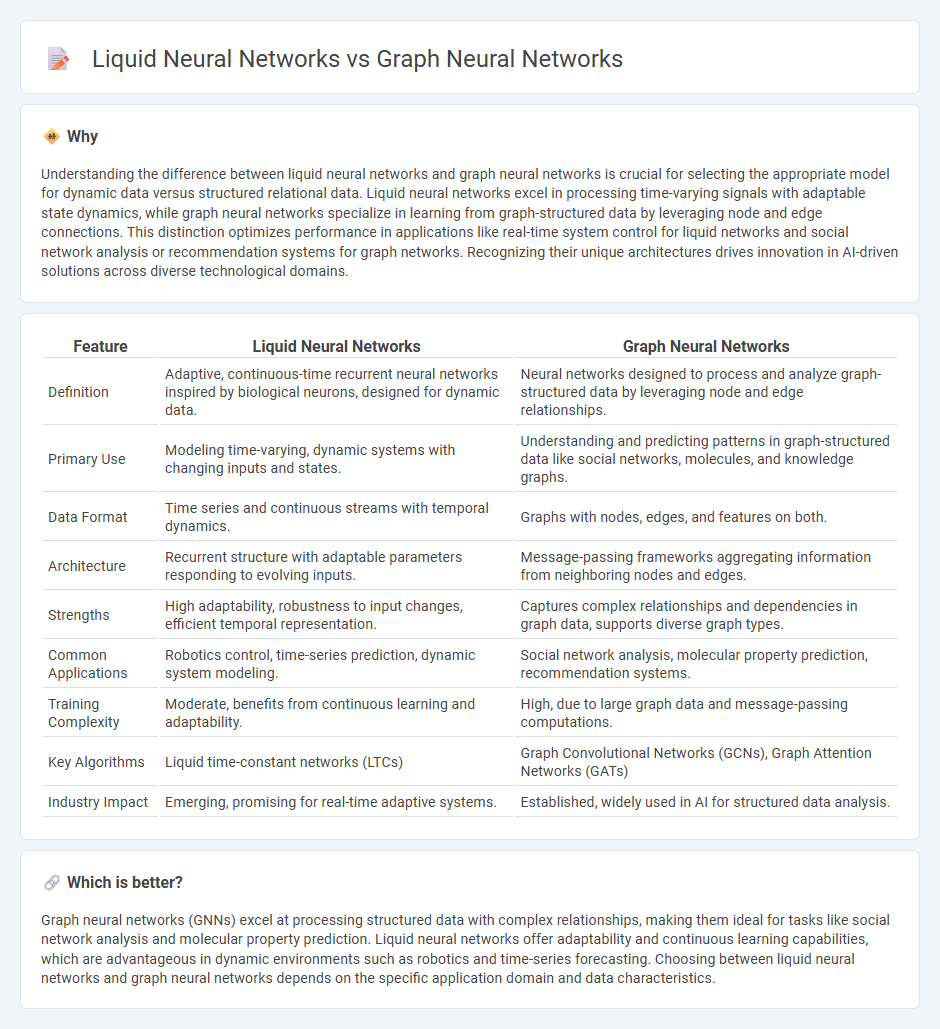

Understanding the difference between liquid neural networks and graph neural networks is crucial for choosing the right model: liquid networks target dynamic, time-varying data, while graph networks target structured relational data. Liquid neural networks process time-varying signals with state dynamics that adapt to the input, whereas graph neural networks learn from graph-structured data by exploiting node and edge connections. Matching the model to the data pays off in practice, for example liquid networks in real-time system control and graph networks in social network analysis or recommendation systems.

Comparison Table

| Feature | Liquid Neural Networks | Graph Neural Networks |
|---|---|---|
| Definition | Adaptive, continuous-time recurrent neural networks inspired by biological neurons, designed for dynamic data. | Neural networks designed to process and analyze graph-structured data by leveraging node and edge relationships. |
| Primary Use | Modeling time-varying, dynamic systems with changing inputs and states. | Understanding and predicting patterns in graph-structured data such as social networks, molecules, and knowledge graphs. |
| Data Format | Time series and continuous streams with temporal dynamics. | Graphs with nodes, edges, and features on both. |
| Architecture | Recurrent cells governed by differential equations whose effective time constants change with the input. | Message-passing layers that aggregate information from neighboring nodes and edges. |
| Strengths | High adaptability, robustness to input changes, efficient temporal representation. | Captures complex relationships and dependencies in graph data; supports diverse graph types. |
| Common Applications | Robotics control, time-series prediction, dynamic system modeling. | Social network analysis, molecular property prediction, recommendation systems. |
| Training Complexity | Moderate; training backpropagates through the unrolled ODE solver steps, which becomes costly on long sequences. | High, due to large graphs and repeated message-passing computations. |
| Key Algorithms | Liquid time-constant networks (LTCs), closed-form continuous-time (CfC) networks | Graph Convolutional Networks (GCNs), Graph Attention Networks (GATs) |
| Industry Impact | Emerging; promising for real-time adaptive systems. | Established; widely used for structured data analysis. |

Which is better?

Neither architecture is better in general. Graph neural networks (GNNs) excel at structured data with complex relationships, making them a strong fit for tasks like social network analysis and molecular property prediction. Liquid neural networks offer input-dependent, continuous-time dynamics that help in changing environments such as robotics and time-series forecasting. The choice depends on the application domain and on whether the defining structure of the data is relational (a graph) or temporal (a stream).

Connection

Liquid neural networks and graph neural networks address different kinds of structure: liquid networks model how signals evolve in continuous time, and graph networks model how entities relate through edges. They meet in problems where data lives on a graph whose node signals or connections change over time, such as trajectory prediction, evolving social networks, and other spatiotemporal reasoning tasks. Integrating principles from liquid neural networks into graph neural networks, for instance by giving each node continuous-time dynamics while still passing messages along edges, could improve the handling of time-dependent signals on graphs and yield more robust and flexible models in domains like robotics and network science.
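One way to picture such an integration is a hypothetical "graph LTC" step, sketched below in NumPy. It is an illustration under stated assumptions, not an established architecture: each node carries a liquid-style state that follows dx/dt = -(1/tau + f)·x + f·A, discretized with a fused semi-implicit Euler step, and the positive gate f (assumed here to be a single sigmoid layer) sees the mean state of the node and its neighbors, which is the message-passing part. The function name `graph_ltc_step`, the parameter layout, and the ring graph are all made up for this example.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def graph_ltc_step(x, inputs, adj, dt, params):
    """Hypothetical graph LTC step (illustrative only).

    x:      (n_nodes, hidden) current node states
    inputs: (n_nodes, n_in) per-node input signals at this time step
    adj:    (n_nodes, n_nodes) symmetric 0/1 adjacency matrix
    dt:     elapsed time since the previous step (may vary per step)
    """
    w_in, w_rec, b, tau, a = params
    a_hat = adj + np.eye(adj.shape[0])  # include each node in its own neighbourhood
    # Message passing: average the states of each node and its neighbours
    neighbour_mean = (a_hat @ x) / a_hat.sum(axis=1, keepdims=True)
    # Positive gate driven by the node's input and its neighbourhood
    f = sigmoid(inputs @ w_in + neighbour_mean @ w_rec + b)
    # Fused semi-implicit Euler step of dx/dt = -(1/tau + f) * x + f * a
    return (x + dt * f * a) / (1.0 + dt * (1.0 / tau + f))

# Usage: a 4-node ring graph 0-1-2-3-0 with irregularly spaced time steps
rng = np.random.default_rng(1)
n_nodes, n_in, hidden = 4, 2, 3
adj = np.roll(np.eye(n_nodes), 1, axis=1) + np.roll(np.eye(n_nodes), -1, axis=1)
params = (rng.normal(size=(n_in, hidden)),    # input weights (hypothetical)
          rng.normal(size=(hidden, hidden)),  # neighbourhood weights (hypothetical)
          np.zeros(hidden),                   # gate bias
          np.ones(hidden),                    # base time constants tau
          rng.normal(size=hidden))            # per-unit target values A
states = np.zeros((n_nodes, hidden))
for _ in range(20):
    node_inputs = rng.normal(size=(n_nodes, n_in))
    dt = rng.uniform(0.05, 0.5)
    states = graph_ltc_step(states, node_inputs, adj, dt, params)
```

Because f, dt, and tau are positive, each update is a weighted average of the old state, the target A, and zero, so node states started at zero stay between 0 and A however long the graph is simulated.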

Key Terms

Node Embeddings (Graph Neural Networks)

Graph neural networks (GNNs) generate node embeddings by combining each node's features with those of its neighbors through message passing and aggregation functions; stacking layers lets an embedding capture structure further out in the graph, enabling effective representation learning for nodes in complex networks. Liquid neural networks, designed for continuous-time and dynamic data streams, adapt their state dynamics to temporal changes but do not inherently model graph topology or produce node embeddings.
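A minimal NumPy sketch of how a GNN builds node embeddings by message passing. It assumes the graph convolution rule popularized by GCNs, H' = ReLU(D^-1/2 (A + I) D^-1/2 H W), where A is the adjacency matrix, D the degree matrix of A + I, H the node features, and W a learned weight matrix. The function name `gcn_layer`, the toy graph, and the random weights are illustrative and not taken from any library.

```python
import numpy as np

def gcn_layer(adj: np.ndarray, features: np.ndarray, weight: np.ndarray) -> np.ndarray:
    """One graph convolution with symmetric normalization (GCN-style sketch)."""
    n = adj.shape[0]
    a_hat = adj + np.eye(n)                     # self-loops keep each node's own features
    deg = a_hat.sum(axis=1)                     # degree of each node, counting the self-loop
    d_inv_sqrt = np.diag(1.0 / np.sqrt(deg))    # D^{-1/2}
    norm_adj = d_inv_sqrt @ a_hat @ d_inv_sqrt  # D^{-1/2} (A + I) D^{-1/2}
    # Aggregate neighbour features, project with W, then apply ReLU
    return np.maximum(norm_adj @ features @ weight, 0.0)

# Toy path graph 0 - 1 - 2 with 2 input features per node
adj = np.array([[0, 1, 0],
                [1, 0, 1],
                [0, 1, 0]], dtype=float)
features = np.array([[1.0, 0.0],
                     [0.0, 1.0],
                     [1.0, 1.0]])
rng = np.random.default_rng(0)
w1 = rng.normal(size=(2, 4))
w2 = rng.normal(size=(4, 2))

h1 = gcn_layer(adj, features, w1)  # each node now mixes its 1-hop neighbourhood
h2 = gcn_layer(adj, h1, w2)        # a second layer reaches 2-hop neighbours
print(h2.shape)  # (3, 2)
```

The rows of `h2` are the node embeddings. Node 0 and node 2 are not adjacent, yet after two layers each embedding depends on the other's features through node 1, which is how depth widens the receptive field.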

Dynamic State Update (Liquid Neural Networks)

Liquid neural networks update their hidden state continuously in response to input streams: each neuron's state follows a differential equation whose effective time constant depends on the current input, which enables real-time processing and temporal pattern recognition. Graph neural networks also update node states, but they do so layer by layer through message passing over a fixed number of steps with no notion of elapsed time, emphasizing structural relationships over temporal dynamics.
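The state update can be sketched for a single liquid time-constant (LTC) cell. This sketch assumes the LTC state equation dx/dt = -(1/tau + f)·x + f·A, where f is a positive gate computed from the input and the current state and A is a learned per-neuron target, discretized with a fused semi-implicit Euler step. Simplifying f to one sigmoid layer is an assumption for brevity, and the names `ltc_step` and `params` are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def ltc_step(x, inp, dt, params):
    """One fused semi-implicit Euler step of an LTC cell (simplified sketch)."""
    w_in, w_rec, b, tau, a = params
    # Input- and state-dependent gate; always positive
    f = sigmoid(w_in @ inp + w_rec @ x + b)
    # Discretized dx/dt = -(1/tau + f) * x + f * a
    return (x + dt * f * a) / (1.0 + dt * (1.0 / tau + f))

# Usage: drive a 4-neuron cell with an irregularly sampled 2-D input stream
rng = np.random.default_rng(0)
n_hidden, n_in = 4, 2
params = (rng.normal(size=(n_hidden, n_in)),      # input weights (hypothetical)
          rng.normal(size=(n_hidden, n_hidden)),  # recurrent weights (hypothetical)
          np.zeros(n_hidden),                     # gate bias
          np.ones(n_hidden),                      # base time constants tau
          rng.normal(size=n_hidden))              # per-neuron target values A
x = np.zeros(n_hidden)
inputs = rng.normal(size=(50, n_in))
dts = rng.uniform(0.05, 0.5, size=50)  # unequal gaps between samples
trajectory = []
for inp, dt in zip(inputs, dts):
    x = ltc_step(x, inp, dt, params)
    trajectory.append(x)
trajectory = np.array(trajectory)
```

Two design points stand out. The step takes the elapsed time `dt` as an argument, so irregular sampling needs no special handling. And because f, dt, and tau are positive, the new state is a weighted average of the old state, the target A, and zero, so a state started at zero never leaves the interval between 0 and A.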

Temporal Processing

Graph neural networks (GNNs) model complex relationships and spatial dependencies in graph-structured data, but a standard GNN has no built-in notion of time, so capturing temporal patterns over long sequences requires adding recurrent or other temporal components. Liquid neural networks, inspired by continuous-time nonlinear dynamics, handle temporal processing natively: their state adapts to time-varying inputs, can be updated at irregular time steps, and stays bounded, which keeps the dynamics stable.
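The input-dependent adaptation can be made explicit with a short derivation. Assuming the LTC state equation with base time constant $\tau$, learned target $A$, and a positive gate $f(x, I)$ computed from the state $x$ and input $I$ (taken here to be a sigmoid, so $0 < f < 1$), the decay terms collect into a single effective time constant:

```latex
\frac{dx}{dt} = -\left[\frac{1}{\tau} + f(x, I)\right] x + f(x, I)\, A
\quad\Longrightarrow\quad
\tau_{\text{sys}} = \frac{1}{\frac{1}{\tau} + f(x, I)} = \frac{\tau}{1 + \tau f(x, I)},
\qquad
\frac{\tau}{1 + \tau} < \tau_{\text{sys}} < \tau
```

A strong input (large $f$) shortens $\tau_{\text{sys}}$ so the neuron reacts quickly, while a weak input lets the state evolve on the slower base scale $\tau$. A standard GCN layer has no comparable time parameter.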

dowidth.com
