Diffusion models generate data by iteratively refining random noise into structured outputs, and they excel at high-quality image synthesis. Autoregressive models generate data sequentially, predicting each element from the ones before it, which makes them a natural fit for natural language processing and time series analysis. Explore the detailed comparison to understand their unique strengths and applications.

Why it is important

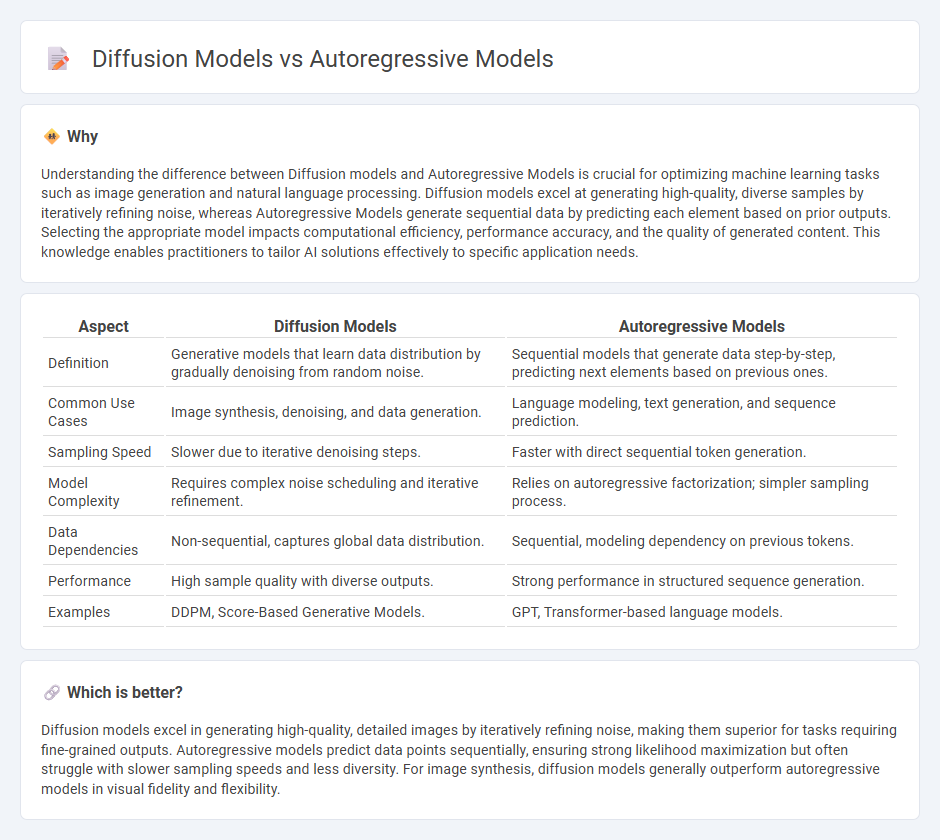

Understanding the difference between diffusion models and autoregressive models is crucial for choosing the right approach for machine learning tasks such as image generation and natural language processing. Diffusion models excel at generating high-quality, diverse samples by iteratively refining noise, whereas autoregressive models generate sequential data by predicting each element from the preceding outputs. Selecting the appropriate model affects computational efficiency, accuracy, and the quality of generated content. This knowledge enables practitioners to tailor AI solutions effectively to specific application needs.

Comparison Table

| Aspect | Diffusion Models | Autoregressive Models |
|---|---|---|
| Definition | Generative models that learn the data distribution by learning to reverse a gradual noising process, then generate by denoising random noise. | Sequential models that generate data step by step, predicting each element from the previous ones. |
| Common Use Cases | Image synthesis, denoising, and data generation. | Language modeling, text generation, and sequence prediction. |
| Sampling Speed | Iterative: one network pass per denoising step, often tens to a thousand steps, with each pass refining the whole sample at once. | Iterative: one network pass per generated token, so cost grows with sequence length and positions cannot be sampled in parallel. |
| Model Complexity | Requires a noise schedule and iterative refinement. | Relies on the autoregressive (chain-rule) factorization; simpler sampling process. |
| Data Dependencies | Non-sequential: all dimensions are refined jointly, capturing the global data distribution. | Sequential: each token depends on the tokens before it. |
| Performance | High sample quality with diverse outputs. | Strong performance in structured sequence generation. |
| Examples | DDPM, score-based generative models. | GPT and other Transformer-based language models. |

Which is better?

Diffusion models excel at generating high-quality, detailed images by iteratively refining noise, which makes them a strong choice for tasks that require fine-grained outputs. Autoregressive models factorize the data distribution into a product of per-element conditionals, so they can be trained by exact likelihood maximization; the trade-off is that they generate one element at a time, which makes sampling slow for long sequences and high-resolution images. For image synthesis, diffusion models generally outperform autoregressive models in visual fidelity and flexibility.
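The iterative refinement described above can be sketched for a toy case. This is a minimal sketch under stated assumptions: one-dimensional data drawn from a Gaussian N(DATA_MEAN, DATA_STD²), and a linear noise schedule with T = 1000 steps (β from 1e-4 to 0.02, similar to the schedule commonly used for DDPM). Because the data is Gaussian, the ideal noise predictor E[ε | x_t] has a closed form, so the hypothetical `optimal_eps` function stands in for the trained network a real diffusion model would use. The update follows the DDPM ancestral-sampling rule with noise variance σ_t² = β_t, and every sample costs T model evaluations.

```python
import math
import operator
import random
from itertools import accumulate

# Assumed setup (illustrative, not from the article): 1-D Gaussian data and a
# linear beta schedule with T = 1000 steps. Index t = 0..T-1 here corresponds
# to steps 1..T in the usual DDPM notation.
T = 1000
BETAS = [1e-4 + (0.02 - 1e-4) * i / (T - 1) for i in range(T)]
ALPHAS = [1.0 - b for b in BETAS]
ALPHA_BARS = list(accumulate(ALPHAS, operator.mul))  # cumulative products of alpha

DATA_MEAN, DATA_STD = 3.0, 0.5  # hypothetical target distribution


def optimal_eps(x_t: float, t: int) -> float:
    """Exact E[eps | x_t] for Gaussian data; a stand-in for a trained noise predictor."""
    ab = ALPHA_BARS[t]
    var_xt = ab * DATA_STD**2 + (1.0 - ab)  # marginal variance of x_t
    return math.sqrt(1.0 - ab) * (x_t - math.sqrt(ab) * DATA_MEAN) / var_xt


def sample(rng: random.Random) -> float:
    """Start from pure noise and apply T denoising steps (DDPM ancestral sampling)."""
    x = rng.gauss(0.0, 1.0)  # x_T ~ N(0, 1)
    for t in reversed(range(T)):
        eps_hat = optimal_eps(x, t)
        mean = (x - BETAS[t] / math.sqrt(1.0 - ALPHA_BARS[t]) * eps_hat) / math.sqrt(ALPHAS[t])
        # Inject fresh noise with variance beta_t on every step except the final one.
        noise = math.sqrt(BETAS[t]) * rng.gauss(0.0, 1.0) if t > 0 else 0.0
        x = mean + noise
    return x


rng = random.Random(0)
samples = [sample(rng) for _ in range(500)]
sample_mean = sum(samples) / len(samples)
sample_std = math.sqrt(sum((s - sample_mean) ** 2 for s in samples) / len(samples))
print(f"mean {sample_mean:.2f} (target {DATA_MEAN}), std {sample_std:.2f} (target {DATA_STD})")
```

In a real model, `optimal_eps` would be a neural network call, and faster samplers reduce the number of steps; the loop structure stays the same.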

Connection

Diffusion models and autoregressive models both serve as generative frameworks in machine learning, focusing on data synthesis by learning probability distributions. Diffusion models iteratively refine data through a noise-adding and denoising process, while autoregressive models predict each element in a sequence based on preceding elements, capturing dependencies in data. Their connection lies in the shared goal of modeling complex data distributions, with recent research exploring hybrid approaches that leverage the strengths of both for improved generative performance.
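Both frameworks break a complex joint distribution into simpler conditional pieces. The standard factorizations are shown below in common DDPM-style notation; the symbols (model parameters θ, noise schedule β_t, number of diffusion steps T) are introduced here for illustration and are not used elsewhere in this article.

```latex
\begin{aligned}
&\text{Autoregressive (chain rule over positions):} \\
&\quad p_\theta(x_{1:n}) = \prod_{i=1}^{n} p_\theta(x_i \mid x_{1:i-1}) \\[4pt]
&\text{Diffusion, fixed forward noising chain:} \\
&\quad q(x_{1:T} \mid x_0) = \prod_{t=1}^{T} q(x_t \mid x_{t-1}), \qquad
  q(x_t \mid x_{t-1}) = \mathcal{N}\big(x_t;\ \sqrt{1-\beta_t}\,x_{t-1},\ \beta_t I\big) \\[4pt]
&\text{Diffusion, learned reverse chain:} \\
&\quad p_\theta(x_{0:T}) = p(x_T) \prod_{t=1}^{T} p_\theta(x_{t-1} \mid x_t), \qquad
  p(x_T) = \mathcal{N}(0, I)
\end{aligned}
```

The autoregressive product is exact, so the likelihood of a sample is directly computable. In the diffusion case the likelihood of x_0 requires marginalizing over the latent variables x_{1:T}, so it is usually bounded rather than computed directly.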

Key Terms

Sequence Modeling

Autoregressive models predict sequences by generating each token from the previous outputs, which suits tasks with precise ordered dependencies such as language modeling and time series forecasting. Diffusion models refine the whole sequence jointly through iterative noise reduction, which lets them model complex, high-dimensional distributions and capture global sequence structure; this makes them well suited to audio and video synthesis. Explore the nuances of these approaches to understand their unique strengths in sequence modeling applications.
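The token-by-token generation described above can be sketched in a few lines. This is a minimal sketch assuming a hypothetical hand-written table, `NEXT_TOKEN_PROBS`, in place of a trained model. For brevity the toy model conditions only on the previous token; a real language model conditions on the entire prefix, but the loop has the same shape: one model call per generated token.

```python
import random

# Hypothetical toy "model": next-token probabilities conditioned on the previous
# token only. A real language model would condition on the entire prefix.
NEXT_TOKEN_PROBS = {
    "<s>": {"the": 0.6, "a": 0.4},
    "the": {"cat": 0.5, "dog": 0.5},
    "a": {"cat": 0.3, "dog": 0.7},
    "cat": {"sat": 0.8, "</s>": 0.2},
    "dog": {"ran": 0.8, "</s>": 0.2},
    "sat": {"</s>": 1.0},
    "ran": {"</s>": 1.0},
}


def sample_sequence(max_len: int = 10, seed: int = 0) -> list[str]:
    """Generate tokens one at a time, each drawn from p(next token | context)."""
    rng = random.Random(seed)
    tokens = ["<s>"]
    for _ in range(max_len):
        dist = NEXT_TOKEN_PROBS[tokens[-1]]
        # One "model call" per generated token, so sampling cost grows with length.
        next_token = rng.choices(list(dist), weights=list(dist.values()), k=1)[0]
        tokens.append(next_token)
        if next_token == "</s>":  # stop at the end-of-sequence token
            break
    return tokens


print(sample_sequence(seed=42))
```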

Markov Process

Autoregressive models generate sequences by predicting each element from the previous ones. An autoregressive model of order p conditions on the previous p elements, so with p = 1 it is a first-order Markov process in which the current state depends only on the immediately preceding one; Transformer language models condition on the entire prefix instead, so they are Markov only if the whole history is treated as the state. Diffusion models use Markov chains explicitly: a fixed forward chain gradually adds noise to the data, and a learned reverse chain removes it step by step, recovering a clean sample through a sequence of latent variables governed by Markov transitions. Discover more about how these Markov processes underpin modern generative techniques in machine learning.
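The forward (noise-adding) Markov chain can be simulated directly. This sketch assumes scalar data, Gaussian noise, and an illustrative linear schedule; `T`, `BETAS`, and the helper names are hypothetical. Each transition looks only at the current state, and because every step is Gaussian, composing them gives the closed form q(x_T | x_0) = N(√ᾱ_T · x_0, 1 − ᾱ_T), where ᾱ_T is the product of (1 − β_s). The script compares the simulated chain with that closed form.

```python
import math
import random

# Illustrative assumptions: scalar data and a linear noise schedule with T = 100.
T = 100
BETAS = [1e-4 + (0.02 - 1e-4) * i / (T - 1) for i in range(T)]


def forward_step(x_prev: float, beta: float, rng: random.Random) -> float:
    """One Markov transition: x_t ~ N(sqrt(1 - beta) * x_{t-1}, beta)."""
    return math.sqrt(1.0 - beta) * x_prev + math.sqrt(beta) * rng.gauss(0.0, 1.0)


def run_chain(x0: float, rng: random.Random) -> float:
    """Apply all T transitions; each step depends only on the current state."""
    x = x0
    for beta in BETAS:
        x = forward_step(x, beta, rng)
    return x


def alpha_bar(t: int) -> float:
    """Product of (1 - beta_s) over the first t steps."""
    return math.prod(1.0 - b for b in BETAS[:t])


rng = random.Random(0)
x0 = 2.0
finals = [run_chain(x0, rng) for _ in range(5000)]
mean = sum(finals) / len(finals)
var = sum((x - mean) ** 2 for x in finals) / len(finals)
ab = alpha_bar(T)
# Closed form for the composed chain: q(x_T | x_0) = N(sqrt(ab) * x0, 1 - ab).
print(f"simulated mean {mean:.3f} vs closed form {math.sqrt(ab) * x0:.3f}")
print(f"simulated var  {var:.3f} vs closed form {1 - ab:.3f}")
```

The closed form is what lets diffusion training jump straight to any noise level without simulating the whole chain.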

Probabilistic Sampling

Autoregressive models generate data by sequentially predicting each element from the previous ones; because the joint probability factorizes into a product of these conditionals, the likelihood of any sample can be computed exactly. Diffusion models iteratively refine noisy data through a learned denoising process, offering high sample diversity and robustness at the cost of a longer, more complex sampling procedure; their likelihood is not directly tractable, so DDPM-style models are trained on a variational bound instead. Explore the detailed mechanisms and comparative advantages of both methods to enhance your understanding of modern probabilistic approaches.
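The exact likelihood mentioned above follows directly from the chain rule: log p(x) is the sum of the log-probabilities of each element given its context. This is a minimal sketch assuming a hypothetical toy model, `COND_PROBS`, that conditions only on the previous token. The helper `all_sequences` enumerates every sequence the toy model can produce, which lets us check that the exact probabilities sum to 1.

```python
import math
from typing import Iterator

# Hypothetical toy autoregressive model: p(x_t | x_{t-1}) over a tiny vocabulary.
COND_PROBS = {
    "<s>": {"the": 0.6, "a": 0.4},
    "the": {"cat": 0.5, "dog": 0.5},
    "a": {"cat": 0.3, "dog": 0.7},
    "cat": {"sat": 0.8, "</s>": 0.2},
    "dog": {"ran": 0.8, "</s>": 0.2},
    "sat": {"</s>": 1.0},
    "ran": {"</s>": 1.0},
}


def log_likelihood(tokens: list[str]) -> float:
    """Exact log p(x) via the chain rule: sum of log p(x_t | x_{t-1})."""
    total = 0.0
    for prev, cur in zip(tokens, tokens[1:]):
        p = COND_PROBS.get(prev, {}).get(cur, 0.0)
        if p == 0.0:
            return float("-inf")  # the model cannot produce this sequence
        total += math.log(p)
    return total


def all_sequences(prefix: tuple[str, ...] = ("<s>",)) -> Iterator[list[str]]:
    """Enumerate every complete sequence the toy model can generate."""
    last = prefix[-1]
    if last == "</s>":
        yield list(prefix)
        return
    for tok in COND_PROBS[last]:
        yield from all_sequences(prefix + (tok,))


lp = log_likelihood(["<s>", "the", "cat", "sat", "</s>"])
print(f"log p = {lp:.4f}, p = {math.exp(lp):.4f}")
print(sum(math.exp(log_likelihood(s)) for s in all_sequences()))
```

A diffusion model has no equivalent one-line computation, because its likelihood involves integrating over all noisy intermediate states.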

dowidth.com