Self-supervised learning lets a model learn from unlabeled data by deriving its training labels from the data itself, so it can learn useful features without manual annotation. Reinforcement learning trains an agent to choose actions by trial and error, with the goal of maximizing the cumulative reward it receives from a dynamic environment. The two approaches solve different problems, and they can also be combined.

Why it is important

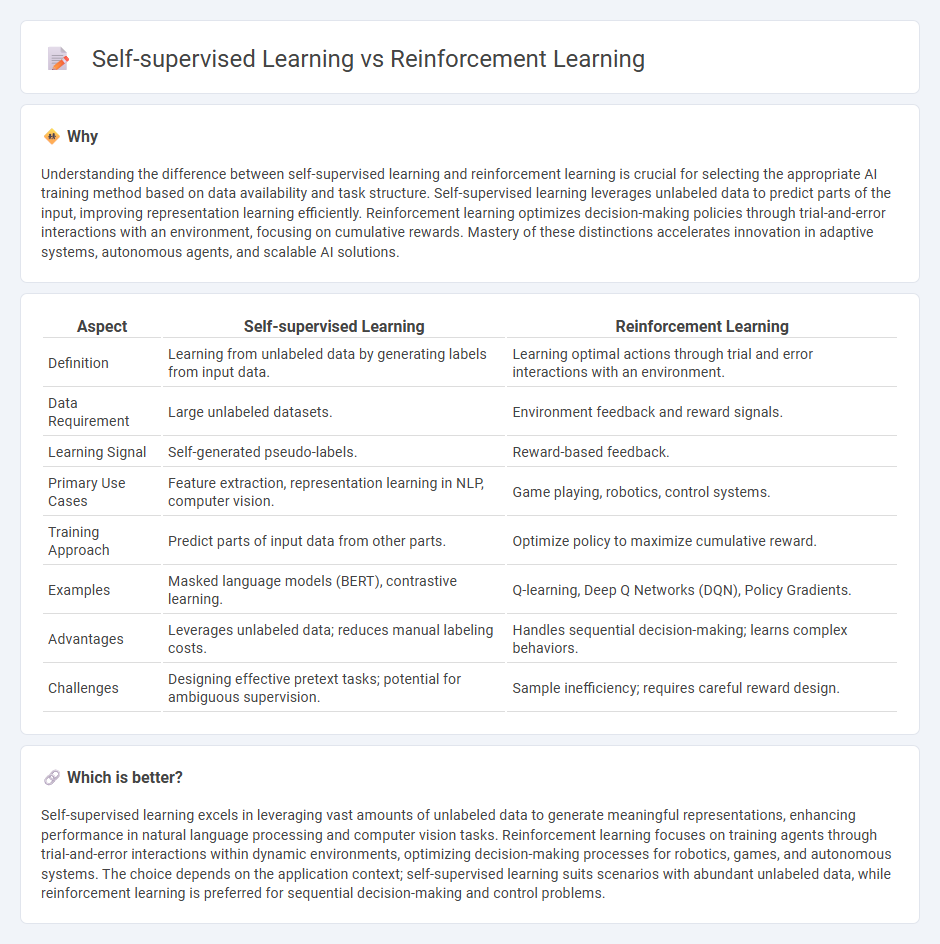

Understanding the difference between self-supervised learning and reinforcement learning helps you choose a training method that fits the data you have and the structure of the task. Self-supervised learning uses unlabeled data to predict parts of the input from other parts, which makes it an efficient way to learn representations. Reinforcement learning optimizes a decision-making policy through trial-and-error interaction with an environment, guided by cumulative reward. Knowing which setting you are in matters when building adaptive systems, autonomous agents, and AI solutions that need to scale.

Comparison Table

| Aspect | Self-supervised Learning | Reinforcement Learning |
|---|---|---|
| Definition | Learning from unlabeled data by generating labels from input data. | Learning optimal actions through trial and error interactions with an environment. |
| Data Requirement | Large unlabeled datasets. | Environment feedback and reward signals. |
| Learning Signal | Self-generated pseudo-labels. | Reward-based feedback. |
| Primary Use Cases | Feature extraction, representation learning in NLP, computer vision. | Game playing, robotics, control systems. |
| Training Approach | Predict parts of input data from other parts. | Optimize policy to maximize cumulative reward. |
| Examples | Masked language models (BERT), contrastive learning. | Q-learning, Deep Q Networks (DQN), Policy Gradients. |
| Advantages | Leverages unlabeled data; reduces manual labeling costs. | Handles sequential decision-making; learns complex behaviors. |
| Challenges | Designing effective pretext tasks; potential for ambiguous supervision. | Sample inefficiency; requires careful reward design. |
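To make "self-generated pseudo-labels" concrete, here is a minimal sketch of a masked-prediction pretext task in plain Python. It is a simplified illustration and not BERT's exact masking procedure; the function name `make_masked_example`, the `[MASK]` placeholder string, and the 15% default masking rate are assumptions chosen for the example.

```python
import random

MASK = "[MASK]"  # placeholder token (assumed for this sketch)

def make_masked_example(tokens, mask_prob=0.15, rng=None):
    """Turn an unlabeled token list into (inputs, targets).

    targets maps each masked position to the original token, so the
    labels come from the data itself rather than from an annotator.
    """
    rng = rng or random.Random()
    inputs = list(tokens)
    targets = {}
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:
            inputs[i] = MASK
            targets[i] = tok
    if not targets and tokens:
        # Guarantee at least one prediction target per example.
        i = rng.randrange(len(tokens))
        inputs[i] = MASK
        targets[i] = tokens[i]
    return inputs, targets

sentence = "the agent learns from unlabeled text".split()
inputs, targets = make_masked_example(sentence, rng=random.Random(0))
print(inputs)   # the sentence with some tokens replaced by [MASK]
print(targets)  # {position: original token} pairs the model must predict
```

A model trained on such pairs never sees a human-written label: the only supervision is the original token at each masked position.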

Which is better?

Self-supervised learning excels in leveraging vast amounts of unlabeled data to generate meaningful representations, enhancing performance in natural language processing and computer vision tasks. Reinforcement learning focuses on training agents through trial-and-error interactions within dynamic environments, optimizing decision-making processes for robotics, games, and autonomous systems. The choice depends on the application context; self-supervised learning suits scenarios with abundant unlabeled data, while reinforcement learning is preferred for sequential decision-making and control problems.

Connection

Self-supervised learning derives supervisory signals from unlabeled inputs, so a model can learn feature representations without manual annotation. Those representations can serve as richer state inputs for a reinforcement learning agent. The agent then uses them while optimizing its decision-making policy through trial-and-error interaction with the environment, selecting actions based on reward signals. Combining the two in this way can speed up reinforcement learning convergence and improve performance on complex tasks such as robotics, autonomous driving, and game playing.

Key Terms

Reward Signal vs. Data Representation

Reinforcement learning centers on optimizing an agent's behavior through reward signals that provide feedback on action success, enabling goal-directed decision-making in dynamic environments. Self-supervised learning emphasizes learning rich data representations by exploiting the inherent structure of unlabeled data, with the aim of improving performance on downstream tasks.
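The reward side of this contrast is usually summarized as a single number per episode. The sketch below computes a discounted cumulative reward; the discount factor `gamma` is an assumption introduced here (the text above only speaks of cumulative reward), and `discounted_return` is a hypothetical helper name.

```python
def discounted_return(rewards, gamma=0.9):
    """Sum of rewards where the reward at step t is weighted by gamma**t."""
    total = 0.0
    # Work backwards so each step adds its reward plus the discounted future.
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total

episode_rewards = [0.0, 0.0, 1.0]
print(discounted_return(episode_rewards, gamma=0.5))  # 0.25
```

With `gamma=1.0` this reduces to the plain sum of rewards; smaller values make the agent weigh near-term feedback more heavily.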

Exploration/Exploitation vs. Pretext Tasks

Reinforcement learning optimizes decision-making by balancing exploration of new actions against exploitation of actions already known to pay off, with the goal of maximizing long-term reward. This balance is critical in dynamic environments. Self-supervised learning instead relies on pretext tasks, such as predicting masked data or sequence order, to learn useful representations without labeled data, which in turn improves downstream performance.
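One common way to balance exploration and exploitation is an epsilon-greedy rule, sketched below on a toy multi-armed bandit. This is one illustrative strategy among several, not a method prescribed by the text; the function names, the Gaussian reward noise, and the default `epsilon=0.1` are all assumptions.

```python
import random

def epsilon_greedy(q_values, epsilon, rng):
    """Pick a random action with probability epsilon, else the best-valued one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))  # explore
    return max(range(len(q_values)), key=q_values.__getitem__)  # exploit

def run_bandit(true_means, steps=2000, epsilon=0.1, seed=0):
    """Estimate each arm's mean reward while acting epsilon-greedily."""
    rng = random.Random(seed)
    estimates = [0.0] * len(true_means)
    counts = [0] * len(true_means)
    for _ in range(steps):
        action = epsilon_greedy(estimates, epsilon, rng)
        reward = rng.gauss(true_means[action], 1.0)  # noisy reward (assumed)
        counts[action] += 1
        # Incremental update of the sample mean for the chosen arm.
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates, counts

estimates, counts = run_bandit([0.2, 0.5, 1.0])
print(counts)     # how often each arm was pulled
print(estimates)  # learned estimate of each arm's mean reward
```

Setting `epsilon=0.0` gives a purely greedy agent that can lock onto a poor arm early, while `epsilon=1.0` explores forever and never uses what it has learned.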

Agent-Environment Interaction vs. Unlabeled Data

Reinforcement learning is built around agent-environment interaction: an agent learns which actions to take from the rewards and penalties it receives in a dynamic setting. Self-supervised learning instead draws on large amounts of unlabeled data, generating its own supervisory signals for representation learning. Reinforcement learning is widely used in robotics, game playing, and autonomous systems, while self-supervised learning is widely used in natural language processing and computer vision, where models are pre-trained without manual annotation.
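The agent-environment loop can be sketched with tabular Q-learning (one of the examples listed in the comparison table) on a tiny corridor. The environment, its reward of 1.0 at the right end, and the hyperparameters `alpha`, `gamma`, and `epsilon` are hypothetical choices made for illustration only.

```python
import random

N_STATES = 5        # states 0..4; reaching state 4 ends the episode
ACTIONS = (-1, +1)  # index 0 moves left, index 1 moves right

def step(state, action):
    """Hypothetical environment: reward 1.0 only on reaching the last state."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                a = rng.randrange(2)  # explore, or break a tie at random
            else:
                a = 0 if q[state][0] > q[state][1] else 1  # exploit
            next_state, reward, done = step(state, ACTIONS[a])
            # Q-learning target: reward plus discounted best next value.
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q

q = train()
policy = ["left" if row[0] > row[1] else "right" for row in q[:-1]]
print(policy)
```

Under these settings the greedy policy should come out as "right" in every non-terminal state, since that is the only way to reach the reward. Note that no dataset appears anywhere in the code: all learning comes from the rewards returned by `step`.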


dowidth.com
