Generative Adversarial Networks (GANs) excel at creating realistic synthetic data through adversarial training between a generator and a discriminator. Reinforcement Learning (RL), by contrast, trains agents to make optimal decisions through trial-and-error interaction with an environment. Both are central to modern artificial intelligence but serve different purposes: GANs for data generation and RL for sequential decision-making.

Why it is important

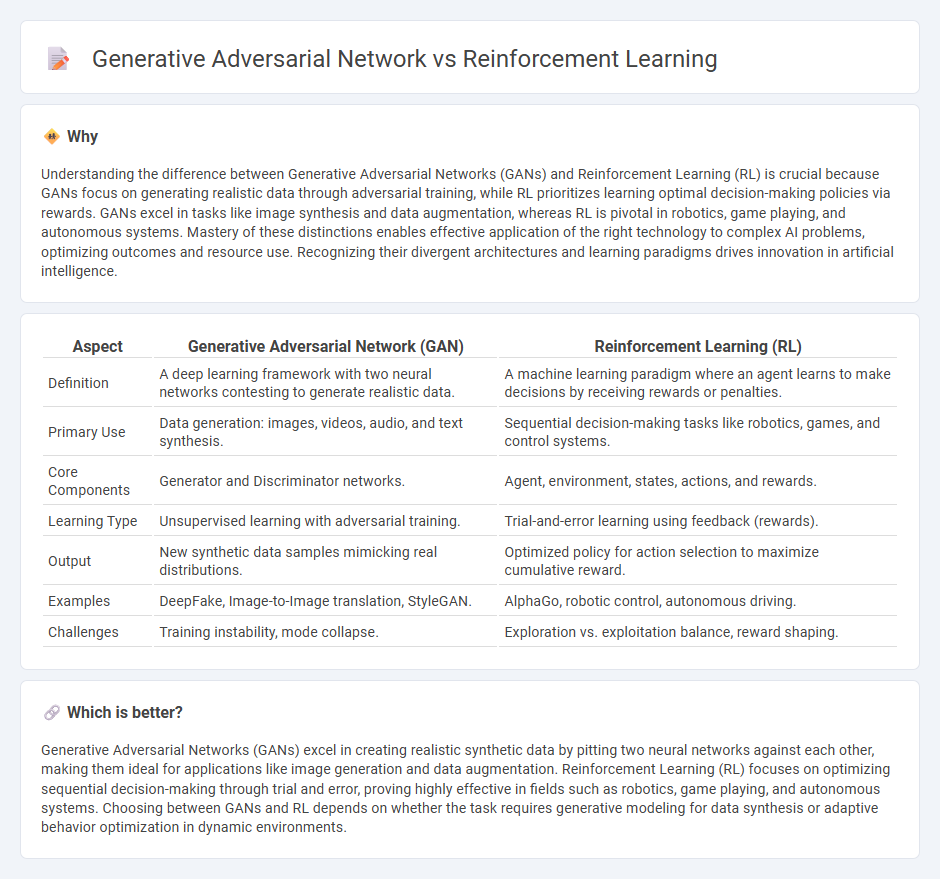

Understanding the difference between Generative Adversarial Networks (GANs) and Reinforcement Learning (RL) matters because the two solve different problems. GANs learn to generate realistic data through adversarial training, while RL learns decision-making policies that maximize reward. GANs excel at tasks such as image synthesis and data augmentation, whereas RL is central to robotics, game playing, and autonomous systems. Knowing how their architectures and learning paradigms differ helps practitioners choose the right technique for a given AI problem instead of forcing one framework onto a task it was not designed for.

Comparison Table

| Aspect | Generative Adversarial Network (GAN) | Reinforcement Learning (RL) |
|---|---|---|
| Definition | A deep learning framework in which two neural networks compete so that one learns to generate realistic data. | A machine learning paradigm in which an agent learns to make decisions from rewards or penalties. |
| Primary Use | Data generation: image, video, audio, and text synthesis. | Sequential decision-making in robotics, games, and control systems. |
| Core Components | Generator and discriminator networks. | Agent, environment, states, actions, and rewards. |
| Learning Type | Unsupervised learning through adversarial training. | Trial-and-error learning from reward feedback. |
| Output | New synthetic samples that mimic the real data distribution. | A policy for selecting actions that maximizes cumulative reward. |
| Examples | Deepfakes, image-to-image translation, StyleGAN. | AlphaGo, robotic control, autonomous driving. |
| Challenges | Training instability, mode collapse. | Balancing exploration and exploitation, designing (shaping) the reward. |

Which is better?

Neither approach is better in general, because they solve different problems. GANs excel at creating realistic synthetic data by pitting two neural networks against each other, which makes them well suited to image generation and data augmentation. RL optimizes sequential decision-making through trial and error and has proven effective in robotics, game playing, and autonomous systems. The choice depends on whether the task calls for generative modeling (synthesizing data) or for learning adaptive behavior in a dynamic environment.

Connection

Generative Adversarial Networks (GANs) and Reinforcement Learning (RL) share a reliance on iterative feedback. In a GAN, the discriminator's judgments push the generator toward more realistic output; in RL, reward signals from the environment push the agent toward better decisions. The two can also be combined: GANs can generate realistic training scenarios for RL agents, which can help sample efficiency, policy learning, and exploration.
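One concrete point where the two frameworks meet, used for example in adversarial imitation learning methods such as GAIL, is to treat a discriminator's output as the reward an RL agent maximizes. The sketch below is a toy illustration under stated assumptions, not GAIL itself: the discriminator is replaced by hypothetical fixed scores `D_SCORES` for three discrete actions, the surrogate reward is `-log(1 - D)`, and the agent is a softmax policy trained with REINFORCE and a running-average baseline. All names are illustrative.

```python
import math
import random

# Hypothetical stand-in for a trained discriminator: the probability that
# each of three discrete actions looks "real" (expert-like).
D_SCORES = [0.2, 0.5, 0.9]


def adversarial_reward(d_prob: float, eps: float = 1e-8) -> float:
    """Surrogate reward -log(1 - D): larger when the discriminator is fooled."""
    return -math.log(max(1.0 - d_prob, eps))


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce(steps: int = 2000, lr: float = 0.1, seed: int = 0):
    """Train a softmax policy with REINFORCE on the discriminator-based reward."""
    rng = random.Random(seed)
    logits = [0.0] * len(D_SCORES)
    baseline = 0.0
    for _ in range(steps):
        probs = softmax(logits)
        a = rng.choices(range(len(probs)), weights=probs)[0]
        r = adversarial_reward(D_SCORES[a])
        advantage = r - baseline
        baseline += 0.05 * (r - baseline)  # running-average baseline reduces variance
        for i in range(len(logits)):
            # d log pi(a) / d logit_i = 1[i == a] - pi(i) for a softmax policy
            grad_log_pi = (1.0 if i == a else 0.0) - probs[i]
            logits[i] += lr * advantage * grad_log_pi
    return softmax(logits)


print([round(p, 3) for p in reinforce()])
```

Because the reward grows as the discriminator rates a choice as more realistic, the policy concentrates on the highest-scoring action. In a real adversarial setup the discriminator would keep training on the agent's samples, so the reward would shift as the agent improves.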

Key Terms

Agent vs. Generator-Discriminator

Reinforcement learning centers on an agent that learns, through trial and error, the behavior that maximizes cumulative reward in its environment. A GAN instead comprises two neural networks: a generator that creates data samples and a discriminator that judges whether samples are real or generated. The two compete, and that competition drives the generator toward more realistic output.
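The generator-discriminator competition can be sketched with a deliberately tiny 1D example. Everything here is an illustrative assumption rather than a practical GAN: real data is drawn from a normal distribution with mean `real_mean` (1.0 by default) and unit variance, the hypothetical generator is just `G(z) = z + b` (it only learns a shift, starting at 0), and the discriminator is a logistic model `D(x) = sigmoid(w * x + c)` with gradients written out by hand. The loop follows the original GAN recipe of several discriminator updates per generator update and uses the non-saturating generator loss `-log D(G(z))`.

```python
import math
import random


def sigmoid(s: float) -> float:
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def train_toy_gan(real_mean: float = 1.0, steps: int = 600, d_steps: int = 5,
                  batch: int = 64, lr_d: float = 0.1, lr_g: float = 0.05,
                  seed: int = 0):
    """Alternate discriminator and generator updates on 1D toy data."""
    rng = random.Random(seed)
    b = 0.0          # generator G(z) = z + b starts at the wrong mean
    w, c = 0.0, 0.0  # discriminator D(x) = sigmoid(w * x + c)
    for _ in range(steps):
        # Discriminator: descend -[log D(real) + log(1 - D(fake))]
        for _ in range(d_steps):
            gw = gc = 0.0
            for _ in range(batch):
                x_real = rng.gauss(real_mean, 1.0)
                x_fake = rng.gauss(0.0, 1.0) + b
                d_real = sigmoid(w * x_real + c)
                d_fake = sigmoid(w * x_fake + c)
                gw += -(1.0 - d_real) * x_real + d_fake * x_fake
                gc += -(1.0 - d_real) + d_fake
            w -= lr_d * gw / batch
            c -= lr_d * gc / batch
        # Generator: descend the non-saturating loss -log D(G(z))
        gb = 0.0
        for _ in range(batch):
            d_fake = sigmoid(w * (rng.gauss(0.0, 1.0) + b) + c)
            gb += -(1.0 - d_fake) * w  # d/db of -log sigmoid(w * (z + b) + c)
        b -= lr_g * gb / batch
    return b, w, c


b, w, c = train_toy_gan()
print(f"generator mean b = {b:.2f} (real mean 1.0), D weight w = {w:.2f}")
```

After training, `b` should sit close to the real mean and `w` close to 0, meaning the discriminator can no longer separate real from generated samples. Because this discriminator is linear in `x`, it can only detect a difference in means, so the toy says nothing about matching variance or about mode collapse; practical GANs use deep networks for both players.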

Reward Signal vs. Adversarial Loss

Reinforcement learning optimizes an agent's behavior by maximizing a reward signal obtained from interaction with the environment, steering decisions toward long-term goals. A GAN instead uses an adversarial loss defined by a two-player game: the generator tries to produce data realistic enough to fool the discriminator, while the discriminator tries to tell real inputs from generated ones.
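To make the contrast concrete, the two training signals can be written as small functions. This is a minimal sketch assuming the standard textbook forms: the RL side computes the discounted return `G_0 = r_1 + gamma * r_2 + gamma**2 * r_3 + ...` for one episode (the discount factor `gamma` is an assumption the text does not specify), and the GAN side computes binary cross-entropy losses from discriminator probabilities, using the commonly used non-saturating generator loss. Function names are illustrative.

```python
import math
from typing import Sequence


def discounted_return(rewards: Sequence[float], gamma: float = 0.99) -> float:
    """RL signal: G_0 = r_1 + gamma*r_2 + gamma**2*r_3 + ..., computed backwards."""
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g


def discriminator_loss(d_real: Sequence[float], d_fake: Sequence[float]) -> float:
    """GAN signal for D: -mean(log D(x)) - mean(log(1 - D(G(z)))).
    Low when D outputs ~1 on real samples and ~0 on generated ones."""
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - q) for q in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake: Sequence[float]) -> float:
    """Non-saturating GAN signal for G: -mean(log D(G(z))).
    Low when the generator fools D into outputting ~1 on generated samples."""
    return -sum(math.log(q) for q in d_fake) / len(d_fake)


# A sparse reward that arrives only at the end of a 3-step episode.
print(discounted_return([0.0, 0.0, 1.0], gamma=0.9))
# A discriminator that outputs 0.5 everywhere cannot tell real from fake.
print(discriminator_loss([0.5], [0.5]), generator_loss([0.5]))
```

The first call returns 0.9 squared, or about 0.81: the agent values a delayed reward less than an immediate one. A discriminator stuck at 0.5 gives a discriminator loss of 2 ln 2 (about 1.386) and a generator loss of ln 2 (about 0.693). The RL agent maximizes a scalar handed to it by the environment, whereas both GAN losses are computed from another learned network that keeps changing during training.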

Exploration vs. Data Generation

Reinforcement learning depends on exploration: agents must try actions in a dynamic environment, not just repeat the best-known ones, to learn policies that maximize cumulative reward. GANs focus on data generation, using two competing neural networks to produce realistic synthetic data.
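Exploration is easiest to see in a small tabular example. The sketch below assumes a hypothetical five-state corridor (states 0 to 4, reward 1.0 only on reaching state 4) and uses Q-learning with epsilon-greedy action selection: with probability `epsilon` the agent tries a random action, otherwise it exploits its current value estimates. The environment, hyperparameters, and function names are illustrative assumptions, not taken from any library.

```python
import random

N_STATES = 5      # hypothetical corridor: states 0..4, state 4 is the goal
ACTIONS = (0, 1)  # 0 = move left, 1 = move right


def step(state: int, action: int):
    """Toy environment: reward 1.0 only on reaching the goal state."""
    nxt = max(0, state - 1) if action == 0 else state + 1
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done


def greedy(q_row, rng):
    """Exploit: pick the highest-valued action, breaking ties at random."""
    best = max(q_row)
    return rng.choice([a for a in ACTIONS if q_row[a] == best])


def q_learning(episodes: int = 200, alpha: float = 0.5, gamma: float = 0.9,
               epsilon: float = 0.1, seed: int = 0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        s = 0
        for _ in range(100):  # cap the episode length
            # Explore with probability epsilon, otherwise exploit.
            if rng.random() < epsilon:
                a = rng.choice(ACTIONS)
            else:
                a = greedy(q[s], rng)
            s_next, r, done = step(s, a)
            # Q-learning target: no bootstrapping from a terminal state.
            target = r if done else r + gamma * max(q[s_next])
            q[s][a] += alpha * (target - q[s][a])
            s = s_next
            if done:
                break
    return q


q = q_learning()
print([("left", "right")[max(ACTIONS, key=lambda a: q[s][a])]
       for s in range(N_STATES - 1)])
```

With `gamma=0.9`, the values for moving right should approach 0.729, 0.81, 0.9, and 1.0 in states 0 to 3, so the greedy policy moves right everywhere. Ties are broken randomly here, which on its own is a weak form of exploration; without any exploration, an agent that always picked the first tied action would keep walking left and never see the reward.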

dowidth.com