Graph Neural Networks (GNNs) are designed for graph-structured data, which makes them well suited to relational problems in social networks, molecular chemistry, and recommendation systems. Capsule Networks take a different approach to image recognition. They group neurons into capsules that encode both the presence and the pose of a feature, which preserves spatial hierarchies and improves robustness to viewpoint changes. This article compares the strengths and applications of the two architectures.

Why it is important

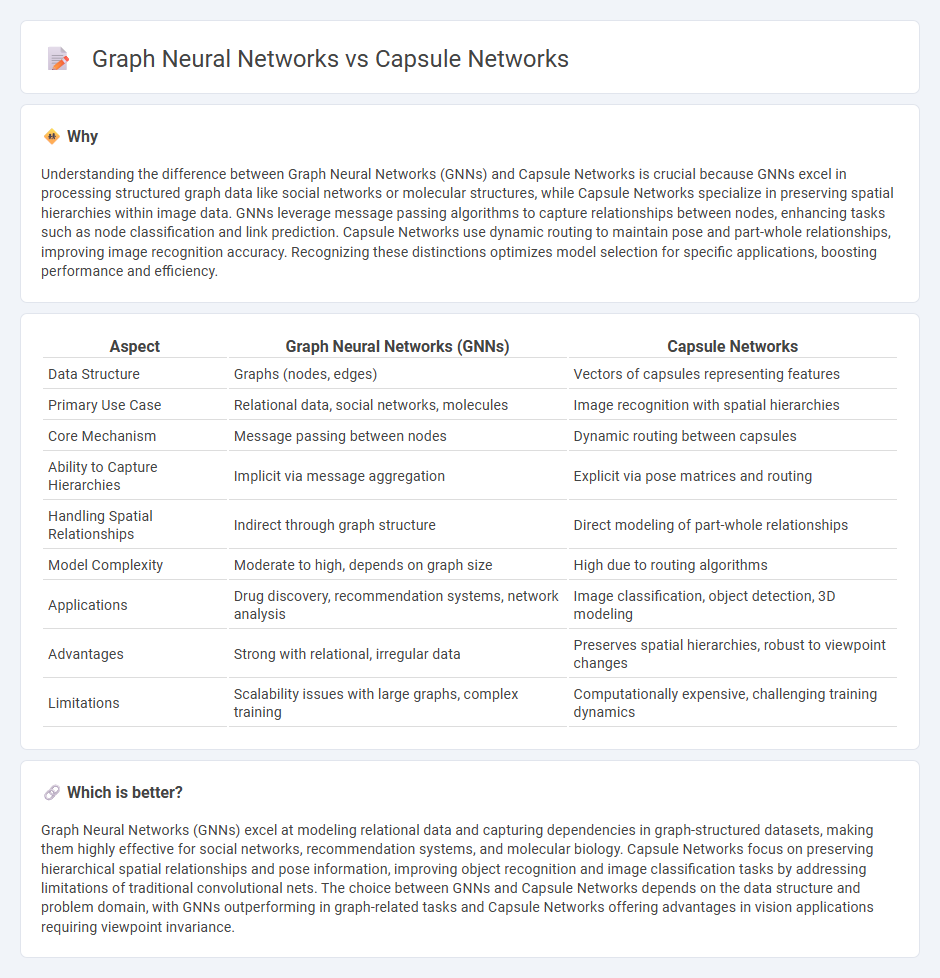

Knowing how GNNs and Capsule Networks differ helps you choose the right model. GNNs process graph-structured data such as social networks or molecular structures. They rely on message passing: each node repeatedly aggregates information from its neighbors, which supports tasks such as node classification and link prediction. Capsule Networks target image data. They use dynamic routing to preserve pose and part-whole relationships, which can improve recognition accuracy when objects appear from unfamiliar viewpoints. Matching the architecture to the structure of your data improves both accuracy and efficiency.

Comparison Table

| Aspect | Graph Neural Networks (GNNs) | Capsule Networks |
|---|---|---|
| Data Structure | Graphs (nodes, edges) | Images or other grid data, represented internally as capsule vectors |
| Primary Use Case | Relational data: social networks, molecules | Image recognition with spatial hierarchies |
| Core Mechanism | Message passing between nodes | Dynamic routing (routing-by-agreement) between capsules |
| Ability to Capture Hierarchies | Implicit, via repeated message aggregation | Explicit, via pose vectors or matrices and routing |
| Handling Spatial Relationships | Indirect, through graph structure | Direct modeling of part-whole relationships |
| Model Complexity | Moderate to high; grows with graph size | High, because routing is iterative |
| Applications | Drug discovery, recommendation systems, network analysis | Image classification, object detection, 3D modeling |
| Advantages | Handles relational, irregular data well | Preserves spatial hierarchies; robust to viewpoint changes |
| Limitations | Scalability issues on large graphs; complex training | Computationally expensive; challenging training dynamics |

Which is better?

Neither architecture is better in general. The choice depends on the data structure and the problem domain. GNNs model relational data and the dependencies in graph-structured datasets, which makes them effective for social networks, recommendation systems, and molecular biology. Capsule Networks preserve hierarchical spatial relationships and pose information. This addresses a limitation of conventional convolutional networks, whose pooling layers discard precise spatial relationships, and it helps in object recognition and image classification. In practice, GNNs are the natural choice for graph-related tasks. Capsule Networks are most relevant to vision problems where robustness to viewpoint changes matters.

Connection

GNNs and Capsule Networks both go beyond plain feature vectors by modeling relationships explicitly. GNNs do this through the connections between nodes in a graph. Capsule Networks do it by passing pose information up a part-whole hierarchy through dynamic routing. Integrating the two architectures can improve representation learning for structured data such as 3D shapes and social networks. Some experimental results suggest that combining them can improve performance on tasks that require both relational reasoning and spatial awareness, although gains depend on the dataset and task.

Key Terms

Routing-by-agreement

Capsule Networks use routing-by-agreement to decide how much each lower-level capsule contributes to each higher-level capsule. A lower capsule's prediction gets more weight when it agrees with the higher capsule's output, measured as a large dot product. This preserves spatial hierarchies in the data. Graph Neural Networks also aggregate information, from neighboring nodes. However, their aggregation weights are typically fixed by the graph structure or learned through attention, not determined by iterative agreement. This agreement-based routing is the key mechanism that distinguishes Capsule Networks from standard Graph Neural Networks.
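Below is a minimal pure-Python sketch of the iterative dynamic routing procedure used in the original CapsNet work. It assumes the prediction vectors `u_hat[i][j]` (lower capsule `i`'s prediction for higher capsule `j`) are already computed. In a real network they come from learned transformation matrices applied to the lower capsules' outputs. The function names are hypothetical, and the loops favor readability over speed.

```python
import math
from typing import List

Vector = List[float]


def squash(s: Vector, eps: float = 1e-9) -> Vector:
    """Scale s so its length lies in [0, 1) while keeping its direction."""
    sq_norm = sum(x * x for x in s)
    scale = sq_norm / (1.0 + sq_norm) / math.sqrt(sq_norm + eps)
    return [scale * x for x in s]


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def dynamic_routing(u_hat: List[List[Vector]], iterations: int = 3) -> List[Vector]:
    """Route predictions u_hat[i][j] from lower capsule i to higher capsule j."""
    n_lower, n_higher, dim = len(u_hat), len(u_hat[0]), len(u_hat[0][0])
    b = [[0.0] * n_higher for _ in range(n_lower)]  # routing logits, start uniform
    v: List[Vector] = []
    for _ in range(iterations):
        # Coupling coefficients: each lower capsule splits its output across higher capsules.
        c = [softmax(row) for row in b]
        # Higher capsule input is the coupling-weighted sum of predictions, then squashed.
        v = []
        for j in range(n_higher):
            s_j = [sum(c[i][j] * u_hat[i][j][d] for i in range(n_lower)) for d in range(dim)]
            v.append(squash(s_j))
        # Agreement step: raise logits where a prediction aligns with the output.
        for i in range(n_lower):
            for j in range(n_higher):
                b[i][j] += dot(u_hat[i][j], v[j])
    return v


# Three lower capsules, two higher capsules, 2-D predictions (hypothetical values).
# Higher capsule 0: all predictions agree. Higher capsule 1: predictions conflict.
u_hat = [
    [[1.0, 0.0], [1.0, 0.0]],
    [[1.0, 0.0], [-1.0, 0.0]],
    [[1.0, 0.0], [0.0, 1.0]],
]
for j, v_j in enumerate(dynamic_routing(u_hat, iterations=3)):
    print(f"higher capsule {j}: length {math.sqrt(sum(x * x for x in v_j)):.3f}")
```

In this example, all three lower capsules predict the same vector for the first higher capsule but disagree about the second. Routing therefore concentrates weight on the first capsule, and its output vector ends up much longer. A capsule's length is read as the probability that the entity it represents is present.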

Node Embeddings

When applied to graphs, capsule-based models aim to produce more robust node embeddings by capturing hierarchical relationships and preserving pose-like information in vector form. Standard GNNs build node embeddings by aggregating and propagating features across neighbors. Stacking layers lets each embedding reflect progressively larger neighborhoods, so the embeddings encode both local and global connectivity patterns. Which approach yields better embeddings depends on the application domain.
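The code below is a minimal sketch of how a GNN layer builds node embeddings. It implements one graph-convolution step in the style of the GCN model of Kipf and Welling, using self-loops plus symmetric degree normalization. GCN is one common GNN variant, not the only one. The graph, features, and identity weight matrix are hypothetical (in practice the weights are learned), and the adjacency list is assumed to be symmetric, meaning the graph is undirected.

```python
import math
from typing import Dict, List

Matrix = List[List[float]]


def gcn_layer(adj: Dict[int, List[int]], h: Matrix, weight: Matrix) -> Matrix:
    """One graph-convolution step: normalized neighbor aggregation, linear map, ReLU."""
    n = len(h)
    # Add self-loops so each node keeps part of its own signal.
    nbrs = {i: set(adj.get(i, [])) | {i} for i in range(n)}
    deg = {i: len(nbrs[i]) for i in range(n)}
    out = []
    for i in range(n):
        # Aggregate neighbor features ("messages"), weighted by 1 / sqrt(d_i * d_j).
        agg = [0.0] * len(h[0])
        for j in nbrs[i]:
            norm = 1.0 / math.sqrt(deg[i] * deg[j])
            for k, x in enumerate(h[j]):
                agg[k] += norm * x
        # Linear transform (agg @ weight) followed by ReLU.
        z = [sum(agg[k] * weight[k][m] for k in range(len(agg))) for m in range(len(weight[0]))]
        out.append([max(0.0, x) for x in z])
    return out


# Path graph 0 - 1 - 2 with one-hot features and an identity weight matrix.
adj = {0: [1], 1: [0, 2], 2: [1]}
features = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
identity = [[1.0 if r == c else 0.0 for c in range(3)] for r in range(3)]

h1 = gcn_layer(adj, features, identity)  # each node sees its 1-hop neighborhood
h2 = gcn_layer(adj, h1, identity)        # each node sees its 2-hop neighborhood
print("node 0 after 1 layer:", [round(x, 3) for x in h1[0]])
print("node 0 after 2 layers:", [round(x, 3) for x in h2[0]])
```

After one layer, node 0's embedding contains no information about node 2. After two layers it does, because each extra layer widens the neighborhood an embedding can see.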

Dynamic Graphs

Dynamic graphs are graphs whose node features and connections change over time. Capsule Networks capture hierarchical relationships through dynamic routing, and this hierarchy-preserving representation may offer robustness in dynamic graph settings. Note that "dynamic" in dynamic routing means the routing coefficients are recomputed for every input; it does not refer to graphs that change over time. Dynamic GNNs handle temporal change directly. They model temporal dependencies and structural changes in evolving graphs, using message passing to update node representations as the graph evolves. Integrating Capsule Networks with GNNs is one way to extend dynamic graph modeling.
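One common family of dynamic GNNs treats an evolving graph as a sequence of snapshots and carries node states from one snapshot to the next. The sketch below assumes this discrete-time setup and uses scalar node states. Learned parameters are replaced by two hypothetical fixed weights (`w_self`, `w_msg`). It is meant only to show why the order of structural changes matters, not as a complete dynamic GNN.

```python
import math
from typing import Dict, List

Snapshot = Dict[int, List[int]]  # adjacency list at one time step


def mean_neighbors(adj: Snapshot, h: List[float], i: int) -> float:
    """Average state of node i's neighbors in this snapshot (0.0 if isolated)."""
    nbrs = adj.get(i, [])
    if not nbrs:
        return 0.0
    return sum(h[j] for j in nbrs) / len(nbrs)


def evolve(snapshots: List[Snapshot], h0: List[float],
           w_self: float = 0.5, w_msg: float = 0.5) -> List[List[float]]:
    """Update node states snapshot by snapshot; return the state after each step."""
    h = list(h0)
    history = []
    for adj in snapshots:
        # Messages use this snapshot's edges, so edges that appear or disappear
        # change which neighbors influence each node at this step.
        h = [math.tanh(w_self * h[i] + w_msg * mean_neighbors(adj, h, i))
             for i in range(len(h))]
        history.append(h)
    return history


# Edge 0-1 exists at t=0 and is replaced by edge 1-2 at t=1.
snaps = [{0: [1], 1: [0]}, {1: [2], 2: [1]}]
forward = evolve(snaps, [1.0, 0.0, 0.0])
backward = evolve(list(reversed(snaps)), [1.0, 0.0, 0.0])
print("node 2, edges in original order:", round(forward[-1][2], 3))
print("node 2, edges in reversed order:", round(backward[-1][2], 3))
```

When edge 0-1 exists before edge 1-2, node 0's signal reaches node 2 through node 1. In the reverse order it never does, even though both runs see the same set of edges overall.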


dowidth.com
