Edge intelligence processes data locally on devices, which reduces latency and improves privacy by minimizing reliance on centralized cloud servers. Real-time inference produces predictions on streaming data as it is generated, which is crucial for applications like autonomous vehicles and smart surveillance. The two ideas overlap: edge intelligence describes where computation runs, while real-time inference describes how quickly results must arrive.

Why it is important

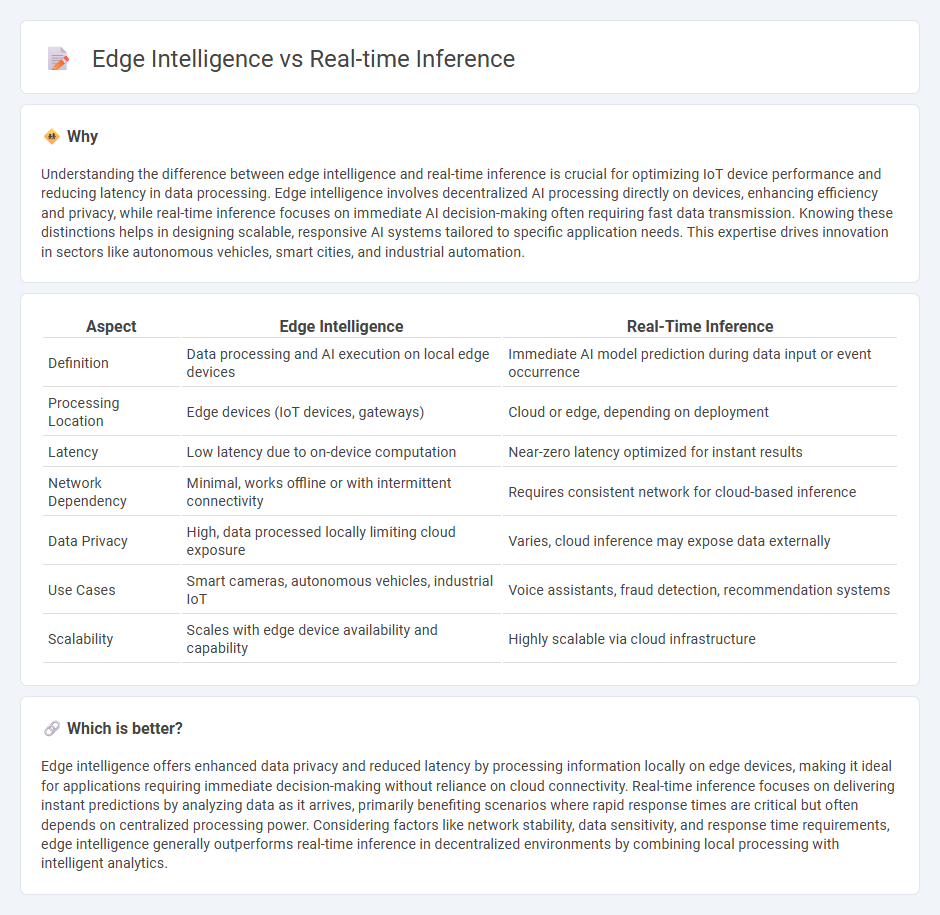

Understanding the difference between edge intelligence and real-time inference is crucial for optimizing IoT device performance and reducing latency in data processing. Edge intelligence means decentralized AI processing directly on devices, which improves efficiency and privacy. Real-time inference means immediate AI decision-making, which often requires fast data transmission when the model runs away from the data source. Knowing these distinctions helps in designing scalable, responsive AI systems tailored to specific application needs in sectors like autonomous vehicles, smart cities, and industrial automation.

Comparison Table

| Aspect | Edge Intelligence | Real-Time Inference |
|---|---|---|
| Definition | Data processing and AI execution on local edge devices | Immediate AI model prediction during data input or event occurrence |
| Processing Location | Edge devices (IoT devices, gateways) | Cloud or edge, depending on deployment |
| Latency | Low, because computation happens on the device | Low by requirement; the achieved latency depends on the model, the hardware, and any network hop |
| Network Dependency | Minimal; works offline or with intermittent connectivity | Requires a consistent network connection when inference runs in the cloud |
| Data Privacy | High; data is processed locally, limiting cloud exposure | Varies; cloud inference may expose data externally |
| Use Cases | Smart cameras, autonomous vehicles, industrial IoT | Voice assistants, fraud detection, recommendation systems |
| Scalability | Scales with edge device availability and capability | Highly scalable via cloud infrastructure |

Which is better?

Edge intelligence offers stronger data privacy and lower latency by processing information locally on edge devices, making it well suited to applications that need immediate decisions without relying on cloud connectivity. Real-time inference focuses on delivering predictions as data arrives, which benefits scenarios where rapid response times are critical, but it often depends on centralized processing power. Neither is better in every case. The deciding factors are network stability, data sensitivity, and response time requirements: in decentralized environments with unreliable connectivity or sensitive data, edge intelligence is generally the better fit, while cloud-hosted real-time inference suits workloads that need larger models or elastic scaling.
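The factors above can be turned into a rough placement rule. The following is a minimal sketch, not a definitive method: the `Workload` fields, the `choose_placement` function, and the rules themselves are illustrative assumptions rather than anything prescribed by a particular platform.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """Hypothetical description of an inference workload."""
    max_latency_ms: float    # response-time requirement
    network_rtt_ms: float    # typical round trip to a cloud endpoint
    network_reliable: bool   # can the application depend on connectivity?
    data_sensitive: bool     # must raw data stay on the device?
    fits_on_device: bool     # does the model fit the device's compute and memory?


def choose_placement(w: Workload) -> str:
    """Pick a placement using simple, illustrative rules (an assumption)."""
    needs_local = (
        w.data_sensitive
        or not w.network_reliable
        or w.network_rtt_ms >= w.max_latency_ms  # the network alone exceeds the budget
    )
    if needs_local:
        return "edge" if w.fits_on_device else "edge, after shrinking the model"
    return "cloud"


smart_camera = Workload(50, 80, True, True, True)
recommender = Workload(300, 60, True, False, False)
print(choose_placement(smart_camera))
print(choose_placement(recommender))
```

A real decision would also weigh cost, power draw, and model accuracy, which this sketch leaves out.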

Connection

Edge intelligence integrates artificial intelligence algorithms directly on edge devices, enabling data processing without reliance on centralized cloud servers. When real-time inference runs at the edge, data is analyzed locally, so decisions arrive with less delay and less bandwidth is used. The combination of edge intelligence and real-time inference improves responsiveness and efficiency in applications such as autonomous vehicles, smart surveillance, and IoT devices.

Key Terms

Latency

Real-time inference relies on processing data within a tight time budget, often milliseconds, to support applications like autonomous driving and live video analytics, where minimal latency is critical. Edge intelligence reduces latency by performing AI computations locally on edge devices such as IoT sensors and mobile devices, avoiding delays caused by data transmission to central servers. The trade-off is that local computation removes the network round trip, but edge hardware typically offers less compute than a data center, so the best choice depends on the use case.
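A small worked example may make the latency trade-off concrete. This sketch assumes end-to-end latency is simply inference time plus network round-trip time; every number in it is an illustrative assumption, not a measurement.

```python
def end_to_end_latency_ms(inference_ms: float, network_rtt_ms: float = 0.0) -> float:
    """Total latency under a simplified model: compute time plus network round trip."""
    return inference_ms + network_rtt_ms


def meets_budget(latency_ms: float, budget_ms: float) -> bool:
    """True when the response arrives within the allowed time budget."""
    return latency_ms <= budget_ms


# Assumed budget: one frame of 30 fps video, about 33.3 ms.
frame_budget_ms = 1000.0 / 30.0

# Assumed figures: a slower edge chip with no network hop,
# versus a faster cloud GPU reached over a 60 ms round trip.
edge_ms = end_to_end_latency_ms(inference_ms=25.0)
cloud_ms = end_to_end_latency_ms(inference_ms=5.0, network_rtt_ms=60.0)

print(f"edge:  {edge_ms:.1f} ms, within budget: {meets_budget(edge_ms, frame_budget_ms)}")
print(f"cloud: {cloud_ms:.1f} ms, within budget: {meets_budget(cloud_ms, frame_budget_ms)}")
```

Under these assumed numbers the edge device meets the frame budget even though its inference is five times slower, because the cloud path pays for the network round trip.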

Model Deployment

Real-time inference at the edge means deploying AI models directly on edge devices, which reduces latency and bandwidth usage compared to cloud-based solutions. Edge intelligence depends on deployment strategies that fit models to the limited compute of edge hardware and adapt to changing conditions, improving responsiveness and security in applications like autonomous vehicles and smart cameras.
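One practical step before deploying a model to an edge device is to measure its latency on the target hardware. The sketch below shows one way this might be done with the standard library; `stand_in_model` is a hypothetical placeholder for a real model's predict call, and the run counts are arbitrary assumptions.

```python
import time
from typing import Callable, List, Sequence


def stand_in_model(features: Sequence[float]) -> float:
    """Hypothetical placeholder for a deployed model's predict call."""
    return sum(x * x for x in features)


def measure_latency_ms(predict: Callable[[Sequence[float]], float],
                       sample: Sequence[float],
                       runs: int = 200,
                       warmup: int = 20) -> List[float]:
    """Time repeated single-sample predictions; return sorted latencies in ms."""
    for _ in range(warmup):  # untimed warm-up calls
        predict(sample)
    latencies = []
    for _ in range(runs):
        start = time.perf_counter()
        predict(sample)
        latencies.append((time.perf_counter() - start) * 1000.0)
    return sorted(latencies)


def percentile(sorted_values: List[float], fraction: float) -> float:
    """Simple index-based percentile on an already sorted list."""
    index = round(fraction * (len(sorted_values) - 1))
    return sorted_values[index]


latencies = measure_latency_ms(stand_in_model, [0.5] * 256)
p50 = percentile(latencies, 0.50)
p95 = percentile(latencies, 0.95)
print(f"p50={p50:.3f} ms  p95={p95:.3f} ms")
```

Reporting a high percentile alongside the median matters for real-time workloads, since occasional slow predictions are the ones that miss a deadline.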

On-device Processing

Real-time inference on edge devices enables immediate data processing without relying on cloud connectivity, reducing latency and improving privacy by keeping sensitive information local. Edge intelligence uses embedded AI models to perform analytics and decision-making directly on smartphones, IoT devices, and sensors, which lowers network bandwidth use because raw data does not have to be uploaded.
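The pattern of keeping raw data local and sending only results upstream can be sketched in a few lines. This is an illustration under stated assumptions: `tiny_model` is a hypothetical threshold rule standing in for an embedded model, and the readings are made-up values.

```python
from typing import Callable, Dict, Iterable, List


def tiny_model(reading: float) -> bool:
    """Stand-in for an embedded model: flags readings above a threshold."""
    return reading > 0.8


def run_on_device(readings: Iterable[float],
                  model: Callable[[float], bool]) -> List[Dict[str, float]]:
    """Run inference locally and return only the flagged events for upload."""
    events = []
    for index, value in enumerate(readings):
        if model(value):  # raw readings that are not flagged never leave the device
            events.append({"index": index, "value": value})
    return events


readings = [0.1, 0.95, 0.3, 0.85, 0.2]
events = run_on_device(readings, tiny_model)
print(f"{len(events)} of {len(readings)} readings leave the device")
```

Only the flagged events would be transmitted, which is where the bandwidth and privacy benefits of on-device processing come from.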

