Quantum advantage emerges when a quantum computer solves a specific problem more efficiently than any known classical method, using quantum bits (qubits), superposition, and entanglement. The size of the speedup depends on the problem: for some tasks it is exponential over the best known classical algorithms, and for others it is more modest. Classical computing relies on binary bits and largely deterministic processing, and it faces limitations in simulating quantum systems and in some large-scale optimization tasks. Knowing where quantum advantage applies helps clarify its likely impact on future technology.

Why it is important

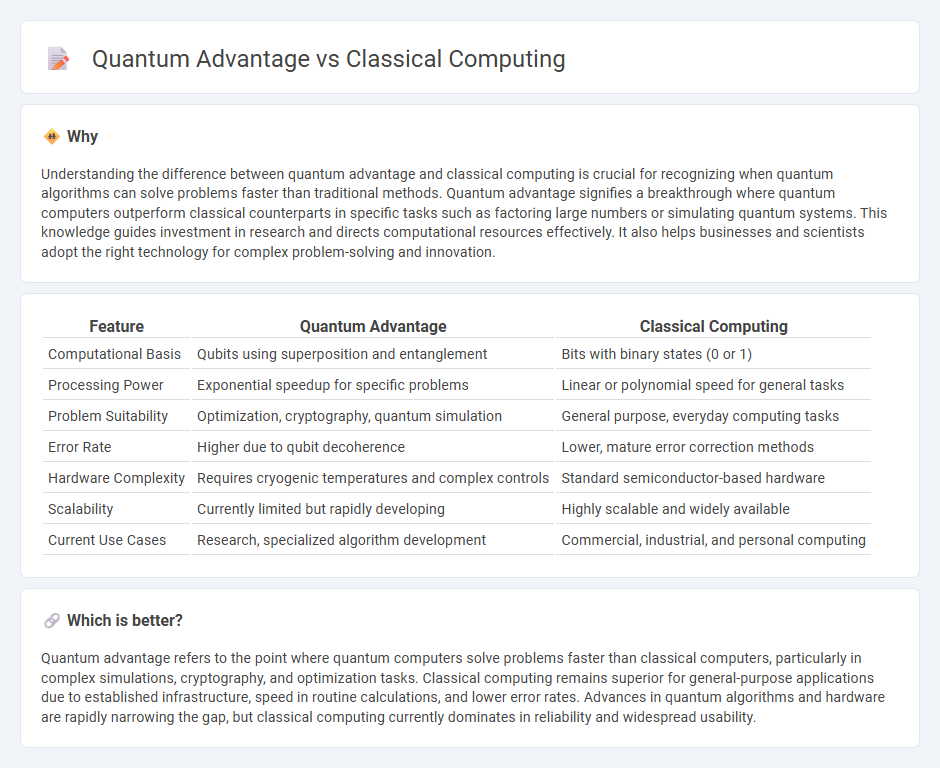

Understanding the difference between quantum advantage and classical computing is crucial for recognizing when quantum algorithms can solve problems faster than traditional methods. Quantum advantage signifies a breakthrough where quantum computers outperform classical counterparts in specific tasks, with factoring large numbers and simulating quantum systems as the most commonly cited candidates. This knowledge guides investment in research and directs computational resources effectively. It also helps businesses and scientists adopt the right technology for complex problem-solving and innovation.

Comparison Table

| Feature | Quantum Advantage | Classical Computing |
|---|---|---|
| Computational Basis | Qubits using superposition and entanglement | Bits with binary states (0 or 1) |
| Processing Power | Exponential speedup for specific problems | Linear or polynomial speed for general tasks |
| Problem Suitability | Optimization, cryptography, quantum simulation | General purpose, everyday computing tasks |
| Error Rate | Higher due to qubit decoherence | Lower, mature error correction methods |
| Hardware Complexity | Requires cryogenic temperatures and complex controls | Standard semiconductor-based hardware |
| Scalability | Currently limited but rapidly developing | Highly scalable and widely available |
| Current Use Cases | Research, specialized algorithm development | Commercial, industrial, and personal computing |

Which is better?

Neither is better across the board. Quantum advantage refers to the point where quantum computers solve particular problems faster than classical computers, especially in complex simulations, cryptography, and optimization tasks. Classical computing remains superior for general-purpose applications due to established infrastructure, speed in routine calculations, and lower error rates. Advances in quantum algorithms and hardware are narrowing the gap for those particular problems, but classical computing currently dominates in reliability and widespread usability.

Connection

Quantum advantage emerges when quantum computers outperform classical computing in solving specific problems, leveraging phenomena such as superposition and entanglement. Classical computing provides the foundational algorithms and hardware architecture, serving as a benchmark to measure quantum superiority. The interplay between quantum advantage and classical computing drives advancements in cryptography, optimization, and complex simulations.
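One way to see why classical machines struggle as a benchmark for larger quantum devices is to estimate the memory a brute-force classical simulation would need. The sketch below assumes a full state-vector simulation that stores one complex amplitude per basis state at 16 bytes each (double-precision real and imaginary parts). Both the function name and the 16-byte figure are illustrative assumptions, and other simulation methods can do better for some circuits.

```python
def state_vector_bytes(n_qubits, bytes_per_amplitude=16):
    """Bytes needed to store all 2**n_qubits amplitudes of an n-qubit state.

    bytes_per_amplitude=16 is an assumption (two 64-bit floats per amplitude).
    """
    return (2 ** n_qubits) * bytes_per_amplitude


GIB = 2 ** 30  # bytes in one gibibyte

for n in (10, 30, 50):
    gib = state_vector_bytes(n) / GIB
    print(f"{n} qubits: {gib:,.6f} GiB")
```

Under these assumptions, every additional qubit doubles the memory required, which is why exact classical simulation becomes impractical well before the qubit counts targeted by quantum hardware roadmaps.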

Key Terms

Superposition

Superposition, a fundamental principle in quantum computing, allows a quantum bit (qubit) to exist in a weighted combination of the 0 and 1 states, unlike a classical bit that is always either 0 or 1. A measurement still returns a single outcome, so the benefit comes from algorithms that use interference to make correct answers more likely, not from reading out every state at once. Combined with entanglement, this property underlies the quantum advantage expected in fields like cryptography, optimization, and drug discovery.
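A small numerical sketch can make superposition concrete. It represents one qubit as a pair of amplitudes and applies the Hadamard gate, a standard gate that is not named above but is a common way to prepare an equal superposition. The function names are illustrative, and this is a classical simulation of the arithmetic, not a quantum program.

```python
import math


def apply_hadamard(state):
    """Apply a Hadamard gate to a single-qubit state given as (amp0, amp1)."""
    a0, a1 = state
    s = 1 / math.sqrt(2)
    return (s * (a0 + a1), s * (a0 - a1))


def measurement_probabilities(state):
    """Born rule: the probability of each outcome is |amplitude| squared."""
    return tuple(abs(a) ** 2 for a in state)


zero = (1.0, 0.0)                 # the state |0>, analogous to a classical 0
plus = apply_hadamard(zero)       # equal superposition of |0> and |1>
probs = measurement_probabilities(plus)
print(probs)                      # both outcomes are (approximately) equally likely

# Applying the gate again makes the amplitudes interfere and returns to |0>.
back = apply_hadamard(plus)
print(measurement_probabilities(back))
```

The second application shows interference at work: the two paths to outcome 1 cancel, which is the mechanism quantum algorithms rely on rather than superposition alone.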

Computational Complexity

Classical computing relies on algorithms operating on bits, and for certain problems the cost of the best known classical algorithm grows very quickly with problem size: faster than any polynomial for factoring large integers, and exponentially for exact simulation of general quantum systems. Quantum advantage emerges when quantum algorithms outperform these classical counterparts by exploiting superposition, entanglement, and interference. The size of the gain differs by algorithm. Shor's algorithm factors integers in polynomial time, while Grover's algorithm gives a quadratic speedup for unstructured search, reducing roughly N lookups to about √N. Computational complexity theory provides the framework for defining and benchmarking the practical benefits of quantum advantage over classical methods.
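To give a feel for the scale of Grover's speedup, the sketch below compares query counts for unstructured search over N = 2^n items with a single marked item. It assumes the classical search checks items one by one without repeats, giving (N + 1) / 2 expected lookups, and it uses the common approximation of about (π/4)·√N Grover iterations. The function names are illustrative, and the figures count oracle queries only, ignoring hardware speed and error-correction overhead.

```python
import math


def classical_expected_queries(n_items):
    """Expected lookups to find one marked item by checking items without repeats."""
    return (n_items + 1) / 2


def grover_iterations(n_items):
    """Approximate number of Grover iterations: floor(pi/4 * sqrt(N))."""
    return math.floor(math.pi / 4 * math.sqrt(n_items))


for n_qubits in (10, 20, 30):
    n_items = 2 ** n_qubits
    print(
        f"n={n_qubits}: classical ~{classical_expected_queries(n_items):,.1f} queries, "
        f"Grover ~{grover_iterations(n_items):,} iterations"
    )
```

The gap widens with problem size but remains quadratic, which is a much smaller gain than the super-polynomial one associated with Shor's algorithm.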
