Zero-knowledge proofs (ZKPs) let one party convince another that a statement is true without revealing the data behind it, which builds trust in digital transactions. Privacy-preserving machine learning (PPML) trains and runs models without exposing the sensitive user data they learn from, keeping that data confidential during model development. This article compares the two technologies and their applications to data security.

Why it is important

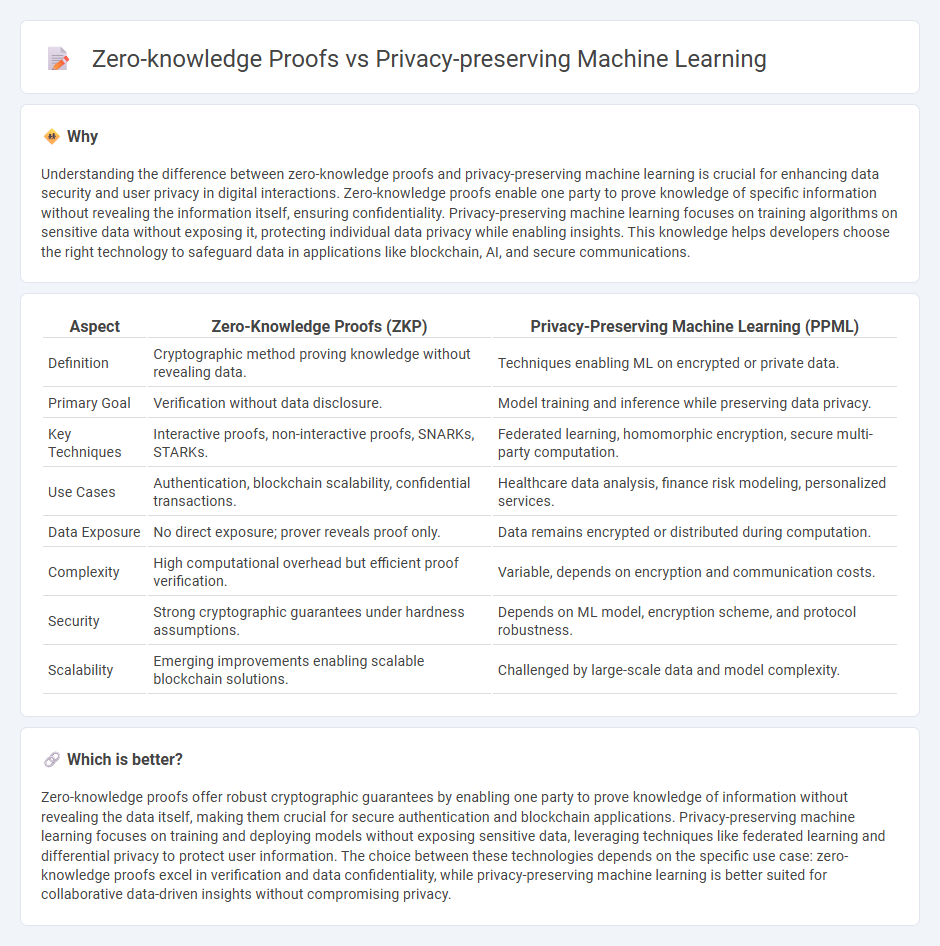

Zero-knowledge proofs and privacy-preserving machine learning both protect data security and user privacy, but they solve different problems. A zero-knowledge proof lets one party prove that it knows a specific piece of information without revealing the information itself. Privacy-preserving machine learning trains algorithms on sensitive data without exposing it, so individual records stay private while the model still yields insights. Knowing the difference helps developers choose the right technology to safeguard data in applications such as blockchain, AI, and secure communications.
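To make "proving knowledge without revealing it" concrete, here is a minimal sketch of a Schnorr-style identification protocol, one classic interactive zero-knowledge proof. The group parameters `P`, `Q`, and `G` and all function names are assumptions chosen for illustration, and the numbers are far too small to be secure.

```python
import secrets

# Toy group parameters (assumed for illustration; far too small for real use).
P = 2039  # safe prime, P = 2 * Q + 1
Q = 1019  # prime order of the subgroup generated by G
G = 4     # generator of the order-Q subgroup modulo P


def keygen():
    """Prover picks a secret x and publishes y = G^x mod P."""
    x = secrets.randbelow(Q - 1) + 1
    return x, pow(G, x, P)


def commit():
    """Prover picks a fresh nonce r and sends the commitment t = G^r mod P."""
    r = secrets.randbelow(Q - 1) + 1
    return r, pow(G, r, P)


def challenge():
    """Verifier replies with a random challenge c."""
    return secrets.randbelow(Q)


def respond(x, r, c):
    """Prover answers with s = r + c * x (mod Q); r masks the secret x."""
    return (r + c * x) % Q


def verify(y, t, c, s):
    """Verifier checks G^s == t * y^c (mod P) without ever seeing x."""
    return pow(G, s, P) == (t * pow(y, c, P)) % P


if __name__ == "__main__":
    x, y = keygen()
    r, t = commit()
    c = challenge()
    s = respond(x, r, c)
    print("proof accepted:", verify(y, t, c, s))
```

The verifier only ever sees `y`, `t`, `c`, and `s`. Because a fresh random `r` is used for every run, `s` on its own gives no usable information about `x`.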

Comparison Table

| Aspect | Zero-Knowledge Proofs (ZKP) | Privacy-Preserving Machine Learning (PPML) |
|---|---|---|
| Definition | Cryptographic method proving knowledge without revealing data. | Techniques enabling ML on encrypted or private data. |
| Primary Goal | Verification without data disclosure. | Model training and inference while preserving data privacy. |
| Key Techniques | Interactive proofs, non-interactive proofs, SNARKs, STARKs. | Federated learning, homomorphic encryption, secure multi-party computation. |
| Use Cases | Authentication, blockchain scalability, confidential transactions. | Healthcare data analysis, finance risk modeling, personalized services. |
| Data Exposure | No direct exposure; prover reveals proof only. | Data remains encrypted or distributed during computation. |
| Complexity | High computational overhead but efficient proof verification. | Variable, depends on encryption and communication costs. |
| Security | Strong cryptographic guarantees under hardness assumptions. | Depends on ML model, encryption scheme, and protocol robustness. |
| Scalability | Emerging improvements enabling scalable blockchain solutions. | Challenged by large-scale data and model complexity. |
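
Of the PPML techniques listed in the table, secure multi-party computation is perhaps the easiest to illustrate in a few lines. The sketch below uses additive secret sharing so that several parties can learn the sum of their private values without any party seeing another's input. The modulus and the function names (`share`, `reconstruct`, `secure_sum`) are assumptions made for this example, and the parties are simulated inside a single process.

```python
import secrets

MODULUS = 2**61 - 1  # assumed modulus; all arithmetic is done mod this value


def share(value, n_parties):
    """Split value into n_parties random shares that sum to value mod MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares


def reconstruct(shares):
    """Recombine shares; any strict subset of them looks uniformly random."""
    return sum(shares) % MODULUS


def secure_sum(private_values):
    """Simulate parties jointly computing the sum of their private inputs."""
    n = len(private_values)
    # Party i splits its input and sends share j to party j.
    all_shares = [share(v, n) for v in private_values]
    # Party j adds up the shares it received and publishes only that total.
    partial_sums = [
        sum(all_shares[i][j] for i in range(n)) % MODULUS for j in range(n)
    ]
    # The published partial sums reveal the overall sum and nothing else.
    return reconstruct(partial_sums)


if __name__ == "__main__":
    print(secure_sum([10, 20, 30]))
```

A real deployment would run each party on a separate machine and would need authenticated channels between them; this sketch shows only the arithmetic.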

Which is better?

Neither technology is better in general, because they address different problems. Zero-knowledge proofs offer strong cryptographic guarantees: one party proves knowledge of information without revealing the data itself, which makes them well suited to secure authentication and blockchain applications. Privacy-preserving machine learning focuses on training and deploying models without exposing sensitive data, using techniques such as federated learning and differential privacy to protect user information. The choice depends on the use case: zero-knowledge proofs fit verification tasks where the underlying data must stay confidential, while privacy-preserving machine learning fits collaborative, data-driven analysis that must not compromise privacy.
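Differential privacy, mentioned above, can be illustrated with the Laplace mechanism applied to a counting query. This is a minimal sketch under stated assumptions: the helper names `laplace_noise` and `private_count` are invented for this example, and the privacy parameter `epsilon` is chosen by the caller.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential samples."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon, rng=None):
    """Return a noisy count of the records that match predicate.

    Adding or removing one record changes a count by at most 1 (sensitivity 1),
    so Laplace noise with scale 1 / epsilon gives epsilon-differential privacy.
    """
    rng = rng or random.Random()
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1.0 / epsilon, rng)


if __name__ == "__main__":
    ages = [23, 35, 41, 58, 62, 19, 47]
    print(private_count(ages, lambda age: age >= 40, epsilon=0.5))
```

A smaller `epsilon` means more noise and stronger privacy. The released count is useful in aggregate while hiding whether any single record was present.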

Connection

Zero-knowledge proofs verify that data or a computation is authentic without revealing the data itself, a principle that complements privacy-preserving machine learning, which protects sensitive information during model training and inference. Integrating zero-knowledge proofs lets a machine learning system validate computations, for example that a model update was produced correctly, while the inputs stay private. This can increase trust in decentralized and federated learning environments. The connection supports secure data sharing and collaborative analytics without compromising user confidentiality or data integrity.

Key Terms

Federated Learning

Federated learning is a privacy-preserving machine learning technique that decentralizes model training: each participant trains on data that stays on its own device and shares only model updates. Zero-knowledge proofs can complement this by letting a participant prove that its update is valid without revealing the underlying data, which protects both confidentiality and integrity across participants.
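The training loop can be sketched with federated averaging on a tiny linear model. This is an illustration under stated assumptions, not a production recipe: the function names, learning rate, and toy data are all invented for the example, the clients are simulated in one process, and the zero-knowledge verification of updates is not shown.

```python
def local_update(weights, data, lr=0.3, epochs=5):
    """Run a few gradient-descent steps on one client's private data."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def federated_average(client_weights, client_sizes):
    """Server step: average client models, weighted by local dataset size."""
    total = sum(client_sizes)
    w = sum(cw[0] * n for cw, n in zip(client_weights, client_sizes)) / total
    b = sum(cw[1] * n for cw, n in zip(client_weights, client_sizes)) / total
    return w, b


def train(clients, rounds=200):
    """Each round, clients train locally and send back only their weights."""
    global_weights = (0.0, 0.0)
    for _ in range(rounds):
        updates = [local_update(global_weights, data) for data in clients]
        sizes = [len(data) for data in clients]
        global_weights = federated_average(updates, sizes)
    return global_weights


if __name__ == "__main__":
    # Two clients hold different samples of the same relation y = 2x + 1.
    clients = [
        [(0.0, 1.0), (0.25, 1.5), (0.5, 2.0)],
        [(0.5, 2.0), (0.75, 2.5), (1.0, 3.0)],
    ]
    print(train(clients))  # should approach w = 2, b = 1
```

Only the `(w, b)` pairs cross the client boundary; the raw `(x, y)` records never leave `local_update`. Model updates can still leak information about the data, which is one reason federated learning is often combined with other techniques.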

Homomorphic Encryption

Privacy-preserving machine learning (PPML) uses homomorphic encryption to compute directly on encrypted data: the party doing the computation never sees the plaintext, so sensitive information stays confidential throughout the process. Zero-knowledge proofs (ZKPs) give a different guarantee. They let one party prove knowledge of a secret without disclosing the secret itself, but they do not by themselves support computation on encrypted data the way homomorphic encryption does.
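As a rough illustration of computing on encrypted data, the sketch below implements a toy version of the Paillier cryptosystem, which is additively homomorphic: multiplying two ciphertexts yields an encryption of the sum of their plaintexts. The primes, the function names, and the choice of Paillier itself are assumptions made for this example, and the key size is far too small for real use.

```python
import math
import secrets


def keygen(p=1789, q=1861):
    """Build a toy Paillier key pair from two small primes (assumed values)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
    g = n + 1                # standard simple choice of generator
    mu = pow(lam, -1, n)     # modular inverse; needs Python 3.8+
    return (n, g), (lam, mu)


def encrypt(pub, m):
    """Encrypt an integer m with 0 <= m < n as g^m * r^n mod n^2."""
    n, g = pub
    if not 0 <= m < n:
        raise ValueError("message out of range")
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    return (pow(g, m, n2) * pow(r, n, n2)) % n2


def decrypt(pub, priv, c):
    """Recover m as L(c^lam mod n^2) * mu mod n, where L(u) = (u - 1) // n."""
    n, _ = pub
    lam, mu = priv
    u = pow(c, lam, n * n)
    return ((u - 1) // n * mu) % n


def add_encrypted(pub, c1, c2):
    """Multiply ciphertexts to add the underlying plaintexts."""
    n, _ = pub
    return (c1 * c2) % (n * n)


if __name__ == "__main__":
    pub, priv = keygen()
    total = add_encrypted(pub, encrypt(pub, 1234), encrypt(pub, 4321))
    print(decrypt(pub, priv, total))
```

Whoever calls `add_encrypted` needs only the public key, so an untrusted server could aggregate encrypted values without being able to read them. Schemes that also support multiplication of plaintexts are more involved and are not shown here.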

Non-Interactive Zero-Knowledge (NIZK)

Privacy-preserving machine learning techniques protect sensitive data during model training and inference, often with methods such as homomorphic encryption and differential privacy. A Non-Interactive Zero-Knowledge (NIZK) proof lets one party prove knowledge of a secret without revealing it, using a single message from prover to verifier instead of several rounds of communication. Because no back-and-forth is needed, NIZK proofs are a candidate building block for secure, scalable, and privacy-compliant machine learning frameworks.
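One common way to remove the interaction is the Fiat-Shamir transform, in which the verifier's random challenge is replaced by a hash of the public values. The sketch below applies it to a Schnorr-style proof of knowledge of a discrete logarithm. The toy group parameters, the hash encoding, and the function names are assumptions for illustration only; a real system would use a vetted library and a standard-sized group.

```python
import hashlib
import secrets

# Toy group parameters (assumed for illustration; far too small for real use).
P = 2039  # safe prime, P = 2 * Q + 1
Q = 1019  # prime order of the subgroup generated by G
G = 4     # generator of the order-Q subgroup modulo P


def hash_to_challenge(*values):
    """Derive the challenge from public values instead of asking a verifier."""
    data = "|".join(str(v) for v in values).encode()
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q


def prove(x):
    """Produce the public key y = G^x and a one-message proof of knowing x."""
    y = pow(G, x, P)
    r = secrets.randbelow(Q - 1) + 1   # fresh nonce for every proof
    t = pow(G, r, P)                   # commitment
    c = hash_to_challenge(G, y, t)     # challenge computed by the prover
    s = (r + c * x) % Q                # response
    return y, (t, s)


def verify(y, proof):
    """Recompute the challenge and check G^s == t * y^c (mod P)."""
    t, s = proof
    c = hash_to_challenge(G, y, t)
    return pow(G, s, P) == (t * pow(y, c, P)) % P


if __name__ == "__main__":
    secret = secrets.randbelow(Q - 1) + 1
    public_key, proof = prove(secret)
    print("proof accepted:", verify(public_key, proof))
```

The proof `(t, s)` is a single message that anyone holding `y` can check later, with no live exchange with the prover.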

dowidth.com
