Multimodal AI combines data from several sources, such as text, images, and audio, so that a single system can reason over more than one type of input at once. Neural networks are the foundation of this technology: they are layered models, loosely inspired by the brain, that learn patterns from data and make predictions. Multimodal systems build on neural networks by combining diverse data streams to gain context and accuracy that a single input type cannot provide.

Why it is important

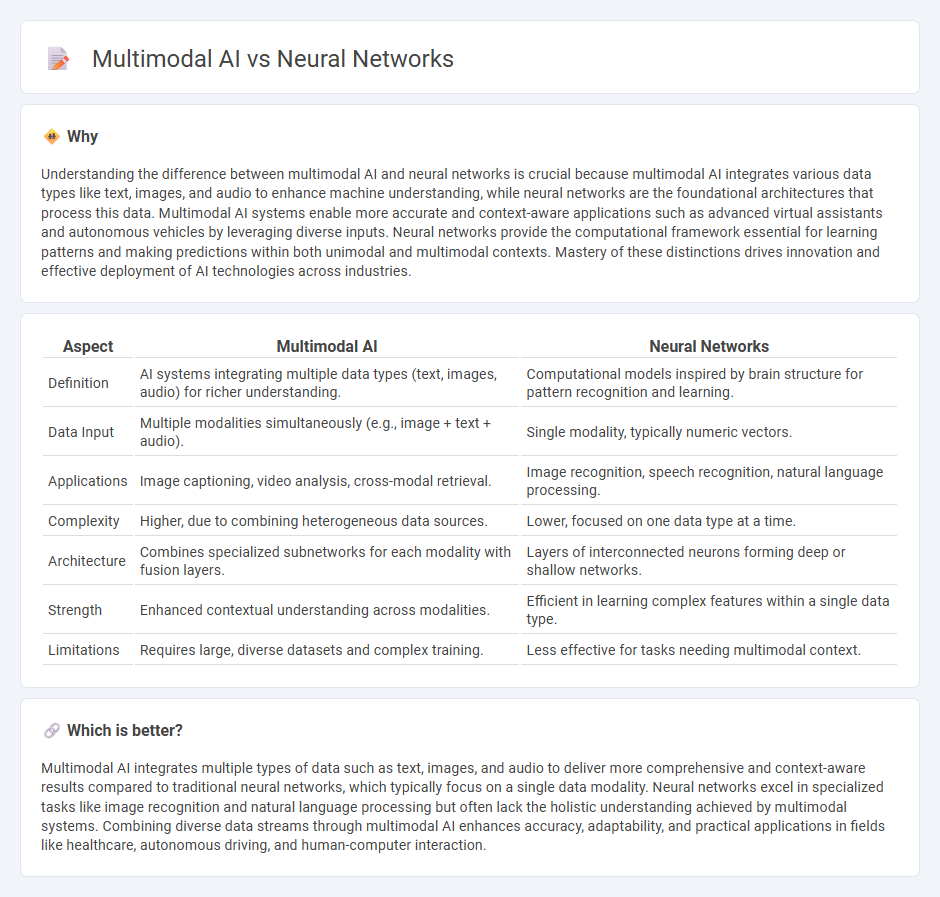

The two terms are not alternatives at the same level: multimodal AI describes what a system takes as input (several data types such as text, images, and audio), while neural networks are the architectures that process that data. Multimodal systems enable more context-aware applications, such as advanced virtual assistants and autonomous vehicles, by drawing on diverse inputs. Neural networks provide the computational framework for learning patterns and making predictions in both unimodal and multimodal settings. Keeping the distinction clear helps in choosing and deploying the right approach for a given problem.

Comparison Table

| Aspect | Multimodal AI | Neural Networks |
|---|---|---|
| Definition | AI systems integrating multiple data types (text, images, audio) for richer understanding. | Computational models inspired by brain structure for pattern recognition and learning. |
| Data Input | Multiple modalities simultaneously (e.g., image + text + audio). | Single modality, typically numeric vectors. |
| Applications | Image captioning, video analysis, cross-modal retrieval. | Image recognition, speech recognition, natural language processing. |
| Complexity | Higher, due to combining heterogeneous data sources. | Lower, focused on one data type at a time. |
| Architecture | Combines specialized subnetworks for each modality with fusion layers. | Layers of interconnected neurons forming deep or shallow networks. |
| Strength | Enhanced contextual understanding across modalities. | Efficient in learning complex features within a single data type. |
| Limitations | Requires large, diverse datasets and complex training. | Less effective for tasks needing multimodal context. |

Which is better?

Neither is better in general; the right choice depends on the task. Multimodal AI integrates text, images, and audio to deliver more comprehensive, context-aware results than a neural network trained on a single modality. Single-modality networks excel at specialized tasks like image recognition and natural language processing and are less complex to train, but they lack the cross-modal context that multimodal systems provide. Where several data streams are available and relevant, as in healthcare, autonomous driving, and human-computer interaction, combining them can improve accuracy and adaptability.

Connection

Multimodal AI is built on neural networks: it uses them to process and analyze text, images, and audio together. Deep learning models such as convolutional and recurrent networks extract patterns within each modality, and the multimodal system then learns correlations across modalities, which improves contextual understanding and decision-making. This combination supports applications that span natural language processing, computer vision, and speech recognition.

Key Terms

Architecture

Neural networks consist of layers of interconnected nodes, usually structured for a specific data type and task such as image recognition or natural language processing. Multimodal AI combines several such networks, typically one per modality, and merges their outputs so that text, images, and audio are processed together, which improves contextual understanding and decision-making.
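The idea of per-modality subnetworks feeding a shared fusion layer can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation: the class name `MultimodalNet`, the helper `dense`, and all weights are hypothetical and hand-picked, and a real system would learn its weights and use a deep learning library.

```python
from typing import Dict, List, Tuple

Vector = List[float]
Matrix = List[List[float]]
Layer = Tuple[Matrix, Vector]  # (weights, bias); hypothetical toy layer format


def dense(x: Vector, weights: Matrix, bias: Vector) -> Vector:
    """Fully connected layer with ReLU: one output per weight row."""
    return [
        max(0.0, sum(w * v for w, v in zip(row, x)) + b)
        for row, b in zip(weights, bias)
    ]


class MultimodalNet:
    """One encoder per modality, then a fusion layer over the joined embeddings."""

    def __init__(self, encoders: Dict[str, Layer], fusion: Layer) -> None:
        self.encoders = encoders  # modality name -> encoder layer
        self.fusion = fusion      # layer applied to the concatenated embeddings

    def forward(self, inputs: Dict[str, Vector]) -> Vector:
        embeddings: Vector = []
        # Sorted order keeps the concatenation layout stable across calls.
        for name in sorted(self.encoders):
            weights, bias = self.encoders[name]
            embeddings.extend(dense(inputs[name], weights, bias))
        fusion_weights, fusion_bias = self.fusion
        return dense(embeddings, fusion_weights, fusion_bias)


# Hand-picked toy weights (assumptions for illustration only).
net = MultimodalNet(
    encoders={
        "image": ([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0]),
        "text": ([[1.0, 1.0]], [0.0]),
    },
    fusion=([[1.0, 1.0, 1.0]], [0.0]),
)
output = net.forward({"image": [0.5, 0.25], "text": [1.0, 2.0]})
print(output)  # [3.75]
```

Each encoder only ever sees its own modality; the fusion layer is the first place where information from the image and the text can interact.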

Data Modality

A data modality is a type of input, such as images, text, or audio. A neural network is often trained on a single modality and optimized for performance within that domain. Multimodal AI processes several modalities together, combining visual, textual, and auditory inputs to improve context understanding and decision-making.
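Whatever the modality, a network ultimately receives numbers. The sketch below shows one simple way each modality might be turned into a feature vector. The three encoder functions are hypothetical toy examples (bag-of-words counts, pixel scaling, per-frame average amplitude), chosen for readability rather than taken from any particular system.

```python
from typing import List


def encode_text(text: str, vocab: List[str]) -> List[float]:
    """Bag-of-words: count how often each vocabulary word appears."""
    tokens = text.lower().split()
    return [float(tokens.count(word)) for word in vocab]


def encode_image(pixels: List[List[int]]) -> List[float]:
    """Flatten a grayscale image and scale values from 0..255 to 0..1."""
    return [value / 255.0 for row in pixels for value in row]


def encode_audio(samples: List[float], frame: int) -> List[float]:
    """Mean absolute amplitude of each non-overlapping frame."""
    return [
        sum(abs(s) for s in samples[i:i + frame]) / frame
        for i in range(0, len(samples) - frame + 1, frame)
    ]


text_vec = encode_text("A cat sat near a cat", ["cat", "dog"])
image_vec = encode_image([[0, 255], [255, 0]])
audio_vec = encode_audio([1.0, -1.0, 0.5, -0.5], frame=2)

print(text_vec)   # [2.0, 0.0]
print(image_vec)  # [0.0, 1.0, 1.0, 0.0]
print(audio_vec)  # [1.0, 0.5]
```

The three vectors differ in length and meaning, which is why multimodal systems usually give each modality its own encoder before combining them.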

Fusion Techniques

A single-modality neural network handles one data type, such as images or text, whereas multimodal AI must combine different data types through fusion. Early fusion merges the features of each modality before a single model processes them; late fusion runs a separate model per modality and merges their outputs; hybrid fusion combines the two, merging information at more than one stage. The choice of fusion method affects how well visual, textual, and auditory inputs are combined in tasks such as image captioning, speech recognition, and sentiment analysis.
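The difference between early and late fusion is easiest to see side by side. The following sketch uses simple linear scorers as stand-ins for trained models; the function names, the `mix` parameter, and all weights are assumptions made for illustration. Hybrid fusion is not shown.

```python
from typing import List

Vector = List[float]


def linear_score(x: Vector, weights: Vector) -> float:
    """Stand-in for a trained model: a weighted sum of the features."""
    return sum(w * v for w, v in zip(weights, x))


def early_fusion(image: Vector, text: Vector, joint_weights: Vector) -> float:
    """Concatenate the features first, then apply one joint model."""
    return linear_score(image + text, joint_weights)


def late_fusion(
    image: Vector,
    text: Vector,
    image_weights: Vector,
    text_weights: Vector,
    mix: float = 0.5,
) -> float:
    """Score each modality separately, then blend the two decisions."""
    image_score = linear_score(image, image_weights)
    text_score = linear_score(text, text_weights)
    return mix * image_score + (1.0 - mix) * text_score


image_features = [1.0, 2.0]
text_features = [3.0]

early = early_fusion(image_features, text_features, [0.5, 0.5, 1.0])
late = late_fusion(image_features, text_features, [1.0, 1.0], [2.0], mix=0.25)

print(early)  # 4.5
print(late)   # 5.25
```

In early fusion the joint model can weight any image feature against any text feature, while in late fusion the modalities only meet at the final blending step, which makes each per-modality model easier to train and replace independently.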

Source and External Links

What is a Neural Network? | IBM - Neural networks are machine learning models inspired by the brain, consisting of layers of interconnected artificial neurons that process data, learn from training, and perform tasks like image or speech recognition with high accuracy.

What is a Neural Network? - GeeksforGeeks - Neural networks mimic human brain functions using neurons connected by weighted links, learning patterns through iterative weight and bias adjustment to enable complex decision-making and pattern recognition.

What is a Neural Network? - AWS - Neural networks are deep learning AI systems that model nonlinear relationships in data through layered neurons, enabling computers to learn from errors and make intelligent decisions with minimal human input.
