Generative Adversarial Networks (GANs) pit two neural networks against each other to generate realistic data, and they excel at image synthesis and enhancement. Deep Belief Networks (DBNs) are hierarchical generative models built for unsupervised learning, and they are effective for feature extraction and dimensionality reduction. The sections below compare how the two approaches work and where each one fits.

Why it is important

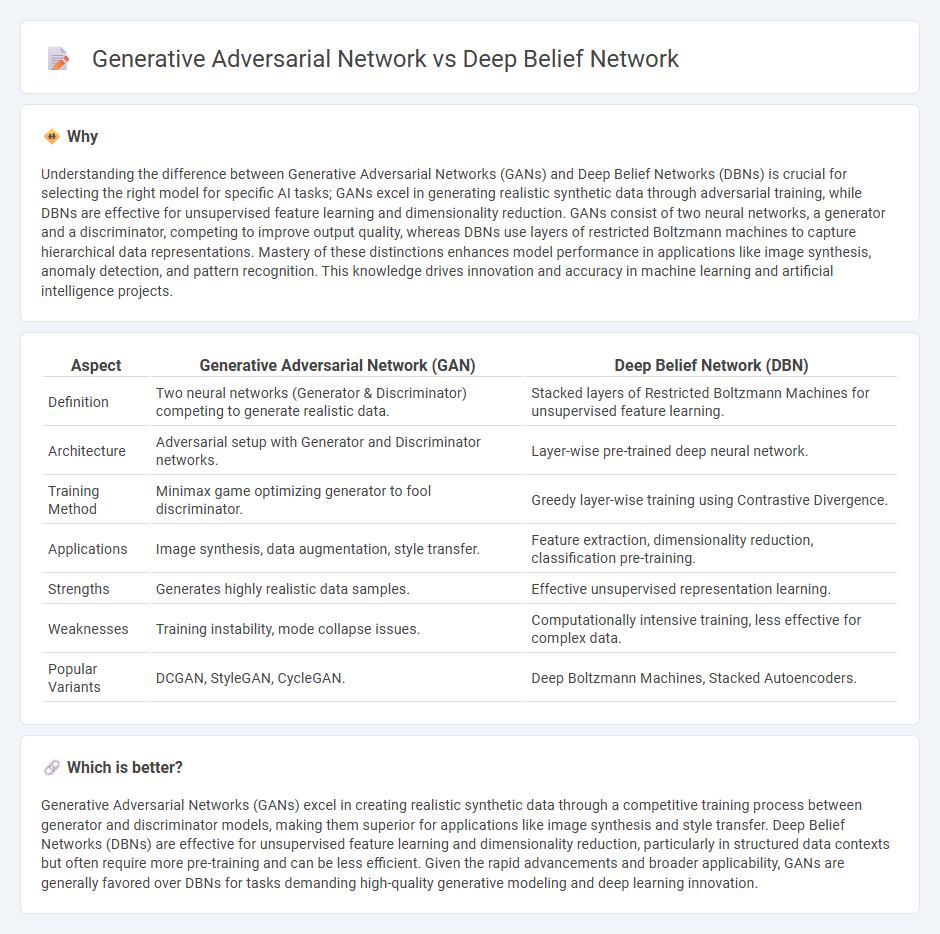

Knowing how Generative Adversarial Networks (GANs) differ from Deep Belief Networks (DBNs) helps in selecting the right model for a given AI task. GANs generate realistic synthetic data through adversarial training, while DBNs are suited to unsupervised feature learning and dimensionality reduction. A GAN consists of two neural networks, a generator and a discriminator, that compete to improve output quality, whereas a DBN stacks restricted Boltzmann machines to capture hierarchical data representations. Matching the model to the task matters in applications such as image synthesis, anomaly detection, and pattern recognition.

Comparison Table

| Aspect | Generative Adversarial Network (GAN) | Deep Belief Network (DBN) |
|---|---|---|
| Definition | Two neural networks (generator and discriminator) competing to generate realistic data. | Stacked layers of Restricted Boltzmann Machines (RBMs) for unsupervised feature learning. |
| Architecture | Adversarial setup with generator and discriminator networks. | Deep network of stacked RBMs, pre-trained one layer at a time. |
| Training Method | Minimax game in which the generator is optimized to fool the discriminator. | Greedy layer-wise training using Contrastive Divergence. |
| Applications | Image synthesis, data augmentation, style transfer. | Feature extraction, dimensionality reduction, pre-training for classifiers. |
| Strengths | Generates highly realistic data samples. | Effective unsupervised representation learning. |
| Weaknesses | Training instability, mode collapse. | Computationally intensive training; generally outperformed by newer architectures on complex, high-dimensional data. |
| Popular Variants and Related Models | DCGAN, StyleGAN, CycleGAN. | Convolutional DBN; closely related models include Deep Boltzmann Machines and stacked autoencoders. |

Which is better?

Neither model is better in every setting. Generative Adversarial Networks (GANs) create realistic synthetic data through a competitive training process between generator and discriminator models, which makes them the stronger choice for applications like image synthesis and style transfer. Deep Belief Networks (DBNs) are effective for unsupervised feature learning and dimensionality reduction, particularly on structured data, but they rely on layer-by-layer pre-training and can be less efficient to train. For tasks that demand high-quality generated samples, GANs are generally favored over DBNs.

Connection

Generative Adversarial Networks (GANs) and Deep Belief Networks (DBNs) are both influential approaches to unsupervised deep learning, and both are generative models. GANs use a game-theoretic framework in which a generator and a discriminator network compete to produce realistic data samples, while DBNs use stacked Restricted Boltzmann Machines to learn hierarchical feature representations. The connection lies in their shared goal of capturing complex data distributions: DBNs do so with an explicit probabilistic model, whereas GANs learn to sample from the distribution through adversarial training.
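The two formulations can be written side by side. The notation here is the one commonly used for these models and is not taken from the text above: $G$ and $D$ are the generator and discriminator, $p_{\text{data}}$ and $p_z$ are the data and noise distributions, $v$ and $h$ are the visible and hidden units of a single RBM with weights $W$ and biases $a$, $b$, and $Z$ is the normalizing constant.

$$
\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

$$
E(v, h) = -a^\top v - b^\top h - v^\top W h, \qquad P(v, h) = \frac{e^{-E(v, h)}}{Z}
$$

The first expression is the adversarial game: the discriminator pushes $V$ up and the generator pushes it down. The second defines an explicit probability over configurations of one RBM layer, which is the sense in which a DBN is a probabilistic model.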

Key Terms

Layered architecture (Deep Belief Network)

Deep Belief Networks (DBNs) use a layered architecture in which multiple Restricted Boltzmann Machines (RBMs) are stacked and trained through unsupervised pre-training to learn hierarchical feature representations. Each layer in a DBN captures increasingly abstract features, which supports dimensionality reduction and pattern recognition in complex datasets.
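As a rough illustration of stacking RBMs, here is a minimal NumPy sketch. It assumes binary units, one-step contrastive divergence (CD-1), full-batch updates, and toy random data. The `RBM` class, the `train_dbn` helper, and all hyperparameters are hypothetical names and values chosen for this example, not a reference implementation.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

class RBM:
    """Binary restricted Boltzmann machine trained with CD-1 (illustrative)."""

    def __init__(self, n_visible, n_hidden, rng):
        self.rng = rng
        self.W = rng.normal(0.0, 0.01, size=(n_visible, n_hidden))
        self.a = np.zeros(n_visible)  # visible biases
        self.b = np.zeros(n_hidden)   # hidden biases

    def hidden_probs(self, v):
        return sigmoid(v @ self.W + self.b)

    def visible_probs(self, h):
        return sigmoid(h @ self.W.T + self.a)

    def cd1_step(self, v0, lr=0.1):
        # Positive phase: hidden units driven by the data.
        ph0 = self.hidden_probs(v0)
        h0 = (self.rng.random(ph0.shape) < ph0).astype(float)
        # Negative phase: one Gibbs step down and back up.
        # Probabilities (not samples) are used for the reconstruction,
        # a common simplification assumed here.
        pv1 = self.visible_probs(h0)
        ph1 = self.hidden_probs(pv1)
        n = v0.shape[0]
        self.W += lr * (v0.T @ ph0 - pv1.T @ ph1) / n
        self.a += lr * (v0 - pv1).mean(axis=0)
        self.b += lr * (ph0 - ph1).mean(axis=0)

def train_dbn(data, layer_sizes, epochs=50, seed=0):
    """Greedy layer-wise training: each RBM is fit on the layer below."""
    rng = np.random.default_rng(seed)
    rbms, x = [], data
    for n_hidden in layer_sizes:
        rbm = RBM(x.shape[1], n_hidden, rng)
        for _ in range(epochs):
            rbm.cd1_step(x)
        rbms.append(rbm)
        x = rbm.hidden_probs(x)  # hidden layer becomes the next visible layer
    return rbms, x

# Toy binary data: 32 samples with 12 features each (made up for the demo).
demo_rng = np.random.default_rng(1)
data = (demo_rng.random((32, 12)) < 0.5).astype(float)
rbms, features = train_dbn(data, layer_sizes=[8, 4])
print(features.shape)  # (32, 4)
```

Each RBM is trained to completion before the next one sees any data, which is what "greedy" refers to. The final `features` array is the reduced representation produced by the top layer.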

Generator-Discriminator (Generative Adversarial Network)

Generative Adversarial Networks (GANs) consist of a generator that creates synthetic data samples and a discriminator that evaluates their authenticity. Each network's training signal comes from the other, so the system improves through adversarial training. Deep Belief Networks (DBNs) are layered probabilistic models that learn hierarchical representations via unsupervised pre-training, and they have no counterpart to this adversarial component.
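To make the two roles concrete, the sketch below computes the losses that adversarial training alternates between, given discriminator outputs on real and generated batches. It assumes the discriminator outputs probabilities in (0, 1) and uses the non-saturating generator loss, a widely used variant of the original minimax form. The function name `gan_losses` and the example numbers are illustrative only.

```python
import numpy as np

def gan_losses(d_real, d_fake, eps=1e-12):
    """Return (discriminator_loss, generator_loss) for one batch.

    d_real: discriminator probabilities on real samples, D(x)
    d_fake: discriminator probabilities on generated samples, D(G(z))
    """
    d_real = np.clip(d_real, eps, 1.0 - eps)
    d_fake = np.clip(d_fake, eps, 1.0 - eps)
    # Discriminator: label real samples as 1 and generated samples as 0.
    d_loss = -(np.mean(np.log(d_real)) + np.mean(np.log(1.0 - d_fake)))
    # Generator (non-saturating form): make the discriminator say "real".
    g_loss = -np.mean(np.log(d_fake))
    return d_loss, g_loss

# A discriminator that cannot tell real from fake outputs 0.5 everywhere.
balanced_d, balanced_g = gan_losses(np.full(4, 0.5), np.full(4, 0.5))

# A confident discriminator: low loss for it, high loss for the generator.
confident_d, confident_g = gan_losses(np.array([0.9, 0.8]), np.array([0.2, 0.1]))
print(confident_d < balanced_d, confident_g > balanced_g)  # True True
```

In a full training loop, one step would lower `d_loss` with respect to the discriminator's parameters and the next would lower `g_loss` with respect to the generator's. That alternation is the competition described above.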

Unsupervised learning

Deep Belief Networks (DBNs) use layered Restricted Boltzmann Machines to capture complex data distributions through hierarchical feature learning, which makes them probabilistic generative models that can be trained without labels. Generative Adversarial Networks (GANs) use two neural networks, the generator and the discriminator, in a competitive framework to produce highly realistic synthetic data, learning the underlying data distribution without labels as well.

Source and External Links

Deep Belief Networks (DBNs) explained - A Deep Belief Network is a stack of multiple Restricted Boltzmann Machines (RBMs) where each RBM consists of a visible layer and a hidden layer, and the hidden layer of one RBM acts as the visible layer for the next, enabling the network to learn complex representations by minimizing an energy function that defines the relationship between layers.

Explanation of Deep Belief Network (DBN) - DBNs are deep learning architectures made of layers of RBMs that are trained one at a time without labels. They can then be used for supervised tasks like classification by building on the hierarchical features learned through the stacked RBMs.

Deep Belief Network (DBN) in Deep Learning - DBNs operate in two phases: unsupervised pre-training where each layer is trained as an RBM to learn probability distributions of input data, and supervised fine-tuning using backpropagation to optimize performance on tasks such as classification or regression.
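The second phase can be sketched in a few lines of NumPy. This example assumes a single hidden layer, a binary label, and a logistic output unit, and it uses small random weights as a stand-in for the result of RBM pre-training. The `fine_tune` function, the toy data, and the hyperparameters are all hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def fine_tune(X, y, W1, b1, epochs=200, lr=0.5, seed=0):
    """Backpropagate through a pre-trained hidden layer plus a new output unit."""
    rng = np.random.default_rng(seed)
    W1, b1 = W1.copy(), b1.copy()               # leave the caller's weights untouched
    w2 = rng.normal(0.0, 0.01, size=W1.shape[1])  # new, untrained output weights
    b2 = 0.0
    n = X.shape[0]
    losses = []
    for _ in range(epochs):
        # Forward pass.
        h = sigmoid(X @ W1 + b1)
        p = sigmoid(h @ w2 + b2)
        losses.append(-np.mean(y * np.log(p + 1e-12)
                               + (1 - y) * np.log(1 - p + 1e-12)))
        # Backward pass for the mean cross-entropy loss.
        d_out = (p - y) / n                      # gradient at the output logit
        d_h = np.outer(d_out, w2) * h * (1 - h)  # gradient at the hidden pre-activation
        # Gradient-descent updates for both layers.
        w2 -= lr * (h.T @ d_out)
        b2 -= lr * d_out.sum()
        W1 -= lr * (X.T @ d_h)
        b1 -= lr * d_h.sum(axis=0)
    return W1, b1, w2, b2, losses

# Toy task (made up): the label equals the first input bit.
rng = np.random.default_rng(0)
X = (rng.random((64, 6)) < 0.5).astype(float)
y = X[:, 0]
# Stand-in for weights that unsupervised pre-training would provide.
W1_pre = rng.normal(0.0, 0.1, size=(6, 4))
b1_pre = np.zeros(4)
W1_ft, b1_ft, w2, b2, losses = fine_tune(X, y, W1_pre, b1_pre)
print(losses[0] > losses[-1])
```

The point of the design is that `W1` and `b1` start from the pre-trained values instead of a random initialization, and backpropagation then adjusts every layer for the supervised objective.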
