Neural Radiance Fields (NeRF) represent a 3D scene as a continuous function, learned by a neural network, that maps a position and viewing direction to color and volume density, which enables high-fidelity novel view synthesis. Point-based rendering instead reconstructs scenes from discrete point cloud data; its quality depends on point density, so it often shows less detail and less smooth transitions between viewpoints than NeRF's implicit function approach. This article compares the two methods, their technical trade-offs, and their applications.

Why it is important

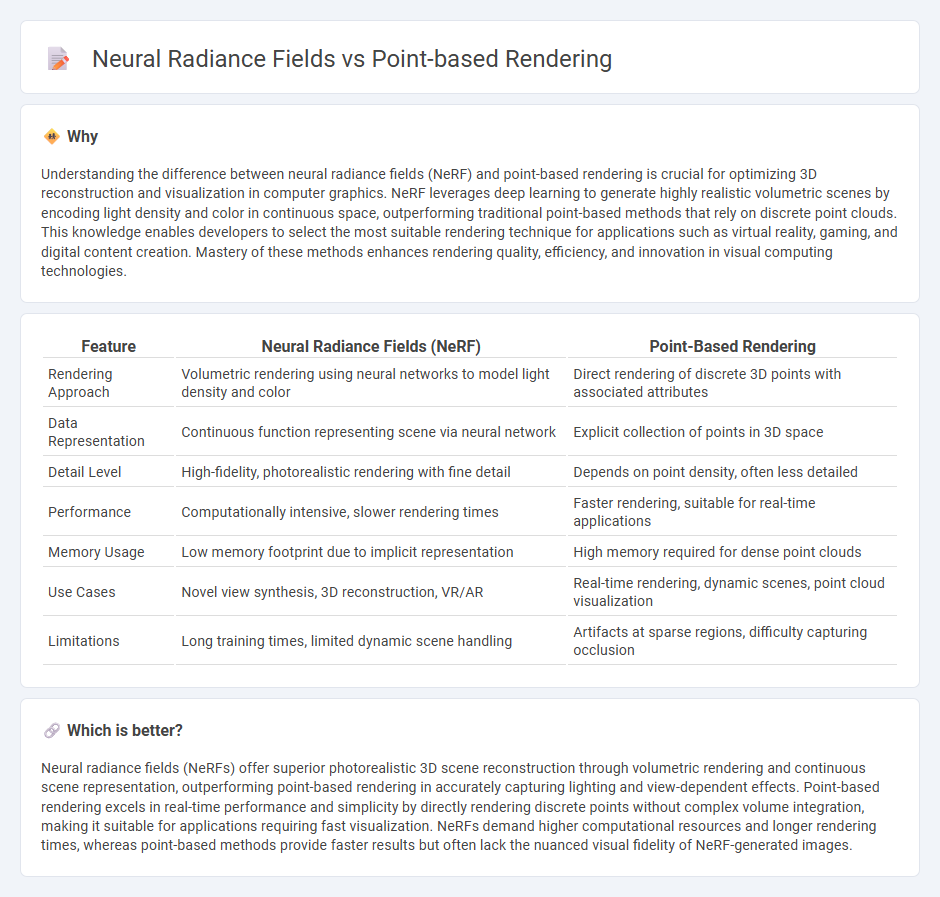

Understanding the difference between neural radiance fields (NeRF) and point-based rendering is crucial for optimizing 3D reconstruction and visualization in computer graphics. NeRF uses deep learning to generate highly realistic volumetric scenes by encoding volume density and color in continuous space, and it typically achieves higher visual fidelity than traditional point-based methods that rely on discrete point clouds. Knowing these trade-offs lets developers select the most suitable rendering technique for applications such as virtual reality, gaming, and digital content creation, balancing rendering quality against efficiency.

Comparison Table

| Feature | Neural Radiance Fields (NeRF) | Point-Based Rendering |
|---|---|---|
| Rendering Approach | Volumetric rendering using neural networks to model volume density and color | Direct rendering of discrete 3D points with associated attributes |
| Data Representation | Continuous function representing scene via neural network | Explicit collection of points in 3D space |
| Detail Level | High-fidelity, photorealistic rendering with fine detail | Depends on point density, often less detailed |
| Performance | Computationally intensive, slower rendering times | Faster rendering, suitable for real-time applications |
| Memory Usage | Low memory footprint due to implicit representation | High memory required for dense point clouds |
| Use Cases | Novel view synthesis, 3D reconstruction, VR/AR | Real-time rendering, dynamic scenes, point cloud visualization |
| Limitations | Long training times, limited dynamic scene handling | Artifacts (holes) in sparse regions, difficulty resolving occlusion |
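The memory row can be made concrete with a rough back-of-the-envelope estimate. The sketch below is illustrative only: the layer widths (63 inputs, eight hidden layers of 256 units, 4 outputs), the point count, and the six floats per point (position plus RGB) are assumptions chosen for the example, not measurements of any particular system.

```python
def mlp_param_count(layer_widths):
    """Count weights and biases of a plain fully connected network."""
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_widths, layer_widths[1:]))


def point_cloud_bytes(num_points, floats_per_point=6, bytes_per_float=4):
    """Storage for points that each hold xyz position plus RGB color."""
    return num_points * floats_per_point * bytes_per_float


# Hypothetical sizes, chosen only for illustration.
assumed_widths = [63] + [256] * 8 + [4]   # simplified NeRF-style MLP
assumed_points = 10_000_000               # a dense scanned point cloud

mlp_bytes = mlp_param_count(assumed_widths) * 4   # 32-bit floats
cloud_bytes = point_cloud_bytes(assumed_points)

print(f"MLP weights: {mlp_bytes / 1e6:.1f} MB")
print(f"Point cloud: {cloud_bytes / 1e6:.1f} MB")
```

Under these assumptions the network weights occupy a few megabytes while the point cloud occupies a few hundred, which is the gap the table summarizes. Real point-cloud formats and NeRF variants differ widely in both directions.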

Which is better?

Neural radiance fields (NeRFs) offer superior photorealistic 3D scene reconstruction through volumetric rendering and continuous scene representation, outperforming point-based rendering in accurately capturing lighting and view-dependent effects. Point-based rendering excels in real-time performance and simplicity by directly rendering discrete points without complex volume integration, making it suitable for applications requiring fast visualization. NeRFs demand higher computational resources and longer rendering times, whereas point-based methods provide faster results but often lack the nuanced visual fidelity of NeRF-generated images.
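To see why the rendering cost differs, it can help to count the work per frame. The following is a rough sketch under assumed numbers (an 800x800 image, 192 samples per ray, two million points); none of these figures comes from a specific benchmark.

```python
def nerf_network_queries(width, height, samples_per_ray):
    """Network evaluations per frame: one per sample on each pixel's ray."""
    return width * height * samples_per_ray


def point_projections(num_points):
    """A basic point renderer projects each point once per frame."""
    return num_points


# Hypothetical workload, chosen only for illustration.
queries = nerf_network_queries(width=800, height=800, samples_per_ray=192)
projections = point_projections(num_points=2_000_000)

print(f"NeRF network queries per frame: {queries:,}")
print(f"Point projections per frame:    {projections:,}")
```

Each NeRF query is a full pass through the network, whereas each point projection is a handful of arithmetic operations, so the practical gap is larger than the raw counts suggest.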

Connection

Neural radiance fields (NeRF) and point-based rendering both represent and synthesize 3D scenes from samples taken in space. NeRF uses a continuous volumetric scene representation: a neural network estimates radiance and density at any point, and rendering queries it at sample points along each camera ray. Point-based rendering stores discrete points as primitives and renders them directly. The connection lies in this shared reliance on point samples; the two approaches differ in whether the samples are stored explicitly or generated on demand from a learned function.
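The role of point samples on the NeRF side can be sketched as follows. This is a minimal illustration of stratified sampling along one camera ray; the function name, the near and far bounds, and the sample count are hypothetical choices for the example.

```python
import random


def sample_points_along_ray(origin, direction, near, far, num_samples, rng):
    """Stratified sampling: one random depth inside each equal-width bin."""
    bin_width = (far - near) / num_samples
    depths, points = [], []
    for i in range(num_samples):
        # rng.random() is in [0, 1), so t stays inside bin i.
        t = near + (i + rng.random()) * bin_width
        depths.append(t)
        points.append(tuple(o + t * d for o, d in zip(origin, direction)))
    return depths, points


rng = random.Random(0)  # fixed seed so the example is repeatable
depths, points = sample_points_along_ray(
    origin=(0.0, 0.0, 0.0), direction=(0.0, 0.0, 1.0),
    near=2.0, far=6.0, num_samples=8, rng=rng,
)
print(len(points), "sample points between depth", depths[0], "and", depths[-1])
```

A NeRF renderer would evaluate its network at each of these points, whereas a point-based renderer starts from points that already exist in the dataset.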

Key Terms

Point Clouds

Point-based rendering excels at visualizing point clouds by directly projecting points with associated attributes such as color and normal vectors, which enables real-time performance and works even when the data is sparse. Neural radiance fields (NeRFs) offer superior photorealistic quality through continuous volumetric representation and complex light modeling, but they require expensive computation and dense sampling, making them less practical for dynamic or large-scale point cloud scenarios.
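Direct projection of points can be sketched in a few lines. The example below assumes a pinhole camera at the origin looking down the +z axis and resolves visibility with a per-pixel depth buffer; the function name, focal length, and image size are hypothetical, and real renderers draw splats larger than one pixel to fill gaps.

```python
import math


def project_points(points, colors, focal, width, height):
    """Project camera-space points and keep the nearest point per pixel."""
    depth = [[math.inf] * width for _ in range(height)]
    image = [[(0, 0, 0)] * width for _ in range(height)]
    for (x, y, z), color in zip(points, colors):
        if z <= 0:
            continue  # point is behind the camera
        u = math.floor(focal * x / z + width / 2)
        v = math.floor(focal * y / z + height / 2)
        # Depth test: only overwrite the pixel if this point is closer.
        if 0 <= u < width and 0 <= v < height and z < depth[v][u]:
            depth[v][u] = z
            image[v][u] = color
    return image, depth


RED, BLUE, GREEN = (255, 0, 0), (0, 0, 255), (0, 255, 0)
image, depth = project_points(
    points=[(0.0, 0.0, 2.0), (0.0, 0.0, 1.0), (0.0, 0.0, -1.0)],
    colors=[RED, BLUE, GREEN],
    focal=2.0, width=4, height=4,
)
print(image[2][2])  # color of the nearest point along the central ray
```

Pixels that no point lands on keep the background color, which is how the holes in sparse regions arise.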

Volumetric Rendering

Point-based rendering approximates volumetric effects by blending points that carry color and opacity attributes, enabling efficient visualization of sparse data. Neural Radiance Fields (NeRF) use deep neural networks to model light emission and absorption within a continuous 3D volume, and render a pixel by accumulating color along the camera ray, resulting in high-fidelity, photorealistic volumetric rendering.
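The accumulation along a ray is usually written as a discrete sum, C = Σ T_i (1 − exp(−σ_i δ_i)) c_i, where σ_i is the density at sample i, δ_i is the spacing to the next sample, c_i is the color, and T_i is the fraction of light that has not yet been absorbed. A minimal sketch, with made-up densities and colors standing in for network outputs:

```python
import math


def composite_ray(densities, colors, deltas):
    """Alpha-composite samples front to back along one ray."""
    transmittance = 1.0          # fraction of light not yet absorbed
    pixel = [0.0, 0.0, 0.0]
    weights = []
    for sigma, color, delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)   # opacity of this segment
        weight = transmittance * alpha
        weights.append(weight)
        for k in range(3):
            pixel[k] += weight * color[k]
        transmittance *= 1.0 - alpha
    return tuple(pixel), weights


# Hypothetical samples: empty space, then a semi-transparent green region,
# then a dense blue surface behind it.
pixel, weights = composite_ray(
    densities=[0.0, 2.0, 50.0],
    colors=[(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)],
    deltas=[0.5, 0.5, 0.5],
)
print(pixel, sum(weights))
```

The weights never sum to more than one, and samples behind a dense region contribute almost nothing, which is how occlusion emerges without an explicit depth test.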

Implicit Neural Representations

Implicit Neural Representations, such as Neural Radiance Fields (NeRFs), encode 3D scenes by mapping spatial coordinates and view directions to color and density values using deep neural networks, enabling photorealistic volume rendering. Point-based rendering relies on discrete sets of points with attributes like color and normals, which can be less flexible and often produces artifacts in complex geometry or with view-dependent effects.
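Before coordinates reach the network, NeRF-style models typically expand each one into sines and cosines at increasing frequencies so that the network can represent fine detail. The sketch below shows that encoding step only; the frequency counts (10 for position, 4 for direction) follow values commonly cited for the original NeRF and should be treated as assumptions here, and the function names are hypothetical.

```python
import math


def positional_encoding(value, num_frequencies):
    """Expand one coordinate into sin/cos pairs at frequencies 2^k * pi."""
    encoded = []
    for k in range(num_frequencies):
        freq = (2.0 ** k) * math.pi
        encoded.append(math.sin(freq * value))
        encoded.append(math.cos(freq * value))
    return encoded


def encode_input(position, direction, pos_freqs=10, dir_freqs=4):
    """Build the feature vector for one (position, view direction) query."""
    features = []
    for p in position:
        features.extend(positional_encoding(p, pos_freqs))
    for d in direction:
        features.extend(positional_encoding(d, dir_freqs))
    return features


features = encode_input(position=(0.1, -0.3, 0.7), direction=(0.0, 0.0, 1.0))
print(len(features), "input features for the network")
```

The network itself, which maps these features to a color and a density, is omitted; any multilayer perceptron with matching input width could fill that role in this sketch.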

Source and External Links

High-Quality Point-Based Rendering on Modern GPUs - Point-based rendering is a technique that represents surfaces via points (surfels) rather than meshes, offering potential performance and visual quality improvements by rendering these points directly with filtering, and leveraging modern GPU capabilities for efficient and high-quality results.

Point-Based Rendering - Computer Graphics Laboratory - ETH Zurich - This rendering approach uses point clouds where each point (surfel) encodes a small surface patch's position, color, normal, and radius, allowing direct rendering of scanned 3D data without meshing, facilitating interpolation between points for smoother surfaces.

Neural Point-Based Graphics - VIOLET projects index - Modern advances combine point-based rendering with neural networks by associating learnable descriptors with 3D points and using deep learning to produce photorealistic views of scenes efficiently, enabling rendering from arbitrary viewpoints with high fidelity.

dowidth.com
