Algorithmic bias auditing systematically evaluates machine learning models and AI systems to identify biases that affect their decisions. Disparate impact analysis measures the real-world consequences of algorithmic decisions for protected groups, with a focus on fairness and equity.

Why it is important

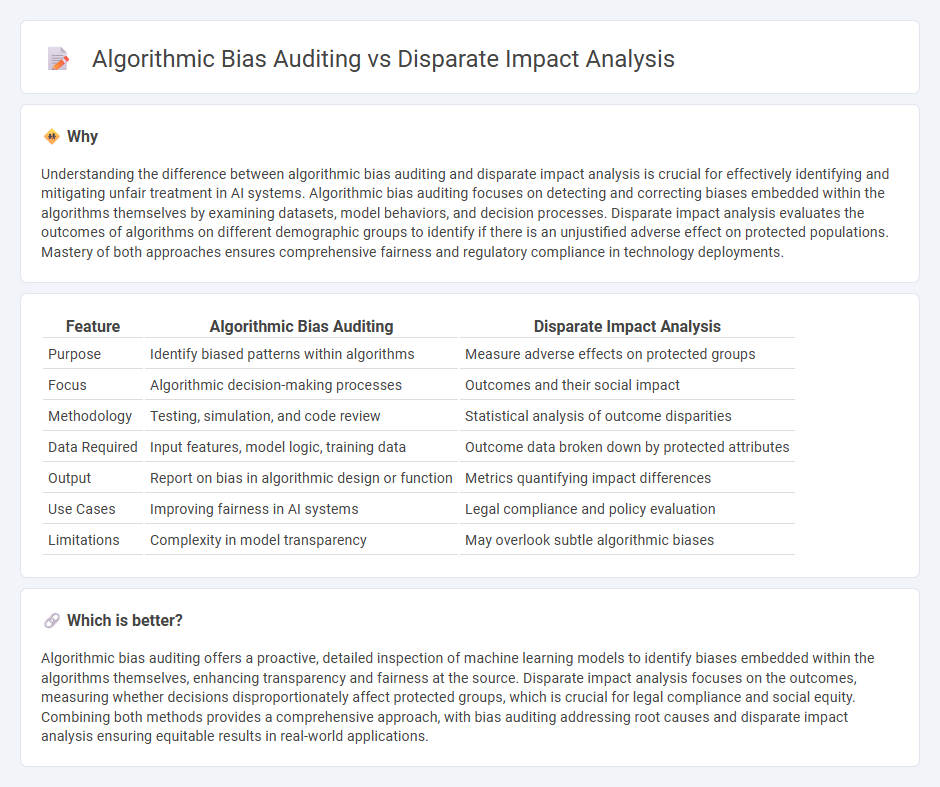

Understanding the difference between algorithmic bias auditing and disparate impact analysis is crucial for identifying and mitigating unfair treatment in AI systems. Algorithmic bias auditing detects and corrects biases embedded in the algorithms themselves by examining datasets, model behavior, and decision processes. Disparate impact analysis evaluates an algorithm's outcomes across demographic groups to determine whether protected populations suffer an unjustified adverse effect. Using both approaches gives broader coverage of fairness risks and supports regulatory compliance in technology deployments.

Comparison Table

| Feature | Algorithmic Bias Auditing | Disparate Impact Analysis |
|---|---|---|
| Purpose | Identify biased patterns within algorithms | Measure adverse effects on protected groups |
| Focus | Algorithmic decision-making processes | Outcomes and their social impact |
| Methodology | Testing, simulation, and code review | Statistical analysis of outcome disparities |
| Data Required | Input features, model logic, training data | Outcome data broken down by protected attributes |
| Output | Report on bias in algorithmic design or function | Metrics quantifying impact differences |
| Use Cases | Improving fairness in AI systems | Legal compliance and policy evaluation |
| Limitations | Complexity in model transparency | May overlook subtle algorithmic biases |

Which is better?

Algorithmic bias auditing offers a proactive, detailed inspection of machine learning models to identify biases embedded within the algorithms themselves, enhancing transparency and fairness at the source. Disparate impact analysis focuses on the outcomes, measuring whether decisions disproportionately affect protected groups, which is crucial for legal compliance and social equity. Combining both methods provides a comprehensive approach, with bias auditing addressing root causes and disparate impact analysis ensuring equitable results in real-world applications.

Connection

Algorithmic bias auditing identifies and measures biases in AI systems by evaluating decision patterns across different demographic groups. Disparate impact analysis assesses the extent to which these biases lead to unfair outcomes or discrimination against protected groups. Together, they provide a comprehensive approach to detecting, quantifying, and mitigating unfair algorithmic treatment in technology applications.

Key Terms

Fairness Metrics

Disparate impact analysis evaluates fairness by measuring differences in outcomes across protected groups, primarily using metrics such as statistical parity and the disparate impact ratio to detect unintentional discrimination. Algorithmic bias auditing has a broader scope: it applies multiple fairness metrics, such as equal opportunity, predictive parity, and calibration, to assess and mitigate biases within machine learning models.
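The metrics named above can be illustrated with a minimal Python sketch. The function names, the binary encoding (1 for a favorable decision or a truly qualified case, 0 otherwise), and the sample data are assumptions made for illustration; they do not come from any particular auditing library.

```python
from typing import Sequence


def selection_rate(decisions: Sequence[int]) -> float:
    """Fraction of favorable (1) decisions in a group."""
    return sum(decisions) / len(decisions)


def disparate_impact_ratio(protected: Sequence[int], reference: Sequence[int]) -> float:
    """Selection rate of the protected group divided by that of the reference group."""
    return selection_rate(protected) / selection_rate(reference)


def statistical_parity_difference(protected: Sequence[int], reference: Sequence[int]) -> float:
    """Selection rate of the protected group minus that of the reference group."""
    return selection_rate(protected) - selection_rate(reference)


def true_positive_rate(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Share of truly positive cases that received a favorable prediction."""
    preds_for_positives = [p for t, p in zip(y_true, y_pred) if t == 1]
    return sum(preds_for_positives) / len(preds_for_positives)


def equal_opportunity_difference(true_prot, pred_prot, true_ref, pred_ref) -> float:
    """Difference in true positive rates (protected minus reference)."""
    return true_positive_rate(true_prot, pred_prot) - true_positive_rate(true_ref, pred_ref)


# Hypothetical decisions: 3 of 10 favorable vs. 6 of 10 favorable.
protected_decisions = [1, 0, 0, 1, 0, 0, 0, 1, 0, 0]
reference_decisions = [1, 1, 0, 1, 0, 1, 1, 0, 1, 0]

ratio = disparate_impact_ratio(protected_decisions, reference_decisions)
gap = statistical_parity_difference(protected_decisions, reference_decisions)
print(f"disparate impact ratio: {ratio:.2f}")
print(f"statistical parity difference: {gap:.2f}")
```

A ratio well below 1 or a clearly negative difference suggests the protected group receives favorable outcomes less often. US employment guidance commonly uses a four-fifths (0.8) ratio as a rule of thumb, but the appropriate threshold depends on the context and jurisdiction.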

Protected Attributes

Disparate impact analysis examines decision outcomes across protected attributes such as race, gender, and age to identify unintentional discrimination in processes or algorithms. Algorithmic bias auditing systematically tests and evaluates algorithms for fairness, transparency, and bias mitigation, with an emphasis on protecting sensitive demographic groups.
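Breaking outcomes down by a protected attribute can be sketched as follows. The record fields (`gender`, `age_band`, `approved`) and the sample rows are hypothetical, chosen only to show the grouping step; a real analysis would use the attributes and outcome column of the system under review.

```python
from collections import defaultdict


def selection_rates_by(records, attribute, outcome="approved"):
    """Favorable-outcome rate for each value of a protected attribute."""
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for row in records:
        group = row[attribute]
        totals[group] += 1
        if row[outcome]:
            favorable[group] += 1
    return {group: favorable[group] / totals[group] for group in totals}


def impact_ratios(rates):
    """Each group's rate divided by the highest group rate."""
    best = max(rates.values())
    if best == 0:
        raise ValueError("no group has any favorable outcomes")
    return {group: rate / best for group, rate in rates.items()}


# Hypothetical decision log.
records = [
    {"gender": "F", "age_band": "40+", "approved": 0},
    {"gender": "F", "age_band": "40+", "approved": 0},
    {"gender": "F", "age_band": "<40", "approved": 1},
    {"gender": "F", "age_band": "<40", "approved": 0},
    {"gender": "M", "age_band": "40+", "approved": 1},
    {"gender": "M", "age_band": "40+", "approved": 0},
    {"gender": "M", "age_band": "<40", "approved": 1},
    {"gender": "M", "age_band": "<40", "approved": 0},
]

for attribute in ("gender", "age_band"):
    rates = selection_rates_by(records, attribute)
    print(attribute, rates, impact_ratios(rates))
```

Running the same breakdown for each protected attribute in turn makes it easier to see which groups, if any, fall behind the best-performing group.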

Transparency

Disparate impact analysis quantitatively assesses whether a policy or algorithm produces unequal outcomes across protected groups, focusing on statistical evidence of discrimination. Algorithmic bias auditing evaluates transparency in algorithmic design, data sources, and decision-making processes to identify and mitigate hidden biases.
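One common way to look for statistical evidence of an outcome gap is a two-proportion z-test. The text above does not prescribe a specific test, so the sketch below is an assumption chosen for illustration; the function name and the sample counts are hypothetical.

```python
import math


def two_proportion_z_test(fav_a, n_a, fav_b, n_b):
    """Two-sided z-test for a difference in favorable-outcome rates.

    fav_a / n_a and fav_b / n_b are the favorable counts and group sizes.
    Returns (z, p_value) using the pooled standard error.
    """
    rate_a = fav_a / n_a
    rate_b = fav_b / n_b
    pooled = (fav_a + fav_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("outcomes are identical for every case; test is undefined")
    z = (rate_a - rate_b) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: 30 of 100 favorable vs. 60 of 100 favorable.
z, p = two_proportion_z_test(30, 100, 60, 100)
print(f"z = {z:.2f}, p = {p:.5f}")
```

A small p-value indicates that the gap is unlikely to be due to chance alone. It does not by itself establish discrimination, and the normal approximation is unreliable for small groups.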


dowidth.com
