Algorithmic bias auditing identifies and mitigates unfair or discriminatory outcomes in AI systems by examining data sources and decision-making processes. Model interpretability analysis makes AI models more transparent by showing how predictions are generated, using techniques such as feature importance and SHAP values. Both approaches support ethical and transparent AI development.

Why it is important

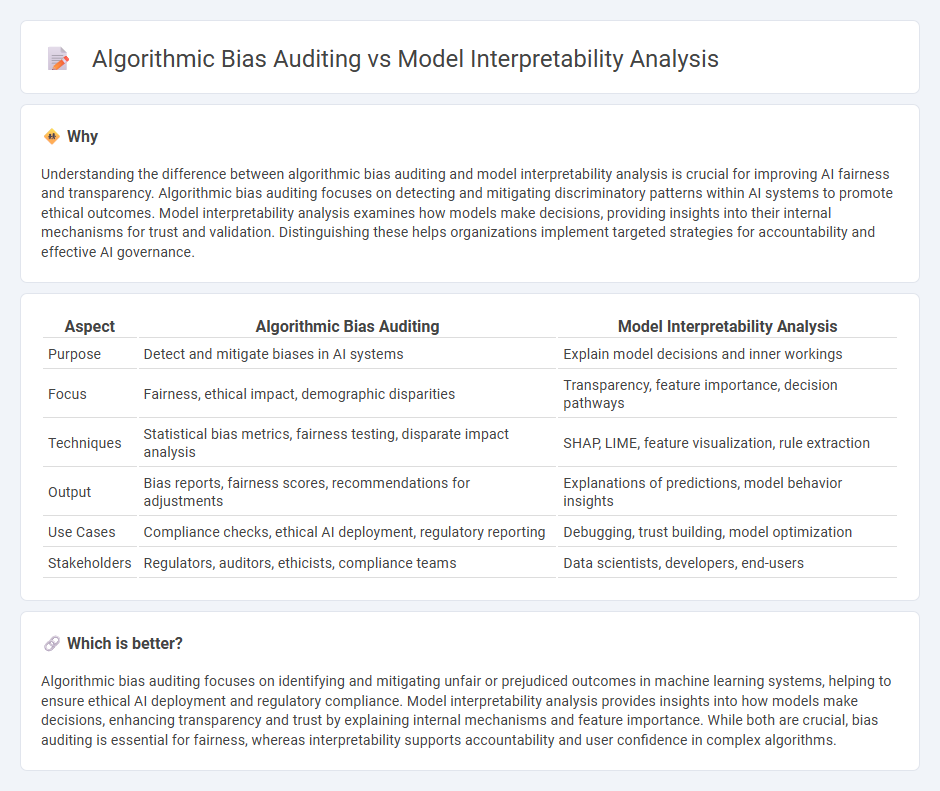

Distinguishing algorithmic bias auditing from model interpretability analysis matters because the two answer different questions. Bias auditing asks whether an AI system produces discriminatory patterns and how to mitigate them. Interpretability analysis asks how a model arrives at its decisions, which supports trust and validation. Keeping the two apart helps organizations choose targeted strategies for accountability and AI governance.

Comparison Table

| Aspect | Algorithmic Bias Auditing | Model Interpretability Analysis |
|---|---|---|
| Purpose | Detect and mitigate biases in AI systems | Explain model decisions and inner workings |
| Focus | Fairness, ethical impact, demographic disparities | Transparency, feature importance, decision pathways |
| Techniques | Statistical bias metrics, fairness testing, disparate impact analysis | SHAP, LIME, feature visualization, rule extraction |
| Output | Bias reports, fairness scores, recommendations for adjustments | Explanations of predictions, model behavior insights |
| Use Cases | Compliance checks, ethical AI deployment, regulatory reporting | Debugging, trust building, model optimization |
| Stakeholders | Regulators, auditors, ethicists, compliance teams | Data scientists, developers, end-users |
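
As one illustration of the "statistical bias metrics" and "fairness scores" listed in the table, the sketch below computes an equal opportunity difference: the gap in true positive rate between two groups. The labels, predictions, and group names are made-up data, and the function names are hypothetical helpers rather than any library's API.

```python
def true_positive_rate(labels, predictions):
    """Share of actual positives that the model also predicted as positive.

    Assumes at least one positive label is present (otherwise this divides by zero).
    """
    hits = [p for y, p in zip(labels, predictions) if y == 1]
    return sum(hits) / len(hits)


def equal_opportunity_difference(labels, predictions, groups, group_a, group_b):
    """TPR of group_a minus TPR of group_b; 0 means parity on this metric."""
    def tpr_for(group):
        ys = [y for y, g in zip(labels, groups) if g == group]
        ps = [p for p, g in zip(predictions, groups) if g == group]
        return true_positive_rate(ys, ps)

    return tpr_for(group_a) - tpr_for(group_b)


# Hypothetical audit data: 1 = positive outcome, groups "a" and "b".
labels      = [1, 1, 1, 1, 0, 1, 1, 1, 1, 0]
predictions = [1, 1, 1, 0, 0, 1, 0, 0, 1, 1]
groups      = ["a"] * 5 + ["b"] * 5

gap = equal_opportunity_difference(labels, predictions, groups, "a", "b")
print(f"Equal opportunity difference (a - b): {gap:.2f}")
```

A positive gap here means qualified members of group "a" are recognized more often than qualified members of group "b". What size of gap is acceptable depends on the application and is a policy decision, not something the metric decides.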

Which is better?

Neither approach is better in general, because they serve different goals. Algorithmic bias auditing identifies and mitigates unfair or prejudiced outcomes in machine learning systems, supporting ethical AI deployment and regulatory compliance. Model interpretability analysis explains how a model reaches its decisions, including which features matter most, which enhances transparency and trust. Both are crucial: bias auditing is essential for fairness, whereas interpretability supports accountability and user confidence in complex algorithms.

Connection

Algorithmic bias auditing and model interpretability analysis are connected through their shared goal of enhancing transparency and fairness in AI systems. Bias auditing identifies disparate impacts by examining model outputs, while interpretability analysis provides insights into how models make decisions, revealing potential sources of bias. Together, these approaches enable developers to diagnose and mitigate unfair treatment across different demographic groups.
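
Examining model outputs for disparate impact can be sketched as a comparison of selection rates between groups. The example below is a minimal illustration with made-up predictions and group labels; the function names and the choice of "a" as the reference group are assumptions for the example.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Return the share of favorable (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(predictions, groups, privileged):
    """Selection rate of each other group divided by the privileged group's rate."""
    rates = selection_rates(predictions, groups)
    reference = rates[privileged]
    return {g: rate / reference for g, rate in rates.items() if g != privileged}


# Hypothetical model outputs (1 = favorable decision) and group membership.
predictions = [1, 1, 1, 0, 1, 0, 0, 1, 0, 0]
groups      = ["a", "a", "a", "a", "a", "b", "b", "b", "b", "b"]

rates = selection_rates(predictions, groups)
ratios = disparate_impact_ratio(predictions, groups, privileged="a")
print(rates)
print(ratios)
```

A commonly cited rule of thumb (the "four-fifths rule") flags ratios below 0.8 for closer review. A low ratio shows that outcomes differ between groups but not why, which is where interpretability analysis comes in.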

Key Terms

Model Interpretability Analysis

Model interpretability analysis examines machine learning models to understand how input features influence predictions, which enhances transparency and trust in AI systems. Techniques such as SHAP values, LIME, and partial dependence plots show how a model's output responds to its inputs, making it easier to detect overfitting or unintended correlations.
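
Of the techniques named above, partial dependence is simple enough to sketch by hand: force one feature to a fixed value in every row, average the model's predictions, and repeat over a grid of values. The model, data, and function name below are hypothetical and stand in for a trained model and a real dataset.

```python
def partial_dependence(model, rows, feature_index, grid):
    """Average prediction when one feature is forced to each grid value."""
    curve = []
    for value in grid:
        total = 0.0
        for row in rows:
            modified = list(row)
            modified[feature_index] = value  # overwrite only the feature under study
            total += model(modified)
        curve.append(total / len(rows))
    return curve


# Hypothetical scoring model over two features (income, tenure).
def toy_model(row):
    income, tenure = row
    return 2.0 * income + 0.5 * tenure


rows = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
grid = [0.0, 1.0, 2.0]
curve = partial_dependence(toy_model, rows, feature_index=0, grid=grid)
print(list(zip(grid, curve)))
```

Plotting `curve` against `grid` gives the partial dependence plot for that feature. For this linear toy model the curve is a straight line; a sharp jump or an unexpected shape in a real model's curve is one sign of an unintended correlation worth investigating.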

Feature Importance

Feature importance is central to model interpretability analysis because it shows how strongly each variable influences a model's predictions. Algorithmic bias auditing uses feature importance to detect features that affect some demographic groups disproportionately, which can reveal fairness issues. Combining the two strengthens both interpretability and bias mitigation.
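
One model-agnostic way to estimate feature importance is permutation importance: shuffle a single feature column and measure how much accuracy drops. The sketch below is a minimal version using only the standard library; the classifier, data, and function names are hypothetical, and permutation importance is only one of several importance measures.

```python
import random


def accuracy(model, rows, labels):
    """Fraction of rows where the model's prediction matches the label."""
    correct = sum(1 for row, y in zip(rows, labels) if model(row) == y)
    return correct / len(rows)


def permutation_importance(model, rows, labels, feature_index, repeats=20, seed=0):
    """Mean drop in accuracy after shuffling one feature column."""
    rng = random.Random(seed)  # seeded so repeated runs give the same estimate
    baseline = accuracy(model, rows, labels)
    drops = []
    for _ in range(repeats):
        column = [row[feature_index] for row in rows]
        rng.shuffle(column)
        shuffled = [
            row[:feature_index] + (value,) + row[feature_index + 1:]
            for row, value in zip(rows, column)
        ]
        drops.append(baseline - accuracy(model, shuffled, labels))
    return sum(drops) / repeats


# Hypothetical classifier that only looks at feature 0 and ignores feature 1.
def toy_classifier(row):
    return 1 if row[0] > 0.5 else 0


rows = [(0.1, 5.0), (0.9, 3.0), (0.2, 8.0), (0.8, 1.0), (0.3, 7.0), (0.7, 2.0)]
labels = [toy_classifier(row) for row in rows]

importance_used = permutation_importance(toy_classifier, rows, labels, feature_index=0)
importance_ignored = permutation_importance(toy_classifier, rows, labels, feature_index=1)
print(importance_used, importance_ignored)
```

For a bias audit, the same function could be run separately on the rows belonging to each demographic group. A feature whose importance differs sharply between groups is a candidate for closer review.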

SHAP Values

In model interpretability analysis, SHAP values quantify how much each feature contributes to an individual prediction, which makes the output of complex machine learning models easier to understand. Algorithmic bias auditing uses SHAP to detect disproportionate feature influences linked to sensitive attributes, helping to identify and mitigate unfair model behavior.
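
SHAP values are grounded in Shapley values from cooperative game theory. For a model with only a few features, Shapley values can be computed exactly by enumerating every coalition of features, as in the sketch below. This is not the `shap` library's API: the model, the all-zero baseline, and the function name are assumptions for illustration, and features outside a coalition are simply replaced by their baseline value.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, instance, baseline):
    """Exact Shapley values by enumerating every feature coalition.

    Features outside a coalition take their baseline value.
    Cost grows exponentially with the number of features.
    """
    n = len(instance)

    def value(coalition):
        mixed = [instance[i] if i in coalition else baseline[i] for i in range(n)]
        return model(mixed)

    contributions = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size that exclude feature i.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                coalition = set(subset)
                phi += weight * (value(coalition | {i}) - value(coalition))
        contributions.append(phi)
    return contributions


# Hypothetical model with an interaction between features 0 and 2.
def toy_model(x):
    return 3.0 * x[0] + 2.0 * x[1] + x[0] * x[2]


instance = [1.0, 2.0, 4.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, instance, baseline)
print(phi)
```

The contributions sum to the difference between the prediction for `instance` and the prediction for `baseline`, and the interaction term is split evenly between the two features involved. In an audit, a large contribution from a sensitive attribute, or from a feature that acts as a proxy for one, is a signal to investigate further.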

dowidth.com
