Federated learning enables decentralized model training by aggregating model updates from many devices without transferring their raw data, which improves privacy and security. On-device AI runs models locally on individual devices, reducing latency and reliance on cloud connectivity while keeping user data on the device. The sections below compare the two approaches and explain how they complement each other.

Why it is important

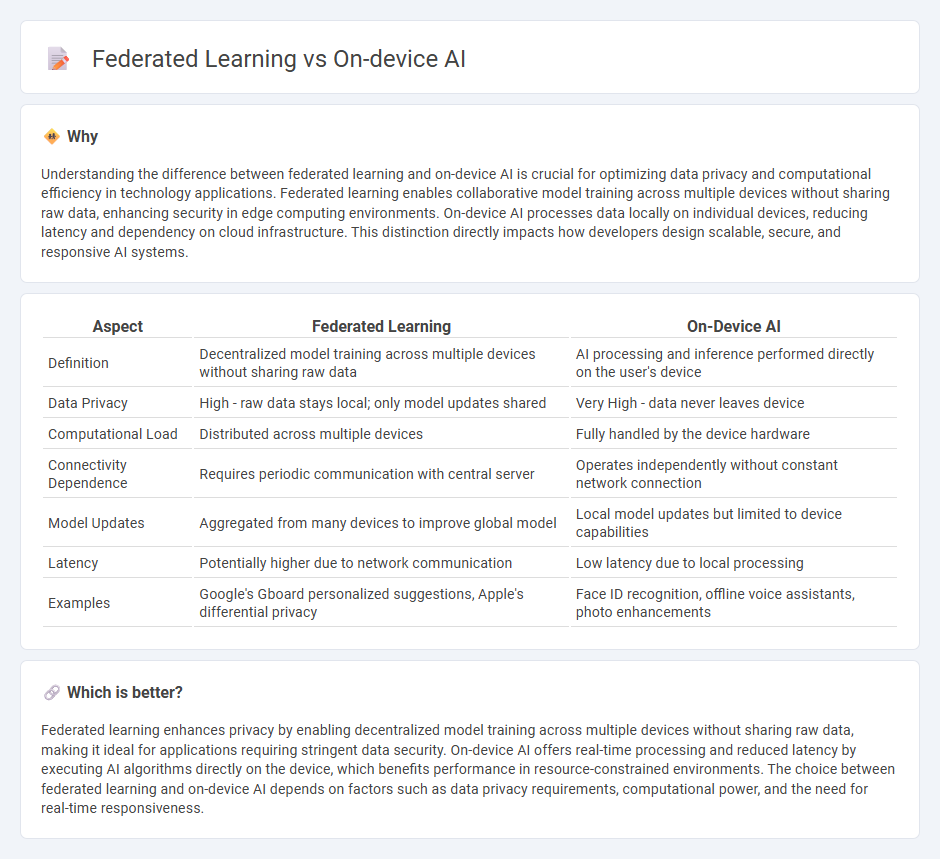

Understanding the difference between federated learning and on-device AI helps developers balance data privacy against computational efficiency. Federated learning trains a shared model collaboratively across many devices without sharing raw data, which strengthens security in edge computing environments. On-device AI processes data locally on a single device, reducing latency and dependency on cloud infrastructure. This distinction shapes how developers design scalable, secure, and responsive AI systems.

Comparison Table

| Aspect | Federated Learning | On-Device AI |
|---|---|---|
| Definition | Decentralized model training across many devices without sharing raw data | AI processing and inference performed directly on the user's device |
| Data Privacy | High: raw data stays local; only model updates are shared (updates can still leak some information) | Very high: data never leaves the device |
| Computational Load | Training distributed across devices; a central server aggregates the updates | Handled entirely by the device hardware |
| Connectivity Dependence | Requires periodic communication with a central server during training | Operates without a constant network connection |
| Model Updates | Aggregated from many devices to improve a shared global model | Typically fixed or updated locally, limited by device capabilities |
| Latency | Training rounds add network delay; inference with the resulting model can still run locally | Low latency due to local processing |
| Examples | Google's Gboard next-word suggestions (Apple's differential privacy is a related, complementary technique) | Face ID, offline voice assistants, photo enhancement |

Which is better?

Neither approach is strictly better. Federated learning strengthens privacy by training a shared model across many devices without collecting raw data, which suits applications with strict data-security requirements. On-device AI delivers real-time responses by running models directly on the device, which helps when connectivity is limited or latency matters, although it is bounded by the device's compute, memory, and battery. The choice depends on privacy requirements, available computational power, and the need for real-time responsiveness, and the two are often used together.

Connection

Federated learning complements on-device AI: models are trained locally across many devices without sharing raw data, and only model updates are sent for aggregation, which enhances privacy. On-device AI then uses the resulting global model to run real-time inference directly on smartphones, wearables, or IoT devices, avoiding a network round trip. Together they enable personalized user experiences while maintaining data security and supporting compliance with privacy regulations.
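To make this division of labor concrete, here is a minimal, self-contained Python sketch under simplifying assumptions: each simulated "device" holds private (x, y) pairs for a 1-D linear model y = w·x + b, trains locally with plain gradient descent, and sends back only its weights and sample count. The server averages those weights (FedAvg-style), and every device then runs inference with its local copy of the global model. The function names (`local_train`, `aggregate`, `predict`, `simulate`) and the synthetic data are hypothetical; there is no real networking, client sampling, or privacy protection.

```python
import random

# Hypothetical toy setup: each "device" is a list of private (x, y) pairs.
# Only weights and sample counts ever leave a device in this sketch.


def local_train(weights, data, lr=0.05, epochs=20):
    """Run gradient descent on one device's private data (MSE loss)."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b), n


def aggregate(results):
    """Average client weights, weighted by each client's sample count."""
    total = sum(n for _, n in results)
    w = sum(wts[0] * n for wts, n in results) / total
    b = sum(wts[1] * n for wts, n in results) / total
    return (w, b)


def predict(weights, x):
    """On-device inference using only the local copy of the global model."""
    w, b = weights
    return w * x + b


def simulate(num_devices=5, rounds=30, seed=0):
    rng = random.Random(seed)
    # Each device draws its own private samples of y = 3x + 1 plus noise.
    devices = []
    for _ in range(num_devices):
        xs = [rng.uniform(-1, 1) for _ in range(rng.randint(10, 40))]
        devices.append([(x, 3 * x + 1 + rng.gauss(0, 0.1)) for x in xs])

    global_weights = (0.0, 0.0)
    for _ in range(rounds):
        # Server broadcasts global_weights; each device trains locally.
        results = [local_train(global_weights, data) for data in devices]
        # Only (weights, count) pairs come back and are averaged.
        global_weights = aggregate(results)
    return global_weights


if __name__ == "__main__":
    model = simulate()
    print("global model:", model)
    print("on-device prediction for x=0.5:", predict(model, 0.5))
```

With these settings the global model should land close to the generating parameters (w near 3, b near 1). A production system would replace the in-memory lists with a federated learning framework that handles device selection, communication, and dropped clients.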

Key Terms

Edge Computing

On-device AI processes data locally on edge devices, reducing latency and preserving user privacy by minimizing data transmission. Federated learning lets multiple edge devices collaboratively train a global model while keeping raw data decentralized, improving security and avoiding bulk uploads of raw data in edge computing environments.

Model Aggregation

Model aggregation in federated learning combines locally trained models or model updates from many devices into a single global model, typically by averaging parameters weighted by each device's number of training examples (as in Federated Averaging, or FedAvg). Raw data stays on each device, and protocols such as secure aggregation can further hide individual updates from the server. On-device AI, by contrast, processes data and, where supported, updates models on individual devices without centralized aggregation, prioritizing real-time inference and low latency.
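The weighted averaging described above fits in a few lines of Python. This sketch assumes each client sends a flat list of parameters plus its local sample count; the names `federated_average` and `ClientUpdate` are hypothetical, and the code implements only the FedAvg weighting rule, not secure aggregation or any particular framework's API.

```python
from typing import List, Sequence, Tuple

# Hypothetical payload: (flat list of model parameters, local sample count).
ClientUpdate = Tuple[Sequence[float], int]


def federated_average(updates: List[ClientUpdate]) -> List[float]:
    """Average client parameters, weighting each client by its sample count."""
    if not updates:
        raise ValueError("need at least one client update")
    dim = len(updates[0][0])
    if any(len(params) != dim for params, _ in updates):
        raise ValueError("all clients must send parameters of the same shape")
    total = sum(n for _, n in updates)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    return [
        sum(params[i] * n for params, n in updates) / total
        for i in range(dim)
    ]


# Three devices holding 100, 300, and 600 local examples.
updates = [([1.0, 0.0], 100), ([2.0, 1.0], 300), ([4.0, 2.0], 600)]
print(federated_average(updates))  # → [3.1, 1.5]
```

Weighting by sample count keeps a device with only a handful of examples from pulling the global model as strongly as one with hundreds.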

Data Privacy

On-device AI processes data locally on user devices, minimizing data exposure by keeping sensitive information off centralized servers. Federated learning aggregates model updates from many devices without transferring raw data, improving model accuracy collaboratively while preserving individual privacy. Model updates can still reveal some information about the underlying data, so federated systems often add protections such as update clipping, added noise (differential privacy), or secure aggregation.
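Because a federated client uploads a model update rather than raw data, a common hardening step, used for example in differentially private federated learning, is to bound each update's size before it leaves the device and optionally add noise. The Python sketch below assumes flat parameter lists; `private_update`, `clip_norm`, and `noise_std` are hypothetical names, and the default values are illustrative rather than calibrated to any formal privacy guarantee.

```python
import math
import random
from typing import List, Optional, Sequence


def private_update(
    local_weights: Sequence[float],
    global_weights: Sequence[float],
    clip_norm: float = 1.0,
    noise_std: float = 0.0,
    rng: Optional[random.Random] = None,
) -> List[float]:
    """Build a client's upload: a clipped, optionally noised weight delta.

    clip_norm and noise_std are illustrative knobs; meeting a formal
    differential-privacy guarantee would also need a privacy accountant,
    which this sketch does not include.
    """
    # The delta describes how local training moved the model, not the data.
    delta = [lw - gw for lw, gw in zip(local_weights, global_weights)]
    norm = math.sqrt(sum(d * d for d in delta))
    # Rescale so the delta's L2 norm is at most clip_norm.
    if norm > clip_norm:
        delta = [d * clip_norm / norm for d in delta]
    # Optionally add Gaussian noise to each coordinate.
    if noise_std > 0:
        rng = rng or random.Random()
        delta = [d + rng.gauss(0.0, noise_std) for d in delta]
    return delta


print(private_update([3.0, 4.0], [0.0, 0.0], clip_norm=1.0))  # → [0.6, 0.8]
```

Clipping limits how much any single device can influence, or reveal through, the aggregated model; the noise term only provides a formal guarantee when its scale is chosen together with the clipping bound and the number of training rounds.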


dowidth.com
