Liquid neural networks update their internal state in continuous time, which lets them adapt to changing data patterns and suits them to real-time processing. Long short-term memory (LSTM) networks, by contrast, process sequences in fixed discrete time steps and rely on gated memory cells to manage temporal dependencies. The continuous-time design gives liquid networks added flexibility and robustness when inputs are non-stationary, making them suitable for complex, time-varying tasks such as robotics and adaptive control systems.

Why it is important

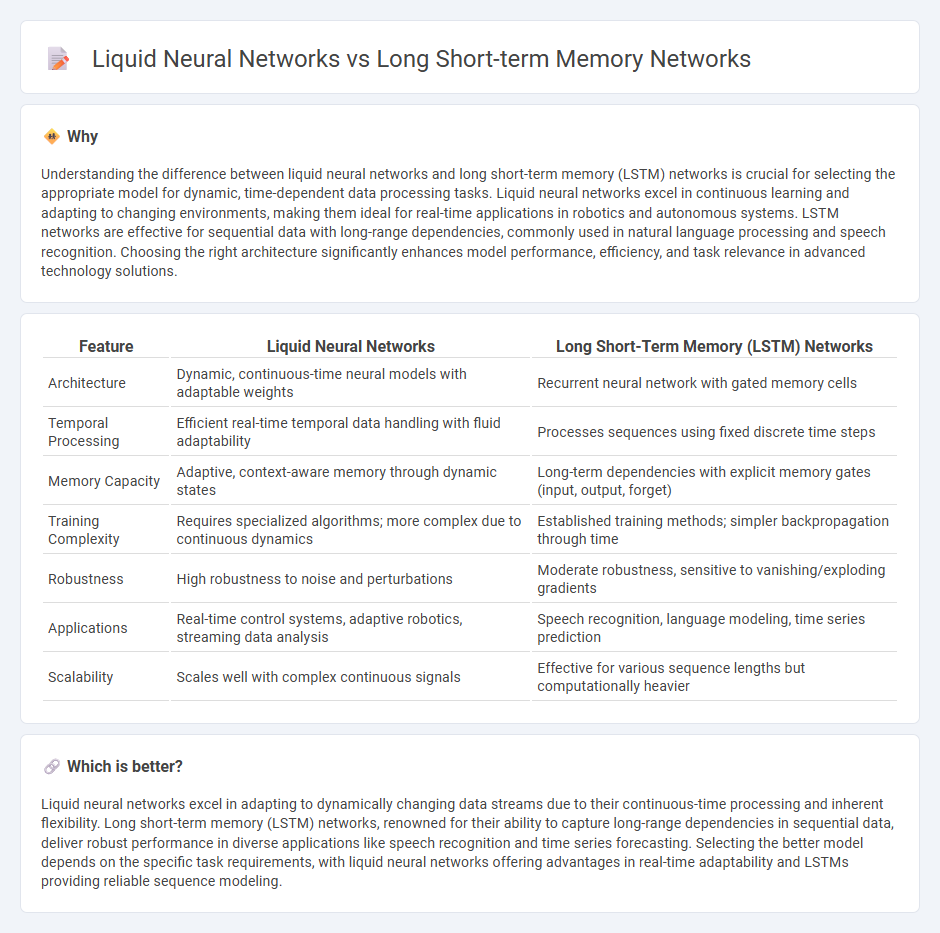

Understanding the difference between liquid neural networks and long short-term memory (LSTM) networks is crucial for selecting the appropriate model for dynamic, time-dependent data processing tasks. Liquid neural networks are designed to keep adapting to changing environments, which makes them a good fit for real-time applications in robotics and autonomous systems. LSTM networks are effective for sequential data with long-range dependencies and are commonly used in natural language processing and speech recognition. Matching the architecture to the task affects accuracy, computational cost, and how well the model copes with the data it sees in deployment.

Comparison Table

| Feature | Liquid Neural Networks | Long Short-Term Memory (LSTM) Networks |
|---|---|---|
| Architecture | Continuous-time recurrent models whose state dynamics adapt to the input | Recurrent neural network with gated memory cells |
| Temporal Processing | Continuous-time state updates suited to real-time and irregularly sampled data | Processes sequences using fixed discrete time steps |
| Memory Capacity | Adaptive, context-aware memory through dynamic states | Long-term dependencies with explicit memory gates (input, output, forget) |
| Training Complexity | Requires specialized algorithms; more complex due to continuous dynamics | Established training methods; standard backpropagation through time |
| Robustness | High robustness to noise and perturbations | Moderate robustness; gating mitigates vanishing gradients, but exploding gradients can still occur |
| Applications | Real-time control systems, adaptive robotics, streaming data analysis | Speech recognition, language modeling, time series prediction |
| Scalability | Scales well with complex continuous signals | Effective for various sequence lengths, but four weight sets per cell make it computationally heavier as the hidden size grows |

Which is better?

Liquid neural networks excel in adapting to dynamically changing data streams due to their continuous-time processing and inherent flexibility. Long short-term memory (LSTM) networks, renowned for their ability to capture long-range dependencies in sequential data, deliver robust performance in diverse applications like speech recognition and time series forecasting. Selecting the better model depends on the specific task requirements, with liquid neural networks offering advantages in real-time adaptability and LSTMs providing reliable sequence modeling.

Connection

Liquid neural networks and long short-term memory (LSTM) networks both address the challenge of processing sequential data by maintaining dynamic internal states over time. Liquid neural networks incorporate adaptable, continuous-time dynamics that allow them to efficiently model temporal changes, while LSTMs use gated memory cells to capture long-range dependencies in sequences. Their connection lies in their shared goal of improving sequence learning through mechanisms that preserve and manipulate temporal information effectively.
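The two state-update rules can be written side by side. The LSTM equations below are the standard formulation. The liquid-network equation assumes that "liquid neural network" refers to the liquid time-constant (LTC) formulation, which the text above does not state explicitly. The symbols ($W$, $b$, $\tau$, $A$, $\theta$, $I(t)$) are introduced here for illustration.

An LSTM advances its cell state $c_t$ and hidden state $h_t$ once per discrete step $t$:

$$
\begin{aligned}
f_t &= \sigma(W_f [h_{t-1}, x_t] + b_f), \quad
i_t = \sigma(W_i [h_{t-1}, x_t] + b_i), \quad
o_t = \sigma(W_o [h_{t-1}, x_t] + b_o) \\
\tilde{c}_t &= \tanh(W_c [h_{t-1}, x_t] + b_c) \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}
$$

An LTC neuron instead evolves its state $x(t)$ through an ordinary differential equation driven by the input $I(t)$:

$$
\frac{dx(t)}{dt} = -\left[\frac{1}{\tau} + f\big(x(t), I(t), \theta\big)\right] \odot x(t) + f\big(x(t), I(t), \theta\big) \odot A
$$

In both cases the state at one moment is a function of the previous state and the current input. The difference is that the LSTM state is defined only at step indices, while the LTC state is defined at every time $t$ and has to be integrated numerically.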

Key Terms

Sequence Processing

Long short-term memory (LSTM) networks excel in sequence processing by capturing long-range dependencies through gated memory cells, making them well suited to tasks like language modeling and time series prediction. Liquid neural networks, inspired by biological neural dynamics, update their internal states continuously rather than once per step, which gives them adaptive temporal processing and improves their handling of irregularly sampled or noisy sequential data.
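To illustrate why a continuous-time state helps with irregular sampling, here is a minimal sketch. It uses a plain leaky integrator, not a full liquid network, and all function names and constants are hypothetical. The continuous update takes the elapsed time `dt` into account, so it reaches the same state however the interval is sliced. The fixed-step recurrence only counts samples.

```python
import math

def continuous_update(x, u, dt, tau=1.0):
    """Exact solution of dx/dt = (u - x) / tau over an interval dt, with u held constant."""
    decay = math.exp(-dt / tau)
    return u + (x - u) * decay

def discrete_update(x, u, alpha=0.5):
    """Fixed-step recurrence that ignores how much time passed between samples."""
    return (1.0 - alpha) * x + alpha * u

def run_continuous(gaps, u=1.0):
    x = 0.0
    for dt in gaps:
        x = continuous_update(x, u, dt)
    return x

def run_discrete(gaps, u=1.0):
    x = 0.0
    for _ in gaps:
        x = discrete_update(x, u)
    return x

# The same constant input observed over one time unit, sampled two different ways
regular = [0.5, 0.5]          # two evenly spaced gaps
irregular = [0.1, 0.2, 0.7]   # three uneven gaps

print("continuous:", run_continuous(regular), run_continuous(irregular))
print("discrete:  ", run_discrete(regular), run_discrete(irregular))
```

The two continuous results agree up to floating-point error, while the two discrete results differ because the second stream simply contains more samples. A discrete model can compensate by receiving the time gap as an extra input feature, but it then has to learn that relationship from data.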

Memory Mechanism

Long short-term memory (LSTM) networks use gated structures to maintain and update information over long sequences, which mitigates the vanishing gradient problem found in traditional recurrent neural networks. Liquid neural networks employ continuous-time dynamics and adaptable state representations, so how quickly they integrate new information and how long they retain it can vary with the input, giving them greater flexibility and robustness.
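The gating mechanism can be sketched for a single LSTM unit with scalar weights. This is an illustrative toy, not a trained model: the `params` values are hypothetical and chosen so that the forget gate stays open and the input gate stays shut, which makes the memory effect easy to see.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One step of a single-unit LSTM cell using scalars.

    p maps each gate name to a (w_x, w_h, b) tuple of hypothetical weights.
    """
    def pre(name):
        w_x, w_h, b = p[name]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre("forget"))   # fraction of the old cell state to keep
    i = sigmoid(pre("input"))    # fraction of the candidate to write
    o = sigmoid(pre("output"))   # fraction of the cell state to expose
    g = math.tanh(pre("cand"))   # candidate value computed from the input
    c = f * c_prev + i * g       # additive cell-state update
    h = o * math.tanh(c)
    return h, c

# Hypothetical parameters: forget gate biased open, input gate biased shut
params = {
    "forget": (0.0, 0.0, 10.0),
    "input": (0.0, 0.0, -10.0),
    "output": (0.0, 0.0, 0.0),
    "cand": (1.0, 0.0, 0.0),
}

h, c = 0.0, 1.0  # start with a value already stored in the cell
for x in [0.5, -0.3, 0.8, 0.1, -0.9]:
    h, c = lstm_step(x, h, c, params)

print("cell state after 5 steps:", c)
```

With these settings the cell state stays very close to its initial value of 1.0 despite the changing inputs. The additive update `c = f * c_prev + i * g` is what lets information, and gradients during training, pass through many steps without shrinking at every step.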

Adaptive Dynamics

Long short-term memory (LSTM) networks use gating to maintain and update information over extended sequences, and they work best when data arrive at regular time steps and the temporal patterns seen during training persist at inference. Liquid neural networks adapt their dynamics in real time: in the common liquid time-constant formulation, the effective time constants of the state vary with the input while the trained weights stay fixed. This makes them well suited to environments with rapidly changing conditions and uncertain inputs.
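A single liquid time-constant (LTC) neuron illustrates what "adaptive dynamics" can mean in practice. The sketch assumes the LTC formulation, integrated with a semi-implicit (fused) Euler step. All parameter values are hypothetical, and real implementations operate on vectors with learned weights.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def ltc_step(x, inp, dt, tau=1.0, A=1.0, w_in=2.0, w_rec=0.5, b=0.0):
    """One semi-implicit Euler step of a single LTC neuron.

    Integrates dx/dt = -(1/tau + f) * x + f * A,
    where f = sigmoid(w_in * inp + w_rec * x + b).
    Returns the next state and the effective time constant at this step.
    """
    f = sigmoid(w_in * inp + w_rec * x + b)
    x_next = (x + dt * f * A) / (1.0 + dt * (1.0 / tau + f))
    tau_eff = tau / (1.0 + tau * f)  # shrinks as the gate f grows
    return x_next, tau_eff

x = 0.0
taus = []
for inp in [-3.0, -3.0, 3.0, 3.0]:  # weak drive, then strong drive
    x, tau_eff = ltc_step(x, inp, dt=0.1)
    taus.append(tau_eff)

print("effective time constants:", taus)
print("final state:", x)
```

No weight changes while the loop runs. What changes is `tau_eff`: under weak input the neuron responds slowly (close to `tau`), and under strong input it responds roughly twice as fast. The state also stays bounded between 0 and `A` for this choice of parameters.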

