Diffusion models generate data by starting from random noise and removing it step by step with a learned denoiser, which makes them well suited to high-quality image synthesis and restoration. Self-supervised learning models learn feature representations from unlabeled data by solving proxy (pretext) tasks, which has improved performance in natural language processing and computer vision. This article compares how the two approaches work and where each one applies.

Why it is important

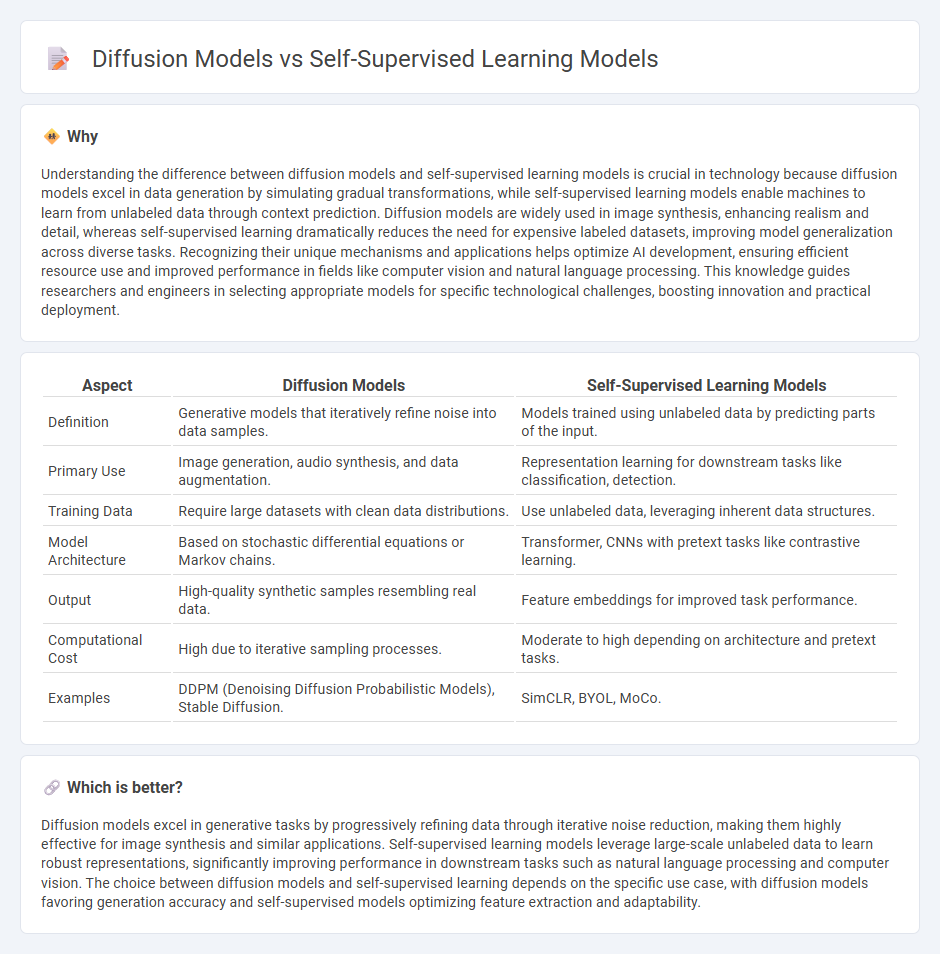

The two approaches solve different problems. Diffusion models generate new data by learning to reverse a gradual corruption process, while self-supervised learning models learn from unlabeled data by predicting parts of the input from its context. Diffusion models are widely used in image synthesis, where they improve realism and detail. Self-supervised learning reduces the need for expensive labeled datasets and improves generalization across diverse tasks. Knowing how each one works helps researchers and engineers pick the right model for a given problem and use compute and data efficiently in fields like computer vision and natural language processing.

Comparison Table

| Aspect | Diffusion Models | Self-Supervised Learning Models |
|---|---|---|
| Definition | Generative models that iteratively refine noise into data samples. | Models trained on unlabeled data by predicting parts of the input. |
| Primary Use | Image generation, audio synthesis, and data augmentation. | Representation learning for downstream tasks such as classification and detection. |
| Training Data | Require large datasets that represent the target distribution well. | Use unlabeled data, leveraging inherent data structures. |
| Model Architecture | Denoising networks (commonly U-Nets or transformers) trained under a Markov-chain or stochastic differential equation formulation. | Transformers or CNNs trained with pretext tasks such as contrastive learning. |
| Output | High-quality synthetic samples resembling real data. | Feature embeddings for improved task performance. |
| Computational Cost | High, due to iterative sampling. | Moderate to high, depending on architecture and pretext task. |
| Examples | DDPM (Denoising Diffusion Probabilistic Models), Stable Diffusion. | SimCLR, BYOL, MoCo. |

Which is better?

Neither is better in general, because they target different goals. Diffusion models excel at generative tasks: they produce samples by iteratively removing noise, which makes them highly effective for image synthesis and similar applications. Self-supervised learning models use large-scale unlabeled data to learn robust representations, which improves performance on downstream tasks in natural language processing and computer vision. The choice depends on the use case: pick a diffusion model when the goal is high-fidelity generation, and a self-supervised model when the goal is feature extraction that transfers across tasks.

Connection

Diffusion models are trained by adding noise to data and learning to remove it. Because the training target comes from the data itself, this denoising objective needs no human labels, which is the same principle behind self-supervised pretext tasks that predict parts of the input. Both approaches therefore avoid dependence on extensive labeled datasets, which benefits tasks like image synthesis and natural language understanding. They can also be combined, pairing a generative diffusion process with robust self-supervised representations.

Key Terms

Pretext Task (Self-Supervised Learning)

Self-supervised learning models use pretext tasks, such as predicting missing parts of the data or recovering the order of a shuffled sequence, to generate labels automatically from unlabeled datasets. This improves feature representations without human annotation. Diffusion models, used predominantly for generation, instead learn a reverse denoising process that creates data from noise. Their goal is the generated sample itself, whereas a pretext task is only a means of learning features for later use.
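To make the idea of "labels generated from the data itself" concrete, here is a minimal sketch of one pretext task, masked prediction. It is illustrative only: the `MASK` token, the `make_masked_example` helper, and the 15% default masking rate are assumptions chosen for this example, not part of any specific library or model.

```python
import random

MASK = "[MASK]"  # hypothetical placeholder token used only in this sketch


def make_masked_example(tokens, mask_prob=0.15, rng=None):
    """Turn an unlabeled token sequence into an (inputs, targets) training pair.

    Each token is hidden with probability mask_prob. The hidden tokens become
    the labels, so no human annotation is involved.
    """
    rng = rng or random.Random()
    inputs, targets = [], {}
    for position, token in enumerate(tokens):
        if rng.random() < mask_prob:
            inputs.append(MASK)        # the model sees a placeholder here
            targets[position] = token  # and must predict the original token
        else:
            inputs.append(token)
    return inputs, targets


if __name__ == "__main__":
    rng = random.Random(0)
    tokens = "diffusion models refine noise into data".split()
    inputs, targets = make_masked_example(tokens, mask_prob=0.3, rng=rng)
    print(inputs)
    print(targets)
```

A model trained to fill in the masked positions has to capture how tokens relate to their context, and those learned features are what get reused downstream.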

Noise Scheduling (Diffusion Models)

Noise scheduling in diffusion models determines how much noise is added at each step of the forward (corruption) process, and therefore how much the model must remove at each step of the reverse (denoising) process. A well-chosen schedule corrupts the data gradually, which helps the model capture complex data distributions and generate high-quality samples. Self-supervised learning models have no explicit noise schedule; they rely on pretext tasks that exploit the inherent structure of the data to learn robust representations.
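As a rough illustration, the sketch below builds a linear noise schedule and applies the closed-form forward corruption used in DDPM-style models, where a noisy sample at step t is sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * noise. The function names are hypothetical, and the defaults (1000 steps, betas from 1e-4 to 0.02) are assumed here as commonly used DDPM settings; other schedules, such as cosine schedules, are also used in practice.

```python
import math
import random


def linear_beta_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances, rising linearly from beta_start to beta_end."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar[t] = product of (1 - beta) up to step t: the signal fraction left."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def forward_noise(x0, t, alpha_bars, rng):
    """Sample a noisy version of x0 at step t in one shot (no step-by-step loop)."""
    signal_scale = math.sqrt(alpha_bars[t])
    noise_scale = math.sqrt(1.0 - alpha_bars[t])
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    x_t = [signal_scale * x + noise_scale * e for x, e in zip(x0, eps)]
    return x_t, eps  # eps is the target a denoiser would be trained to predict


if __name__ == "__main__":
    alpha_bars = cumulative_alphas(linear_beta_schedule())
    rng = random.Random(0)
    x0 = [1.0, -0.5, 0.25]
    for t in (0, 250, 999):
        x_t, _ = forward_noise(x0, t, alpha_bars, rng)
        print(t, round(alpha_bars[t], 5), [round(v, 3) for v in x_t])
```

Early steps leave the sample almost unchanged, while by the final step almost no signal remains. That gradual progression is what the schedule controls.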

Representation Learning

Self-supervised learning models excel in representation learning: they use unlabeled data to build robust, generalizable feature embeddings, which are crucial for downstream tasks such as classification and clustering. Diffusion models are used primarily for generation, but they are increasingly being adapted for representation learning, with the features learned during iterative denoising reused for other tasks.
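One common way to learn such embeddings is a contrastive objective, the family that SimCLR and MoCo belong to. The sketch below computes an InfoNCE-style loss for a single anchor: the loss is low when the anchor's embedding is closer to its positive (another view of the same input) than to the negatives. The function names, the toy 2-D vectors, and the temperature of 0.1 are assumptions for illustration, not the exact formulation of any one paper.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norms = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norms


def info_nce(anchor, positive, negatives, temperature=0.1):
    """Cross-entropy of picking the positive among positive + negatives."""
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, neg) / temperature for neg in negatives]
    # Numerically stable log-sum-exp over all candidates.
    peak = max(logits)
    log_sum = peak + math.log(sum(math.exp(l - peak) for l in logits))
    return log_sum - logits[0]


if __name__ == "__main__":
    anchor = [1.0, 0.0]
    # Positive close to the anchor, negatives far away: small loss.
    print(info_nce(anchor, [0.9, 0.1], [[0.0, 1.0], [-1.0, 0.0]]))
    # Positive far from the anchor, a negative close to it: large loss.
    print(info_nce(anchor, [0.0, 1.0], [[0.9, 0.1]]))
```

Minimizing this loss over many inputs pulls different views of the same input together in embedding space and pushes unrelated inputs apart, without using any labels.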

