Retrieval Augmented Generation (RAG) retrieves relevant passages from an external source at generation time and conditions the model's output on them, which improves the accuracy and relevance of the generated text. Knowledge Distillation trains a smaller, more efficient model to reproduce the behavior of a larger, more complex one, keeping most of its performance at a much lower inference cost. The sections below compare the two techniques and describe how they can be combined.

Why it is important

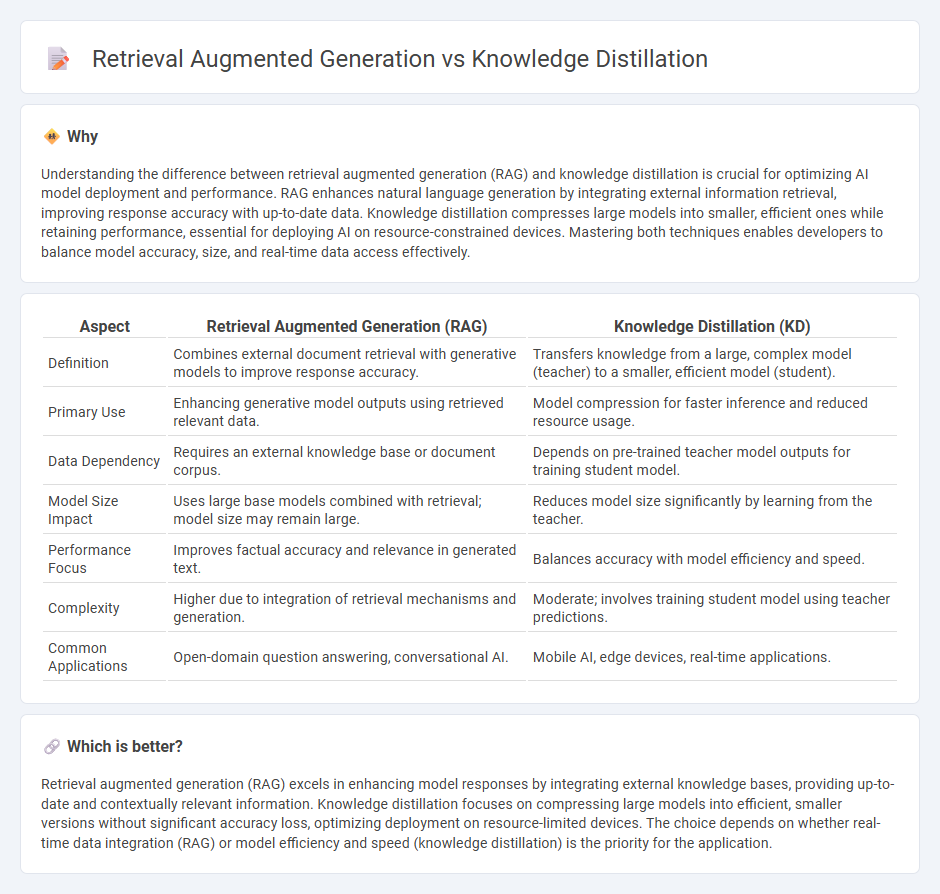

Understanding the difference between retrieval augmented generation (RAG) and knowledge distillation matters when deciding how to deploy an AI model. RAG enhances natural language generation by adding an information retrieval step, so responses can draw on up-to-date data. Knowledge distillation compresses large models into smaller, efficient ones while retaining most of their performance, which is essential for deploying AI on resource-constrained devices. Knowing both techniques lets developers balance model accuracy, model size, and access to current data.

Comparison Table

| Aspect | Retrieval Augmented Generation (RAG) | Knowledge Distillation (KD) |
|---|---|---|
| Definition | Combines external document retrieval with generative models to improve response accuracy. | Transfers knowledge from a large, complex model (teacher) to a smaller, efficient model (student). |
| Primary Use | Enhancing generative model outputs using retrieved relevant data. | Model compression for faster inference and reduced resource usage. |
| Data Dependency | Requires an external knowledge base or document corpus. | Depends on pre-trained teacher model outputs for training student model. |
| Model Size Impact | Uses large base models combined with retrieval; model size may remain large. | Reduces model size significantly by learning from the teacher. |
| Performance Focus | Improves factual accuracy and relevance in generated text. | Balances accuracy with model efficiency and speed. |
| Complexity | Higher due to integration of retrieval mechanisms and generation. | Moderate; involves training student model using teacher predictions. |
| Common Applications | Open-domain question answering, conversational AI. | Mobile AI, edge devices, real-time applications. |

Which is better?

Retrieval augmented generation (RAG) excels in enhancing model responses by integrating external knowledge bases, providing up-to-date and contextually relevant information. Knowledge distillation focuses on compressing large models into efficient, smaller versions without significant accuracy loss, optimizing deployment on resource-limited devices. The choice depends on whether real-time data integration (RAG) or model efficiency and speed (knowledge distillation) is the priority for the application.

Connection

Retrieval augmented generation (RAG) enhances language models by integrating external information retrieval during text generation, improving accuracy and relevance. Knowledge distillation transfers learned knowledge from a large, complex model to a smaller, efficient one, enabling faster inference without significant loss of performance. The two can be combined: distilling the generator (or the retriever) of a RAG system into a smaller model lowers its computational cost while aiming to preserve retrieval effectiveness and generation quality.

Key Terms

Teacher-Student Model

Knowledge distillation trains a compact student model to replicate the output behavior of a larger teacher model, optimizing for efficiency and inference speed while preserving as much accuracy as possible. RAG has no teacher-student relationship of its own: it adds a retrieval step in front of a generative model. The two meet when the generator in a RAG pipeline is itself a distilled student, which can then draw on up-to-date and contextually relevant information through retrieval.
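To make the teacher-student idea concrete, here is a minimal sketch of one common distillation objective: the student is pushed toward the teacher's temperature-softened output distribution (a KL-divergence term) and toward the true label (a cross-entropy term). The function names, the `temperature` and `alpha` defaults, and the example logits are illustrative assumptions, not values taken from any particular system.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert logits to probabilities, softened by a temperature."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, true_label,
                      temperature=2.0, alpha=0.5):
    """Weighted sum of a soft-target term and a hard-label term.

    temperature and alpha are hypothetical defaults chosen for illustration.
    """
    # Soft-target term: KL(teacher || student) on temperature-softened outputs.
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps)
             for pt, ps in zip(p_teacher, p_student) if pt > 0)

    # Hard-label term: cross-entropy against the ground-truth class at T = 1.
    ce = -math.log(softmax(student_logits)[true_label])

    # Scaling by T^2 keeps the soft-term gradients comparable across temperatures.
    return alpha * (temperature ** 2) * kl + (1 - alpha) * ce


# Illustrative logits for a 3-class problem (made-up numbers).
teacher = [1.0, 2.0, 4.0]
student = [0.5, 1.5, 2.0]
loss = distillation_loss(student, teacher, true_label=2)
```

A higher `alpha` makes the student follow the teacher more closely, while a lower value gives more weight to the ground-truth labels.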

External Knowledge Base

Knowledge distillation compresses a larger model's knowledge into a smaller, efficient model; at inference time the student relies solely on its internal parameters, with no access to external data. RAG, by contrast, dynamically retrieves relevant information from an external knowledge base to enhance its responses. It can draw on structured or unstructured data sources, improving factual accuracy and adaptability, since the knowledge base can be updated without retraining the model.
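The retrieval step can be sketched in a few lines. The toy example below scores documents against a query with bag-of-words cosine similarity and places the best match into a prompt. Production RAG systems typically use learned embeddings and a vector index instead; the simple word-count scoring here is a stand-in, and the knowledge base contents, function names, and prompt template are all hypothetical. The call to a generative model is left out.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two token-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, documents, k=2):
    """Return up to k documents with nonzero similarity to the query."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(d))), d) for d in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:k] if score > 0]


def build_prompt(query, passages):
    """Assemble retrieved passages and the question into one prompt string."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


# Hypothetical three-document knowledge base.
KNOWLEDGE_BASE = [
    "Knowledge distillation trains a small student model on a teacher's outputs.",
    "Retrieval augmented generation fetches documents before generating text.",
    "Edge devices have limited memory and compute.",
]

query = "How does retrieval augmented generation work?"
passages = retrieve(query, KNOWLEDGE_BASE, k=1)
prompt = build_prompt(query, passages)  # this string would be sent to the generator
```

Updating the system's knowledge here means editing `KNOWLEDGE_BASE`, with no change to any model weights, which is the property that separates RAG from a distilled model working from its parameters alone.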

Inference Efficiency

Knowledge distillation enhances inference efficiency by compressing large models into smaller, faster ones while preserving most of their performance, reducing computational overhead during deployment. Retrieval augmented generation (RAG) improves the quality of responses rather than their speed: it fetches relevant external data to produce contextually accurate answers, but the retrieval step and the longer prompt can add latency.
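A back-of-the-envelope estimate illustrates this trade-off. The sketch below uses the common rule of thumb that a transformer forward pass costs roughly 2 FLOPs per parameter per token. It ignores attention's dependence on sequence length, the cost of the retrieval lookup itself, and the cost of generating the answer, so it is a rough comparison only. All model sizes and token counts are made-up assumptions.

```python
def forward_flops(n_params, n_tokens):
    """Rough forward-pass cost: about 2 FLOPs per parameter per token."""
    return 2 * n_params * n_tokens


# Hypothetical sizes, chosen only to make the arithmetic easy to follow.
TEACHER_PARAMS = 7_000_000_000   # large model
STUDENT_PARAMS = 1_000_000_000   # distilled model
QUESTION_TOKENS = 50             # the user's question alone
RETRIEVED_TOKENS = 950           # passages that RAG adds to the prompt

# Large model reading only the question.
teacher_plain = forward_flops(TEACHER_PARAMS, QUESTION_TOKENS)

# Large model reading the question plus the retrieved passages.
teacher_rag = forward_flops(TEACHER_PARAMS, QUESTION_TOKENS + RETRIEVED_TOKENS)

# Distilled model reading the same RAG prompt.
student_rag = forward_flops(STUDENT_PARAMS, QUESTION_TOKENS + RETRIEVED_TOKENS)
```

Under these assumptions, adding retrieved context makes prompt processing 20 times more expensive for the large model, and swapping in the distilled model recovers a factor of 7. This is one reason the two techniques are often considered together.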

dowidth.com