Synthetic media detection uses algorithms and machine learning models to identify manipulated or AI-generated audio, video, and images, countering deepfake generation techniques that produce highly realistic but fabricated content. Detection tools look for inconsistencies in facial movements, lighting, and digital artifacts to distinguish genuine media from synthetic forgeries. This article compares the two sides of that contest: how deepfakes are made and how they are detected.

Why it is important

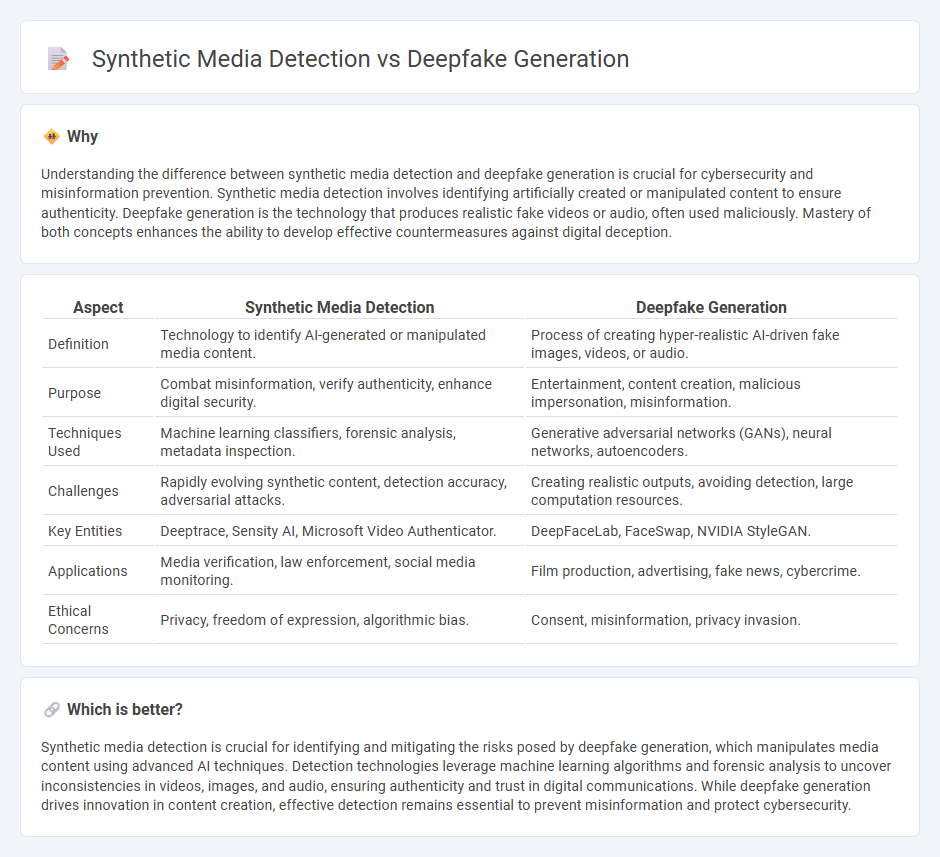

Understanding the difference between synthetic media detection and deepfake generation is important for cybersecurity and misinformation prevention. Synthetic media detection identifies artificially created or manipulated content so that its authenticity can be verified. Deepfake generation is the technology that produces realistic fake images, videos, or audio, and it is often used maliciously. Knowing how fakes are made is what allows defenders to build effective countermeasures against digital deception.

Comparison Table

| Aspect | Synthetic Media Detection | Deepfake Generation |
|---|---|---|
| Definition | Technology that identifies AI-generated or manipulated media. | Process of creating hyper-realistic, AI-generated fake images, videos, or audio. |
| Purpose | Combat misinformation, verify authenticity, strengthen digital security. | Entertainment, content creation, malicious impersonation, misinformation. |
| Techniques Used | Machine learning classifiers, forensic analysis, metadata inspection. | Generative adversarial networks (GANs), other neural networks, autoencoders. |
| Challenges | Rapidly evolving synthetic content, detection accuracy, adversarial attacks. | Producing realistic outputs, evading detection, high computational cost. |
| Key Entities | Sensity AI (formerly Deeptrace), Microsoft Video Authenticator. | DeepFaceLab, FaceSwap, NVIDIA StyleGAN. |
| Applications | Media verification, law enforcement, social media monitoring. | Film production, advertising, fake news, cybercrime. |
| Ethical Concerns | Privacy, freedom of expression, algorithmic bias. | Consent, misinformation, privacy invasion. |

Which is better?

Synthetic media detection is essential for identifying and mitigating the risks of deepfake generation, which manipulates media using advanced AI techniques. Detection technologies combine machine learning with forensic analysis to uncover inconsistencies in videos, images, and audio, preserving authenticity and trust in digital communication. While deepfake generation drives innovation in content creation, effective detection remains essential to prevent misinformation and fraud.

Connection

Synthetic media detection relies on advanced machine learning algorithms trained to identify inconsistencies and artifacts commonly produced during deepfake generation. Deepfake creation techniques, such as generative adversarial networks (GANs), produce hyper-realistic synthetic videos and images, presenting ongoing challenges for detection systems. The interplay between improving deepfake generation methods and enhancing synthetic media detection fuels a continuous cycle of technological advancement in digital forensics.
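The training step described above can be sketched with a tiny logistic-regression detector. This is a minimal illustration, assuming each media sample has already been reduced to a few numeric artifact scores; the feature names and toy data are hypothetical, and production detectors are typically deep networks trained on large labeled corpora rather than a two-feature linear model.

```python
import math
from typing import List, Sequence


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights: Sequence[float], x: Sequence[float]) -> float:
    """Probability that a sample is synthetic; the last weight is the bias term."""
    z = sum(w * xi for w, xi in zip(weights, x)) + weights[-1]
    return sigmoid(z)


def train_detector(samples: List[Sequence[float]], labels: List[int],
                   lr: float = 0.5, epochs: int = 500) -> List[float]:
    """Fit logistic-regression weights with batch gradient descent.

    labels: 1 = synthetic (deepfake), 0 = genuine.
    """
    weights = [0.0] * (len(samples[0]) + 1)
    for _ in range(epochs):
        grad = [0.0] * len(weights)
        for x, y in zip(samples, labels):
            err = predict(weights, x) - y  # derivative of log-loss w.r.t. the logit
            for i, xi in enumerate(x):
                grad[i] += err * xi
            grad[-1] += err
        weights = [w - lr * g / len(samples) for w, g in zip(weights, grad)]
    return weights


# Hypothetical artifact scores per sample: [blink_irregularity, lighting_mismatch]
genuine = [[0.10, 0.20], [0.20, 0.10], [0.15, 0.25]]
synthetic = [[0.80, 0.90], [0.90, 0.70], [0.85, 0.80]]
weights = train_detector(genuine + synthetic, [0] * 3 + [1] * 3)
print(round(predict(weights, [0.88, 0.82]), 3))  # high score: likely synthetic
print(round(predict(weights, [0.12, 0.18]), 3))  # low score: likely genuine
```

A score above 0.5 is read here as "likely synthetic"; in practice the threshold would be tuned to trade false alarms against missed fakes.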

Key Terms

Generative Adversarial Networks (GANs)

Generative Adversarial Networks (GANs) play a pivotal role in deepfake generation: a generator and a discriminator are trained against each other until the generator produces highly realistic synthetic images and video. The adversarial idea carries over to detection, where discriminator-style classifiers are trained on GAN outputs to spot the subtle inconsistencies and artifacts that generation leaves behind. Detection does not reuse the generator itself; it borrows the discriminator's role of telling real samples from generated ones.
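The adversarial training described above is usually expressed as a pair of binary cross-entropy losses. The sketch below computes them from discriminator output probabilities, assuming those probabilities come from some discriminator network that is not shown; the generator loss uses the common non-saturating form, -log D(G(z)), rather than the literal minimax term.

```python
import math
from typing import Sequence

EPS = 1e-12  # guards against log(0)


def discriminator_loss(d_real: Sequence[float], d_fake: Sequence[float]) -> float:
    """Binary cross-entropy: the discriminator wants D(real) -> 1 and D(fake) -> 0."""
    real_term = sum(-math.log(p + EPS) for p in d_real) / len(d_real)
    fake_term = sum(-math.log(1.0 - p + EPS) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake: Sequence[float]) -> float:
    """Non-saturating generator loss: the generator wants D(fake) -> 1."""
    return sum(-math.log(p + EPS) for p in d_fake) / len(d_fake)


# Illustrative discriminator outputs (hypothetical numbers, not from a trained model)
print(discriminator_loss([0.9, 0.8], [0.1, 0.2]))  # discriminator winning: low loss
print(generator_loss([0.1, 0.2]))                  # generator losing: high loss
```

When the discriminator outputs 0.5 for every input, meaning it cannot tell real from fake, its loss equals 2 ln 2 (about 1.386), which is the equilibrium the original GAN formulation aims for.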

Digital Watermarking

Digital watermarking helps distinguish deepfake content from genuine media by embedding an imperceptible signal directly in image, video, or audio data, either by a camera or editing tool to mark authentic content or by a generator to label its output as synthetic. Robust watermarks are designed to survive compression and editing, fragile watermarks are designed to break under tampering, and either kind can be paired with cryptographic signatures that tie the mark to a specific creator or tool. Unlike forensic detectors, watermarking does not search for artifacts of deepfake generation; it verifies a mark embedded in advance, which provides traceability and accountability for content that carries one.
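To make the embed-then-verify idea concrete, here is a toy fragile watermark that hides bits in the least-significant bit (LSB) of pixel values. It is an illustration under stated assumptions only: pixels are 8-bit grayscale integers in a flat list, the watermark bits are hypothetical, and LSB marks do not survive compression, so real provenance systems use far more robust embeddings.

```python
from typing import List, Sequence


def embed_lsb(pixels: Sequence[int], bits: Sequence[int]) -> List[int]:
    """Write one watermark bit into the lowest bit of each of the first len(bits) pixels."""
    if len(bits) > len(pixels):
        raise ValueError("watermark is longer than the carrier")
    marked = list(pixels)
    for i, bit in enumerate(bits):
        marked[i] = (marked[i] & ~1) | (bit & 1)  # clear the LSB, then set it to the bit
    return marked


def extract_lsb(pixels: Sequence[int], n_bits: int) -> List[int]:
    """Read back the lowest bit of the first n_bits pixels."""
    return [p & 1 for p in pixels[:n_bits]]


def verify(pixels: Sequence[int], expected_bits: Sequence[int]) -> bool:
    """True only if the embedded bits are still intact."""
    return extract_lsb(pixels, len(expected_bits)) == list(expected_bits)


carrier = [200, 201, 13, 14, 255, 0, 77, 128]  # hypothetical 8-pixel grayscale patch
mark = [1, 0, 1, 1, 0, 0, 1, 0]                # hypothetical watermark bits
stamped = embed_lsb(carrier, mark)
print(verify(stamped, mark))  # True: the mark is intact
```

Each pixel changes by at most one intensity level, so the mark is invisible, but any edit that flips a marked pixel's lowest bit makes `verify` return False. That is the "fragile" behavior; a robust watermark would instead aim to survive such edits.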

Forensic Analysis Algorithms

Forensic analysis algorithms distinguish deepfake content from genuine media by analyzing inconsistencies in visual and audio data that are usually imperceptible to human observers. These algorithms rely on machine learning models trained on large datasets of genuine and manipulated media to identify subtle artifacts, such as unnatural eye movements, inconsistent lighting, and audio-visual mismatches. Such techniques are central to combating digital fraud and verifying media authenticity.
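One frequency-domain cue studied in the forensics literature is that the upsampling layers in many generators leave periodic, high-frequency patterns that natural images rarely contain. The sketch below measures how much of a single pixel row's spectral energy sits above a cutoff, using a naive discrete Fourier transform. The cutoff, the threshold, and the function names are hypothetical choices for illustration; real detectors work on 2D spectra of whole images and usually feed such features to a trained classifier rather than a fixed threshold.

```python
import cmath
import math
from typing import Sequence


def high_freq_energy_ratio(row: Sequence[float], cutoff: float = 0.25) -> float:
    """Fraction of non-DC spectral energy at frequencies above `cutoff` cycles per pixel.

    Uses a naive O(n^2) DFT over bins 1..n//2 (the positive frequencies of a real signal).
    """
    n = len(row)
    mean = sum(row) / n
    centered = [v - mean for v in row]  # remove the DC (average brightness) component
    total = 0.0
    high = 0.0
    for k in range(1, n // 2 + 1):
        coeff = sum(centered[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        power = abs(coeff) ** 2
        total += power
        if k / n > cutoff:  # k / n is the bin's frequency in cycles per pixel
            high += power
    return high / total if total > 0 else 0.0


def looks_upsampled(row: Sequence[float], threshold: float = 0.5) -> bool:
    """Hypothetical decision rule: flag rows dominated by high-frequency energy."""
    return high_freq_energy_ratio(row) > threshold


smooth_row = [128 + 50 * math.sin(2 * math.pi * t / 32) for t in range(32)]
checker_row = [200 if t % 2 == 0 else 100 for t in range(32)]  # checkerboard-like pattern
print(looks_upsampled(smooth_row), looks_upsampled(checker_row))
```

The smooth gradient puts nearly all of its energy in the lowest bin, while the alternating row puts it all at the highest frequency, which is the kind of signature a spectral detector looks for.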

dowidth.com
