Differentiable programming treats a model as an ordinary program built from differentiable operations, so its parameters can be tuned end to end with gradient-based optimization; neural networks are the best-known example. Statistical modeling uses probabilistic methods to infer patterns and relationships in data, usually under an explicitly chosen model structure and set of distributional assumptions. The two approaches overlap in practice, and the choice between them shapes how a model is built, trained, and interpreted.

Why it is important

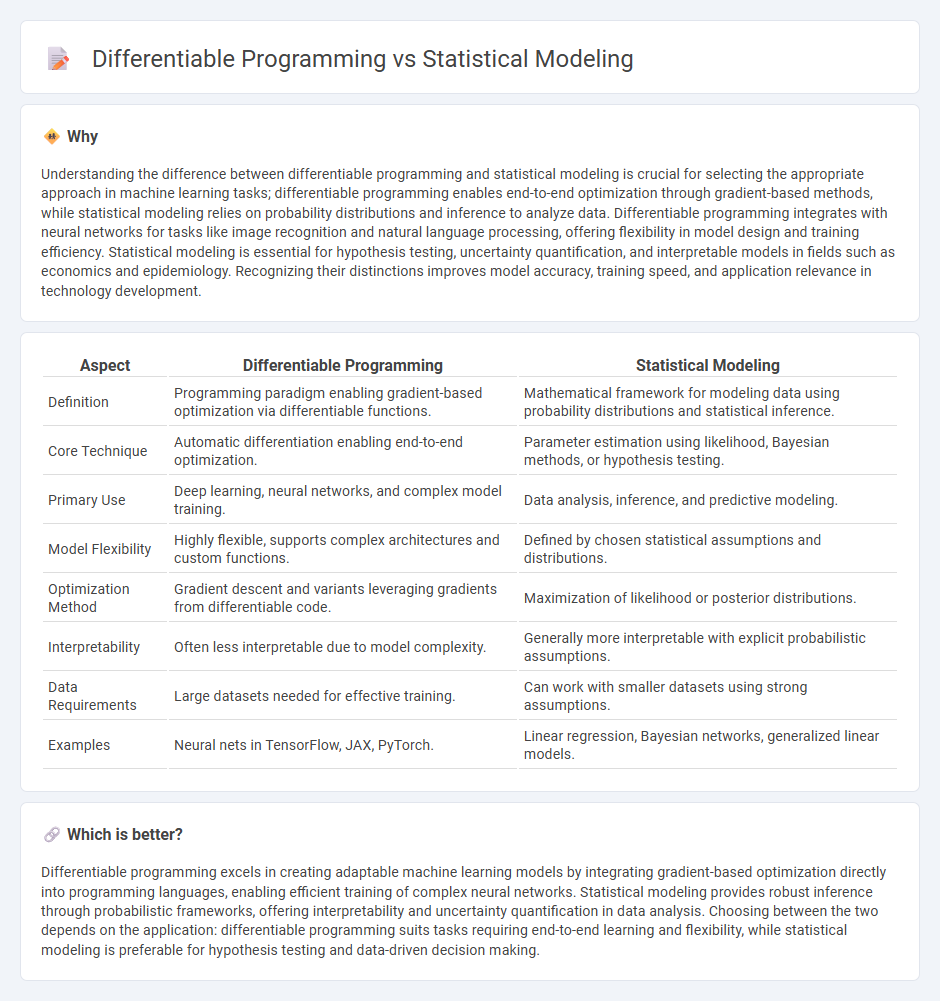

Understanding the difference between differentiable programming and statistical modeling helps in selecting the right approach for a machine learning task. Differentiable programming enables end-to-end optimization through gradient-based methods, while statistical modeling relies on probability distributions and inference to analyze data. Differentiable programming underlies neural networks for tasks like image recognition and natural language processing, where flexible model design matters most. Statistical modeling is essential for hypothesis testing, uncertainty quantification, and interpretable models in fields such as economics and epidemiology. Matching the approach to the problem affects model accuracy, training cost, and how far the results can be trusted and explained.

Comparison Table

| Aspect | Differentiable Programming | Statistical Modeling |
|---|---|---|
| Definition | Programming paradigm enabling gradient-based optimization via differentiable functions. | Mathematical framework for modeling data using probability distributions and statistical inference. |
| Core Technique | Automatic differentiation enabling end-to-end optimization. | Parameter estimation using likelihood, Bayesian methods, or hypothesis testing. |
| Primary Use | Deep learning, neural networks, and complex model training. | Data analysis, inference, and predictive modeling. |
| Model Flexibility | Highly flexible, supports complex architectures and custom functions. | Defined by chosen statistical assumptions and distributions. |
| Optimization Method | Gradient descent and variants leveraging gradients from differentiable code. | Maximization of likelihood or posterior distributions. |
| Interpretability | Often less interpretable due to model complexity. | Generally more interpretable with explicit probabilistic assumptions. |
| Data Requirements | Large datasets needed for effective training. | Can work with smaller datasets using strong assumptions. |
| Examples | Neural nets in TensorFlow, JAX, PyTorch. | Linear regression, Bayesian networks, generalized linear models. |

Which is better?

Differentiable programming excels in creating adaptable machine learning models by integrating gradient-based optimization directly into programming languages, enabling efficient training of complex neural networks. Statistical modeling provides robust inference through probabilistic frameworks, offering interpretability and uncertainty quantification in data analysis. Choosing between the two depends on the application: differentiable programming suits tasks requiring end-to-end learning and flexibility, while statistical modeling is preferable for hypothesis testing and data-driven decision making.

Connection

The two approaches meet wherever a statistical model is fitted by gradient-based optimization. A likelihood or posterior is a function of the parameters, and if that function is differentiable, automatic differentiation supplies the gradients needed to maximize it. This lets statistical models handle non-linear relationships and high-dimensional data that have no closed-form solution. Neural network classifiers illustrate the overlap: training with a cross-entropy loss is the same as minimizing the negative log-likelihood of a categorical model, so the network is a statistical model estimated by maximum likelihood.
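As a minimal sketch of that overlap, the following fits a one-feature logistic regression (a classic statistical model) by gradient descent on its negative log-likelihood. The data, the learning rate, the step count, and every function name here are illustrative assumptions, and the gradient is written by hand rather than produced by an automatic differentiation library.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def nll(w, b, xs, ys):
    """Mean negative log-likelihood of a Bernoulli model with p = sigmoid(w*x + b)."""
    total = 0.0
    for x, y in zip(xs, ys):
        p = sigmoid(w * x + b)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(xs)

def nll_grad(w, b, xs, ys):
    """Hand-derived gradient of the mean negative log-likelihood."""
    gw = gb = 0.0
    for x, y in zip(xs, ys):
        err = sigmoid(w * x + b) - y
        gw += err * x
        gb += err
    n = len(xs)
    return gw / n, gb / n

def fit(xs, ys, lr=0.5, steps=2000):
    """Plain gradient descent starting from w = b = 0 (hyperparameters are assumptions)."""
    w = b = 0.0
    for _ in range(steps):
        gw, gb = nll_grad(w, b, xs, ys)
        w -= lr * gw
        b -= lr * gb
    return w, b

# Toy, deliberately non-separable data so the maximum-likelihood estimate is finite.
xs = [-2.0, -1.5, -1.0, -0.5, 0.5, 1.0, 1.5, 2.0]
ys = [0, 0, 1, 0, 1, 0, 1, 1]

w, b = fit(xs, ys)
print(f"w={w:.3f}, b={b:.3f}, nll={nll(w, b, xs, ys):.4f}")
```

The fitted parameters are maximum-likelihood estimates in the statistical sense, yet the fitting loop is the same one used to train a neural network; only the model inside `sigmoid(...)` is simpler.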

Key Terms

Probabilistic Models

Statistical modeling relies on probabilistic models to represent uncertainty through defined probability distributions and parameters, enabling inference and prediction based on observed data. Differentiable programming can express the same models as differentiable code, so their parameters are learned with gradient-based optimization, for example by minimizing a negative log-likelihood. The difference lies less in the model than in how it is fitted and in how much of the resulting uncertainty is reported.
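To make "representing uncertainty through distributions and parameters" concrete, here is a small sketch of a Beta-Binomial model, where the posterior over a success probability has a closed form. The function name, the uniform Beta(1, 1) prior, and the counts are assumptions chosen for illustration.

```python
def beta_binomial_posterior(successes, failures, prior_a=1.0, prior_b=1.0):
    """Posterior Beta(a, b) for a success probability after observing binomial data.

    With a Beta(prior_a, prior_b) prior, the posterior is
    Beta(prior_a + successes, prior_b + failures).
    Returns the posterior parameters plus its mean and variance.
    """
    a = prior_a + successes
    b = prior_b + failures
    mean = a / (a + b)
    var = a * b / ((a + b) ** 2 * (a + b + 1))
    return a, b, mean, var

# Hypothetical small dataset: 7 successes and 3 failures.
a, b, mean, var = beta_binomial_posterior(7, 3)
print(f"posterior Beta({a:.0f}, {b:.0f}), mean={mean:.3f}, sd={var ** 0.5:.3f}")
```

Ten observations are enough to get both an estimate and a measure of how uncertain it is, which is the kind of small-data inference the comparison table attributes to statistical modeling.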

Automatic Differentiation

Automatic differentiation is the core technique behind differentiable programming. It computes derivatives by applying the chain rule to each elementary operation in a program, so the result is exact up to floating-point rounding, unlike finite-difference approximations. It also avoids the expression growth that symbolic differentiation can suffer from. This makes gradients cheap to obtain for neural networks and complex simulations, and it lets statistical models with differentiable likelihoods be fitted without deriving gradients by hand.
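One way to see how automatic differentiation works is forward mode with dual numbers, sketched below in plain Python. This is an illustrative toy, not how TensorFlow, JAX, or PyTorch implement it (those frameworks mainly use reverse mode for training); the `Dual` class, the `derivative` helper, and the test function `f` are all assumed names.

```python
import math

class Dual:
    """A value paired with its derivative with respect to a single input."""

    def __init__(self, value, deriv=0.0):
        self.value = float(value)
        self.deriv = float(deriv)

    @staticmethod
    def lift(x):
        # Treat plain numbers as constants (derivative 0).
        return x if isinstance(x, Dual) else Dual(x)

    def __add__(self, other):
        other = Dual.lift(other)
        return Dual(self.value + other.value, self.deriv + other.deriv)

    __radd__ = __add__

    def __mul__(self, other):
        other = Dual.lift(other)
        # Product rule: (uv)' = u'v + uv'
        return Dual(self.value * other.value,
                    self.deriv * other.value + self.value * other.deriv)

    __rmul__ = __mul__

def sin(x):
    x = Dual.lift(x)
    # Chain rule: d/dx sin(u) = cos(u) * u'
    return Dual(math.sin(x.value), math.cos(x.value) * x.deriv)

def derivative(f, x):
    """Evaluate f'(x) by seeding the input with derivative 1."""
    return f(Dual(x, 1.0)).deriv

def f(x):
    return x * x * x + 2 * x + sin(x)

# Analytically, f'(x) = 3x^2 + 2 + cos(x).
print(derivative(f, 1.5), 3 * 1.5 ** 2 + 2 + math.cos(1.5))
```

The function `f` is ordinary Python; the derivative falls out of running it on `Dual` inputs, which is the sense in which the program itself becomes differentiable.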

Gradient Descent

Gradient descent updates parameters by repeatedly stepping against the gradient of a loss function. Classical statistical models often do not need it: linear regression has a closed-form solution, and generalized linear models are typically fitted with Newton-type methods such as iteratively reweighted least squares. Gradient descent becomes the practical choice when the model is too large or too irregular for those methods. Differentiable programming builds on it directly, enabling end-to-end optimization of any program made of differentiable components, as in neural networks and machine learning pipelines.
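The sketch below fits the same simple linear regression two ways: with the closed-form least-squares solution and with gradient descent on the mean squared error. The data, the learning rate, and the step count are assumptions picked so the iteration converges on this toy problem; both function names are hypothetical.

```python
def ols_closed_form(xs, ys):
    """Least-squares slope and intercept from the usual closed-form formulas."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def ols_gradient_descent(xs, ys, lr=0.05, steps=5000):
    """Minimize mean squared error by plain gradient descent from (0, 0)."""
    slope = intercept = 0.0
    n = len(xs)
    for _ in range(steps):
        g_slope = g_intercept = 0.0
        for x, y in zip(xs, ys):
            residual = slope * x + intercept - y
            g_slope += 2 * residual * x / n
            g_intercept += 2 * residual / n
        slope -= lr * g_slope
        intercept -= lr * g_intercept
    return slope, intercept

# Toy data, roughly y = 2x + 1 with noise.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.1, 2.9, 5.2, 7.1, 8.8]

print(ols_closed_form(xs, ys))
print(ols_gradient_descent(xs, ys))
```

Both routes reach the same estimates here. The closed form is cheaper for a model this small, while the gradient loop is the one that carries over unchanged to models with no closed-form solution.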
