Differential privacy ensures individual data protection by adding controlled noise to datasets, preserving privacy while enabling accurate analysis. T-closeness enhances privacy by maintaining a distribution of sensitive attributes within equivalence classes close to the overall data distribution, reducing attribute disclosure risks. Explore the nuances of differential privacy and t-closeness to understand their applications in data security environments.

Why it is important

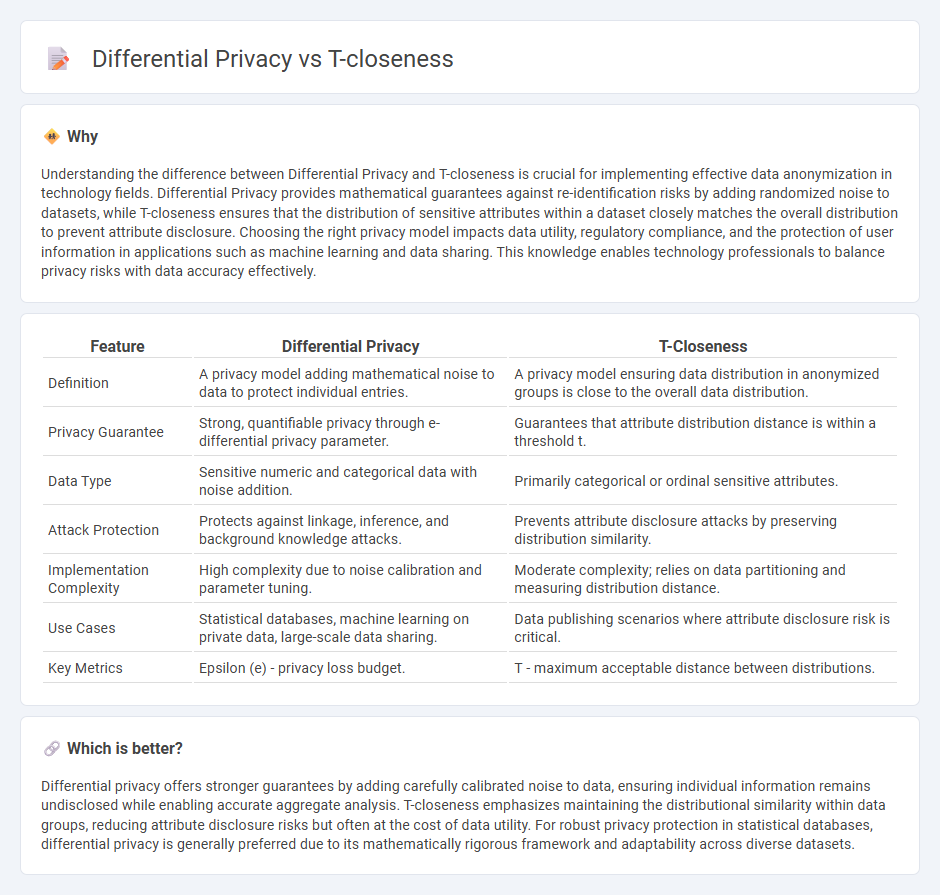

Understanding the difference between Differential Privacy and T-closeness is crucial for implementing effective data anonymization in technology fields. Differential Privacy provides mathematical guarantees against re-identification risks by adding randomized noise to datasets, while T-closeness ensures that the distribution of sensitive attributes within a dataset closely matches the overall distribution to prevent attribute disclosure. Choosing the right privacy model impacts data utility, regulatory compliance, and the protection of user information in applications such as machine learning and data sharing. This knowledge enables technology professionals to balance privacy risks with data accuracy effectively.

Comparison Table

| Feature | Differential Privacy | T-Closeness |

|---|---|---|

| Definition | A privacy model adding mathematical noise to data to protect individual entries. | A privacy model ensuring data distribution in anonymized groups is close to the overall data distribution. |

| Privacy Guarantee | Strong, quantifiable privacy through e-differential privacy parameter. | Guarantees that attribute distribution distance is within a threshold t. |

| Data Type | Sensitive numeric and categorical data with noise addition. | Primarily categorical or ordinal sensitive attributes. |

| Attack Protection | Protects against linkage, inference, and background knowledge attacks. | Prevents attribute disclosure attacks by preserving distribution similarity. |

| Implementation Complexity | High complexity due to noise calibration and parameter tuning. | Moderate complexity; relies on data partitioning and measuring distribution distance. |

| Use Cases | Statistical databases, machine learning on private data, large-scale data sharing. | Data publishing scenarios where attribute disclosure risk is critical. |

| Key Metrics | Epsilon (e) - privacy loss budget. | T - maximum acceptable distance between distributions. |

Which is better?

Differential privacy offers stronger guarantees by adding carefully calibrated noise to data, ensuring individual information remains undisclosed while enabling accurate aggregate analysis. T-closeness emphasizes maintaining the distributional similarity within data groups, reducing attribute disclosure risks but often at the cost of data utility. For robust privacy protection in statistical databases, differential privacy is generally preferred due to its mathematically rigorous framework and adaptability across diverse datasets.

Connection

Differential privacy and t-closeness are connected through their shared goal of enhancing data privacy in data publishing and analysis. Differential privacy provides a mathematical framework to limit the risk of identifying individuals by adding noise to datasets, while t-closeness ensures that the distribution of sensitive attributes in anonymized data closely resembles the original distribution to prevent attribute disclosure. Both techniques complement each other by combining noise addition and attribute distribution constraints to strengthen privacy protection in datasets.

Key Terms

Equivalence Class

T-closeness measures privacy by ensuring that the distribution of sensitive attributes within each equivalence class closely mirrors the overall dataset distribution, limiting attribute disclosure risks. Differential privacy, in contrast, provides formal privacy guarantees by introducing controlled noise to data queries, making it difficult to infer individual information regardless of equivalence class structure. Explore how these approaches balance data utility and privacy protection within equivalence classes for more insights.

Privacy Budget

T-closeness enhances data privacy by ensuring that the distribution of sensitive attributes within any group closely resembles the overall dataset, minimizing attribute disclosure risks without requiring a privacy budget. Differential privacy measures privacy loss using a quantifiable privacy budget (epsilon), controlling the amount of noise added to data queries to balance privacy and utility. Explore the intricacies of both models to understand their impact on privacy budget allocation and data protection strategies.

Distance Metric

T-closeness ensures privacy by maintaining the distribution distance, typically measured using Earth Mover's Distance, between sensitive attribute values in released data and the overall dataset. Differential privacy provides mathematical guarantees by adding calibrated noise to query results based on the sensitivity of the function, often utilizing metrics like the Laplace or Gaussian mechanism for distance in probability distributions. Explore detailed comparisons of distance metrics and their impact on privacy guarantees to understand the nuances between T-closeness and differential privacy.

Source and External Links

t-Closeness: Privacy Beyond k-Anonymity and l-Diversity (PDF) - t-closeness ensures that the distribution of a sensitive attribute in any equivalence class is no more than a threshold t away from its distribution in the whole table.

t-Closeness (Wikipedia) - t-closeness extends l-diversity by additionally requiring the distribution of sensitive attributes within groups to be close to the global distribution, reducing privacy risks from skew and inference attacks.

T-closeness (MOSTLY AI) - t-closeness is an anonymization technique that protects against attribute disclosure by demanding that the distribution of sensitive values within each group be similar to the overall dataset's distribution.

dowidth.com

dowidth.com