Edge inference processes data directly on devices like smartphones or IoT sensors, reducing latency and enhancing privacy by minimizing data transmission to central servers. Hybrid inference combines edge computing with cloud resources, balancing rapid local analysis and the power of extensive cloud-based processing to optimize performance and scalability. Explore the key differences and benefits of edge versus hybrid inference to determine the best fit for your technology needs.

Why it is important

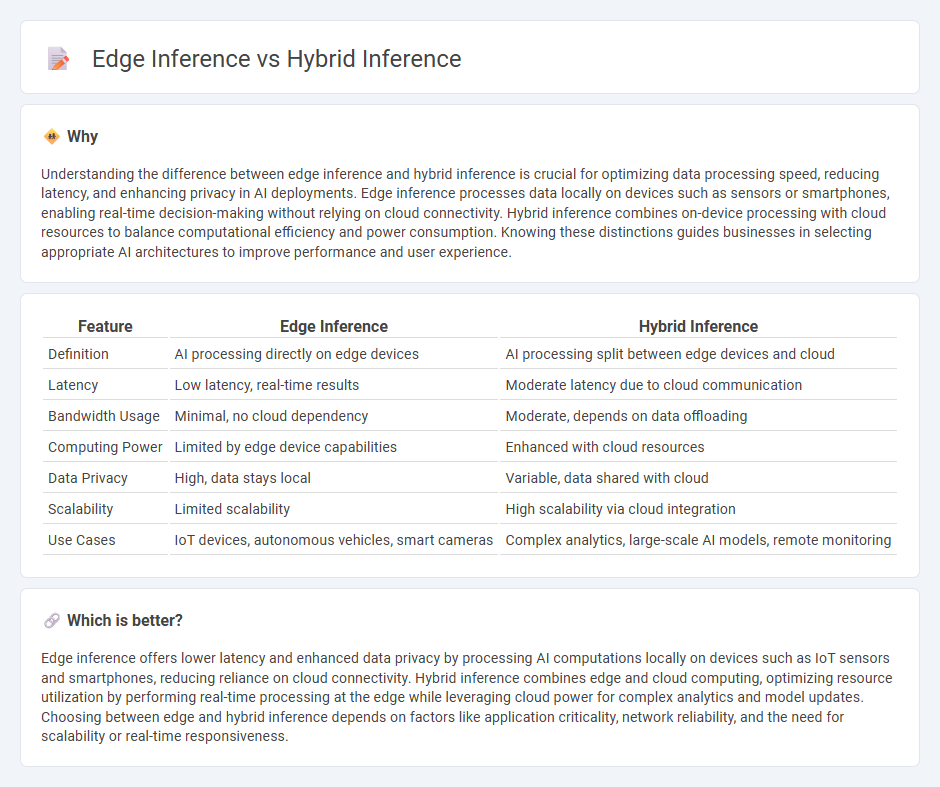

Understanding the difference between edge inference and hybrid inference is crucial for optimizing data processing speed, reducing latency, and enhancing privacy in AI deployments. Edge inference processes data locally on devices such as sensors or smartphones, enabling real-time decision-making without relying on cloud connectivity. Hybrid inference combines on-device processing with cloud resources to balance computational efficiency and power consumption. Knowing these distinctions guides businesses in selecting appropriate AI architectures to improve performance and user experience.

Comparison Table

| Feature | Edge Inference | Hybrid Inference |
|---|---|---|
| Definition | AI processing directly on edge devices | AI processing split between edge devices and cloud |
| Latency | Low latency, real-time results | Moderate latency due to cloud communication |
| Bandwidth Usage | Minimal, no cloud dependency | Moderate, depends on data offloading |
| Computing Power | Limited by edge device capabilities | Enhanced with cloud resources |
| Data Privacy | High, data stays local | Variable, data shared with cloud |
| Scalability | Limited scalability | High scalability via cloud integration |
| Use Cases | IoT devices, autonomous vehicles, smart cameras | Complex analytics, large-scale AI models, remote monitoring |

Which is better?

Edge inference offers lower latency and enhanced data privacy by processing AI computations locally on devices such as IoT sensors and smartphones, reducing reliance on cloud connectivity. Hybrid inference combines edge and cloud computing, optimizing resource utilization by performing real-time processing at the edge while leveraging cloud power for complex analytics and model updates. Choosing between edge and hybrid inference depends on factors like application criticality, network reliability, and the need for scalability or real-time responsiveness.
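The factors above can be turned into a rough rule of thumb. The sketch below is illustrative only: the `Workload` fields and the ordering of the checks are assumptions made for this example, not an established decision procedure.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """Hypothetical description of a deployment; every field is illustrative."""
    needs_realtime: bool        # must respond within a tight deadline
    network_reliable: bool      # a stable connection to the cloud is expected
    model_fits_on_device: bool  # the model runs within device memory and compute
    data_is_sensitive: bool     # raw data should not leave the device


def choose_architecture(w: Workload) -> str:
    """Return "edge" or "hybrid" using simple, assumed rules."""
    if not w.model_fits_on_device:
        # The device cannot host the full model, so cloud resources are needed.
        return "hybrid"
    if w.needs_realtime or w.data_is_sensitive or not w.network_reliable:
        # Tight deadlines, private data, or a flaky link all favor staying local.
        return "edge"
    # Otherwise the cloud's extra capacity and scalability can be used.
    return "hybrid"
```

For instance, a smart camera with an unreliable uplink and a model that fits on the device would map to `"edge"` under these rules, while a workload whose model is too large for the device would map to `"hybrid"`.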

Connection

Edge inference processes data locally on devices to reduce latency and bandwidth use, while hybrid inference combines edge and cloud computing to balance efficiency and computational power. By integrating local edge processing with cloud resources, hybrid inference optimizes real-time analytics and resource management across distributed systems. This synergy enhances AI performance in applications such as autonomous vehicles, IoT devices, and smart cities.

Key Terms

Latency

Hybrid inference combines cloud and edge processing to balance latency against computational resources: work handled on the device avoids the network round trip, while work sent to the cloud adds transmission delay in exchange for more compute. Edge inference processes all data locally on the device, offering significantly lower latency by minimizing communication with central servers. Explore how optimizing latency in these methods can enhance real-time AI applications.
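A back-of-the-envelope model can make the latency trade-off concrete. The sketch below assumes a simplified cost model (local compute time for the edge path; round trip plus upload time plus cloud compute for the cloud path). The function names and parameters are hypothetical, and real systems have additional costs such as queuing and serialization.

```python
def edge_latency_ms(device_compute_ms: float) -> float:
    """On-device path: no network hop, so latency is local compute time only."""
    return device_compute_ms


def cloud_latency_ms(payload_kb: float, uplink_kbps: float,
                     round_trip_ms: float, cloud_compute_ms: float) -> float:
    """Cloud path: network round trip + time to upload the input + cloud compute."""
    # kilobytes -> kilobits (x8), divided by kilobits/second gives seconds; x1000 gives ms.
    transfer_ms = payload_kb * 8 * 1000 / uplink_kbps
    return round_trip_ms + transfer_ms + cloud_compute_ms


def faster_path(device_compute_ms: float, payload_kb: float, uplink_kbps: float,
                round_trip_ms: float, cloud_compute_ms: float) -> str:
    """Return "edge" or "cloud", whichever has the lower estimated latency."""
    edge = edge_latency_ms(device_compute_ms)
    cloud = cloud_latency_ms(payload_kb, uplink_kbps, round_trip_ms, cloud_compute_ms)
    return "edge" if edge <= cloud else "cloud"
```

With illustrative numbers (a 100 KB input, an 8,000 kbps uplink, a 40 ms round trip, and 10 ms of cloud compute), the cloud path comes to 150 ms under this model, so a device that can finish in 60 ms wins, while a device that needs 400 ms does not.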

Connectivity

Hybrid inference combines cloud and edge computing and relies on a stable internet connection to offload complex tasks to powerful cloud servers while keeping real-time processing at the edge. Edge inference prioritizes local data processing, which suits devices with limited or intermittent connectivity because it reduces latency and removes the dependence on constant internet access. Explore the differences in connectivity demands and benefits between hybrid and edge inference for optimized AI deployment.
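One way to handle intermittent connectivity in a hybrid setup is to try the cloud model when a connection is available and fall back to the on-device model otherwise. The following is a minimal sketch of that idea; `run_hybrid`, `is_online`, and the two model callables are hypothetical placeholders rather than any specific SDK's API.

```python
from typing import Any, Callable, Tuple


def run_hybrid(x: Any,
               on_device: Callable[[Any], Any],
               cloud: Callable[[Any], Any],
               is_online: Callable[[], bool]) -> Tuple[Any, str]:
    """Run inference in the cloud when reachable, otherwise on the device.

    Returns the result together with the path that produced it.
    """
    if is_online():
        try:
            return cloud(x), "cloud"
        except ConnectionError:
            # The link dropped mid-request; fall through to the local model.
            pass
    return on_device(x), "device"
```

The same structure could be inverted to prefer the on-device model and use the cloud only when local inference is unavailable; which order is appropriate depends on the application.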

Resource Utilization

Hybrid inference combines cloud and edge computing to optimize resource utilization: complex tasks are offloaded to the cloud, while latency-sensitive processing runs locally on edge devices, which reduces bandwidth usage and improves real-time responsiveness. Edge inference relies on local processing power, minimizing data transfer and enabling faster decision-making, but it may be constrained by limited hardware resources. Explore how balancing these approaches can maximize efficiency and scalability in AI deployments.
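The split described above can be sketched as a simple planner that keeps latency-sensitive tasks on the device, runs other tasks locally only if they fit within a compute budget, and offloads the rest. The `Task` fields, the notion of an "ops budget", and the greedy ordering are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Task:
    """Hypothetical unit of inference work; all fields are illustrative."""
    name: str
    estimated_ops: float     # rough compute cost on the device
    payload_bytes: int       # data that would be uploaded if offloaded
    latency_sensitive: bool  # must run locally to stay responsive


def plan(tasks: List[Task], device_ops_budget: float) -> Tuple[List[str], List[str], int]:
    """Return (local task names, offloaded task names, bytes sent to the cloud)."""
    local: List[str] = []
    offloaded: List[str] = []
    uplink_bytes = 0
    remaining = device_ops_budget
    # Stable sort: latency-sensitive tasks claim the device budget first.
    for t in sorted(tasks, key=lambda t: not t.latency_sensitive):
        if t.latency_sensitive or t.estimated_ops <= remaining:
            local.append(t.name)
            remaining -= t.estimated_ops
        else:
            offloaded.append(t.name)
            uplink_bytes += t.payload_bytes
    return local, offloaded, uplink_bytes
```

The returned byte count gives a rough view of the bandwidth cost of a given split, which can be compared across different device budgets.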


dowidth.com
