Generative fill uses AI to complete or extend an image by synthesizing missing regions from the surrounding content, which supports creative editing and restoration work. Neural rendering uses deep neural networks to generate photorealistic images or animations from 3D models or limited input data, with applications in visual effects and interactive media. The sections below compare how the two approaches work and where each fits in digital content creation.

Why it is important

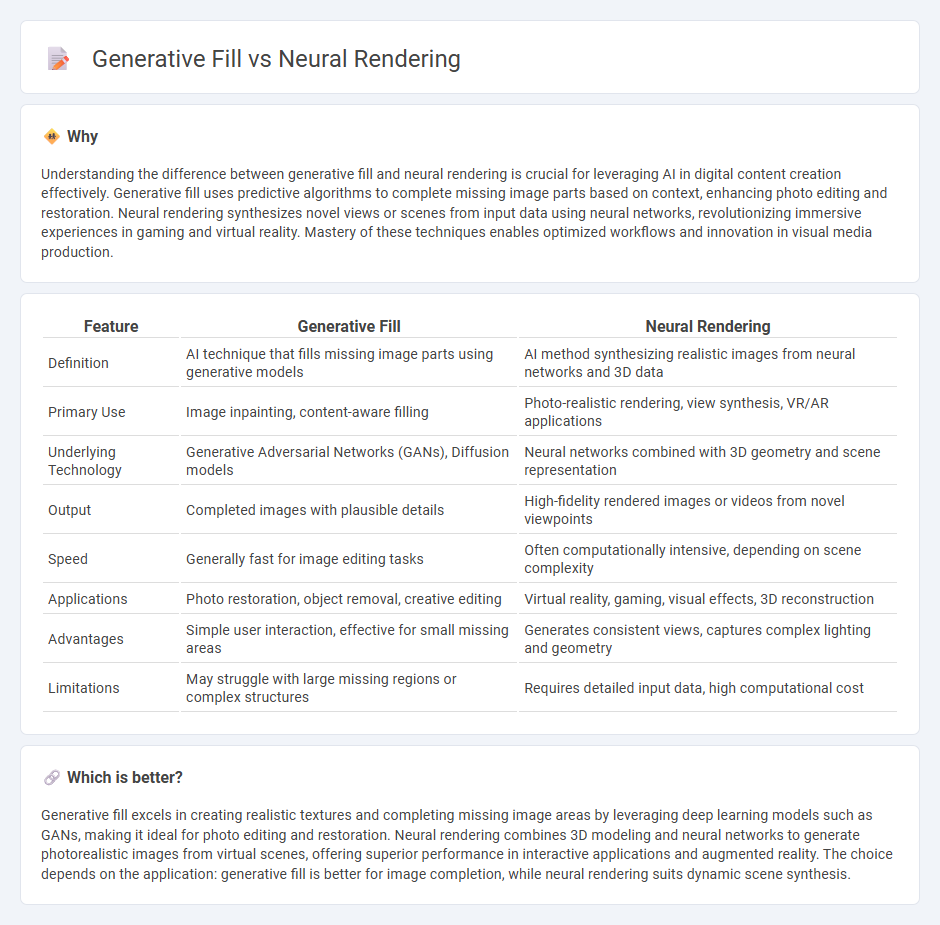

Understanding the difference between generative fill and neural rendering helps you pick the right AI tool for a given content creation task. Generative fill predicts the missing parts of an image from the surrounding context, which makes it useful for photo editing and restoration. Neural rendering uses neural networks to synthesize novel views or whole scenes from input data, which makes it useful for immersive experiences in gaming and virtual reality. Knowing which problem each technique solves avoids applying a 2D image-completion tool to a 3D view-synthesis problem, or the reverse.

Comparison Table

| Feature | Generative Fill | Neural Rendering |
|---|---|---|
| Definition | AI technique that fills missing image parts using generative models | AI method synthesizing realistic images from neural networks and 3D data |
| Primary Use | Image inpainting, content-aware filling | Photo-realistic rendering, view synthesis, VR/AR applications |
| Underlying Technology | Generative Adversarial Networks (GANs), diffusion models | Neural networks combined with 3D geometry and scene representation |
| Output | Completed images with plausible details | High-fidelity rendered images or videos from novel viewpoints |
| Speed | Generally fast for image editing tasks | Often computationally intensive, depending on scene complexity |
| Applications | Photo restoration, object removal, creative editing | Virtual reality, gaming, visual effects, 3D reconstruction |
| Advantages | Simple user interaction, effective for small missing areas | Generates consistent views, captures complex lighting and geometry |
| Limitations | May struggle with large missing regions or complex structures | Requires detailed input data, high computational cost |

Which is better?

Generative fill excels at creating realistic textures and completing missing image areas with deep learning models such as GANs or diffusion models, making it well suited to photo editing and restoration. Neural rendering combines 3D scene information with neural networks to generate photorealistic images of virtual scenes, which suits interactive applications and augmented reality where the viewpoint or scene changes. Neither is better in general; the choice depends on the application: generative fill for image completion, neural rendering for dynamic scene synthesis.

Connection

Generative fill completes missing or corrupted parts of an image by predicting content that matches the surrounding context. Neural rendering uses neural networks to generate photo-realistic images from abstract representations or incomplete data. Both rely on deep learning models to interpret and reconstruct visual information, and both are judged by how realistic and coherent the generated result looks. That shared foundation is why the two appear together in computer graphics, virtual reality, and image restoration.

Key Terms

Neural Networks

Neural rendering employs deep neural networks to synthesize photorealistic images from 3D scene representations, reproducing lighting and texture effects for virtual reality and computer graphics. Generative fill uses generative adversarial networks (GANs) or diffusion models to complete images by predicting missing pixels from learned patterns, which suits image restoration and editing tasks. In both cases the neural network supplies the learned prior; what differs is the input it works from: a 3D scene for neural rendering, a partially known 2D image for generative fill.

Image Synthesis

Neural rendering uses deep neural networks to create photorealistic images by modeling light transport and 3D scene properties, so the synthesized image stays consistent with the scene's geometry and textures. Generative fill, based on generative adversarial networks (GANs) or diffusion models, completes or expands an image by synthesizing its missing or occluded parts so that they stay coherent with the visible content. Both are forms of image synthesis, but neural rendering synthesizes a whole view of a scene, while generative fill synthesizes only the regions that are absent.
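To make "modeling light transport" more concrete, here is a minimal sketch of one formulation that is common in neural rendering work: front-to-back compositing of samples along a single camera ray, as used by radiance-field style methods. The article does not name a specific method, so treat this choice as an assumption. The function name `composite_ray` and the sample values are hypothetical; in a real system a trained network would predict the density and color at each sample point.

```python
import math


def composite_ray(densities, colors, step):
    """Composite samples along one camera ray, front to back.

    densities: non-negative density per sample (assumed network output).
    colors:    grayscale color per sample (assumed network output).
    step:      distance between consecutive samples along the ray.
    Returns (pixel_value, remaining_transmittance).
    """
    transmittance = 1.0  # fraction of light that has not been absorbed yet
    pixel = 0.0
    for sigma, color in zip(densities, colors):
        alpha = 1.0 - math.exp(-sigma * step)  # opacity of this ray segment
        pixel += transmittance * alpha * color
        transmittance *= 1.0 - alpha
    return pixel, transmittance


# Hypothetical ray: two samples of empty space, then a dense bright surface.
pixel, remaining = composite_ray(
    densities=[0.0, 0.0, 50.0],
    colors=[0.2, 0.2, 0.9],
    step=1.0,
)

# Hypothetical ray that hits nothing: the pixel stays black.
empty_pixel, empty_remaining = composite_ray([0.0, 0.0], [0.7, 0.7], 1.0)
```

In the first ray the empty samples contribute nothing, so the pixel takes essentially the color of the dense surface. Rendering a full image repeats this for one ray per pixel, which is one reason the approach can be computationally intensive.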

Inpainting

Inpainting is the task of filling missing or corrupted regions of an image, and it is the core of generative fill: a context-aware generative model produces content for the masked region that matches the surrounding textures and blends with them across the boundary. Neural rendering addresses a different problem, reconstructing and synthesizing images by modeling scene geometry and lighting, although it can also produce plausible content for parts of a scene that the input data did not cover. For image editing and restoration, inpainting through generative fill is the more direct tool.
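The idea of filling a masked region from its surrounding context can be sketched without any neural network. The example below is a classical diffusion-style fill that repeatedly averages neighboring pixels; it is a deliberately simple stand-in, not the GAN or diffusion model a generative fill tool would use, and the function name `fill_from_context` is hypothetical. It shows the inpainting contract only: known pixels stay fixed, masked pixels are derived from their surroundings.

```python
def fill_from_context(image, mask, iterations=200):
    """Fill masked pixels by repeatedly averaging their 4-neighbours.

    image: list of rows of floats (grayscale).
    mask:  same shape; True marks a missing pixel to be filled.
    Known pixels are never modified.
    """
    height, width = len(image), len(image[0])
    # Missing pixels start at 0.0; known pixels keep their values.
    current = [
        [0.0 if mask[y][x] else float(image[y][x]) for x in range(width)]
        for y in range(height)
    ]
    offsets = ((-1, 0), (1, 0), (0, -1), (0, 1))
    for _ in range(iterations):
        updated = [row[:] for row in current]
        for y in range(height):
            for x in range(width):
                if not mask[y][x]:
                    continue  # only masked pixels are rewritten
                neighbours = [
                    current[y + dy][x + dx]
                    for dy, dx in offsets
                    if 0 <= y + dy < height and 0 <= x + dx < width
                ]
                updated[y][x] = sum(neighbours) / len(neighbours)
        current = updated
    return current


# A 3x3 image of constant brightness 0.5 whose centre pixel is missing.
image = [
    [0.5, 0.5, 0.5],
    [0.5, 0.0, 0.5],
    [0.5, 0.5, 0.5],
]
mask = [
    [False, False, False],
    [False, True, False],
    [False, False, False],
]
filled = fill_from_context(image, mask)
```

Neighbor averaging can only produce smooth blends, so it works for small holes in flat regions and fails on texture or structure. A generative model replaces the averaging step with learned predictions, which is what lets generative fill synthesize plausible detail rather than a blur.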

Source and External Links

CVPR 2020 - Tutorial on Neural Rendering - Neural rendering combines generative machine learning with computer graphics techniques to enable photo-realistic, controllable generation of images and videos by explicitly or implicitly manipulating scene properties like lighting, pose, geometry, and appearance.

NVIDIA RTX Neural Rendering Introduces Next Era of AI-Powered Graphics Innovation - NVIDIA's RTX Kit leverages neural rendering to enhance real-time ray tracing, allowing for massively complex geometry, improved lighting, and lifelike character visuals in games and virtual environments, all while optimizing performance and reducing artifacts.

COMP 590/790: Neural Rendering - Neural rendering broadly refers to techniques that integrate deep learning into traditional rendering pipelines (such as ray tracing and image-based rendering) to synthesize novel views or manipulate visual content using learned models.
