Neuromorphic computing mimics the architecture and functionality of the human brain to achieve energy-efficient processing, while high performance computing (HPC) focuses on maximizing computational power through parallel processing and large-scale data management. Neuromorphic systems excel in pattern recognition tasks and adaptive learning, whereas HPC platforms dominate in simulations, big data analytics, and scientific computations. Explore the differences, use cases, and future potential of neuromorphic computing versus high performance computing to understand their impact on technology advancement.

Why it is important

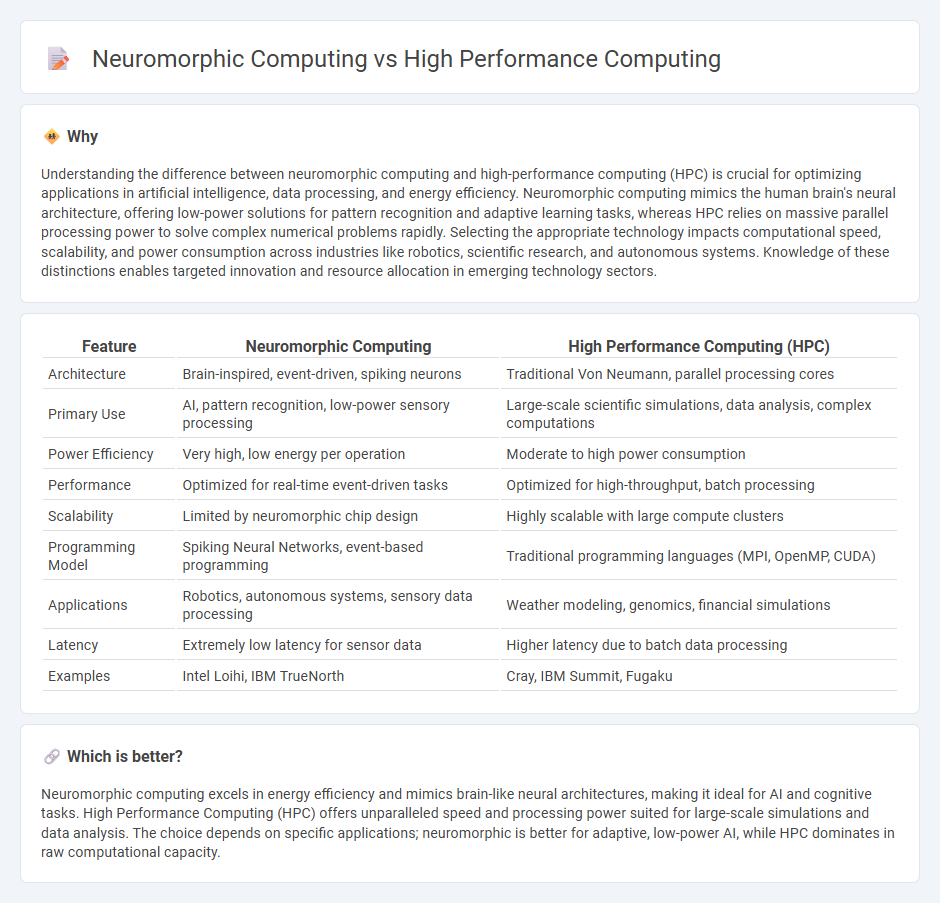

Understanding the difference between neuromorphic computing and high-performance computing (HPC) is crucial for optimizing applications in artificial intelligence, data processing, and energy efficiency. Neuromorphic computing mimics the human brain's neural architecture, offering low-power solutions for pattern recognition and adaptive learning tasks, whereas HPC relies on massive parallel processing power to solve complex numerical problems rapidly. Selecting the appropriate technology impacts computational speed, scalability, and power consumption across industries like robotics, scientific research, and autonomous systems. Knowledge of these distinctions enables targeted innovation and resource allocation in emerging technology sectors.

Comparison Table

| Feature | Neuromorphic Computing | High Performance Computing (HPC) |
|---|---|---|
| Architecture | Brain-inspired, event-driven, spiking neurons | Conventional von Neumann design with many parallel processing cores |
| Primary Use | AI, pattern recognition, low-power sensory processing | Large-scale scientific simulations, data analysis, complex numerical computations |
| Power Efficiency | Very high; low energy per operation | Moderate; high total power consumption |
| Performance | Optimized for real-time, event-driven tasks | Optimized for high-throughput batch processing |
| Scalability | Limited by current neuromorphic chip designs | Highly scalable across large compute clusters |
| Programming Model | Spiking neural networks, event-based programming | Conventional languages (C, C++, Fortran) with parallel frameworks such as MPI, OpenMP, and CUDA |
| Applications | Robotics, autonomous systems, sensory data processing | Weather modeling, genomics, financial simulations |
| Latency | Very low latency for sensor data | Higher latency for batch workloads |
| Examples | Intel Loihi, IBM TrueNorth | Cray supercomputers, IBM Summit, Fugaku |
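Much of the efficiency gap in the table comes from event-driven operation: a spiking system only does work when an input actually fires. A minimal sketch, assuming a single fully connected layer of 1,000 inputs and 1,000 outputs and a hypothetical 2% input spike rate, counts synaptic operations for a dense, clock-driven update versus an event-driven update. The function names and parameters are illustrative, and the counts are not measurements of Loihi, TrueNorth, or any HPC system.

```python
import random


def dense_ops(n_inputs: int, n_outputs: int) -> int:
    """Clock-driven update: every input-output weight is used every step."""
    return n_inputs * n_outputs


def event_driven_ops(spikes: list[bool], n_outputs: int) -> int:
    """Event-driven update: only inputs that spiked propagate to the outputs."""
    active = sum(spikes)  # number of input spike events this step
    return active * n_outputs


def simulate(n_inputs=1000, n_outputs=1000, spike_rate=0.02, steps=100, seed=0):
    """Return (dense_total, event_total) synaptic operation counts over `steps`."""
    rng = random.Random(seed)  # fixed seed so the comparison is reproducible
    dense_total = 0
    event_total = 0
    for _ in range(steps):
        # Each input independently spikes with probability `spike_rate`.
        spikes = [rng.random() < spike_rate for _ in range(n_inputs)]
        dense_total += dense_ops(n_inputs, n_outputs)
        event_total += event_driven_ops(spikes, n_outputs)
    return dense_total, event_total


if __name__ == "__main__":
    dense, event = simulate()
    print(f"dense ops: {dense:,}  event-driven ops: {event:,}  ratio: {dense / event:.1f}x")
```

With sparse activity the event-driven count shrinks roughly in proportion to the spike rate; at a spike rate of 1.0 the two counts are identical, which is why the advantage depends on the workload being sparse.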

Which is better?

Neither approach is better in general. Neuromorphic computing excels in energy efficiency and mimics brain-like neural architectures, making it well suited to AI and cognitive tasks such as pattern recognition. High Performance Computing (HPC) offers far greater raw speed and numerical processing power, suited to large-scale simulations and data analysis. The choice depends on the application: neuromorphic hardware fits adaptive, low-power AI, while HPC dominates where raw computational capacity matters.

Connection

Neuromorphic computing mimics the neural architecture of the human brain to process certain tasks with high energy efficiency, and these brain-inspired designs can complement high performance computing (HPC) systems, for example as low-power, low-latency accelerators for AI workloads. Researchers are exploring how such architectures could help HPC handle massive datasets and some classes of simulation more efficiently than conventional hardware alone. Integrating neuromorphic principles into HPC frameworks is expected to advance artificial intelligence, machine learning, and real-time data analysis by improving computational efficiency and scalability.

Key Terms

**High Performance Computing:**

High Performance Computing (HPC) involves the use of supercomputers and parallel processing techniques to solve complex scientific and engineering problems at extraordinary speeds, handling massive datasets with optimized performance and scalability. HPC architectures typically rely on CPUs and GPUs designed for precise numerical computation, enabling simulations, cryptographic analysis, and big data analytics across industries including weather forecasting, genomics, and financial modeling. Explore advanced HPC technologies and frameworks to understand its transformative impact on computational science and innovation.
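Parallel processing in HPC usually means splitting one large problem across many workers and then combining their partial results. A minimal sketch, assuming a toy workload (estimating π by integrating 4/(1+x²) over [0, 1] with the midpoint rule) and Python threads standing in for cluster nodes; `partial_integral` and `parallel_pi` are hypothetical names used only for illustration.

```python
import math
from concurrent.futures import ThreadPoolExecutor


def partial_integral(start: int, stop: int, n: int) -> float:
    """Midpoint-rule sum of 4/(1+x^2) over sub-intervals [start, stop) out of n."""
    h = 1.0 / n
    total = 0.0
    for i in range(start, stop):
        x = (i + 0.5) * h  # midpoint of sub-interval i
        total += 4.0 / (1.0 + x * x)
    return total * h


def parallel_pi(n: int = 400_000, workers: int = 4) -> float:
    """Estimate pi by splitting the integration domain across `workers`."""
    # Domain decomposition: one contiguous chunk of sub-intervals per worker.
    starts = [w * n // workers for w in range(workers)]
    stops = [(w + 1) * n // workers for w in range(workers)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(partial_integral, starts, stops, [n] * workers))
    # Reduction step: combine the per-worker partial sums.
    return sum(partials)


if __name__ == "__main__":
    estimate = parallel_pi()
    print(f"pi approx {estimate:.10f} (error {abs(estimate - math.pi):.2e})")
```

On a real cluster the same scatter, compute, and reduce pattern would typically use MPI across nodes (for example `MPI_Reduce` to combine partial sums). In CPython, threads will not speed up this pure-Python loop because of the global interpreter lock, so the sketch shows the structure of the computation, not its performance.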

**Parallel Processing:**

High performance computing (HPC) utilizes extensive parallel processing through multiple processors or cores to achieve significant computational power for complex simulations and data analysis. Neuromorphic computing mimics the human brain's neural networks, enabling parallel processing at the level of neurons and synapses, which allows for energy-efficient, adaptive computation especially suited for AI tasks. Explore the nuances of parallel processing differences between HPC and neuromorphic systems to better understand their applications and advantages.
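Neuron-level parallelism can be sketched with the leaky integrate-and-fire (LIF) model, a common simplified spiking neuron. This is a discrete-time illustration with hypothetical parameter values (`leak`, `threshold`), not the neuron model of any particular chip. Each neuron's state depends only on its own input, which is why neuromorphic hardware can update many neurons in parallel.

```python
def simulate_lif(inputs, leak=0.9, threshold=1.0, v_reset=0.0):
    """Discrete-time leaky integrate-and-fire neuron.

    Each step the membrane potential decays by the factor `leak`, integrates
    the input current, and emits a spike (then resets) when it reaches
    `threshold`. Returns the list of time steps at which the neuron spiked.
    """
    v = v_reset
    spike_times = []
    for t, current in enumerate(inputs):
        v = leak * v + current  # decay toward 0, then integrate the input
        if v >= threshold:      # threshold crossing produces a spike event
            spike_times.append(t)
            v = v_reset         # reset the potential after firing
    return spike_times


def simulate_population(input_currents, steps, **kwargs):
    """Simulate independent neurons, each driven by its own constant current.

    The neurons share no state, so on neuromorphic hardware these updates
    would run in parallel; here they simply run one after another.
    """
    return [simulate_lif([c] * steps, **kwargs) for c in input_currents]


if __name__ == "__main__":
    for current, spikes in zip([0.05, 0.3, 1.0], simulate_population([0.05, 0.3, 1.0], 12)):
        print(f"input {current}: spikes at steps {spikes}")
```

With a constant input of 0.3 the neuron integrates for a few steps and fires every fourth step, while an input of 0.05 settles below threshold (the leak balances it at 0.5) and never spikes.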

**Supercomputers:**

Supercomputers use high performance computing (HPC) architectures built from conventional CPUs and GPUs, relying on high clock speeds and massive parallelism to excel at large-scale numerical simulations and data-intensive tasks. Neuromorphic computing, inspired by the human brain's neural structure, offers energy-efficient processing through spiking neural networks and an asynchronous, event-driven architecture, but currently trails HPC in raw speed for scientific computations. Explore the advancements and trade-offs between these computing paradigms in the realm of supercomputers to better understand their future impact on computational science.
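The asynchronous, event-driven style of neuromorphic processing can be illustrated with a small software simulation that pulls spike deliveries from a priority queue in timestamp order, so computation happens only when a spike arrives rather than on every tick of a global clock. The network, weights, delays, and the `run_event_driven` function are hypothetical; there is no leak, and this does not model how Loihi or TrueNorth schedule events in hardware.

```python
import heapq


def run_event_driven(synapses, input_events, threshold=1.0, t_max=100.0):
    """Event-driven spiking simulation (simplified sketch, no leak).

    synapses: dict mapping neuron id -> list of (target id, weight, delay)
    input_events: list of (arrival_time, target id, weight) external inputs
    Returns [(time, neuron id), ...] for every spike, in time order.
    """
    potential = {}              # membrane potential per neuron, created lazily
    queue = list(input_events)  # pending spike deliveries
    heapq.heapify(queue)        # ordered by arrival time
    spikes = []
    while queue:
        t, neuron, weight = heapq.heappop(queue)
        if t > t_max:
            break
        # Only a neuron that receives an event does any work.
        potential[neuron] = potential.get(neuron, 0.0) + weight
        if potential[neuron] >= threshold:
            potential[neuron] = 0.0  # reset after firing
            spikes.append((t, neuron))
            # Schedule delivery of this spike to each downstream neuron.
            for target, w, delay in synapses.get(neuron, []):
                heapq.heappush(queue, (t + delay, target, w))
    return spikes


if __name__ == "__main__":
    network = {
        "a": [("b", 1.0, 2.0), ("c", 0.5, 5.0)],
        "b": [("c", 0.6, 1.0)],
    }
    print(run_event_driven(network, [(0.0, "a", 1.0)]))
```

Neuron `c` fires only at t = 5.0, when the second input arrives and its accumulated potential (0.6 + 0.5) crosses the threshold; between events, nothing is computed.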

Source and External Links

What is High-Performance Computing (HPC)? | NVIDIA Glossary - High-performance computing (HPC) is the use of groups of advanced computer systems to perform complex simulations, computations, and data analysis beyond the capabilities of standard commercial computers, often leveraging parallel processing and high-speed GPUs.

What is High Performance Computing | NetApp - High performance computing (HPC) enables processing data and performing complex calculations at extremely high speeds, with supercomputers combining thousands of compute nodes for parallel processing to tackle massive datasets and real-time analytics.

What Is High-Performance Computing (HPC)? - IBM - HPC uses clusters of powerful processors working in parallel to process massive, multidimensional data sets and solve complex problems (such as DNA sequencing, AI algorithms, and real-time simulations) at speeds far exceeding traditional computing systems.

dowidth.com