Graph Neural Networks (GNNs) process data structured as graphs and capture the relationships and dependencies between nodes. Fully Connected Neural Networks (FCNNs), by contrast, treat each input as a flat vector with no inherent connectivity. GNNs are widely applied in social network analysis, molecular biology, and recommendation systems, where relational data is central, while FCNNs are typically used for standard classification and regression tasks on fixed-size inputs.

Why it is important

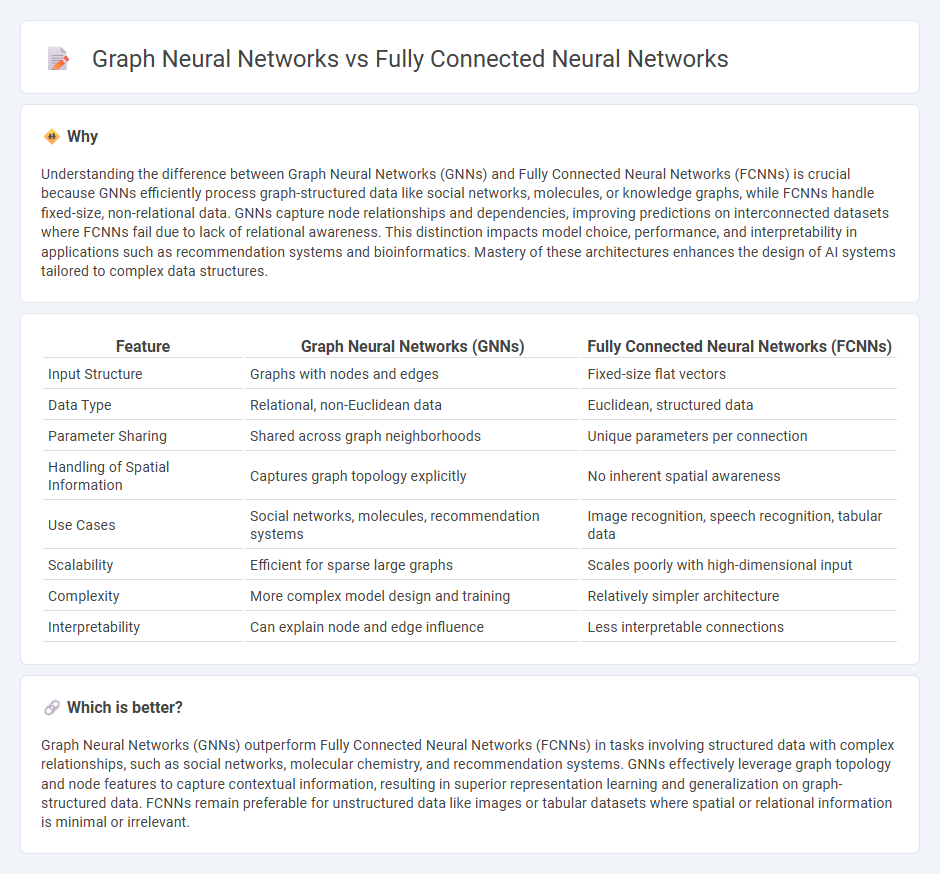

The difference between Graph Neural Networks (GNNs) and Fully Connected Neural Networks (FCNNs) matters because the two are built for different kinds of input. GNNs process graph-structured data such as social networks, molecules, or knowledge graphs, while FCNNs handle fixed-size, non-relational data. Because GNNs capture node relationships and dependencies, they tend to give better predictions on interconnected datasets, where FCNNs struggle because they have no built-in notion of which inputs are related. This distinction affects model choice, performance, and interpretability in applications such as recommendation systems and bioinformatics.

Comparison Table

| Feature | Graph Neural Networks (GNNs) | Fully Connected Neural Networks (FCNNs) |
|---|---|---|
| Input Structure | Graphs with nodes and edges | Fixed-size flat vectors |
| Data Type | Relational, non-Euclidean data | Fixed-size feature vectors with no explicit relational structure |
| Parameter Sharing | Same weights reused across all nodes and their neighborhoods | Unique parameter per connection |
| Handling of Structural Information | Uses graph topology explicitly | No built-in notion of structure among inputs |
| Use Cases | Social networks, molecules, recommendation systems | Tabular data, standard classification and regression; simple image or speech tasks with fixed-size inputs |
| Scalability | Efficient for large sparse graphs | Parameter count grows quickly with input dimension |
| Complexity | More complex model design and training | Relatively simple architecture |
| Interpretability | Predictions can be attributed to influential nodes and edges | Dense weights are harder to attribute to specific inputs |

Which is better?

Neither architecture is better in general; the answer depends on the data. GNNs typically outperform FCNNs on tasks where the data has explicit relational structure, such as social networks, molecular chemistry, and recommendation systems. They use graph topology together with node features to capture context, which tends to give better representations and generalization on graph-structured data. FCNNs remain a reasonable choice for fixed-size inputs with no explicit graph structure, such as tabular datasets or flattened images, where relational information is minimal or irrelevant.

Connection

Graph Neural Networks (GNNs) reuse the building blocks of Fully Connected Neural Networks (FCNNs) and adapt them to graph-structured data, which lets them model relationships and interactions between nodes. FCNNs, in contrast, process fixed-size input vectors with no notion of which entries are connected. Both architectures rely on layers of neurons and weight matrices optimized through backpropagation. What GNNs add is a message-passing step: each node aggregates information from its neighbors before the learned weights are applied, which improves performance on tasks like node classification and link prediction. This shared foundation lets GNNs apply FCNN principles to complex, non-Euclidean data domains.
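
The relationship between the two layer types can be sketched in a few lines of plain Python. This is a minimal illustration rather than any particular library's implementation: the function names, the toy graph, and the choice of mean aggregation with separate self and neighbor weight matrices are all assumptions made for the example.

```python
# Minimal sketch: a dense (fully connected) layer next to a message-passing layer.
# Names, toy data, and the mean-aggregation rule are illustrative assumptions.

def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def relu(v):
    return [max(0.0, a) for a in v]

def dense_layer(W, x):
    """FCNN layer: every output unit sees every input feature."""
    return relu(matvec(W, x))

def message_passing_layer(W_self, W_neigh, features, neighbors):
    """GNN layer: each node combines its own features with the mean of its
    neighbors' features. The same two weight matrices are reused for every node."""
    out = {}
    for node, x in features.items():
        nbrs = neighbors.get(node, [])
        if nbrs:
            mean = [sum(features[n][d] for n in nbrs) / len(nbrs)
                    for d in range(len(x))]
        else:
            mean = [0.0] * len(x)  # isolated node: no incoming messages
        combined = [a + b for a, b in zip(matvec(W_self, x), matvec(W_neigh, mean))]
        out[node] = relu(combined)
    return out

# Toy path graph a - b - c with 2-dimensional node features.
features = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]}
neighbors = {"a": ["b"], "b": ["a", "c"], "c": ["b"]}
identity = [[1.0, 0.0], [0.0, 1.0]]

hidden = message_passing_layer(identity, identity, features, neighbors)
dense_out = dense_layer(identity, [1.0, -2.0])
```

With identity weights, each node's output is simply its own features plus the average of its neighbors' features, so the effect of the graph structure is easy to read off. In a trained model the two matrices would be learned by backpropagation, exactly as the weights of `dense_layer` would be.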

Key Terms

Layer connectivity

Fully Connected Neural Networks (FCNNs) use dense connections: each neuron in one layer links to every neuron in the next. This allows every feature to interact with every other feature, but the number of weights grows with the product of the layer sizes, which raises computational cost and the risk of overfitting. Graph Neural Networks (GNNs) use sparse connectivity that follows the graph structure, so each node exchanges information only with its neighbors. This is more efficient on large sparse graphs and matches the relational structure of the data.
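
To make the cost difference concrete, the sketch below counts trainable parameters for one layer of each kind. It assumes a hypothetical setup: the FCNN receives the whole graph flattened into one vector, and the GNN layer uses one shared weight matrix for a node's own features, one for its aggregated neighbors, and a bias. The function names and sizes are illustrative only.

```python
# Rough parameter counts for a single layer, under the assumptions stated above.

def dense_param_count(n_in, n_out):
    """Weights plus biases of one fully connected layer."""
    return n_in * n_out + n_out

def flattened_graph_dense_params(num_nodes, feat_dim, hidden_dim):
    """Dense layer applied to all node features concatenated into one vector."""
    return dense_param_count(num_nodes * feat_dim, num_nodes * hidden_dim)

def message_passing_params(feat_dim, hidden_dim):
    """Two shared matrices (self and neighbor) plus one bias vector.
    The count does not depend on the number of nodes or edges."""
    return 2 * feat_dim * hidden_dim + hidden_dim

# Hypothetical sizes: 1,000 nodes, 16 input features, 32 hidden features per node.
dense_total = flattened_graph_dense_params(1000, 16, 32)
gnn_total = message_passing_params(16, 32)
print(dense_total, gnn_total)
```

Under these assumptions the dense layer needs about half a billion parameters, while the message-passing layer needs roughly a thousand, because its weights are shared across nodes rather than tied to specific positions in the input.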

Node relationships

Fully Connected Neural Networks (FCNNs) treat the entries of an input vector as features with no explicit relationships between them, which limits their effectiveness on graph-structured data. Graph Neural Networks (GNNs) use node connectivity and edge attributes to capture these relationships and propagate information across the graph topology. With each additional layer, a node's representation can draw on nodes one hop further away, which benefits tasks like node classification and link prediction.
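
How far information propagates can be illustrated without any learning at all. The sketch below, using a hypothetical helper `receptive_field` and a made-up path graph, computes which nodes can influence a given node after a number of neighbor-aggregation rounds, assuming each round passes messages along one hop.

```python
# Sketch: which nodes can influence `node` after `num_layers` rounds of
# one-hop message passing. Helper name and toy graph are illustrative.

def receptive_field(neighbors, node, num_layers):
    reached = {node}
    frontier = {node}
    for _ in range(num_layers):
        # Expand one hop outward, keeping only newly reached nodes.
        frontier = {n for f in frontier for n in neighbors.get(f, [])} - reached
        reached |= frontier
    return reached

# Path graph a - b - c - d.
neighbors = {"a": ["b"], "b": ["a", "c"], "c": ["b", "d"], "d": ["c"]}

one_hop = receptive_field(neighbors, "a", 1)
two_hop = receptive_field(neighbors, "a", 2)
three_hop = receptive_field(neighbors, "a", 3)
```

On this path graph, node `a` sees only `b` after one round and needs three rounds before node `d` can affect its representation. An FCNN has no comparable notion of distance, since every input feature reaches every unit in the first layer.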

Data structure

Fully Connected Neural Networks (FCNNs) process data as fixed-size vectors, so a graph must first be flattened, padded, or truncated, and the result depends on an arbitrary node ordering. Graph Neural Networks (GNNs) operate directly on nodes and edges to model interactions and dependencies, which lets them learn representations on irregular graphs of varying size, such as social networks or molecules.
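
The difference in data handling can be shown with a small example. The sketch below is illustrative only: `flatten_for_fcnn` and `sum_readout` are hypothetical helpers, and sum pooling is used as one common order-independent way to summarize node features, not as the only option.

```python
# Sketch: fixed-size flattening versus an order-independent graph summary.
# Helper names and the toy graph are illustrative assumptions.

def flatten_for_fcnn(node_features, order, max_nodes):
    """Concatenate node features in the given order, then zero-pad to a fixed
    length. The result changes if the node order changes."""
    dim = len(next(iter(node_features.values())))
    vec = []
    for name in order[:max_nodes]:
        vec.extend(node_features[name])
    vec.extend([0.0] * (max_nodes * dim - len(vec)))
    return vec

def sum_readout(node_features):
    """Sum features over all nodes: same result for any node order and
    defined for any number of nodes."""
    dim = len(next(iter(node_features.values())))
    return [sum(f[d] for f in node_features.values()) for d in range(dim)]

# Toy graph: node features plus an edge list. The edge list has no natural
# place in the flat vector, so flattening simply discards it.
node_features = {"a": [1.0, 0.0], "b": [0.0, 2.0], "c": [3.0, 1.0]}
edges = [("a", "b"), ("b", "c")]

flat_abc = flatten_for_fcnn(node_features, ["a", "b", "c"], max_nodes=4)
flat_cab = flatten_for_fcnn(node_features, ["c", "a", "b"], max_nodes=4)
summary = sum_readout(node_features)
```

The two flattened vectors describe the same graph but differ entry by entry, so an FCNN would treat them as different inputs. The summed readout is identical for both orderings, which is the property GNN-style aggregation relies on.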
