Federated learning trains a shared model across many devices by aggregating their model updates rather than their raw data, which helps preserve privacy. Edge computing, by contrast, processes data on or near the device that produced it to reduce latency and bandwidth usage. Both aim to improve data handling and real-time decision-making in distributed networks: federated learning focuses on collaborative AI model development, while edge computing emphasizes computational efficiency at the network's edge.

Why it is important

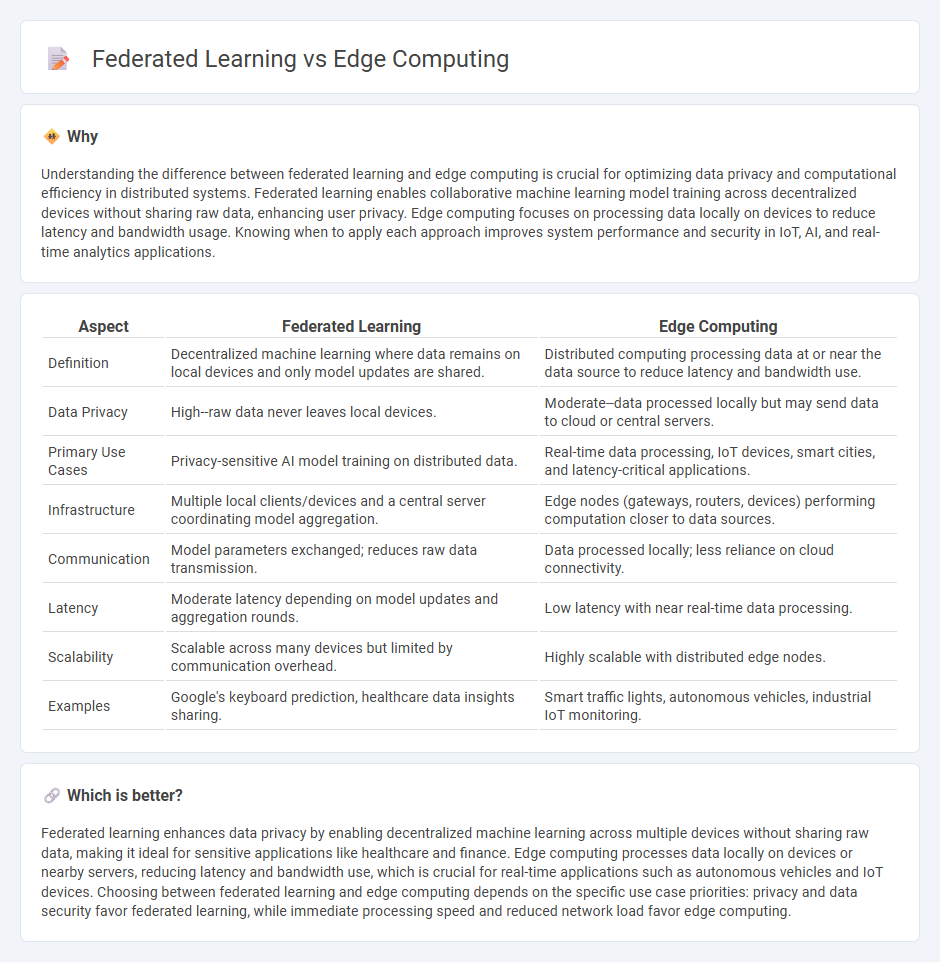

Understanding the difference between federated learning and edge computing is crucial for optimizing data privacy and computational efficiency in distributed systems. Federated learning enables collaborative machine learning model training across decentralized devices without sharing raw data, enhancing user privacy. Edge computing focuses on processing data locally on devices to reduce latency and bandwidth usage. Knowing when to apply each approach improves system performance and security in IoT, AI, and real-time analytics applications.

Comparison Table

| Aspect | Federated Learning | Edge Computing |
|---|---|---|
| Definition | Decentralized machine learning where data remains on local devices and only model updates are shared. | Distributed computing processing data at or near the data source to reduce latency and bandwidth use. |
| Data Privacy | High: raw data never leaves local devices. | Moderate: data processed locally but may send data to cloud or central servers. |
| Primary Use Cases | Privacy-sensitive AI model training on distributed data. | Real-time data processing, IoT devices, smart cities, and latency-critical applications. |
| Infrastructure | Multiple local clients/devices and a central server coordinating model aggregation. | Edge nodes (gateways, routers, devices) performing computation closer to data sources. |
| Communication | Model parameters exchanged; reduces raw data transmission. | Data processed locally; less reliance on cloud connectivity. |
| Latency | Moderate latency depending on model updates and aggregation rounds. | Low latency with near real-time data processing. |
| Scalability | Scalable across many devices but limited by communication overhead. | Highly scalable with distributed edge nodes. |
| Examples | Google's keyboard prediction, healthcare data insights sharing. | Smart traffic lights, autonomous vehicles, industrial IoT monitoring. |

Which is better?

Federated learning enhances data privacy by enabling decentralized machine learning across multiple devices without sharing raw data, making it ideal for sensitive applications like healthcare and finance. Edge computing processes data locally on devices or nearby servers, reducing latency and bandwidth use, which is crucial for real-time applications such as autonomous vehicles and IoT devices. Choosing between federated learning and edge computing depends on the specific use case priorities: privacy and data security favor federated learning, while immediate processing speed and reduced network load favor edge computing.

Connection

Federated learning builds on edge computing: training runs locally on devices, so raw data stays where it was generated and only model updates travel to the aggregating server. Edge computing provides the infrastructure to run machine learning tasks near data sources, and the server combines the distributed model updates without receiving the raw data itself. Together, the two support real-time analytics and scalable AI applications across IoT devices and mobile networks.

Key Terms

Data Localization

Edge computing processes data on devices close to the data source, which lowers latency and improves privacy by reducing how much sensitive information must be transmitted to centralized servers. Federated learning lets multiple devices collaboratively train a machine learning model without sharing raw data, so the data stays localized while the model still learns from every participant's dataset.
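To make the data-localization idea concrete, here is a minimal sketch of the client side of federated training. The `LocalClient` class, the one-parameter model `y ~ w * x`, and the learning-rate and epoch values are all illustrative assumptions, not part of any particular framework. The point is only that the method returns a weight and a sample count, never the samples themselves.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class LocalClient:
    """Hypothetical device that keeps its (x, y) samples private."""
    xs: List[float]
    ys: List[float]

    def local_update(self, w: float, lr: float = 0.01, epochs: int = 50) -> Tuple[float, int]:
        """Run gradient descent on y ~ w * x using only on-device data.

        Returns the updated weight and the local sample count; the raw
        samples are never part of the return value.
        """
        n = len(self.xs)
        for _ in range(epochs):
            # Gradient of the mean squared error with respect to w.
            grad = sum(2 * (w * x - y) * x for x, y in zip(self.xs, self.ys)) / n
            w -= lr * grad
        return w, n


# Illustrative data following y = 2x; a real device would hold user data here.
client = LocalClient(xs=[1.0, 2.0, 3.0], ys=[2.0, 4.0, 6.0])
new_w, sample_count = client.local_update(w=0.0)
```

In this sketch only `new_w` and `sample_count` would be sent to a coordinating server, which is what keeps the raw data on the device.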

Distributed Processing

Edge computing processes data near its source, reducing latency and bandwidth usage by distributing computational tasks across local devices. Federated learning applies the same distributed approach to model training: each device trains on its own data and shares only model updates, which enhances privacy while still using the combined compute of many devices.
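As a rough illustration of processing near the source, the sketch below reduces a window of raw sensor readings to a small summary on the edge node, so that only the summary would need to go upstream. The function name `summarize_at_edge`, the threshold, and the temperature values are hypothetical and chosen purely for illustration.

```python
from statistics import mean
from typing import Dict, List


def summarize_at_edge(readings: List[float], alert_threshold: float) -> Dict[str, object]:
    """Reduce a non-empty window of raw readings to a compact summary.

    Only this summary would be forwarded upstream; the raw window stays
    on the edge node.
    """
    alerts = [r for r in readings if r > alert_threshold]
    return {
        "count": len(readings),
        "mean": mean(readings),
        "max": max(readings),
        "alerts": alerts,
    }


# Hypothetical temperature window collected by one sensor gateway.
window = [21.0, 21.5, 22.0, 35.0, 21.5]
summary = summarize_at_edge(window, alert_threshold=30.0)
```

The bandwidth saving comes from sending four summary fields instead of every reading; how much that matters in practice depends on the window size and sampling rate.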

Model Aggregation

Edge computing processes data locally on devices to minimize latency and improve real-time responsiveness, while federated learning trains a model across decentralized data sources without pooling the data centrally. Model aggregation in federated learning combines locally trained models into a global model through iterative rounds of updates, often using an algorithm such as Federated Averaging. Edge computing on its own has no equivalent step, because its focus is local inference rather than collaborative model updates.
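The aggregation step commonly described for Federated Averaging is a sample-weighted average of the client models. The sketch below shows that step in isolation, assuming each client reports a flat list of weights plus its local sample count; the function name `federated_average` and the example numbers are illustrative, and real systems add client sampling, multiple rounds, and often secure aggregation on top.

```python
from typing import List, Sequence, Tuple


def federated_average(updates: Sequence[Tuple[List[float], int]]) -> List[float]:
    """Weighted average of client parameter vectors.

    Each update is (weights, num_samples); clients with more local samples
    contribute proportionally more to the global model.
    """
    total = sum(n for _, n in updates)
    if total == 0:
        raise ValueError("no samples reported by any client")
    dim = len(updates[0][0])
    global_weights = [0.0] * dim
    for weights, n in updates:
        for i, w in enumerate(weights):
            global_weights[i] += w * n / total
    return global_weights


# Two hypothetical clients: the second holds three times as much data.
client_updates = [
    ([1.0, 2.0], 10),
    ([3.0, 4.0], 30),
]
global_model = federated_average(client_updates)
```

Weighting by sample count means the global model sits closer to the second client's weights here, which reflects its larger share of the overall data.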

