Federated learning trains a shared machine learning model across many devices while keeping each device's data local, which improves privacy. Centralized learning collects all data on a single server or data center, which streamlines processing but raises data privacy and security concerns. Explore the differences in architecture, benefits, and challenges to understand which approach best suits your needs.

Why it is important

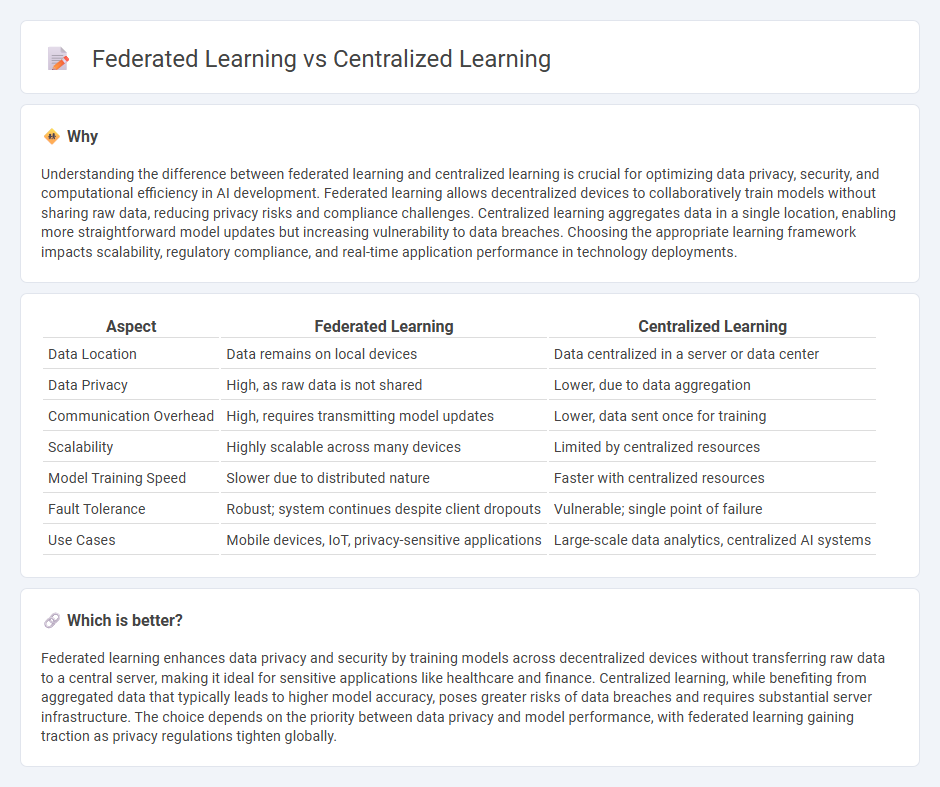

Understanding the difference between federated learning and centralized learning is crucial for optimizing data privacy, security, and computational efficiency in AI development. Federated learning allows decentralized devices to collaboratively train models without sharing raw data, reducing privacy risks and compliance challenges. Centralized learning aggregates data in a single location, enabling more straightforward model updates but increasing vulnerability to data breaches. Choosing the appropriate learning framework impacts scalability, regulatory compliance, and real-time application performance in technology deployments.

Comparison Table

| Aspect | Federated Learning | Centralized Learning |
|---|---|---|
| Data Location | Data remains on local devices | Data centralized in a server or data center |
| Data Privacy | High, as raw data is not shared | Lower, due to data aggregation |
| Communication Overhead | High, requires transmitting model updates | Lower, data sent once for training |
| Scalability | Highly scalable across many devices | Limited by centralized resources |
| Model Training Speed | Slower due to distributed nature | Faster with centralized resources |
| Fault Tolerance | Robust; system continues despite client dropouts | Vulnerable; single point of failure |
| Use Cases | Mobile devices, IoT, privacy-sensitive applications | Large-scale data analytics, centralized AI systems |

Which is better?

Federated learning enhances data privacy and security by training models across decentralized devices without transferring raw data to a central server, making it ideal for sensitive applications like healthcare and finance. Centralized learning, while benefiting from aggregated data that typically leads to higher model accuracy, poses greater risks of data breaches and requires substantial server infrastructure. The choice depends on the priority between data privacy and model performance, with federated learning gaining traction as privacy regulations tighten globally.

Connection

Federated learning and centralized learning share the goal of training machine learning models but differ in data management: federated learning distributes training across decentralized devices so that raw data stays local, while centralized learning aggregates data in a single location for model development. Both approaches rely on optimization algorithms like gradient descent. In federated learning, only model updates leave the device, and secure aggregation techniques can additionally hide each device's individual update from the server, which sees only the combined result. The trade-off is between the privacy protection of federated learning and the efficiency of centralizing data.
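As a rough illustration of the secure aggregation idea, the sketch below uses pairwise masking: each pair of clients shares a random mask that one adds and the other subtracts, so the masks cancel in the sum. Everything here is an assumption made for illustration: the function names are hypothetical, updates are assumed to be already quantized to integers, and a single seeded generator stands in for the key agreement that real protocols run between clients. Client dropouts are not handled.

```python
import random
from typing import List

MODULUS = 2 ** 32  # all arithmetic is done modulo this value (illustrative choice)


def pairwise_masks(num_clients: int, dim: int, seed: int = 0) -> List[List[int]]:
    """Build one mask per client such that all masks sum to zero modulo MODULUS.

    Assumption: a shared seeded generator replaces the per-pair key exchange
    that a real protocol would use.
    """
    rng = random.Random(seed)
    masks = [[0] * dim for _ in range(num_clients)]
    for i in range(num_clients):
        for j in range(i + 1, num_clients):
            shared = [rng.randrange(MODULUS) for _ in range(dim)]
            for k in range(dim):
                # Client i adds the shared mask, client j subtracts it.
                masks[i][k] = (masks[i][k] + shared[k]) % MODULUS
                masks[j][k] = (masks[j][k] - shared[k]) % MODULUS
    return masks


def mask_update(update: List[int], mask: List[int]) -> List[int]:
    """Hide a client's integer update behind its mask before upload."""
    return [(u + m) % MODULUS for u, m in zip(update, mask)]


def aggregate(masked_updates: List[List[int]]) -> List[int]:
    """Sum the masked updates on the server; the pairwise masks cancel out."""
    dim = len(masked_updates[0])
    return [sum(u[k] for u in masked_updates) % MODULUS for k in range(dim)]
```

The server in this sketch only ever receives masked vectors, yet the result of `aggregate` equals the sum of the original updates as long as every client participates.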

Key Terms

Central server, Model aggregation, Data privacy

Centralized learning relies on a central server to collect and aggregate data from all clients, enabling unified model training but raising significant data privacy concerns because raw data must be transmitted and stored in one place. Federated learning keeps training on the clients: the central server aggregates locally trained models rather than raw data, which improves data privacy, usually at some cost in accuracy relative to training on pooled data.

Data privacy

Centralized learning collects data in a single repository, increasing the risk of data breaches and privacy violations. Federated learning processes data locally on devices and shares only model updates, which minimizes data exposure. Because model updates can still reveal information about the underlying data, federated systems often add techniques like differential privacy and secure aggregation.
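One common way differential privacy is applied to model updates is to clip each update to a maximum norm and then add Gaussian noise scaled to that norm. The sketch below shows only that mechanical step. The function names and the `noise_multiplier` parameter are hypothetical, and the code does not compute a privacy budget; the actual guarantee depends on privacy accounting that is not shown here.

```python
import math
import random
from typing import List


def clip_update(update: List[float], max_norm: float) -> List[float]:
    """Scale the update down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(v * v for v in update))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [v * scale for v in update]


def add_gaussian_noise(update: List[float], stddev: float, rng: random.Random) -> List[float]:
    """Add independent zero-mean Gaussian noise to every coordinate."""
    return [v + rng.gauss(0.0, stddev) for v in update]


def privatize(update: List[float], max_norm: float, noise_multiplier: float,
              rng: random.Random) -> List[float]:
    """Clip, then add noise with standard deviation noise_multiplier * max_norm.

    Assumption: noise is added on the client before upload; some systems
    instead add it to the aggregate on the server.
    """
    clipped = clip_update(update, max_norm)
    return add_gaussian_noise(clipped, noise_multiplier * max_norm, rng)
```

Clipping bounds how much any single update can influence the aggregate, and the noise then masks what remains. A larger `noise_multiplier` gives stronger protection but a noisier model.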

Model aggregation

Centralized learning needs no model aggregation step: all raw data is collected on a single server, and one model is trained and updated there, which keeps the system consistent. In federated learning, model aggregation happens on a central server after local models train independently on decentralized data. The server combines the local updates into a new global model and sends it back to the devices for the next round. This preserves data privacy because raw data never leaves the devices, at the cost of repeated communication of model updates.
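The federated aggregation step can be sketched with a weighted average in the style of federated averaging, where each client's model counts in proportion to its number of training examples. This is a minimal sketch under stated assumptions, not a production implementation: the model is a one-dimensional linear regression, every client participates in every round, and the function names, learning rate, and epoch count are illustrative choices.

```python
from typing import List, Sequence, Tuple

Example = Tuple[float, float]  # one (x, y) pair held by a client


def local_update(weights: Sequence[float], data: Sequence[Example],
                 lr: float = 0.1, epochs: int = 5) -> List[float]:
    """Run a few gradient descent steps for y = w*x + b on one client's data."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return [w, b]


def federated_average(client_weights: Sequence[Sequence[float]],
                      client_sizes: Sequence[int]) -> List[float]:
    """Average client models, weighting each by its number of examples."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
            for i in range(dim)]


def run_rounds(clients: Sequence[Sequence[Example]], rounds: int = 50) -> List[float]:
    """Alternate local training and server-side aggregation for several rounds."""
    global_weights = [0.0, 0.0]
    for _ in range(rounds):
        # Only model weights travel to the server; raw examples stay with clients.
        updates = [local_update(global_weights, data) for data in clients]
        sizes = [len(data) for data in clients]
        global_weights = federated_average(updates, sizes)
    return global_weights
```

Each round in this sketch sends the global weights to every client and receives one weight vector back, which is where the repeated communication cost comes from.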

dowidth.com