Tiny Machine Learning (TinyML) deploys lightweight AI models on resource-constrained devices such as microcontrollers, with a focus on energy efficiency and real-time processing on the device itself. Edge AI is broader: it runs more complex data analytics and machine learning directly on devices or local servers, reducing latency and improving privacy compared with cloud-based AI. The sections below compare the two and show where each fits in smart devices and IoT systems.

Why it is important

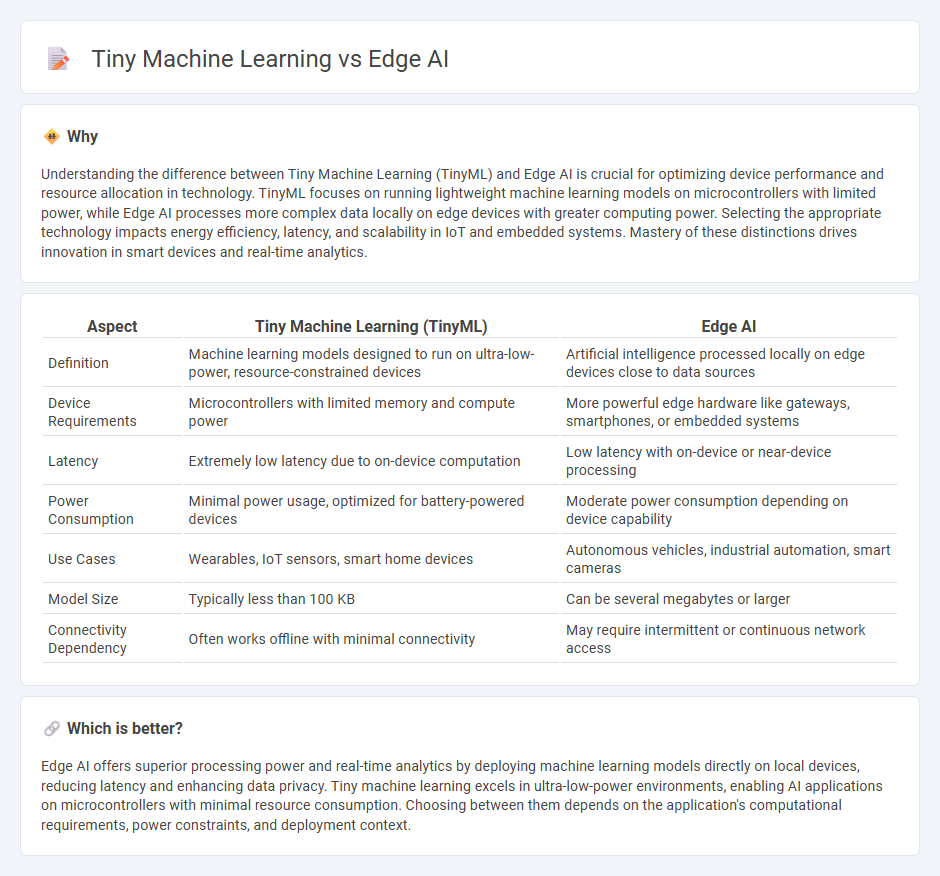

Understanding the difference between Tiny Machine Learning (TinyML) and Edge AI helps match a workload to the right hardware and allocate resources sensibly. TinyML runs lightweight machine learning models on power-limited microcontrollers, while Edge AI processes more complex data locally on edge devices with greater computing power. The choice affects energy efficiency, latency, and scalability in IoT and embedded systems, and it determines which smart-device and real-time analytics features are practical to build.

Comparison Table

| Aspect | Tiny Machine Learning (TinyML) | Edge AI |
|---|---|---|
| Definition | Machine learning models designed to run on ultra-low-power, resource-constrained devices | Artificial intelligence processed locally on edge devices close to data sources |
| Device Requirements | Microcontrollers with limited memory and compute power | More powerful edge hardware such as gateways, smartphones, or embedded computers |
| Latency | Very low for small models; computation stays on the device with no network round trip | Low; processing happens on or near the device |
| Power Consumption | Minimal, often in the milliwatt range or below; suited to battery-powered devices | Moderate, depending on device capability |
| Use Cases | Wearables, IoT sensors, smart home devices | Autonomous vehicles, industrial automation, smart cameras |
| Model Size | Typically less than 100 KB | Can be several megabytes or larger |
| Connectivity Dependency | Often works offline with minimal connectivity | May require intermittent or continuous network access |
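
The model-size row can be sanity-checked with simple arithmetic: the storage for a model's weights is roughly its parameter count times the bytes per weight. The sketch below assumes weights dominate the footprint (it ignores activations held in RAM, runtime code, and metadata), and the 50,000-parameter model is a hypothetical example, not a figure from this article.

```python
# Rough storage for a model's weights: parameter count x bytes per weight.
# Assumption: weights dominate; activations (RAM), runtime code and metadata are ignored.

BYTES_PER_WEIGHT = {"float32": 4, "float16": 2, "int8": 1}


def weight_footprint_bytes(num_params: int, dtype: str) -> int:
    """Return the bytes needed to store num_params weights of the given dtype."""
    return num_params * BYTES_PER_WEIGHT[dtype]


def fits_budget(num_params: int, dtype: str, budget_bytes: int) -> bool:
    """True if the weights alone fit within budget_bytes."""
    return weight_footprint_bytes(num_params, dtype) <= budget_bytes


if __name__ == "__main__":
    params = 50_000       # hypothetical model size
    budget = 100 * 1024   # a 100 KiB budget, matching the table's rough figure
    for dtype in ("float32", "int8"):
        size = weight_footprint_bytes(params, dtype)
        print(f"{dtype}: {size} bytes, fits: {fits_budget(params, dtype, budget)}")
```

With these inputs, float32 weights need 200,000 bytes and exceed a 100 KiB (102,400-byte) budget, while int8 weights need 50,000 bytes and fit, which is one reason TinyML workflows lean on quantization.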

Which is better?

Neither is strictly better. Edge AI runs on more capable hardware, so it supports larger models and richer real-time analytics while still keeping data local, which reduces latency and improves data privacy. TinyML excels in ultra-low-power environments, bringing AI to microcontrollers with minimal resource consumption. The right choice depends on the application's computational requirements, power budget, and deployment context.

Connection

TinyML can be viewed as the most resource-constrained form of Edge AI: it deploys lightweight models directly on edge devices so that data is processed in real time without relying on cloud connectivity. Edge AI applications use these compact models to run inference locally, reducing latency and improving privacy. Together they support efficient, scalable IoT applications and smart-device features.

Key Terms

Edge Computing

Edge computing moves processing from centralized cloud servers to devices near the data source, such as sensors and smartphones, which reduces latency and bandwidth usage. Edge AI applies this model to AI workloads, and TinyML pushes it furthest by running lightweight machine learning models directly on microcontrollers within edge devices, with an emphasis on ultra-low power consumption and real-time inference.
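
A back-of-the-envelope comparison shows why local processing saves bandwidth. The sketch assumes a hypothetical 16 kHz, 16-bit mono microphone and a device that uploads 200 detection events of 16 bytes each per day instead of the raw audio; all numbers are illustrative, not measurements.

```python
# Compare upstream data volume: streaming raw sensor data to the cloud vs.
# sending only on-device inference results. All figures are hypothetical.

SECONDS_PER_DAY = 24 * 60 * 60


def raw_stream_bytes_per_day(sample_rate_hz: int, bytes_per_sample: int, channels: int = 1) -> int:
    """Bytes per day if every raw sample is transmitted."""
    return sample_rate_hz * bytes_per_sample * channels * SECONDS_PER_DAY


def event_bytes_per_day(events_per_day: int, bytes_per_event: int) -> int:
    """Bytes per day if only inference results (events) are transmitted."""
    return events_per_day * bytes_per_event


if __name__ == "__main__":
    raw = raw_stream_bytes_per_day(sample_rate_hz=16_000, bytes_per_sample=2)
    local = event_bytes_per_day(events_per_day=200, bytes_per_event=16)
    print(f"raw stream: {raw / 1e6:.1f} MB/day")
    print(f"events only: {local / 1e3:.1f} kB/day")
    print(f"reduction: {raw // local}x")
```

With these assumed inputs, streaming raw audio would upload about 2.76 GB per day, compared with 3.2 kB for the events alone.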

Model Compression

Edge AI relies on model compression techniques such as pruning, quantization, and knowledge distillation to adapt deep learning models to resource-constrained devices, which speeds up inference and reduces memory footprint. TinyML targets ultra-low-power microcontrollers specifically, compressing models to fit within kilobytes of memory while keeping accuracy loss small, often through aggressive quantization (for example, to 8-bit integers) and algorithmic simplifications.
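
Two of the techniques named above, magnitude pruning and 8-bit quantization, can be sketched in a few lines of pure Python. This is a simplified illustration, not the algorithm of any particular toolchain: it zeroes a fraction of the smallest-magnitude weights, then applies per-tensor affine int8 quantization, where each weight is stored as an integer `q` and recovered as `scale * (q - zero_point)`. The function names are hypothetical.

```python
# Sketch of two compression steps on a flat list of float weights:
# 1) magnitude pruning: zero out the smallest-|w| fraction of weights;
# 2) affine int8 quantization: w is approximated by scale * (q - zero_point).
# Real toolchains work per-tensor or per-channel on arrays; this is illustrative only.

from typing import List, Tuple


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero the `sparsity` fraction of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)  # number of weights to remove
    if k == 0:
        return list(weights)
    # Indices sorted by magnitude; the first k are the ones to drop.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


def quantize_int8(weights: List[float]) -> Tuple[List[int], float, int]:
    """Map floats to int8 codes using one scale and zero point for the tensor."""
    w_min = min(min(weights), 0.0)  # include 0 so it is exactly representable
    w_max = max(max(weights), 0.0)
    qmin, qmax = -128, 127
    scale = (w_max - w_min) / (qmax - qmin) or 1.0  # avoid divide-by-zero for all-zero input
    zero_point = max(qmin, min(qmax, round(qmin - w_min / scale)))
    q = [max(qmin, min(qmax, round(w / scale) + zero_point)) for w in weights]
    return q, scale, zero_point


def dequantize(q: List[int], scale: float, zero_point: int) -> List[float]:
    """Recover approximate float weights from int8 codes."""
    return [scale * (v - zero_point) for v in q]


if __name__ == "__main__":
    w = [0.9, -0.05, 0.4, -0.7, 0.01, 0.3]
    pruned = magnitude_prune(w, sparsity=0.5)
    q, scale, zp = quantize_int8(pruned)
    print(pruned, q, dequantize(q, scale, zp))
```

Pruning leaves zeros that sparse storage formats or structured pruning can exploit, and quantization cuts storage per weight from 4 bytes to 1, at the cost of a rounding error of at most about half a scale step per weight.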

On-device Inference

On-device inference means running a trained model where the data is captured instead of sending that data to a server. Edge AI does this on edge devices, reducing latency and improving data privacy compared with cloud-based solutions. TinyML specializes in deploying compact, low-power models on microcontrollers and other resource-constrained devices, making on-device inference possible where computational capacity is very limited.
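
On a microcontroller, on-device inference for a quantized model typically runs in integer arithmetic: int8 inputs and weights are multiplied and accumulated in 32-bit integers, and the result is rescaled back to int8. The sketch below shows one fully connected layer under stated assumptions: symmetric int8 weights (zero point 0), an asymmetric int8 input and output, and a floating-point rescale factor standing in for the fixed-point multiplier that production kernels generally use. The function and parameter names are hypothetical.

```python
# Sketch of an integer-only fully connected layer for a quantized model.
# Assumptions (not taken from any specific library): symmetric int8 weights
# (zero point 0), asymmetric int8 input/output, int32-style accumulation, and a
# float rescale factor in place of a fixed-point multiplier.

from typing import List


def dense_int8(
    x_q: List[int], x_zp: int, x_scale: float,
    w_q: List[List[int]], w_scale: float,
    bias_q: List[int],
    y_zp: int, y_scale: float,
) -> List[int]:
    """Compute y = W x + b on integer values, then requantize to int8."""
    multiplier = x_scale * w_scale / y_scale  # maps accumulator units to output units
    out = []
    for row, b in zip(w_q, bias_q):
        acc = b  # bias is pre-quantized with scale x_scale * w_scale
        for xv, wv in zip(x_q, row):
            acc += (xv - x_zp) * wv  # integer multiply-accumulate
        y = round(acc * multiplier) + y_zp
        out.append(max(-128, min(127, y)))  # saturate to the int8 range
    return out
```

For example, `dense_int8([2, 4], 0, 0.5, [[1, -1], [3, 2]], 0.25, [0, 4], 0, 0.125)` returns `[-2, 18]`, which dequantizes to -0.25 and 2.25, matching the same layer computed in floating point.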

dowidth.com
