Edge inference runs models locally on devices such as IoT sensors and smartphones, which reduces latency and bandwidth use because less data travels to central servers. Fog inference extends this idea by distributing computation across the local network, including gateways and edge servers, which adds processing power and scalability for more complex applications. The sections below compare the two approaches and their trade-offs.

Why it is important

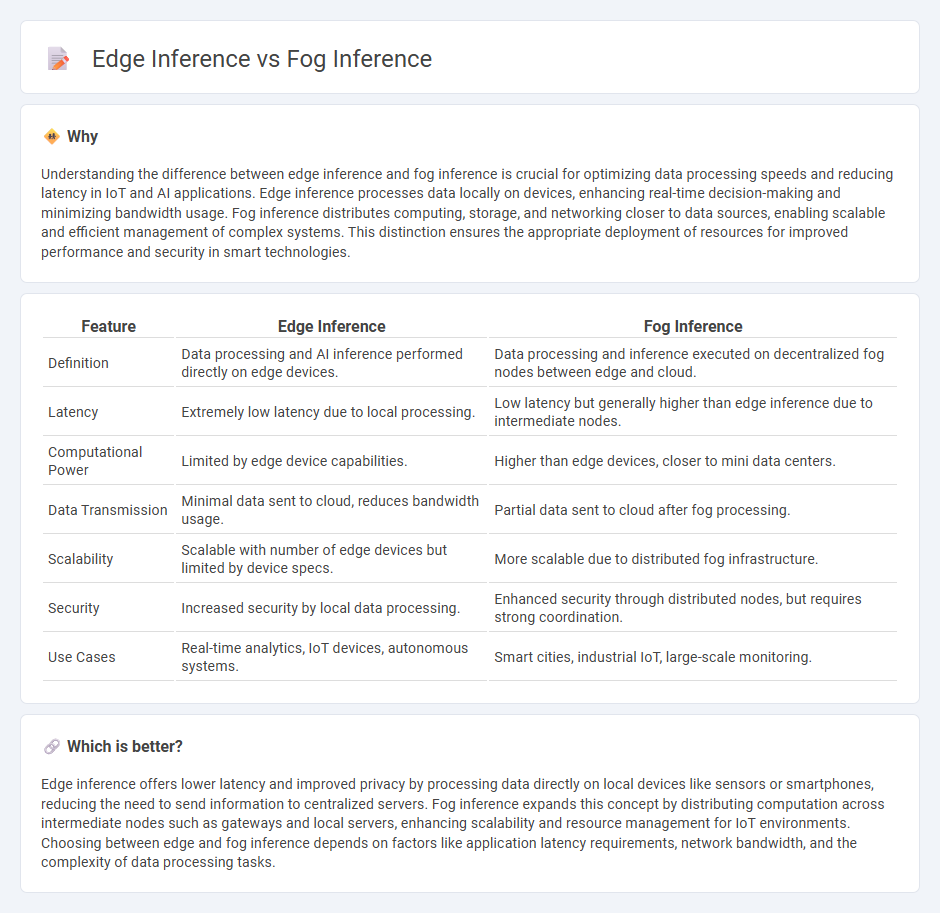

The difference between edge inference and fog inference determines where computation runs, and therefore how quickly an IoT or AI application can respond. Edge inference processes data on the device itself, which supports real-time decisions and keeps bandwidth use low. Fog inference places computing, storage, and networking on nodes close to the data sources, which makes larger and more complex systems easier to scale and manage. Knowing which model fits a workload helps allocate hardware and network resources where they improve performance and security the most.

Comparison Table

| Feature | Edge Inference | Fog Inference |
|---|---|---|
| Definition | Data processing and AI inference performed directly on edge devices. | Data processing and inference executed on decentralized fog nodes between edge and cloud. |
| Latency | Extremely low latency due to local processing. | Low latency but generally higher than edge inference due to intermediate nodes. |
| Computational Power | Limited by edge device capabilities. | Higher than edge devices, closer to mini data centers. |
| Data Transmission | Minimal data sent to cloud, reduces bandwidth usage. | Partial data sent to cloud after fog processing. |
| Scalability | Scalable with number of edge devices but limited by device specs. | More scalable due to distributed fog infrastructure. |
| Security | Increased security by local data processing. | Enhanced security through distributed nodes, but requires strong coordination. |
| Use Cases | Real-time analytics, IoT devices, autonomous systems. | Smart cities, industrial IoT, large-scale monitoring. |

Which is better?

Edge inference offers lower latency and improved privacy by processing data directly on local devices like sensors or smartphones, reducing the need to send information to centralized servers. Fog inference expands this concept by distributing computation across intermediate nodes such as gateways and local servers, enhancing scalability and resource management for IoT environments. Choosing between edge and fog inference depends on factors like application latency requirements, network bandwidth, and the complexity of data processing tasks.
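The decision factors above (latency requirement, network bandwidth, and model complexity) can be turned into a rough placement check. The following is a minimal sketch, not a definitive method: the `Workload` and `Tier` classes, the `choose_tier` helper, and every number in `TIERS` are hypothetical values chosen for illustration, and real latency depends on hardware and network conditions that this model ignores.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Workload:
    latency_budget_ms: float  # maximum acceptable response time
    model_size_mb: float      # memory the model needs
    input_kb: float           # payload sent per request


@dataclass
class Tier:
    name: str
    memory_mb: float    # memory available for the model
    compute_ms: float   # assumed inference time on this tier
    rtt_ms: float       # network round trip from the device (0 on-device)
    uplink_mbps: float  # bandwidth from the device (0 means no network hop)


def estimated_latency_ms(w: Workload, t: Tier) -> float:
    """Round trip + payload transfer + compute, in milliseconds."""
    # KB * 8 = kilobits; 1 Mbps moves 1 kilobit per millisecond.
    transfer_ms = (w.input_kb * 8) / t.uplink_mbps if t.uplink_mbps > 0 else 0.0
    return t.rtt_ms + transfer_ms + t.compute_ms


def choose_tier(w: Workload, tiers: List[Tier]) -> Optional[str]:
    """Return the nearest tier that fits the model and meets the budget."""
    for t in tiers:
        fits = w.model_size_mb <= t.memory_mb
        if fits and estimated_latency_ms(w, t) <= w.latency_budget_ms:
            return t.name
    return None


# Illustrative numbers only (assumptions, not measurements).
TIERS = [
    Tier("edge", memory_mb=256, compute_ms=40, rtt_ms=0, uplink_mbps=0),
    Tier("fog", memory_mb=8192, compute_ms=10, rtt_ms=5, uplink_mbps=100),
    Tier("cloud", memory_mb=65536, compute_ms=5, rtt_ms=60, uplink_mbps=20),
]

print(choose_tier(Workload(50, 50, 100), TIERS))    # edge
print(choose_tier(Workload(50, 2000, 100), TIERS))  # fog
```

In this sketch a small model stays on the device, while a model too large for the device moves to the fog node, which still meets the 50 ms budget because the local network hop is short.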

Connection

Edge inference processes data on devices near the data source, which reduces latency and bandwidth use by relying less on the cloud. Fog inference extends this by distributing computation across intermediate nodes that sit between edge devices and central cloud servers, which improves scalability and supports real-time analytics across many devices. The two approaches are complementary: in IoT networks and smart environments, edge devices can handle immediate decisions while fog nodes combine results from many devices.
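One way to picture how the two layers work together is a two-stage pipeline: each device filters its own readings, and a fog node summarizes what the devices forward before anything reaches the cloud. The sketch below is a simplified illustration under stated assumptions: `edge_filter` stands in for an on-device model with a fixed threshold, and `fog_summarize`, the device names, and the readings are all hypothetical.

```python
from typing import Dict, List


def edge_filter(readings: List[float], threshold: float = 75.0) -> List[float]:
    """Runs on the device: keep only readings flagged as anomalous.

    A fixed threshold stands in for a real on-device model (assumption).
    """
    return [r for r in readings if r > threshold]


def fog_summarize(flagged: Dict[str, List[float]]) -> Dict[str, Dict[str, float]]:
    """Runs on a fog node: reduce each device's flagged readings to a summary."""
    summary = {}
    for device_id, values in flagged.items():
        if values:  # devices with nothing flagged send nothing upstream
            summary[device_id] = {"count": len(values), "max": max(values)}
    return summary


# Hypothetical raw sensor data held on two devices.
raw = {"sensor-a": [70.0, 80.0, 90.0], "sensor-b": [60.0, 65.0]}

flagged = {device: edge_filter(values) for device, values in raw.items()}
summary = fog_summarize(flagged)

print(flagged)  # {'sensor-a': [80.0, 90.0], 'sensor-b': []}
print(summary)  # {'sensor-a': {'count': 2, 'max': 90.0}}
```

Five raw readings shrink to two flagged values at the edge and to a single summary record at the fog node, which is the bandwidth reduction that both approaches aim for.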

Key Terms

Latency

Fog inference processes data on nodes within the local area network, so it has lower latency than cloud-based inference because data travels a shorter distance. Edge inference lowers latency further by running the computation on the IoT device itself, with no network hop at all, which enables the real-time responses that applications such as autonomous vehicles and industrial automation depend on. The gap between the two determines which applications each approach suits.

Data Proximity

Fog inference processes data on intermediate fog nodes that sit near the data source, which reduces latency and bandwidth use compared with centralized cloud computing. Edge inference runs AI models directly on the device that produces the data, which supports real-time decisions, minimizes data transmission, and improves privacy because raw data does not need to leave the device. The closer the computation is to the data, the lower the latency, but the less computing power is typically available.

Scalability

Fog inference distributes computational tasks across multiple gateways and local servers, which improves scalability because capacity can be added at the fog layer and less data is sent to central servers. Edge inference processes data directly on edge devices, so it scales with the number of devices but each workload is limited by the hardware of a single device, in exchange for faster real-time analytics.
