Edge inference processes data locally on devices like smartphones or IoT sensors, reducing latency and enhancing privacy by minimizing data transmission to central servers. Distributed inference spreads computational tasks across multiple nodes in a network, optimizing resource usage and enabling scalability for complex machine learning models. Explore the differences and applications of edge versus distributed inference to optimize your AI deployment strategy.

Why it is important

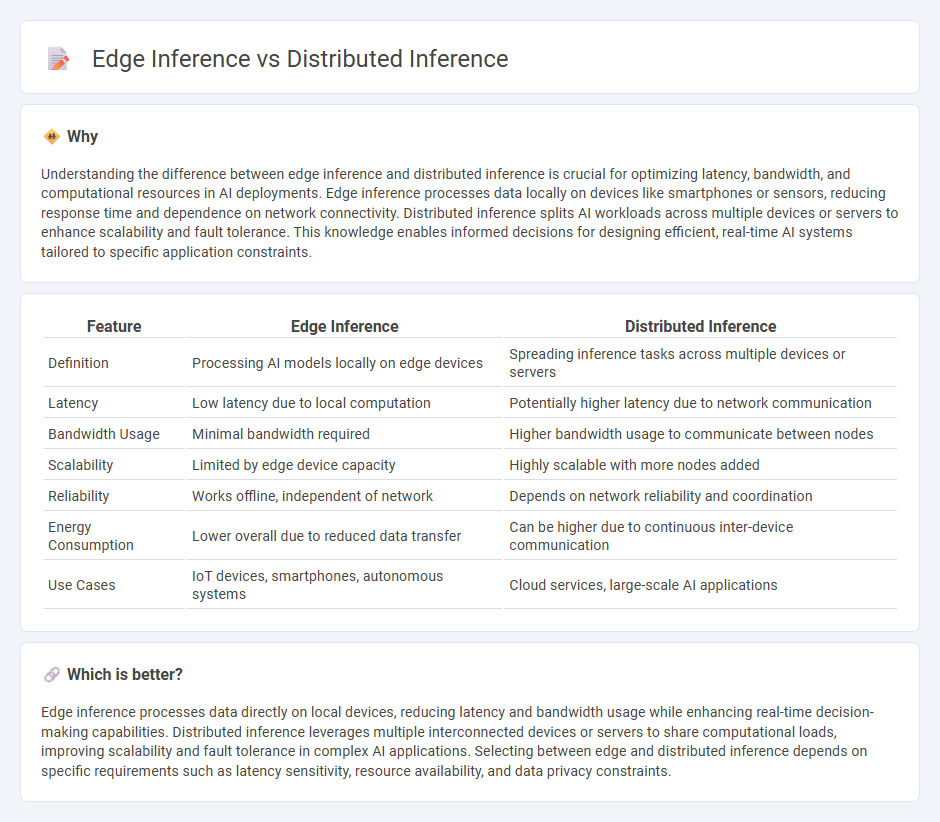

Understanding the difference between edge inference and distributed inference is crucial for optimizing latency, bandwidth, and computational resources in AI deployments. Edge inference processes data locally on devices like smartphones or sensors, reducing response time and dependence on network connectivity. Distributed inference splits AI workloads across multiple devices or servers to enhance scalability and fault tolerance. This knowledge enables informed decisions for designing efficient, real-time AI systems tailored to specific application constraints.

Comparison Table

| Feature | Edge Inference | Distributed Inference |

|---|---|---|

| Definition | Processing AI models locally on edge devices | Spreading inference tasks across multiple devices or servers |

| Latency | Low latency due to local computation | Potentially higher latency due to network communication |

| Bandwidth Usage | Minimal bandwidth required | Higher bandwidth usage to communicate between nodes |

| Scalability | Limited by edge device capacity | Highly scalable with more nodes added |

| Reliability | Works offline, independent of network | Depends on network reliability and coordination |

| Energy Consumption | Lower overall due to reduced data transfer | Can be higher due to continuous inter-device communication |

| Use Cases | IoT devices, smartphones, autonomous systems | Cloud services, large-scale AI applications |

Which is better?

Edge inference processes data directly on local devices, reducing latency and bandwidth usage while enhancing real-time decision-making capabilities. Distributed inference leverages multiple interconnected devices or servers to share computational loads, improving scalability and fault tolerance in complex AI applications. Selecting between edge and distributed inference depends on specific requirements such as latency sensitivity, resource availability, and data privacy constraints.

Connection

Edge inference and distributed inference both optimize AI model processing by decentralizing computation closer to data sources, reducing latency and bandwidth usage. Edge inference executes AI tasks directly on local devices such as IoT sensors and smartphones, enabling real-time decision-making. Distributed inference extends this concept by sharing computational loads across multiple edge nodes and cloud servers, enhancing scalability and reliability in complex AI deployments.

Key Terms

Latency

Distributed inference reduces latency by leveraging multiple nodes to process data simultaneously, minimizing the distance between data sources and processing units. Edge inference further enhances latency performance by performing computations directly on edge devices, eliminating the need for data transmission to centralized servers. Explore the nuanced trade-offs between distributed and edge inference latency to optimize your AI deployment strategy.

Bandwidth

Distributed inference reduces bandwidth consumption by splitting the model across multiple devices, allowing partial data processing locally and transmitting only intermediate results. Edge inference processes data entirely on local devices, minimizing upstream data transmission and significantly lowering bandwidth usage compared to cloud-based inference. Explore how optimizing bandwidth in inference architectures can improve system efficiency and responsiveness.

Compute location

Distributed inference leverages multiple devices or servers across a network to process data, optimizing workload by dividing computation among cloud, edge, and on-premises resources. Edge inference performs data processing locally on edge devices, reducing latency and bandwidth usage by minimizing data transmission to central servers. Explore the differences in compute location and performance benefits to determine the best approach for your application needs.

Source and External Links

Distributed Inference with Accelerate - This guide provides methods for distributing inference processes across multiple GPUs by splitting data or model layers.

Deep Dive into llm-d and Distributed Inference - The llm-d project introduces a Kubernetes-native approach to distributed inference, focusing on disaggregated processing and cache-aware scheduling.

Distributed Inference for Large Language Models - This overview discusses how distributed inference enables efficient predictions across multiple GPUs by leveraging techniques like pipeline parallelism and tensor parallelism.

dowidth.com

dowidth.com