Neuromorphic chips mimic the neural architecture of the human brain, enabling energy-efficient processing and adaptive learning for AI applications. Tensor Processing Units (TPUs) are accelerators designed by Google to speed up large-scale machine learning workloads, particularly the matrix multiplications at the core of deep neural networks. This article compares how the two technologies approach AI and machine learning performance.

Why it is important

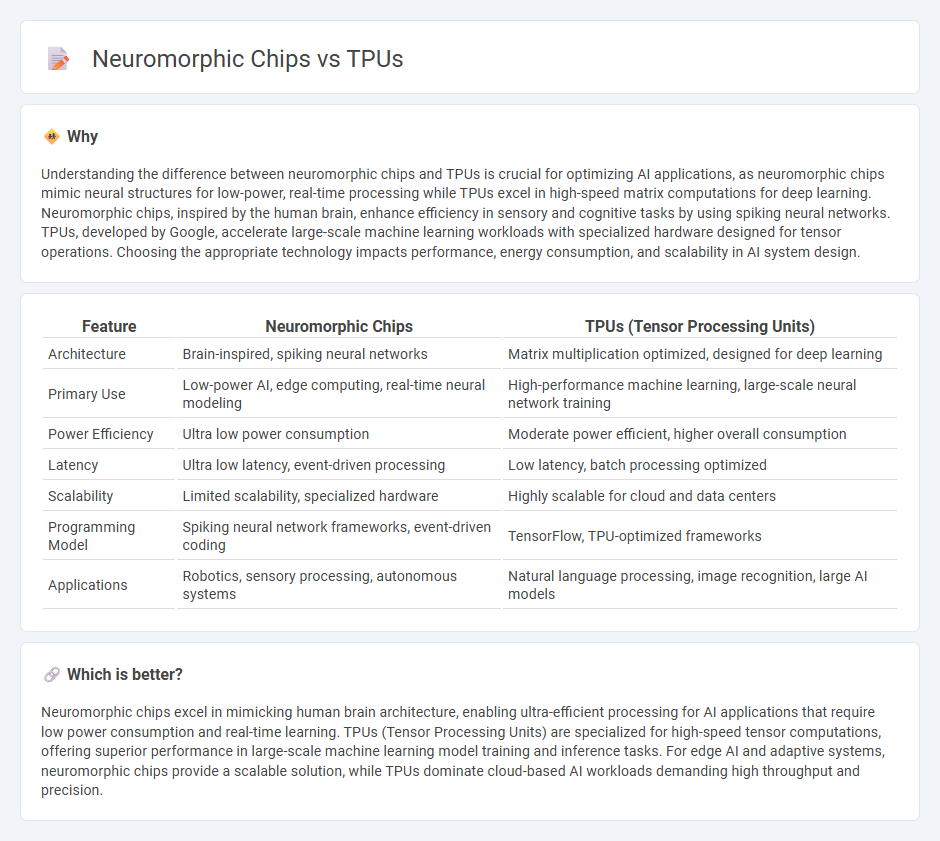

Understanding the difference between neuromorphic chips and TPUs is important when designing AI systems. Neuromorphic chips, inspired by the human brain, use spiking neural networks for low-power, real-time processing, which suits sensory and cognitive tasks. TPUs, developed by Google, use hardware specialized for tensor operations to accelerate large-scale deep learning workloads through high-speed matrix computations. Choosing the appropriate technology affects performance, energy consumption, and scalability in AI system design.

Comparison Table

| Feature | Neuromorphic Chips | TPUs (Tensor Processing Units) |
|---|---|---|
| Architecture | Brain-inspired, spiking neural networks | Optimized for matrix multiplication in deep learning |
| Primary Use | Low-power AI, edge computing, real-time neural modeling | High-performance machine learning, large-scale neural network training |
| Power Efficiency | Ultra-low power consumption | Efficient per operation, but higher total power draw |
| Latency | Ultra-low latency, event-driven processing | Low latency, optimized for batched processing |
| Scalability | Limited scalability, specialized hardware | Highly scalable in cloud and data-center deployments |
| Programming Model | Spiking neural network frameworks, event-driven coding | TensorFlow and other TPU-optimized frameworks |
| Applications | Robotics, sensory processing, autonomous systems | Natural language processing, image recognition, large AI models |

Which is better?

Neither is better in general; the right choice depends on the workload. Neuromorphic chips mimic the brain's architecture, enabling highly efficient processing for AI applications that require low power consumption and real-time learning. TPUs are specialized for high-speed tensor computations and perform best in large-scale model training and inference. For edge AI and adaptive systems, neuromorphic chips offer an energy-efficient option, while TPUs dominate cloud-based AI workloads that demand high throughput.

Connection

Neuromorphic chips and Tensor Processing Units (TPUs) are both specialized hardware designed to accelerate artificial intelligence tasks by mimicking brain-like processes and optimizing deep learning computations, respectively. Neuromorphic chips leverage spiking neural networks for energy-efficient, real-time processing, while TPUs focus on matrix multiplications essential for training and inference in neural networks. Their connection lies in enhancing AI performance through hardware tailored to specific neural computation paradigms, enabling faster and more efficient machine learning applications.

Key Terms

Parallel Processing

TPUs excel at parallel processing through matrix multiplication units tailored to deep learning, which deliver high throughput and low latency on neural network computations. Neuromorphic chips mimic the brain's architecture: spiking neurons process information asynchronously and in parallel, consuming less energy in tasks such as pattern recognition and sensory data processing. The two therefore parallelize differently: TPUs apply the same dense operation to large blocks of data at once, while neuromorphic chips do work only where and when spikes occur.
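The difference between dense and event-driven processing can be illustrated by counting work. The sketch below is a simplified software model, assuming binary spikes and an illustrative input sparsity of about 5%; the functions `dense_layer` and `event_driven_layer` are hypothetical and do not correspond to any chip's API. It runs sequentially, so it shows how much work each style performs, not how real hardware schedules that work in parallel.

```python
import numpy as np

def dense_layer(weights: np.ndarray, inputs: np.ndarray) -> tuple[np.ndarray, int]:
    """Dense matrix-vector product: every weight is used on every step."""
    ops = weights.shape[0] * weights.shape[1]  # multiply-accumulates, independent of input values
    return weights @ inputs, ops

def event_driven_layer(weights: np.ndarray, spikes: np.ndarray) -> tuple[np.ndarray, int]:
    """Event-driven accumulation: only columns of inputs that spiked are touched."""
    out = np.zeros(weights.shape[0])
    ops = 0
    for j in np.flatnonzero(spikes):  # iterate only over input events
        out += weights[:, j]          # binary spike: add the column, no multiply needed
        ops += weights.shape[0]
    return out, ops

rng = np.random.default_rng(0)
W = rng.normal(size=(64, 256))
# Assumed sparsity: roughly 5% of the 256 inputs spike in this time step.
spikes = (rng.random(256) < 0.05).astype(float)

dense_out, dense_ops = dense_layer(W, spikes)
event_out, event_ops = event_driven_layer(W, spikes)
print(np.allclose(dense_out, event_out), dense_ops, event_ops)
```

Both functions produce the same output, but the event-driven version touches only the weight columns of inputs that spiked, which is the intuition behind the energy savings claimed for neuromorphic hardware on sparse activity.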

Spiking Neural Networks

TPUs deliver high computational throughput for conventional deep learning models built on dense matrix operations, whereas neuromorphic chips are designed to run Spiking Neural Networks (SNNs), which mimic biological neurons through event-driven, sparse, spike-based communication. SNNs on neuromorphic hardware offer energy-efficient, low-latency processing, making them suitable for real-time perception and adaptive learning. TPUs remain the stronger fit for dense deep learning, while neuromorphic chips target brain-inspired computing.
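The leaky integrate-and-fire (LIF) model is a widely used simplification of a spiking neuron and gives a feel for the event-driven behavior described above. This is a minimal sketch, assuming forward-Euler integration and illustrative parameter values (`tau`, `v_threshold`, and so on) that are not taken from any particular neuromorphic chip; `simulate_lif` is a hypothetical helper.

```python
import numpy as np

def simulate_lif(input_current: np.ndarray, dt: float = 1.0, tau: float = 20.0,
                 v_rest: float = 0.0, v_threshold: float = 1.0,
                 v_reset: float = 0.0) -> tuple[np.ndarray, list[int]]:
    """Simulate one leaky integrate-and-fire neuron with forward-Euler steps.

    Dynamics: tau * dV/dt = -(V - v_rest) + I(t).
    A spike is emitted (and V is reset) whenever V reaches v_threshold.
    """
    v = v_rest
    trace = np.empty(len(input_current))
    spike_times: list[int] = []
    for t, i_t in enumerate(input_current):
        v += dt / tau * (-(v - v_rest) + i_t)  # leak toward rest, integrate the input
        if v >= v_threshold:
            spike_times.append(t)              # the spike event is all that is sent downstream
            v = v_reset                        # reset the membrane potential after firing
        trace[t] = v
    return trace, spike_times

# Constant drive above threshold yields regular spikes; zero drive yields none.
_, spikes_strong = simulate_lif(np.full(200, 1.5))
_, spikes_none = simulate_lif(np.zeros(200))
print(len(spikes_strong), len(spikes_none))
```

The neuron stays silent without input and emits discrete spikes under sustained drive; in an SNN, only those spike events would be communicated to other neurons.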

Matrix Multiplication

TPUs perform matrix multiplication on large systolic arrays, grids of multiply-accumulate units through which operands flow in lockstep, providing the high-throughput, low-latency numerical operations that deep learning requires. Neuromorphic chips instead use spiking neurons and event-driven computation, prioritizing energy efficiency over raw matrix-multiplication speed. Both approaches are shaping the design of future AI hardware.
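To make the systolic-array idea concrete, here is a cycle-level simulation in NumPy. It is a sketch under stated assumptions: it uses an output-stationary dataflow (each processing element keeps one output sum) for simplicity, whereas the dataflow of real TPU matrix units may differ, and `systolic_matmul` is a hypothetical name. The skewed feeding ensures that `A[i, s]` and `B[s, j]` meet in processing element `(i, j)` on the same cycle.

```python
import numpy as np

def systolic_matmul(A: np.ndarray, B: np.ndarray) -> np.ndarray:
    """Cycle-level sketch of an output-stationary systolic array computing A @ B.

    PE (i, j) keeps a running sum for C[i, j]. Values of A move one PE to the
    right per cycle and values of B move one PE down per cycle. Row i of A and
    column j of B are delayed by i and j cycles so matching operands meet.
    """
    n, k = A.shape
    k2, m = B.shape
    assert k == k2, "inner dimensions must match"
    acc = np.zeros((n, m))    # one stationary accumulator per PE
    a_reg = np.zeros((n, m))  # A operand currently held by each PE
    b_reg = np.zeros((n, m))  # B operand currently held by each PE
    for t in range(k + n + m - 2):
        # Shift operands one PE right (A) and one PE down (B).
        a_reg[:, 1:] = a_reg[:, :-1].copy()
        b_reg[1:, :] = b_reg[:-1, :].copy()
        # Feed skewed inputs at the left and top edges; zeros outside the window.
        for i in range(n):
            s = t - i
            a_reg[i, 0] = A[i, s] if 0 <= s < k else 0.0
        for j in range(m):
            s = t - j
            b_reg[0, j] = B[s, j] if 0 <= s < k else 0.0
        # Every PE multiplies its two operands and accumulates, all in the same cycle.
        acc += a_reg * b_reg
    return acc

rng = np.random.default_rng(1)
A = rng.integers(-5, 5, size=(3, 4)).astype(float)
B = rng.integers(-5, 5, size=(4, 2)).astype(float)
print(np.allclose(systolic_matmul(A, B), A @ B))  # True
```

Each cycle, every processing element performs one multiply-accumulate in parallel, so an n-by-k times k-by-m product finishes in k + n + m - 2 cycles rather than n*k*m sequential steps.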

Source and External Links

Tensor Processing Unit (TPU) - A TPU is a Google-designed AI accelerator ASIC that speeds up neural network machine learning by efficiently handling high volumes of low-precision computations, and is more specialized for AI workloads than general-purpose CPUs and GPUs.

Tensor Processing Unit - TPUs are Google's custom AI chips. Successive generations moved from 8-bit integer matrix multiplication in the first TPU to bfloat16 floating-point operations in the second, enabling both training and inference of neural networks at petaflop scale.

Understanding TPUs vs GPUs in AI: A Comprehensive Guide - TPUs are Google's custom ASICs engineered specifically for the tensor operations at the heart of deep learning. They offer fast training and inference by excelling at the matrix multiplications central to AI workloads, unlike GPUs, which were originally designed for graphics processing.
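The sources mention 8-bit integer arithmetic in the first TPU and bfloat16 in the second generation. The sketch below approximates both numerically in NumPy, assuming symmetric per-tensor int8 quantization with int32 accumulation and round-to-nearest-even conversion to bfloat16; these are common choices but are assumptions here, and the helpers `to_bfloat16`, `quantize_int8`, and `int8_matmul` are hypothetical, not a TPU or TensorFlow API.

```python
import numpy as np

def to_bfloat16(x: np.ndarray) -> np.ndarray:
    """Round float32 values to bfloat16 precision (result stored as float32).

    bfloat16 keeps float32's sign bit and 8 exponent bits but only the top 7
    mantissa bits, so the low 16 bits are rounded away (round-to-nearest-even).
    NaN handling is ignored in this sketch.
    """
    bits = np.asarray(x, dtype=np.float32).view(np.uint32)
    lsb = (bits >> 16) & 1                         # ties round toward the even neighbour
    rounded = (bits + 0x7FFF + lsb) & 0xFFFF0000   # add rounding bias, clear the low half
    return rounded.astype(np.uint32).view(np.float32)

def quantize_int8(x: np.ndarray) -> tuple[np.ndarray, float]:
    """Symmetric per-tensor int8 quantization: x is approximately scale * q."""
    max_abs = float(np.max(np.abs(x)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(x / scale), -127, 127).astype(np.int8)
    return q, scale

def int8_matmul(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Multiply int8-quantized operands with int32 accumulation, then rescale."""
    qa, sa = quantize_int8(a)
    qb, sb = quantize_int8(b)
    acc = qa.astype(np.int32) @ qb.astype(np.int32)  # wide accumulator avoids overflow
    return acc.astype(np.float32) * (sa * sb)

rng = np.random.default_rng(2)
a = rng.normal(size=(8, 16)).astype(np.float32)
b = rng.normal(size=(16, 4)).astype(np.float32)
exact = a @ b
print(np.max(np.abs(to_bfloat16(a) @ to_bfloat16(b) - exact)))  # bfloat16 error
print(np.max(np.abs(int8_matmul(a, b) - exact)))                # int8 error
```

bfloat16 keeps float32's exponent range while reducing precision, whereas int8 needs an explicit scale factor; both trade a small numerical error for cheaper multipliers and less memory traffic.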

dowidth.com