Edge intelligence integrates artificial intelligence directly into edge devices, enabling real-time data processing with minimal latency for applications like autonomous vehicles and smart cities. Fog computing extends cloud capabilities by distributing storage, computing, and networking closer to data sources, which improves scalability and reduces bandwidth usage across IoT environments.

Why it is important

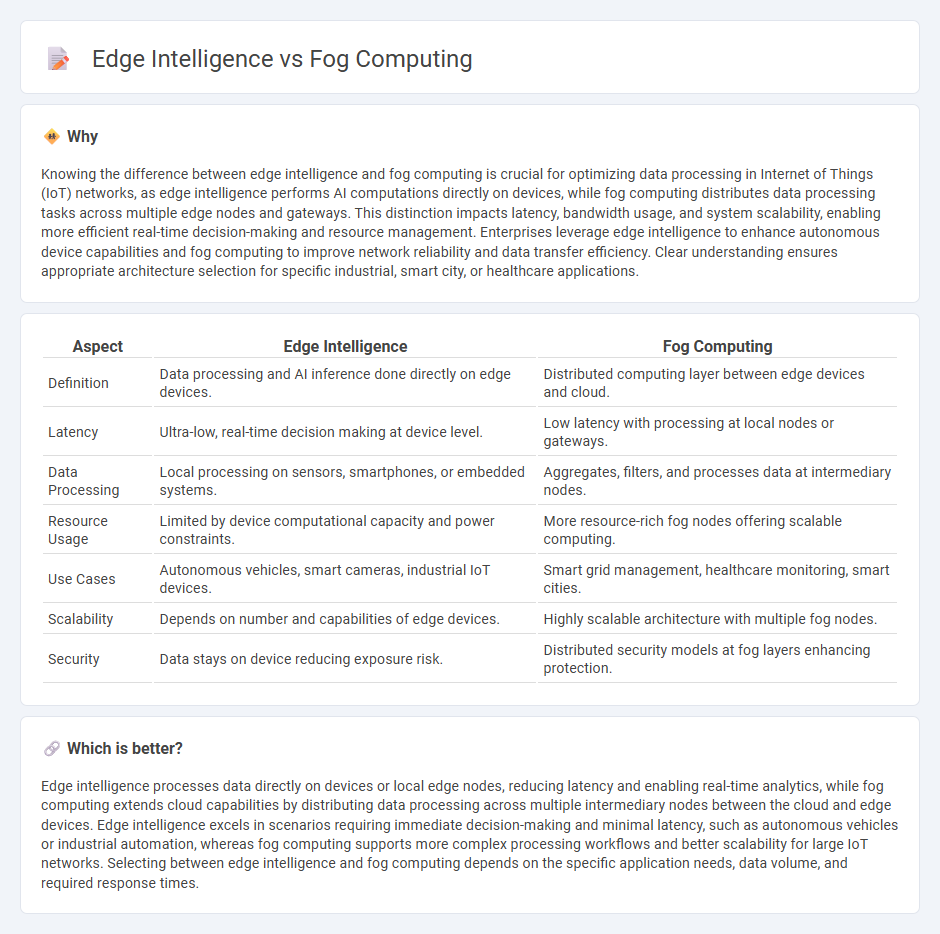

The difference between edge intelligence and fog computing matters when optimizing data processing in Internet of Things (IoT) networks: edge intelligence performs AI computations directly on devices, while fog computing distributes data processing tasks across multiple edge nodes and gateways. This distinction affects latency, bandwidth usage, and system scalability, and therefore how efficiently a system can make real-time decisions and manage resources. Enterprises use edge intelligence to enhance autonomous device capabilities and fog computing to improve network reliability and data transfer efficiency. Understanding the distinction helps in selecting an appropriate architecture for specific industrial, smart city, or healthcare applications.

Comparison Table

| Aspect | Edge Intelligence | Fog Computing |
|---|---|---|
| Definition | Data processing and AI inference done directly on edge devices. | Distributed computing layer between edge devices and cloud. |
| Latency | Ultra-low, real-time decision making at device level. | Low latency with processing at local nodes or gateways. |
| Data Processing | Local processing on sensors, smartphones, or embedded systems. | Aggregates, filters, and processes data at intermediary nodes. |
| Resource Usage | Limited by device computational capacity and power constraints. | More resource-rich fog nodes offering scalable computing. |
| Use Cases | Autonomous vehicles, smart cameras, industrial IoT devices. | Smart grid management, healthcare monitoring, smart cities. |
| Scalability | Depends on number and capabilities of edge devices. | Highly scalable architecture with multiple fog nodes. |
| Security | Data stays on device reducing exposure risk. | Distributed security models at fog layers enhancing protection. |

Which is better?

Edge intelligence processes data directly on devices or local edge nodes, reducing latency and enabling real-time analytics, while fog computing extends cloud capabilities by distributing data processing across multiple intermediary nodes between the cloud and edge devices. Edge intelligence excels in scenarios requiring immediate decision-making and minimal latency, such as autonomous vehicles or industrial automation, whereas fog computing supports more complex processing workflows and better scalability for large IoT networks. Selecting between edge intelligence and fog computing depends on the specific application needs, data volume, and required response times.
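As a rough illustration of how those selection criteria might be weighed, the sketch below encodes them as a small rule-based helper. The `Workload` fields, the function name `suggest_tier`, and every threshold (device memory, fog round-trip time, device count) are hypothetical values chosen for the example, not figures from any standard or product.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    latency_budget_ms: float  # maximum acceptable response time
    model_size_mb: float      # memory footprint of the processing or AI model
    device_count: int         # number of devices whose data must be combined


def suggest_tier(w: Workload,
                 device_memory_mb: float = 64.0,   # assumed device capacity
                 fog_round_trip_ms: float = 20.0,  # assumed device-to-fog round trip
                 many_devices: int = 100) -> str:  # assumed scale threshold
    """Return 'edge', 'fog', or 'neither' using illustrative rules only."""
    fits_on_device = w.model_size_mb <= device_memory_mb

    # A budget tighter than the fog round trip can only be met on the device.
    if w.latency_budget_ms < fog_round_trip_ms:
        return "edge" if fits_on_device else "neither"

    # The model is too large for the device, so it has to run on a fog node.
    if not fits_on_device:
        return "fog"

    # Either tier works; prefer fog when data from many devices is combined.
    return "fog" if w.device_count >= many_devices else "edge"


print(suggest_tier(Workload(latency_budget_ms=5, model_size_mb=10, device_count=1)))
print(suggest_tier(Workload(latency_budget_ms=100, model_size_mb=500, device_count=1)))
```

With these assumed thresholds, the first call suggests edge intelligence (tight deadline, small model) and the second suggests fog computing (relaxed deadline, model too large for the device). Real deployments would replace the constants with measured values.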

Connection

Edge intelligence leverages local data processing capabilities at the network edge, reducing latency and enhancing real-time decision-making. Fog computing extends cloud services closer to edge devices by providing a distributed computing infrastructure between the cloud and edge locations. The two are complementary: edge devices can handle immediate, on-device decisions while fog nodes aggregate and process data from many devices, which together enables efficient data analysis, resource optimization, and seamless communication in Internet of Things (IoT) and smart applications.

Key Terms

Data Processing Location

Fog computing distributes data processing across local devices and intermediate nodes between the cloud and edge, optimizing latency and bandwidth by handling data closer to its source. Edge intelligence integrates AI capabilities directly within edge devices, enabling real-time analytics and decision-making without relying heavily on centralized cloud resources.

Latency Optimization

Fog computing moves data processing closer to IoT devices, reducing latency by handling tasks at intermediate nodes within the network. Edge intelligence integrates AI capabilities directly into edge devices, enabling real-time data analysis and immediate decision-making. Latency optimization in fog computing relies on multi-layered architectures that shorten the distance data travels, whereas edge intelligence relies on on-device processing to eliminate transmission delays altogether.
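The trade-off between travel distance and on-device processing can be made concrete with a simple additive model: response latency is the round-trip network delay plus the compute time at whichever tier does the work. The sketch below is only a back-of-the-envelope model; the function name and all hop counts and millisecond values are invented for illustration.

```python
def response_latency_ms(hops: int, per_hop_ms: float, compute_ms: float) -> float:
    """Round-trip network delay (there and back over `hops`) plus compute time."""
    return 2 * hops * per_hop_ms + compute_ms


# Hypothetical numbers: a constrained device computes slowly but sends nothing,
# a fog node is one hop away, and the cloud is several slower hops away.
on_device = response_latency_ms(hops=0, per_hop_ms=0.0, compute_ms=10.0)
fog = response_latency_ms(hops=1, per_hop_ms=2.0, compute_ms=8.0)
cloud = response_latency_ms(hops=6, per_hop_ms=10.0, compute_ms=2.0)

print(on_device, fog, cloud)  # 10.0 12.0 122.0
```

Under these assumed values the on-device path is fastest because it has no transmission term, and fog is far ahead of the cloud because it has fewer, shorter hops. The ordering between edge and fog is sensitive to the compute term: a sufficiently slow device could make the fog path the faster one.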

Distributed Architecture

Fog computing distributes data processing tasks across a hierarchical architecture extending from the cloud to local devices, enhancing scalability and reducing latency by processing data closer to the source. Edge intelligence integrates AI capabilities directly at or near data generation points, enabling real-time decision-making with minimal dependency on centralized cloud resources.
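One way to picture the middle layer of such a hierarchy is a fog node that filters and summarizes raw device readings before anything is forwarded upstream. The following is a minimal sketch under assumed conditions: the function `fog_aggregate`, the valid range, and the sample readings are all hypothetical.

```python
from statistics import mean


def fog_aggregate(readings, low, high):
    """Drop out-of-range readings and summarize the rest at a fog node.

    Returns None when no valid reading remains, so nothing is sent upstream.
    """
    valid = [r for r in readings if low <= r <= high]
    if not valid:
        return None
    return {"count": len(valid), "mean": mean(valid), "max": max(valid)}


# Hypothetical temperature readings; -999.0 stands in for a sensor fault.
raw = [21.5, 22.0, -999.0, 23.5, 22.0]
summary = fog_aggregate(raw, low=-40.0, high=85.0)
print(summary)  # {'count': 4, 'mean': 22.25, 'max': 23.5}
```

Here five raw values collapse into one three-field summary, which is the kind of bandwidth reduction the fog layer is meant to provide. An edge-intelligence design would instead run the filtering or inference on the sensor itself.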

