Differentiable programming optimizes models built from differentiable components. Gradients of the objective with respect to the parameters are computed by automatic differentiation (backpropagation, in neural networks) and used for efficient parameter tuning. Black-box optimization treats the model as an opaque system and optimizes it using only evaluations of the objective, with no gradient information, through methods such as evolutionary algorithms or Bayesian optimization. Comparing the two clarifies where each applies in modern AI and machine learning.

Why it is important

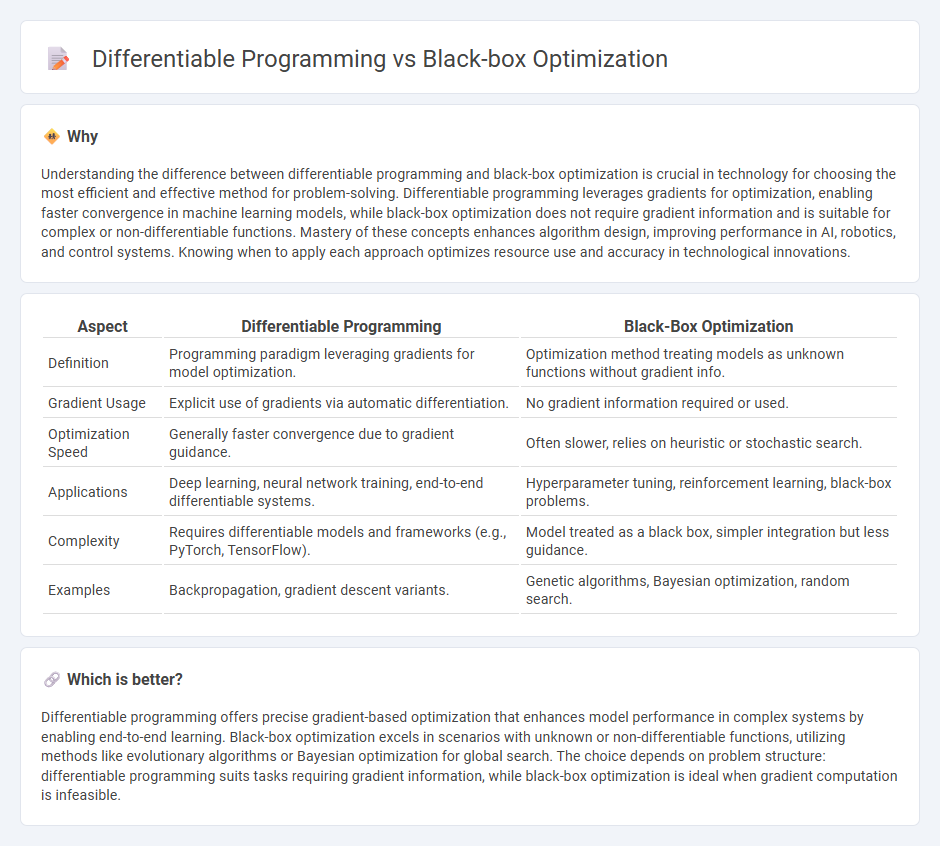

Knowing the difference between differentiable programming and black-box optimization helps in choosing an efficient method for a given problem. Differentiable programming uses gradients to guide optimization, which usually gives faster convergence when training machine learning models. Black-box optimization needs no gradient information, so it applies to objectives that are non-differentiable, noisy, or available only as a simulation or experiment. Understanding both improves algorithm design in AI, robotics, and control systems, and knowing when to use each helps balance computational cost against solution quality.

Comparison Table

| Aspect | Differentiable Programming | Black-Box Optimization |
|---|---|---|
| Definition | Programming paradigm leveraging gradients for model optimization. | Optimization method treating models as unknown functions without gradient info. |
| Gradient Usage | Explicit use of gradients via automatic differentiation. | No gradient information required or used. |
| Optimization Speed | Generally faster convergence due to gradient guidance. | Often slower, relies on heuristic or stochastic search. |
| Applications | Deep learning, neural network training, end-to-end differentiable systems. | Hyperparameter tuning, reinforcement learning, black-box problems. |
| Complexity | Requires differentiable models and frameworks (e.g., PyTorch, TensorFlow). | Model treated as a black box, simpler integration but less guidance. |
| Examples | Backpropagation, gradient descent variants. | Genetic algorithms, Bayesian optimization, random search. |
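
To make the "Gradient Usage" and "Optimization Speed" rows concrete, here is a minimal Python sketch, not a benchmark. The quadratic objective `f`, its hand-derived gradient `grad_f`, the step size, and the 50-step budget are all illustrative assumptions. Gradient descent follows the gradient toward the minimum, while random search, the simplest black-box method in the "Examples" row, only samples function values.

```python
import random

# Illustrative objective (hypothetical): a smooth bowl with minimum 0 at (3, -2).
def f(x, y):
    return (x - 3.0) ** 2 + (y + 2.0) ** 2

# Gradient derived by hand; a differentiable-programming framework would
# obtain it automatically via automatic differentiation.
def grad_f(x, y):
    return 2.0 * (x - 3.0), 2.0 * (y + 2.0)

def gradient_descent(steps=50, lr=0.1):
    """Gradient-based: each step moves against the gradient."""
    x, y = 0.0, 0.0
    for _ in range(steps):
        gx, gy = grad_f(x, y)
        x, y = x - lr * gx, y - lr * gy
    return f(x, y)

def random_search(steps=50, low=-10.0, high=10.0, seed=0):
    """Black-box: sample points uniformly and keep the best value seen."""
    rng = random.Random(seed)
    best = float("inf")
    for _ in range(steps):
        best = min(best, f(rng.uniform(low, high), rng.uniform(low, high)))
    return best

print(f"gradient descent: {gradient_descent():.2e}")
print(f"random search:    {random_search():.2e}")
```

On this smooth problem each gradient step shrinks the distance to the minimum by a constant factor (0.8 with these settings), so gradient descent ends many orders of magnitude closer than random search with the same number of steps. The gap narrows or reverses when gradients are unavailable or uninformative.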

Which is better?

Neither approach is better in general. Differentiable programming offers precise gradient-based optimization and enables end-to-end learning, so every differentiable component of a system can be trained jointly against one objective. Black-box optimization excels when the objective is unknown, non-differentiable, or only available through evaluations, and methods such as evolutionary algorithms or Bayesian optimization can search the space more globally. The choice depends on problem structure: differentiable programming suits objectives whose gradients exist and are cheap to compute, while black-box optimization is the better fit when gradient computation is infeasible or the gradients carry no useful information.
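
A minimal Python sketch of the case where gradients carry no useful information, assuming a hypothetical piecewise-constant objective `staircase`. A central finite difference (`numerical_grad`, also illustrative) stands in for the gradient; because the function is flat between its jumps, an exact derivative would be zero as well, so gradient descent never moves while random search still reaches the minimum.

```python
import math
import random

def staircase(x):
    """Hypothetical piecewise-constant objective, minimal (0) for x in [7, 8).
    Its derivative is 0 everywhere except at the jumps, where it is undefined."""
    return abs(math.floor(x) - 7)

def numerical_grad(func, x, h=1e-6):
    """Central finite-difference estimate of the derivative."""
    return (func(x + h) - func(x - h)) / (2 * h)

def gradient_descent(func, x0, lr=0.5, steps=100):
    x = x0
    for _ in range(steps):
        x -= lr * numerical_grad(func, x)  # zero gradient on a flat step leaves x unchanged
    return x

def random_search(func, low, high, n=200, seed=0):
    """Black-box search: only compares function values."""
    rng = random.Random(seed)
    return min((rng.uniform(low, high) for _ in range(n)), key=func)

x_gd = gradient_descent(staircase, x0=0.5)
x_rs = random_search(staircase, low=0.0, high=20.0)
print(x_gd, staircase(x_gd))  # prints: 0.5 7
print(x_rs, staircase(x_rs))
```

Any gradient, whether exact or estimated by finite differences, is zero on the flat steps, so only a method that compares function values at distant points makes progress.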

Connection

Differentiable programming optimizes models by exploiting the differentiability of their functions, while black-box optimization handles problems where gradient information is unavailable or unreliable. The two combine naturally: gradient-based training fits the learnable, differentiable components of a model, and black-box optimization tunes the non-differentiable parameters around it, such as discrete architecture choices or training hyperparameters. This hybrid strategy can improve performance in complex systems such as neural architecture search, robotics, and reinforcement learning.
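
The hybrid strategy can be sketched in a few lines of Python under illustrative assumptions: a hypothetical five-point dataset with `y = 2x`, a one-parameter linear model, and hand-picked search ranges. The inner loop trains the weight `w` by gradient descent (the differentiable part); the outer loop runs random search over the learning rate and the integer step count, in which the validation loss has no gradient.

```python
import random

# Hypothetical toy dataset: y = 2x exactly, so the ideal weight is w = 2.
XS = [0.0, 1.0, 2.0, 3.0, 4.0]
YS = [2.0 * x for x in XS]


def train(lr, steps):
    """Inner, differentiable loop: fit y ~ w * x by gradient descent on mean squared error."""
    w = 0.0
    for _ in range(steps):
        grad = sum(2.0 * (w * x - y) * x for x, y in zip(XS, YS)) / len(XS)
        w -= lr * grad
    return w


def validation_loss(w):
    # Reuses the training data for brevity; a real setup would hold out separate data.
    return sum((w * x - y) ** 2 for x, y in zip(XS, YS)) / len(XS)


def tune(trials=40, seed=0):
    """Outer, black-box loop: random search over (learning rate, step count).
    The step count is an integer, so the outer objective has no gradient in it."""
    rng = random.Random(seed)
    best = None
    for _ in range(trials):
        lr = 10 ** rng.uniform(-4, -0.5)  # log-uniform learning rate (assumed range)
        steps = rng.randint(1, 50)        # integer hyperparameter (assumed range)
        loss = validation_loss(train(lr, steps))
        if best is None or loss < best[0]:
            best = (loss, lr, steps)
    return best


best_loss, best_lr, best_steps = tune()
print(f"loss={best_loss:.3g} lr={best_lr:.3g} steps={best_steps}")
```

With this dataset the gradient is `12 * (w - 2)`, so learning rates above 1/6 make the inner loop diverge; the outer search learns to avoid that region without ever needing a gradient of the validation loss.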

Key Terms

Gradient-free methods

Black-box optimization methods suit problems where gradient information is unavailable or unreliable. They use heuristic or stochastic search, such as genetic algorithms, Bayesian optimization, and evolution strategies, to optimize complex objective functions from evaluations alone. Differentiable programming instead relies on gradient-based techniques, computing derivatives exact to floating-point precision through automatic differentiation. This makes training efficient, but it breaks down when the function is non-differentiable or its gradients are too noisy to be useful. Gradient-free methods therefore complement differentiable programming across different kinds of problems.
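
As one concrete gradient-free method, here is a minimal (1+1) evolution strategy in Python. The objective `l1_objective` and its `TARGET` are hypothetical, chosen because the function has a kink exactly at its minimum, where the gradient is undefined. The mutation factor 1.5 and the iteration budget are illustrative, and the step-size update is a simple form of Rechenberg's 1/5 success rule.

```python
import random

TARGET = [1.0, -2.0, 0.5]  # hypothetical optimum


def l1_objective(x):
    """Non-smooth objective: L1 distance to TARGET, with a kink at the minimum."""
    return sum(abs(xi - ti) for xi, ti in zip(x, TARGET))


def one_plus_one_es(func, x0, sigma=1.0, iters=2000, seed=0):
    """(1+1) evolution strategy: perturb the parent with Gaussian noise and keep the
    child only if it is at least as good. Uses only function values, never gradients."""
    rng = random.Random(seed)
    parent, f_parent = list(x0), func(x0)
    for _ in range(iters):
        child = [xi + sigma * rng.gauss(0.0, 1.0) for xi in parent]
        f_child = func(child)
        if f_child <= f_parent:
            parent, f_parent = child, f_child
            sigma *= 1.5           # success: widen the search
        else:
            sigma *= 1.5 ** -0.25  # failure: narrow it; sigma is stable at about 1 success in 5
    return parent, f_parent


best_x, best_f = one_plus_one_es(l1_objective, [0.0, 0.0, 0.0])
print(best_x, best_f)
```

The multiplicative factors are balanced so that sigma stays constant when exactly one mutation in five succeeds: more successes grow the step, fewer shrink it, which lets the search zoom in on the kinked minimum without any derivative.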

Automatic differentiation

Black-box optimization works without explicit gradient information, relying on methods like evolutionary algorithms or Bayesian optimization, whereas differentiable programming uses automatic differentiation (AD) to compute precise gradients for gradient-based optimization. AD evaluates derivatives by applying the chain rule systematically to each elementary operation in a program. Unlike finite differences, it introduces no truncation error, and unlike symbolic differentiation, it works directly on code with loops and branches. Reverse-mode AD (backpropagation) computes the gradient with respect to all parameters at a small constant multiple of the cost of one function evaluation, which is what makes training large models practical.
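
The chain-rule mechanics can be seen in a minimal forward-mode AD sketch using dual numbers. This is an illustration only: it supports just `+`, `*`, and a hypothetical `sin` helper, whereas deep learning frameworks typically rely on reverse mode (backpropagation) and cover far more operations. Each `Dual` value carries a function value and its derivative, and every operation updates both using its own local derivative rule.

```python
import math
from dataclasses import dataclass


@dataclass
class Dual:
    """Forward-mode AD value: `val` is f(x), `dot` is df/dx carried alongside it."""
    val: float
    dot: float = 0.0

    def __add__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)
        # Sum rule: (u + v)' = u' + v'
        return Dual(self.val + other.val, self.dot + other.dot)

    __radd__ = __add__

    def __mul__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)
        # Product rule: (uv)' = u'v + uv'
        return Dual(self.val * other.val, self.dot * other.val + self.val * other.dot)

    __rmul__ = __mul__


def sin(u):
    # Chain rule: sin(u)' = cos(u) * u'
    return Dual(math.sin(u.val), math.cos(u.val) * u.dot)


def derivative(f, x):
    """Seed dx/dx = 1 and read the derivative off the output's tangent."""
    return f(Dual(x, 1.0)).dot


def g(x):
    return x * x * sin(x) + 3 * x  # by hand: g'(x) = 2x sin(x) + x^2 cos(x) + 3


x0 = 1.3
print(derivative(g, x0))
print(2 * x0 * math.sin(x0) + x0 ** 2 * math.cos(x0) + 3)
```

The AD result and the hand-derived formula agree to floating-point rounding, and unlike finite differences there is no step size to tune.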

Objective function

Black-box optimization treats the objective function as an unknown or opaque system, relying solely on input-output evaluations without requiring gradient information, which makes it suitable for complex or noisy problems. Differentiable programming instead exploits the structure of the objective function, expressed as a composition of differentiable operations, to compute gradients, enabling efficient optimization through gradient descent and backpropagation. This difference determines what an optimizer learns from each query: a single function value in the black-box case, versus a value plus a full gradient in the differentiable case.
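
A minimal Python sketch of the black-box view, assuming a hypothetical `BlackBox` wrapper that exposes only function values and counts calls, and a smooth test objective chosen for illustration. The optimizer is compass (coordinate) search, one simple member of the direct-search family: it polls points a fixed step away along each axis and halves the step when none improves, so it never sees a gradient.

```python
class BlackBox:
    """Hypothetical wrapper: callers can query values only, and every call is counted
    because black-box evaluations are often expensive (simulations, experiments)."""

    def __init__(self, func):
        self._func = func
        self.evaluations = 0

    def __call__(self, x):
        self.evaluations += 1
        return self._func(x)


def compass_search(f, x0, step=1.0, tol=1e-6, max_iters=100_000):
    """Direct search using only f(x): poll +/- step along each coordinate,
    accept the first improving point, and halve the step when nothing improves."""
    x, fx = list(x0), f(x0)
    for _ in range(max_iters):
        if step <= tol:
            break
        improved = False
        for i in range(len(x)):
            for sign in (1.0, -1.0):
                trial = list(x)
                trial[i] += sign * step
                f_trial = f(trial)
                if f_trial < fx:
                    x, fx, improved = trial, f_trial, True
                    break
            if improved:
                break
        if not improved:
            step /= 2.0
    return x, fx


objective = BlackBox(lambda v: (v[0] - 1.0) ** 2 + 10.0 * (v[1] + 0.5) ** 2)
x_best, f_best = compass_search(objective, [0.0, 0.0])
print(x_best, f_best, objective.evaluations)  # prints: [1.0, -0.5] 0.0 86
```

Most of the 86 evaluations are spent confirming that no smaller step improves the point, which illustrates why black-box methods are judged by how many objective evaluations they need.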

Source and External Links

Black-Box Optimization and Its Applications - Black-box optimization (BBO) is a data-driven, derivative-free method for minimizing objective functions where only input-output pairs are available, often used when gradient information is inaccessible or expensive, and it includes approaches like direct search and sequential model-based optimization to efficiently find global optima in constrained or unconstrained problems.

A Tutorial on Black-Box Optimization - LIX - Black-box optimization deals with problems where the objective function is hidden inside legacy code, simulations, or experiments making evaluation costly, and solutions focus on sampling and search strategies that balance local intensification with global exploration under limited evaluation budgets.

Derivative-free optimization - Wikipedia - Derivative-free optimization, also known as black-box optimization, refers to optimization methods that operate without derivative information, useful for noisy, expensive, or non-smooth objective functions, and includes popular algorithms such as Bayesian optimization, evolutionary strategies, genetic algorithms, Nelder-Mead, and particle swarm optimization.

dowidth.com