Differential privacy protects individuals by adding calibrated random noise to query results (or, in local variants, to the data itself), so the output of an analysis reveals almost nothing about whether any single record is present. L-diversity strengthens data anonymization by requiring that, within every group of records sharing the same quasi-identifiers, the sensitive attribute has at least L well-represented distinct values, which limits the risk of attribute disclosure. Explore the differences and applications of differential privacy and L-diversity for safeguarding sensitive information in data science.

Why it is important

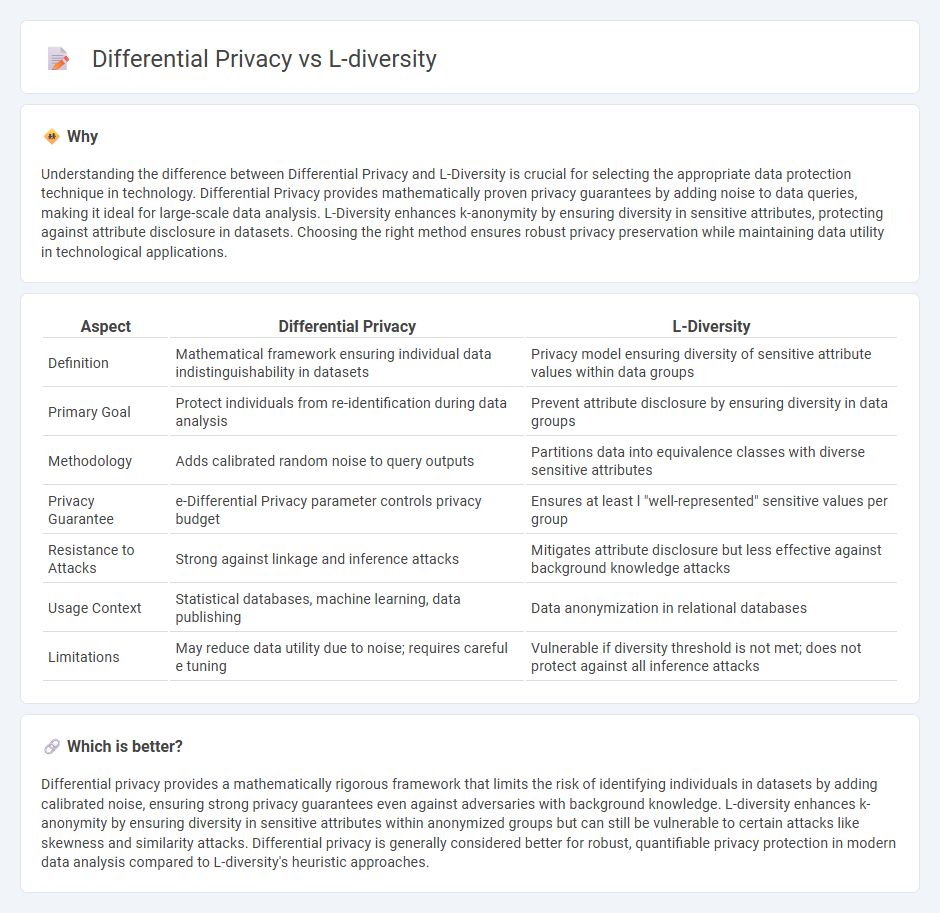

Understanding the difference between differential privacy and L-diversity is crucial for choosing an appropriate data protection technique. Differential privacy provides mathematically provable privacy guarantees by adding noise to query results. This makes it well suited to large-scale data analysis, where the noise is small relative to aggregate statistics. L-diversity extends k-anonymity by requiring diversity in sensitive attributes, which protects released datasets against attribute disclosure. Choosing the right method preserves privacy while keeping the data useful.

Comparison Table

| Aspect | Differential Privacy | L-Diversity |
|---|---|---|
| Definition | Mathematical framework guaranteeing that an analysis's output is nearly unchanged whether or not any one individual's record is included | Privacy model requiring diverse sensitive attribute values within each group of records that share quasi-identifiers |
| Primary Goal | Protect individuals from re-identification during data analysis | Prevent attribute disclosure by ensuring diversity in each group |
| Methodology | Adds calibrated random noise to query outputs | Partitions data into equivalence classes (typically via generalization or suppression) with diverse sensitive attributes |
| Privacy Guarantee | The parameter ε (the privacy budget) bounds how much any one record can change the output distribution; smaller ε means stronger privacy | At least l "well-represented" sensitive values per equivalence class |
| Resistance to Attacks | Strong against linkage and inference attacks | Mitigates attribute disclosure but less effective against background knowledge attacks |
| Usage Context | Statistical databases, machine learning, data publishing | Data anonymization in relational databases |
| Limitations | May reduce data utility due to noise; requires careful tuning of ε | No protection if the diversity threshold is not met; vulnerable to skewness and similarity attacks |
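For readers who want the formal statements behind the "Privacy Guarantee" row, the standard definitions can be written as below. The notation is ours, not the article's: M is a randomized mechanism, D and D' are neighbouring datasets that differ in one record, S is any set of outputs, E ranges over equivalence classes, and p(E, v) is the fraction of records in E with sensitive value v. Entropy l-diversity is shown as one common way to make "well-represented" precise.

```latex
% epsilon-differential privacy: for all neighbouring datasets D, D'
% (differing in a single record) and all output sets S
\Pr[\mathcal{M}(D) \in S] \le e^{\varepsilon} \, \Pr[\mathcal{M}(D') \in S]

% Distinct l-diversity: every equivalence class E contains at least
% l distinct sensitive values
\forall E:\ \lvert \{\, v : p(E, v) > 0 \,\} \rvert \ge \ell

% Entropy l-diversity: the sensitive values in every class are spread
% out enough that their entropy reaches log(l)
\forall E:\ -\sum_{v} p(E, v) \log p(E, v) \ge \log \ell
```

A smaller ε forces the output distributions on D and D' closer together, which means stronger privacy. Under basic sequential composition, running an ε₁-DP mechanism and an ε₂-DP mechanism on the same data yields (ε₁ + ε₂)-differential privacy, which is why ε is treated as a budget.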

Which is better?

Differential privacy provides a mathematically rigorous framework that limits the risk of identifying individuals by adding calibrated noise, and its guarantee holds regardless of the background knowledge an adversary has. L-diversity improves on k-anonymity by requiring diverse sensitive values within each anonymized group. It remains vulnerable to skewness attacks, which arise when a group's distribution of sensitive values differs sharply from the overall population's. It is also vulnerable to similarity attacks, which arise when a group's distinct values are semantically close, such as several stomach-related diagnoses. Differential privacy is therefore generally preferred when robust, quantifiable privacy protection is required, whereas L-diversity relies on a heuristic condition imposed on the released table.
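A similarity attack can be illustrated with a small, hypothetical released table. The quasi-identifier labels and diagnoses are made up. The `category` mapping is likewise an assumption, standing in for an attacker's background knowledge. Every group passes a distinct 3-diversity check. Even so, an attacker who knows a person belongs to the first group still learns that the person has a stomach-related condition.

```python
from collections import defaultdict

# Hypothetical released table: (generalized quasi-identifiers, sensitive value).
released = [
    ("476**, 20-29", "gastric ulcer"),
    ("476**, 20-29", "gastritis"),
    ("476**, 20-29", "stomach cancer"),
    ("4790*, >=40", "flu"),
    ("4790*, >=40", "bronchitis"),
    ("4790*, >=40", "gastritis"),
]

# Hypothetical semantic categories that an attacker might already know.
category = {
    "gastric ulcer": "stomach",
    "gastritis": "stomach",
    "stomach cancer": "stomach",
    "flu": "respiratory",
    "bronchitis": "respiratory",
}

def distinct_values_per_group(rows):
    """Collect the set of distinct sensitive values in each group."""
    groups = defaultdict(set)
    for qi, sensitive in rows:
        groups[qi].add(sensitive)
    return groups

def similarity_leak(rows, categories):
    """Return the groups whose distinct values all fall into one category."""
    leaks = {}
    for qi, values in distinct_values_per_group(rows).items():
        cats = {categories[v] for v in values}
        if len(cats) == 1:
            leaks[qi] = cats.pop()
    return leaks

groups = distinct_values_per_group(released)
print(all(len(v) >= 3 for v in groups.values()))  # True: distinct 3-diverse
print(similarity_leak(released, category))        # {'476**, 20-29': 'stomach'}
```

The second group mixes respiratory and stomach conditions, so it leaks no category. The first group satisfies the same diversity count but reveals the category anyway.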

Connection

Differential privacy and L-diversity share the goal of protecting individual information within datasets, but they work differently. Differential privacy adds controlled noise to query results so that no specific individual's presence can be inferred. L-diversity ensures that sensitive attributes within anonymized groups are diverse enough to reduce the risk of attribute disclosure. Because one constrains the analysis process and the other constrains the released table, the two can complement each other in a data anonymization framework and strengthen protection against inference attacks.

Key Terms

Anonymization

L-diversity enhances anonymization by ensuring that each group of records contains diverse values of the sensitive attribute, reducing the risk of attribute disclosure. Differential privacy protects individual data by adding mathematically calibrated noise, preventing re-identification even when an attacker has auxiliary information. Explore the detailed mechanisms and applications of these anonymization techniques to safeguard sensitive data effectively.
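The article does not specify how groups are formed. One common approach, assumed here, is to generalize quasi-identifiers until records fall into shared equivalence classes, for example by turning exact ages into ranges and ZIP codes into masked prefixes. The sketch below uses hypothetical records and two hypothetical parameters: `width` sets the age bucket size and `keep` sets how many ZIP digits are retained.

```python
from collections import defaultdict

def generalize_age(age, width=10):
    """Map an exact age to a range such as '20-29' (bucket width is an assumption)."""
    low = (age // width) * width
    return f"{low}-{low + width - 1}"

def generalize_zip(zip_code, keep=3):
    """Keep the first `keep` digits of a ZIP code and mask the rest with '*'."""
    return zip_code[:keep] + "*" * (len(zip_code) - keep)

def anonymize(records, keep=3, width=10):
    """Generalize the quasi-identifiers and group diagnoses by equivalence class."""
    classes = defaultdict(list)
    for age, zip_code, diagnosis in records:
        key = (generalize_age(age, width), generalize_zip(zip_code, keep))
        classes[key].append(diagnosis)
    return dict(classes)

# Hypothetical microdata: (age, ZIP code, diagnosis).
records = [
    (23, "47677", "flu"),
    (27, "47602", "diabetes"),
    (25, "47678", "asthma"),
    (41, "47905", "flu"),
    (48, "47909", "cancer"),
    (44, "47906", "diabetes"),
]

for key, diagnoses in anonymize(records).items():
    print(key, diagnoses)
```

With these records, both resulting classes contain three distinct diagnoses. Coarser settings (a larger `width` or a smaller `keep`) produce larger and more diverse classes, at the cost of less precise data.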

Quasi-identifier

L-diversity enhances data anonymization by ensuring that sensitive attributes within each quasi-identifier group exhibit diversity, reducing the risk of attribute disclosure. Differential privacy, on the other hand, injects calibrated noise into query results to obscure individual contributions while maintaining overall data utility, without relying specifically on quasi-identifier grouping. Explore how these privacy models address quasi-identifier challenges for stronger data protection.
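A check for the quasi-identifier grouping described above might look like the following sketch. It assumes the table is a list of dictionaries whose quasi-identifiers have already been generalized. It implements two variants from the l-diversity literature: distinct l-diversity, which requires at least l different values per group, and entropy l-diversity, which is one way to formalize "well-represented". The column names and records are hypothetical.

```python
import math
from collections import Counter, defaultdict

def equivalence_classes(rows, quasi_identifiers, sensitive):
    """Group the sensitive values by each row's tuple of quasi-identifier values."""
    classes = defaultdict(list)
    for row in rows:
        key = tuple(row[q] for q in quasi_identifiers)
        classes[key].append(row[sensitive])
    return classes

def is_distinct_l_diverse(rows, quasi_identifiers, sensitive, ell):
    """True if every equivalence class has at least `ell` distinct sensitive values."""
    classes = equivalence_classes(rows, quasi_identifiers, sensitive)
    return all(len(set(values)) >= ell for values in classes.values())

def is_entropy_l_diverse(rows, quasi_identifiers, sensitive, ell, tol=1e-12):
    """True if every class's sensitive-value entropy is at least log(ell).
    `tol` absorbs floating-point rounding when the entropy equals log(ell) exactly."""
    classes = equivalence_classes(rows, quasi_identifiers, sensitive)
    for values in classes.values():
        n = len(values)
        entropy = -sum((c / n) * math.log(c / n) for c in Counter(values).values())
        if entropy < math.log(ell) - tol:
            return False
    return True

# Hypothetical table whose quasi-identifiers are already generalized.
rows = [
    {"age": "20-29", "zip": "476**", "diagnosis": "flu"},
    {"age": "20-29", "zip": "476**", "diagnosis": "flu"},
    {"age": "20-29", "zip": "476**", "diagnosis": "flu"},
    {"age": "20-29", "zip": "476**", "diagnosis": "asthma"},
    {"age": "40-49", "zip": "479**", "diagnosis": "flu"},
    {"age": "40-49", "zip": "479**", "diagnosis": "cancer"},
]
quasi_identifiers = ["age", "zip"]

print(is_distinct_l_diverse(rows, quasi_identifiers, "diagnosis", 2))  # True
print(is_entropy_l_diverse(rows, quasi_identifiers, "diagnosis", 2))   # False
```

The first class holds three flu records and one asthma record. It has two distinct values, but its entropy is about 0.56, below log 2 ≈ 0.69. It therefore satisfies distinct 2-diversity while failing entropy 2-diversity: one value dominates, so an attacker can guess the diagnosis with 75% confidence.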

Noise injection

L-diversity enhances data privacy by ensuring diverse sensitive attribute values within each equivalence class, limiting attribute disclosure risks without adding noise. Differential privacy injects calibrated noise into query outputs, providing strong mathematical guarantees against individual data re-identification. Explore the mechanisms and effectiveness of noise injection in differential privacy to understand its privacy-preserving advantages.
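A common concrete form of noise injection is the Laplace mechanism, sketched below for a counting query. The sketch assumes that adding or removing one record changes the count by at most 1 (sensitivity 1), so noise drawn from Laplace(0, 1/ε) gives ε-differential privacy. It uses only Python's standard `random` module and samples Laplace noise as the difference of two exponential draws. The function names and data are hypothetical. This is a teaching sketch rather than a production implementation: real DP libraries also handle floating-point and randomness pitfalls that this code ignores.

```python
import random

def laplace_noise(scale, rng):
    """Draw from Laplace(0, scale): the difference of two i.i.d. exponentials
    with mean `scale` is Laplace-distributed with that scale."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def noisy_count(records, predicate, epsilon, rng):
    """Release an epsilon-DP count. A counting query has sensitivity 1,
    so the Laplace scale is sensitivity / epsilon = 1 / epsilon."""
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1 / epsilon, rng)

# Hypothetical sensitive column; the true number of "flu" records is 3.
diagnoses = ["flu", "flu", "asthma", "cancer", "flu", "diabetes"]
rng = random.Random(42)  # seeded for reproducibility; not a secure RNG

for epsilon in (0.1, 1.0, 10.0):
    answer = noisy_count(diagnoses, lambda d: d == "flu", epsilon, rng)
    print(f"epsilon={epsilon}: noisy count = {answer:.2f}")
```

Smaller values of ε give noisier answers, which is the utility cost noted in the comparison table.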

dowidth.com