Diffusion models generate samples from complex, high-dimensional data distributions by learning to reverse a gradual noising process. Markov Chain Monte Carlo (MCMC) techniques approximate a probability distribution by iteratively sampling from a Markov chain, and are widely used in Bayesian inference and statistical mechanics. This article compares how the two approaches work and where each is applied.

Why it is important

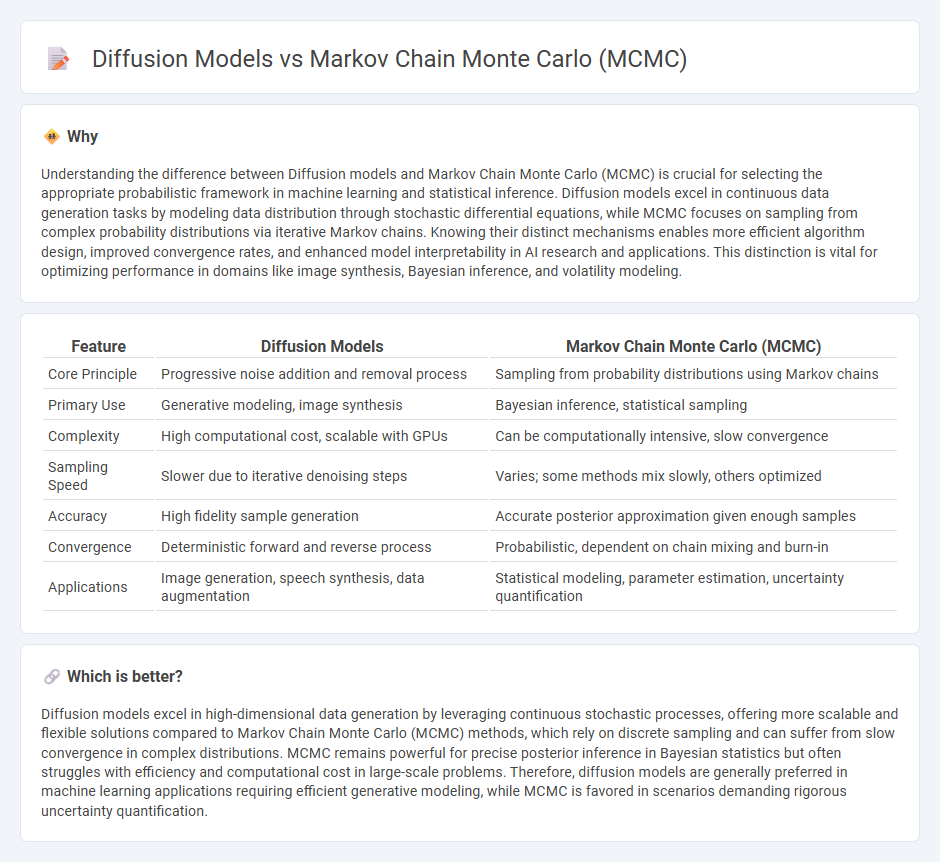

Understanding the difference between diffusion models and Markov Chain Monte Carlo (MCMC) matters when choosing a probabilistic framework for machine learning or statistical inference. Diffusion models learn a data distribution from examples and generate new samples by reversing a noising process, often described with stochastic differential equations. MCMC instead draws samples from a distribution whose density is known, typically only up to a normalizing constant, by running an iterative Markov chain. Knowing which situation applies (training samples available versus a density available) guides algorithm design in domains such as image synthesis, Bayesian inference, and volatility modeling.

Comparison Table

| Feature | Diffusion Models | Markov Chain Monte Carlo (MCMC) |
|---|---|---|
| Core Principle | Progressive noise addition, followed by learned noise removal | Sampling from probability distributions using Markov chains |
| Primary Use | Generative modeling, image synthesis | Bayesian inference, statistical sampling |
| Complexity | High training and sampling cost; parallelizes well on GPUs | Can be computationally intensive; convergence can be slow |
| Sampling Speed | Many sequential denoising steps per sample | Varies; some chains mix slowly, others are well tuned |
| Accuracy | High-fidelity sample generation | Accurate posterior approximation given enough samples |
| Convergence | Fixed number of denoising steps; quality depends on the learned model | Asymptotic; depends on chain mixing and burn-in |
| Applications | Image generation, speech synthesis, data augmentation | Statistical modeling, parameter estimation, uncertainty quantification |

Which is better?

Diffusion models excel at high-dimensional data generation: once trained, they produce each sample in a fixed number of denoising steps and scale well to data such as images and audio. MCMC methods need no training data, only a target density known up to a constant, but their chains can converge slowly on complex distributions, which makes them costly in large-scale problems. MCMC remains powerful for precise posterior inference in Bayesian statistics. Diffusion models are therefore generally preferred in machine learning applications that require efficient generative modeling, while MCMC is favored in scenarios that demand rigorous uncertainty quantification.

Connection

Diffusion models generate data by progressively transforming noise into structured outputs, and both their forward noising process and their reverse denoising process are Markov chains. MCMC methods sample from complex probability distributions through iterative transitions that leave the target distribution invariant, commonly by satisfying detailed balance, which parallels the stepwise refinement in diffusion models. The link is most direct in score-based diffusion models, whose samplers can use Langevin dynamics, a gradient-based Markov chain update. The two still differ: an MCMC chain targets one fixed distribution, whereas a diffusion sampler moves through a sequence of noise levels, so MCMC convergence guarantees do not carry over automatically.
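The shared machinery can be illustrated with unadjusted Langevin dynamics, a Markov chain that moves along the score (the gradient of the log-density) and adds Gaussian noise. The sketch below is a minimal illustration, not a reference implementation: the target N(3, 0.5^2), the step size, and the names `gaussian_score` and `langevin_chain` are assumptions chosen for the example. In a score-based diffusion model, a neural network would supply the score instead of the closed form used here.

```python
import math
import random


def gaussian_score(x, mean=3.0, std=0.5):
    """Score d/dx log p(x) of a Gaussian N(mean, std^2) (assumed toy target)."""
    return -(x - mean) / std ** 2


def langevin_chain(score, n_steps, step_size=0.01, x0=0.0, seed=0):
    """Unadjusted Langevin dynamics:
    x <- x + step_size * score(x) + sqrt(2 * step_size) * standard normal noise.
    Without a Metropolis correction the stationary distribution is only
    approximately the target; the bias shrinks with the step size."""
    rng = random.Random(seed)
    x = x0
    path = []
    for _ in range(n_steps):
        noise = rng.gauss(0.0, 1.0)
        x = x + step_size * score(x) + math.sqrt(2.0 * step_size) * noise
        path.append(x)
    return path


path = langevin_chain(gaussian_score, n_steps=50_000)
kept = path[1_000:]  # discard burn-in while the chain travels from x0 to the target
mean = sum(kept) / len(kept)
var = sum((x - mean) ** 2 for x in kept) / len(kept)
print(f"mean ~ {mean:.2f}, std ~ {math.sqrt(var):.2f}")
```

Adding a Metropolis accept/reject step to this update gives the Metropolis-adjusted Langevin algorithm, which removes the step-size bias at the cost of evaluating the target density.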

Key Terms

Sampling

Markov Chain Monte Carlo (MCMC) algorithms generate samples by constructing a Markov chain that has the desired distribution as its equilibrium distribution, which lets them explore complex probability spaces using only an unnormalized density. Successive samples are correlated, so early iterations are usually discarded as burn-in. Diffusion models use stochastic differential equations, or a discretized equivalent, to iteratively transform a simple distribution such as Gaussian noise into the target distribution, which often scales better to high-dimensional data once a model has been trained.
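As a concrete instance of the MCMC side, here is a minimal sketch of random-walk Metropolis, one of the simplest MCMC algorithms. The two-component Gaussian mixture target, the proposal step size, and the function names `log_target` and `metropolis` are illustrative assumptions, not part of any particular library.

```python
import math
import random


def log_target(x):
    """Unnormalized log-density of an equal mixture of N(-2, 1) and N(2, 1)
    (assumed toy target), computed with log-sum-exp for numerical stability."""
    a = -0.5 * (x + 2.0) ** 2
    b = -0.5 * (x - 2.0) ** 2
    m = max(a, b)
    return m + math.log(math.exp(a - m) + math.exp(b - m))


def metropolis(log_density, n_samples, step=2.0, x0=0.0, burn_in=1_000, seed=0):
    """Random-walk Metropolis: propose x' = x + N(0, step^2) and accept with
    probability min(1, p(x') / p(x)). The proposal is symmetric, so no
    Hastings correction is needed."""
    rng = random.Random(seed)
    x, log_p = x0, log_density(x0)
    samples = []
    for i in range(burn_in + n_samples):
        proposal = x + rng.gauss(0.0, step)
        log_p_new = log_density(proposal)
        accept_prob = math.exp(min(0.0, log_p_new - log_p))
        if rng.random() < accept_prob:
            x, log_p = proposal, log_p_new
        if i >= burn_in:
            samples.append(x)  # a rejected proposal repeats the current state
    return samples


samples = metropolis(log_target, n_samples=40_000)
mean = sum(samples) / len(samples)
var = sum((s - mean) ** 2 for s in samples) / len(samples)
print(f"mean ~ {mean:.2f}, variance ~ {var:.2f}")
```

The target has mean 0 and variance 5, so the printed estimates should land near those values. Only the ratio of densities enters the accept step, which is why the normalizing constant is never needed.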

Probabilistic Models

Viewed as probabilistic models, the two approaches start from different information. MCMC algorithms assume the model already specifies a density, such as a Bayesian posterior, and construct a Markov chain with that density as its stationary distribution. Diffusion models define the distribution implicitly: a stochastic differential equation gradually turns data into a simple distribution, and a learned reverse process maps that simple distribution back to the data distribution, which suits high-dimensional generative tasks.
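The two ideas can be written side by side. The equations below follow a commonly used notation rather than anything fixed by this article: the symbols $\pi$ (target density), $T$ (transition kernel), $f$ (drift), $g$ (diffusion coefficient), $w$ (Brownian motion), and $p_t$ (marginal density at time $t$) are assumptions introduced here for illustration.

```latex
\begin{align}
  % MCMC: the transition kernel T leaves the target density \pi invariant
  \pi(x') &= \int \pi(x)\, T(x' \mid x)\, dx \\
  % Detailed balance: a sufficient, but not necessary, condition for invariance
  \pi(x)\, T(x' \mid x) &= \pi(x')\, T(x \mid x') \\
  % Diffusion: forward (noising) SDE
  dx &= f(x, t)\, dt + g(t)\, dw \\
  % Diffusion: reverse-time (generative) SDE, driven by the score of p_t
  dx &= \left[ f(x, t) - g(t)^2\, \nabla_x \log p_t(x) \right] dt + g(t)\, d\bar{w}
\end{align}
```

In MCMC the density $\pi$ is given and the kernel $T$ is designed around it. In a diffusion model the forward SDE is given and the score $\nabla_x \log p_t(x)$ is what has to be learned from data.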

Generative Processes

MCMC methods generate samples by constructing a Markov chain that converges to a target distribution, relying on iterative stochastic transitions whose samples are then used to estimate properties of complex probability densities. Diffusion models define a stochastic process, in continuous time or in many discrete steps, that gradually turns data into noise, and train a neural network to reverse this diffusion so that noise can be transformed into high-fidelity samples. The trade-off is between MCMC's asymptotic convergence to a known target and the scalable generation of diffusion models, whose quality depends on how well the reverse process has been learned.
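The forward and reverse diffusion processes can be sketched on a one-dimensional toy problem. Everything specific below is an assumption made for illustration: the data distribution N(2, 0.5^2), the linear noise schedule with 200 steps, and the function names. Because the data is Gaussian, the score of each noised distribution is available in closed form, so `exact_score` stands in for the neural network that a real diffusion model would train.

```python
import math
import random

T = 200  # number of noise levels (assumed)
BETAS = [1e-4 + (0.05 - 1e-4) * i / (T - 1) for i in range(T)]  # linear schedule
ALPHA_BARS = []  # cumulative products of (1 - beta)
_running = 1.0
for _beta in BETAS:
    _running *= 1.0 - _beta
    ALPHA_BARS.append(_running)

DATA_MEAN, DATA_STD = 2.0, 0.5  # toy data distribution N(2, 0.5^2)


def forward_noise(x0, t, rng):
    """Forward process in closed form:
    x_t = sqrt(alpha_bar_t) * x_0 + sqrt(1 - alpha_bar_t) * noise."""
    a = ALPHA_BARS[t]
    return math.sqrt(a) * x0 + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0)


def exact_score(x, t):
    """Score of the noised data distribution at level t. Analytic here because
    the data is Gaussian; a trained network would approximate this."""
    a = ALPHA_BARS[t]
    mean_t = math.sqrt(a) * DATA_MEAN
    var_t = a * DATA_STD ** 2 + (1.0 - a)
    return -(x - mean_t) / var_t


def reverse_sample(rng):
    """Ancestral sampling: start from N(0, 1) and denoise one level at a time."""
    x = rng.gauss(0.0, 1.0)
    for t in reversed(range(T)):
        beta = BETAS[t]
        # Posterior mean of the previous, less noisy state given the current one.
        x = (x + beta * exact_score(x, t)) / math.sqrt(1.0 - beta)
        if t > 0:
            x += math.sqrt(beta) * rng.gauss(0.0, 1.0)  # no noise on the last step
    return x


rng = random.Random(0)
generated = [reverse_sample(rng) for _ in range(2_000)]
mean = sum(generated) / len(generated)
std = math.sqrt(sum((x - mean) ** 2 for x in generated) / len(generated))
print(f"generated mean ~ {mean:.2f}, std ~ {std:.2f}")
```

Each generated value costs exactly `T` denoising steps and is independent of the others, in contrast to an MCMC chain, where successive samples are correlated and the number of steps needed for convergence is not known in advance.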

