Edge intelligence processes data in real time at its source, on or near the device that produces it, which enables faster decisions and lower latency than traditional cloud-based AI systems. AI accelerators, such as GPUs and TPUs, are specialized hardware that speed up machine learning workloads, chiefly the matrix and tensor operations at the core of neural networks. The sections below explain how these technologies differ and how they work together in data-driven applications.

Why it is important

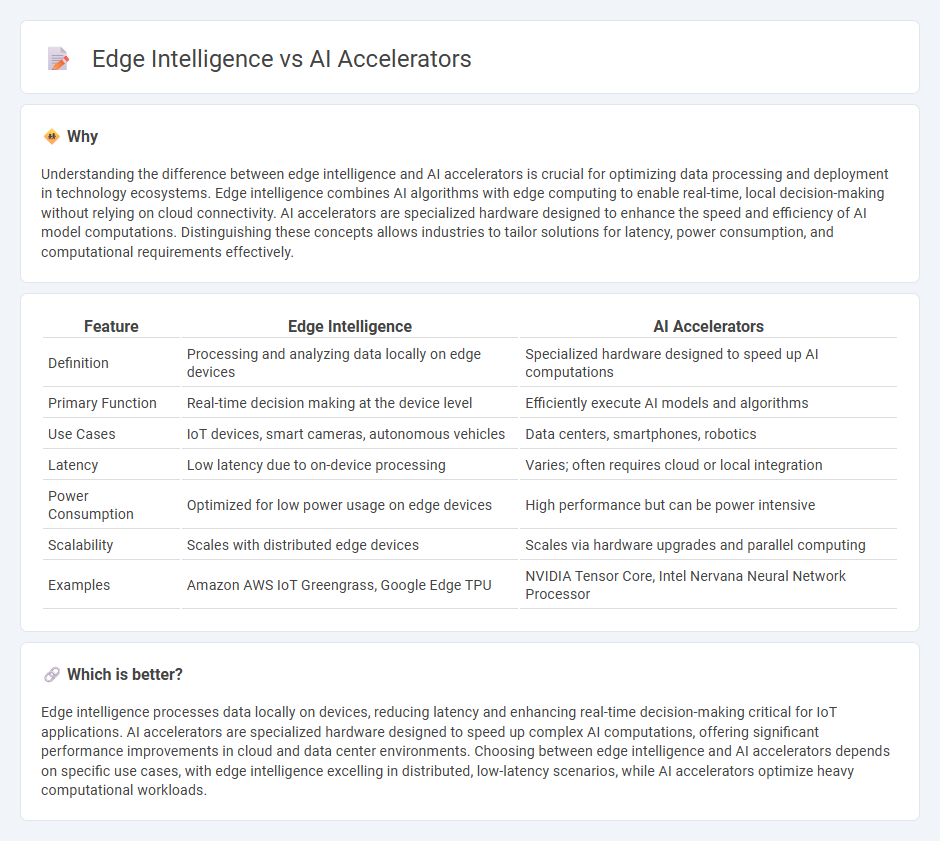

Understanding the difference between edge intelligence and AI accelerators helps teams decide where data should be processed and what hardware to deploy. Edge intelligence combines AI algorithms with edge computing so that devices can make decisions locally and in real time, without depending on cloud connectivity. AI accelerators are specialized hardware that make AI model computations faster and more energy-efficient. Keeping the two concepts distinct lets organizations match each solution to its latency, power, and compute requirements.

Comparison Table

| Feature | Edge Intelligence | AI Accelerators |
|---|---|---|
| Definition | Processing and analyzing data locally on edge devices | Specialized hardware designed to speed up AI computations |
| Primary Function | Real-time decision-making at the device level | Efficient execution of AI models and algorithms |
| Use Cases | IoT devices, smart cameras, autonomous vehicles | Data centers, smartphones, robotics |
| Latency | Low, because data is processed on the device | Shortens compute time; end-to-end latency also depends on whether the accelerator is local or reached over a network |
| Power Consumption | Optimized for low power use on edge devices | Efficient per operation, but high-performance parts can draw substantial total power |
| Scalability | Scales with the number of distributed edge devices | Scales via hardware upgrades and parallel computing |
| Examples | AWS IoT Greengrass, Google Edge TPU (an accelerator built for edge inference) | NVIDIA GPUs with Tensor Cores, Intel Nervana Neural Network Processor |

Which is better?

Edge intelligence and AI accelerators are not strict alternatives: one is a deployment approach, the other a class of hardware. Edge intelligence processes data locally on devices, which reduces latency and supports the real-time decisions that IoT applications depend on. AI accelerators speed up complex AI computations and deliver significant performance gains in cloud and data-center environments. The right emphasis depends on the use case: edge intelligence suits distributed, latency-sensitive scenarios, while AI accelerators address computationally heavy workloads.

Connection

Edge intelligence relies on AI accelerators (specialized hardware such as GPUs, TPUs, and FPGAs) to process data in real time at the network edge, reducing latency and bandwidth usage. These accelerators run AI models efficiently on the edge devices themselves, improving responsiveness and power efficiency in applications such as autonomous vehicles, IoT systems, and smart cameras. By combining edge intelligence with AI accelerators, industries achieve faster decision-making and better scalability without depending heavily on cloud infrastructure.
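To make the bandwidth argument concrete, the sketch below compares uplink traffic for a smart camera that streams video to the cloud for inference with one that runs the model locally and sends only detection results. Every figure in it (frame size, compression ratio, frame rate, result size, and event rate) is a hypothetical assumption chosen for illustration, not a measurement.

```python
# All figures are hypothetical assumptions for illustration, not measurements.
RAW_FRAME_BYTES = 1920 * 1080 * 3   # one uncompressed 1080p RGB frame
COMPRESSION_RATIO = 20              # assumed video-codec compression
FPS = 30                            # frames captured per second
RESULT_BYTES = 200                  # assumed size of one detection message
RESULTS_PER_SECOND = 2              # assumed detections actually reported


def uplink_bytes_per_second(infer_on_device: bool) -> int:
    """Estimate uplink traffic for cloud inference vs. on-device inference."""
    if infer_on_device:
        # Edge intelligence: the model runs locally and only results leave the device.
        return RESULT_BYTES * RESULTS_PER_SECOND
    # Cloud inference: every compressed frame must be uploaded.
    return RAW_FRAME_BYTES // COMPRESSION_RATIO * FPS


cloud = uplink_bytes_per_second(infer_on_device=False)
edge = uplink_bytes_per_second(infer_on_device=True)
print(f"cloud: {cloud:,} B/s, edge: {edge:,} B/s, reduction: {cloud / edge:,.0f}x")
```

With these assumed numbers the on-device path sends roughly 23,000 times less data upstream; real savings depend on the codec, the model's output, and how often events occur.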

Key Terms

Hardware Optimization

AI accelerators improve performance through hardware designed for neural network computation: GPUs, TPUs, and FPGAs provide parallel arithmetic tailored to the matrix and tensor operations that dominate these networks. Edge intelligence uses such hardware to run real-time data processing and AI inference directly on edge devices, reducing latency and bandwidth usage.
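One widely used way accelerators speed up neural network computation is low-precision integer arithmetic: weights and activations are quantized to 8-bit integers, multiplied and accumulated in integer units, then rescaled. The sketch below applies symmetric per-tensor int8 quantization to a dot product in plain Python. It illustrates the arithmetic only; the helper names are hypothetical and not any accelerator's API, and real toolchains add details such as zero points, per-channel scales, and calibration.

```python
def quantize_int8(values: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization: float -> int8 with a single scale."""
    max_abs = max(abs(v) for v in values) or 1.0  # avoid a zero scale
    scale = max_abs / 127.0
    return [max(-127, min(127, round(v / scale))) for v in values], scale


def int8_dot(a: list[float], b: list[float]) -> float:
    """Dot product computed with integer multiply-accumulate, then rescaled."""
    qa, scale_a = quantize_int8(a)
    qb, scale_b = quantize_int8(b)
    acc = 0  # hardware typically uses a wider (e.g. 32-bit) integer accumulator
    for x, y in zip(qa, qb):
        acc += x * y  # one integer MAC per element
    return acc * scale_a * scale_b


weights = [0.5, -1.2, 0.33, 0.9]
inputs = [1.0, 0.25, -0.7, 2.0]
exact = sum(w * x for w, x in zip(weights, inputs))
approx = int8_dot(weights, inputs)
print(f"float: {exact:.4f}, int8: {approx:.4f}")
```

The integer result lands close to the floating-point one; that small error is the trade-off for smaller, cheaper, more energy-efficient arithmetic units.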

Low Latency Processing

AI accelerators lower processing latency with hardware built for computational speed and energy efficiency, which significantly shortens inference times in AI applications. Edge intelligence embeds AI capabilities directly in local devices or edge nodes, minimizing data-transmission delays and enabling real-time decisions in latency-sensitive settings such as autonomous vehicles and industrial IoT. Combining the two addresses both sources of delay: accelerators cut compute time, and edge deployment removes the network round trip.
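The two sources of delay can be separated with a simple latency budget: end-to-end latency is roughly the network round trip plus upload time plus inference time. The sketch below compares three deployment paths against a 50 ms deadline. The class name and every timing value are hypothetical placeholders, not benchmarks; real numbers depend on the model, hardware, and network.

```python
from dataclasses import dataclass


@dataclass
class InferencePath:
    """One way to run a model; all timings are in milliseconds (hypothetical)."""
    name: str
    inference_ms: float
    network_rtt_ms: float = 0.0  # zero when inference runs on the device
    upload_ms: float = 0.0       # time to send input data, zero when local

    def total_ms(self) -> float:
        return self.network_rtt_ms + self.upload_ms + self.inference_ms


DEADLINE_MS = 50.0  # assumed real-time requirement

paths = [
    InferencePath("cloud accelerator", inference_ms=5, network_rtt_ms=60, upload_ms=15),
    InferencePath("edge device, CPU only", inference_ms=150),
    InferencePath("edge device + accelerator", inference_ms=20),
]

for p in paths:
    verdict = "meets" if p.total_ms() <= DEADLINE_MS else "misses"
    print(f"{p.name}: {p.total_ms():.0f} ms ({verdict} deadline)")
```

Under these assumed numbers, only the path that combines local execution with an accelerator meets the deadline: the cloud path is dominated by network time, and the CPU-only edge path by compute time.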

On-Device Learning

AI accelerators support edge intelligence by providing hardware that makes on-device learning faster, so models can be updated locally with low latency. On-device learning uses this hardware to personalize AI models directly on smartphones, wearables, and IoT devices without relying on cloud connectivity, which also keeps personal data on the device. Because many edge accelerators are designed primarily for inference, on-device learning is often limited to lightweight updates, such as fine-tuning a model's final layer.
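A common lightweight form of on-device learning keeps a pretrained model frozen and fine-tunes only a small final layer on local data. The sketch below assumes that final layer is a logistic-regression classifier over features produced by the frozen model and updates it with plain stochastic gradient descent. The function names, learning rate, epoch count, and toy samples are hypothetical; on real hardware these operations would be dispatched to the device's accelerator or CPU, but the key property is the same: the training data never leaves the device.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights: list[float], bias: float, features: list[float]) -> float:
    """Probability of the positive class from the classifier head."""
    return sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)


def personalize(
    weights: list[float],
    bias: float,
    local_samples: list[tuple[list[float], int]],
    lr: float = 0.1,
    epochs: int = 20,
) -> tuple[list[float], float]:
    """Fine-tune a logistic-regression head with SGD on on-device samples."""
    w, b = list(weights), bias  # copy so the shipped global model stays intact
    for _ in range(epochs):
        for features, label in local_samples:
            err = predict(w, b, features) - label  # log-loss gradient w.r.t. the logit
            w = [wi - lr * err * xi for wi, xi in zip(w, features)]
            b -= lr * err
    return w, b


# Toy features from a frozen backbone plus user-specific labels (hypothetical).
samples = [([1.0, 0.0], 1), ([0.0, 1.0], 0)]
w, b = personalize([0.0, 0.0], 0.0, samples)
print(predict(w, b, [1.0, 0.0]), predict(w, b, [0.0, 1.0]))
```

Updating only the head keeps compute and memory small enough for constrained devices, at the cost of not adapting the frozen feature extractor.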

Source and External Links

What is an AI accelerator? - AI accelerators, such as GPUs, FPGAs, and ASICs, are specialized hardware designed to dramatically speed up and improve the efficiency of AI and deep learning applications, enabling breakthroughs at scale with lower latency and power consumption compared to traditional CPUs.

What's the Difference Between AI accelerators and GPUs? - While GPUs are a common type of AI accelerator, more specialized accelerators like ASICs can be 100 to 1,000 times more energy-efficient for specific AI tasks, leveraging advanced parallel processing and heterogeneous chip architectures for superior performance in targeted applications.

Artificial Intelligence (AI) Accelerators - AI accelerators can be discrete hardware components working alongside CPUs in parallel computing models or integrated features within CPUs themselves, both aimed at delivering high-throughput, low-latency performance for demanding AI workloads across devices, edge, and data centers.

dowidth.com
