Biosignal decoding interprets physiological signals such as EEG (brain activity) or EMG (muscle activity) to infer human intentions, enabling direct communication between the body and external devices. Gesture recognition uses computer vision and sensor data to identify and classify human gestures for controlling systems or enhancing interaction. Both technologies are increasingly used in healthcare and human-computer interaction.

Why it is important

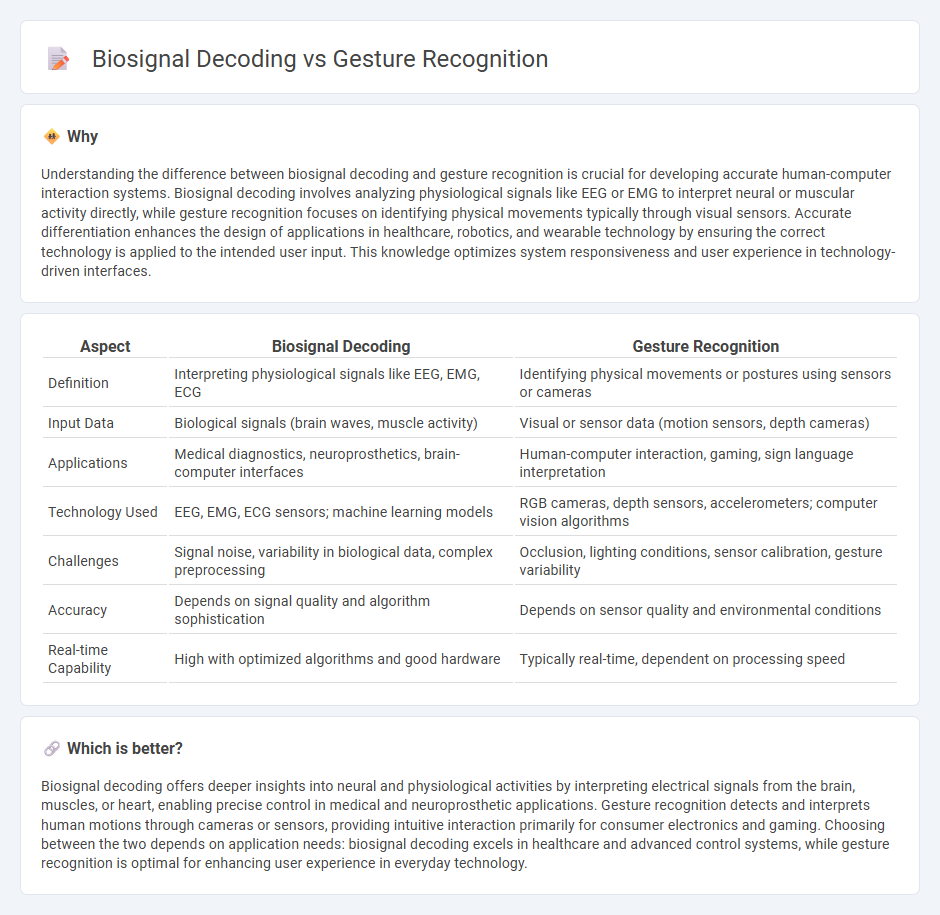

Understanding the difference between biosignal decoding and gesture recognition is important for building accurate human-computer interaction systems. Biosignal decoding analyzes physiological signals such as EEG or EMG to infer neural or muscular activity directly, whereas gesture recognition identifies physical movements, typically from cameras or motion sensors. Knowing which kind of input a system actually needs helps designers in healthcare, robotics, and wearable technology choose the right sensing and processing approach, which in turn affects system responsiveness and user experience.

Comparison Table

| Aspect | Biosignal Decoding | Gesture Recognition |
|---|---|---|
| Definition | Interpreting physiological signals like EEG, EMG, ECG | Identifying physical movements or postures using sensors or cameras |
| Input Data | Biological signals (brain waves, muscle activity) | Visual or sensor data (motion sensors, depth cameras) |
| Applications | Medical diagnostics, neuroprosthetics, brain-computer interfaces | Human-computer interaction, gaming, sign language interpretation |
| Technology Used | EEG, EMG, ECG sensors; machine learning models | RGB cameras, depth sensors, accelerometers; computer vision algorithms |
| Challenges | Signal noise, variability in biological data, complex preprocessing | Occlusion, lighting conditions, sensor calibration, gesture variability |
| Accuracy | Depends on signal quality and algorithm sophistication | Depends on sensor quality and environmental conditions |
| Real-time Capability | High with optimized algorithms and good hardware | Typically real-time, dependent on processing speed |
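The real-time row depends partly on how a continuous signal stream is segmented before classification. A common approach for both EMG and motion-sensor data is to process overlapping windows: the window length sets how much history each decision sees, and the hop sets how often a new decision is produced. The sketch below assumes NumPy, a 1000 Hz sampling rate, a 200 ms window, and a 50 ms hop; the function name `sliding_windows` and all parameter values are illustrative assumptions, not taken from the article.

```python
import numpy as np


def sliding_windows(signal: np.ndarray, win: int, hop: int) -> np.ndarray:
    """Split a (samples, channels) array into overlapping windows.

    Returns an array of shape (n_windows, win, channels). Trailing samples
    that do not fill a complete window are dropped.
    """
    if signal.ndim == 1:
        signal = signal[:, None]  # treat 1-D input as a single channel
    n_samples, n_channels = signal.shape
    if n_samples < win:
        return np.empty((0, win, n_channels), dtype=signal.dtype)
    starts = range(0, n_samples - win + 1, hop)
    return np.stack([signal[s:s + win] for s in starts])


# Hypothetical stream: 1 s of 8-channel data sampled at 1000 Hz.
fs = 1000
stream = np.random.default_rng(0).standard_normal((fs, 8))
win = fs * 200 // 1000  # assumed 200 ms analysis window
hop = fs * 50 // 1000   # assumed 50 ms hop: one new decision every 50 ms
windows = sliding_windows(stream, win, hop)
print(windows.shape)
```

With these assumed values the system produces a decision every 50 ms, but each decision also depends on a full 200 ms of data, so longer windows tend to trade responsiveness for more stable features.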

Which is better?

Neither approach is better in general; the right choice depends on the application. Biosignal decoding offers deeper insight into neural and physiological activity by interpreting electrical signals from the brain, muscles, or heart, which enables precise control in medical and neuroprosthetic applications. Gesture recognition detects and interprets human motion through cameras or motion sensors, providing intuitive interaction, mainly in consumer electronics and gaming. In short, biosignal decoding suits healthcare and advanced control systems, while gesture recognition suits everyday user-facing technology.

Connection

The two fields overlap. Biosignal decoding translates physiological signals such as EEG or EMG into interpretable data, and EMG in particular can be used to recognize gestures by identifying the muscle activation patterns that produce them. Gesture recognition systems built on such decoded biosignals can infer user intent in applications like prosthetics control and virtual reality. Machine learning models are commonly used to improve the accuracy and responsiveness of these biosignal-based gesture recognition systems.
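To make the EMG-to-gesture connection concrete, here is a minimal sketch of a biosignal-based gesture classifier. It extracts a few widely used time-domain EMG features (mean absolute value, root mean square, waveform length, zero crossings) from each window and assigns the gesture whose feature centroid is closest. Everything here is an assumption for illustration: the data are synthetic, each hypothetical gesture mainly activates one of four channels, and the helper names (`td_features`, `CentroidClassifier`, `synth_window`, `make_set`) are invented. Practical myoelectric systems often use classifiers such as LDA or SVMs on real, filtered recordings.

```python
import numpy as np


def td_features(window: np.ndarray) -> np.ndarray:
    """Time-domain features for one (samples, channels) EMG window, per channel."""
    mav = np.mean(np.abs(window), axis=0)                 # mean absolute value
    rms = np.sqrt(np.mean(window ** 2, axis=0))           # root mean square
    wl = np.sum(np.abs(np.diff(window, axis=0)), axis=0)  # waveform length
    sign = np.signbit(window).astype(int)
    zc = np.sum(np.diff(sign, axis=0) != 0, axis=0)       # zero crossings (no noise threshold)
    return np.concatenate([mav, rms, wl, zc])


class CentroidClassifier:
    """Hypothetical helper: assigns each sample to the class with the closest mean feature vector."""

    def fit(self, X: np.ndarray, y: np.ndarray) -> "CentroidClassifier":
        self.classes_ = np.unique(y)
        self.centroids_ = np.stack([X[y == c].mean(axis=0) for c in self.classes_])
        return self

    def predict(self, X: np.ndarray) -> np.ndarray:
        dists = np.linalg.norm(X[:, None, :] - self.centroids_[None, :, :], axis=2)
        return self.classes_[np.argmin(dists, axis=1)]


rng = np.random.default_rng(42)


def synth_window(gesture: int, n_samples: int = 200, n_channels: int = 4) -> np.ndarray:
    """Synthetic EMG window (assumption): the given gesture mainly activates one channel."""
    scale = np.full(n_channels, 0.1)
    scale[gesture] = 1.0
    return rng.standard_normal((n_samples, n_channels)) * scale


def make_set(per_class: int, n_gestures: int = 3):
    X = [td_features(synth_window(g)) for g in range(n_gestures) for _ in range(per_class)]
    y = [g for g in range(n_gestures) for _ in range(per_class)]
    return np.array(X), np.array(y)


X_train, y_train = make_set(20)
X_test, y_test = make_set(10)

# Standardize with training statistics so no single feature scale dominates.
mu, sigma = X_train.mean(axis=0), X_train.std(axis=0) + 1e-12
clf = CentroidClassifier().fit((X_train - mu) / sigma, y_train)
pred = clf.predict((X_test - mu) / sigma)
print("test accuracy:", np.mean(pred == y_test))
```

The features are standardized before classification because raw zero-crossing counts are on a much larger scale than the amplitude features and would otherwise dominate the Euclidean distance.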

Key Terms

Sensors

Gesture recognition typically relies on vision-based sensors such as RGB cameras, depth sensors, and infrared devices, often complemented by motion sensors such as accelerometers, to capture and interpret human movements for real-time interaction with digital systems. Biosignal decoding employs physiological sensors, including electromyography (EMG), electroencephalography (EEG), and electrocardiography (ECG), to measure the electrical activity of muscles, the brain, and the heart, giving deeper insight into a person's intent and physiological state. The choice of sensor largely determines the comparative strengths and weaknesses of each approach.
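Physiological sensor output usually needs preprocessing before it is useful for decoding. One common step for EMG is computing a linear envelope, a smoothed estimate of muscle activation level. The sketch below assumes NumPy and a single-channel trace: it removes the DC offset, full-wave rectifies the signal, and smooths it with a moving average. The function name `emg_envelope`, the 50 ms smoothing window, and the synthetic contraction burst are assumptions. A real pipeline would typically band-pass filter the raw signal first (surface EMG is often filtered to roughly 20 to 450 Hz) and, for real-time use, replace the whole-trace mean and centered smoothing with causal filters.

```python
import numpy as np


def emg_envelope(raw: np.ndarray, fs: float, smooth_ms: float = 50.0) -> np.ndarray:
    """Linear envelope of a 1-D EMG trace: de-mean, full-wave rectify, smooth."""
    centered = raw - np.mean(raw)                    # remove DC offset (offline)
    rectified = np.abs(centered)                     # full-wave rectification
    k = max(1, int(round(smooth_ms * fs / 1000.0)))  # smoothing window in samples
    kernel = np.ones(k) / k
    # Centered (non-causal) moving average, acting as a simple low-pass filter.
    return np.convolve(rectified, kernel, mode="same")


# Hypothetical 2 s trace at 1000 Hz with a muscle contraction from 0.5 s to 1.0 s.
fs = 1000
t = np.arange(2 * fs) / fs
active = (t >= 0.5) & (t < 1.0)
rng = np.random.default_rng(1)
raw = rng.standard_normal(t.size) * np.where(active, 1.0, 0.05)
env = emg_envelope(raw, fs)
print(f"mean envelope: active {env[active].mean():.3f}, rest {env[~active].mean():.3f}")
```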

Pattern Recognition

Gesture recognition relies heavily on visual and motion data from sensors such as cameras and accelerometers to classify human movements, whereas biosignal decoding interprets physiological signals such as EEG, EMG, or ECG to recognize patterns of neural or muscular activity. In gesture recognition, pattern recognition often uses convolutional neural networks (CNNs) to extract spatial features from images. Biosignal decoding commonly applies classifiers such as support vector machines (SVMs) to features computed over short time windows, or recurrent neural networks (RNNs) to model the signal's temporal dynamics directly. These algorithmic differences reflect the distinct nature of the two data sources.
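As an illustration of the SVM side of this comparison, the following sketch trains a linear SVM from scratch with Pegasos-style stochastic sub-gradient descent on the hinge loss. It assumes the biosignal has already been reduced to per-window feature vectors (here, hypothetical standardized RMS values from two EMG channels) and that labels are +1 and -1; the data, the regularization strength `lam`, the iteration count, and the function names are illustrative assumptions. In practice a tested library implementation, such as scikit-learn's `SVC` or `LinearSVC`, would normally be preferred.

```python
import numpy as np


def train_linear_svm(X: np.ndarray, y: np.ndarray, lam: float = 0.01,
                     n_iter: int = 5000, seed: int = 0) -> np.ndarray:
    """Linear SVM via Pegasos-style stochastic sub-gradient descent.

    Minimizes lam/2 * ||w||^2 + mean(max(0, 1 - y * (X_aug @ w))), where X_aug
    is X with a constant 1 column appended so the last weight acts as a bias
    (which is therefore also regularized, a common simplification).
    y must contain labels -1 and +1.
    """
    rng = np.random.default_rng(seed)
    X_aug = np.hstack([X, np.ones((X.shape[0], 1))])
    w = np.zeros(X_aug.shape[1])
    for t in range(1, n_iter + 1):
        i = rng.integers(X_aug.shape[0])  # pick one training sample at random
        eta = 1.0 / (lam * t)             # Pegasos step size
        margin = y[i] * (X_aug[i] @ w)    # uses the weights before this step
        w *= 1.0 - eta * lam              # step along the L2 regularizer's gradient
        if margin < 1.0:                  # hinge loss is active for this sample
            w += eta * y[i] * X_aug[i]
    return w


def predict(w: np.ndarray, X: np.ndarray) -> np.ndarray:
    X_aug = np.hstack([X, np.ones((X.shape[0], 1))])
    return np.where(X_aug @ w >= 0.0, 1, -1)


# Hypothetical standardized features from two EMG channels per window.
rng = np.random.default_rng(7)
n = 100
X_grip = rng.normal([1.5, 1.0], 0.4, size=(n, 2))    # label +1
X_rest = rng.normal([-1.5, -1.0], 0.4, size=(n, 2))  # label -1
X = np.vstack([X_grip, X_rest])
y = np.concatenate([np.ones(n), -np.ones(n)])

w = train_linear_svm(X, y)
y_hat = predict(w, X)
print("training accuracy:", np.mean(y_hat == y))
```

Folding the bias into the weight vector keeps the update rule short, at the cost of also regularizing the bias; with zero-mean features, as assumed here, this has little effect.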

Machine Learning

Gesture recognition uses machine learning to interpret visual or sensor inputs such as images, video, or accelerometer data, allowing systems to identify hand or body movements in real time. Biosignal decoding uses machine learning to analyze physiological data such as EEG, EMG, or ECG signals, extracting patterns related to neural or muscular activity for applications in medical diagnostics and human-computer interaction.
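On the gesture recognition side, a classic baseline for motion-sensor data is template matching with dynamic time warping (DTW), which tolerates the same gesture being performed faster or slower. DTW is not mentioned in the article; it is used here as one simple, well-known example. The sketch assumes NumPy, 3-axis accelerometer traces stored as arrays of shape (time, axes), and two hypothetical gesture templates built from sine curves; the function names and gesture labels are invented for illustration.

```python
import numpy as np


def dtw_distance(a: np.ndarray, b: np.ndarray) -> float:
    """Dynamic time warping distance between sequences of shape (T, d).

    Uses Euclidean distance as the local cost and the standard three-way
    recursion (match, insertion, deletion); runs in O(len(a) * len(b)).
    """
    n, m = len(a), len(b)
    acc = np.full((n + 1, m + 1), np.inf)  # accumulated cost matrix
    acc[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = np.linalg.norm(a[i - 1] - b[j - 1])
            acc[i, j] = local + min(acc[i - 1, j], acc[i, j - 1], acc[i - 1, j - 1])
    return float(acc[n, m])


def classify(sample: np.ndarray, templates: dict) -> str:
    """1-nearest-neighbor: label of the template with the smallest DTW distance."""
    return min(templates, key=lambda label: dtw_distance(sample, templates[label]))


# Hypothetical 3-axis accelerometer templates (x, y, z), 50 samples each.
t = np.linspace(0.0, 1.0, 50)
zeros = np.zeros_like(t)
templates = {
    "swipe_right": np.stack([np.sin(2 * np.pi * t), zeros, zeros], axis=1),
    "raise": np.stack([zeros, zeros, np.sin(np.pi * t)], axis=1),
}

# The same swipe performed more slowly (80 samples) with sensor noise added.
t_slow = np.linspace(0.0, 1.0, 80)
rng = np.random.default_rng(3)
sample = np.stack([np.sin(2 * np.pi * t_slow), np.zeros(80), np.zeros(80)], axis=1)
sample += rng.normal(0.0, 0.05, size=sample.shape)
print(classify(sample, templates))
```

Because this DTW recursion compares every pair of time steps, it becomes slow for long sequences or many templates, which is one reason learned models are often used instead once labeled training data are available.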

