Neural Radiance Fields (NeRF) train a neural network on photographs of a scene taken from known camera positions. The network predicts color and density at any point in space for any viewing direction, and rendering it from new viewpoints produces highly realistic novel views of the captured scene. Traditional ray tracing instead simulates light paths through each pixel of an explicitly modeled scene to produce photorealistic images, but it often requires significant computational resources and time. The sections below compare how the two approaches work and where each fits.

Why it is important

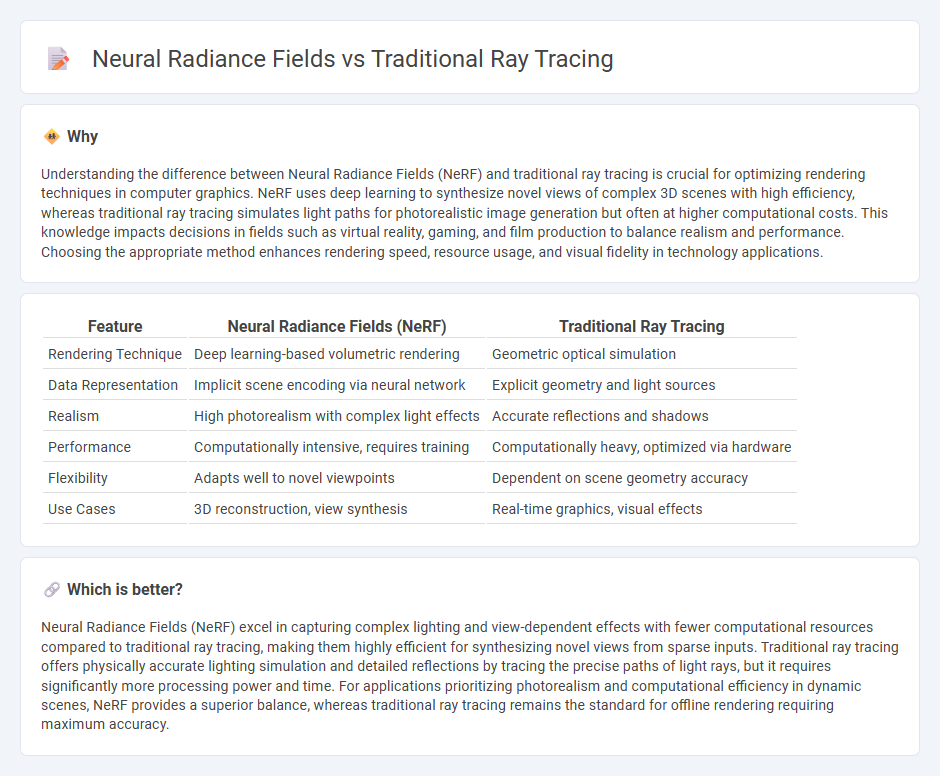

Understanding the difference between Neural Radiance Fields (NeRF) and traditional ray tracing helps in choosing the right rendering technique in computer graphics. NeRF uses deep learning to synthesize novel views of complex real-world 3D scenes from a set of captured images. The original method needs lengthy per-scene training and is slow to render, although later variants greatly reduce both costs. Traditional ray tracing simulates light paths for physically based image generation, and its cost grows with resolution, samples per pixel, and the number of light bounces. In fields such as virtual reality, gaming, and film production, this knowledge guides the balance between realism and performance, and choosing the appropriate method improves rendering speed, resource usage, and visual fidelity.

Comparison Table

| Feature | Neural Radiance Fields (NeRF) | Traditional Ray Tracing |
|---|---|---|
| Rendering Technique | Volume rendering of a learned (deep learning-based) scene representation | Geometric optical simulation of light paths |
| Data Representation | Implicit scene encoding in neural network weights | Explicit geometry, materials, and light sources |
| Realism | Photorealistic, including view-dependent effects captured in the input photos | Physically accurate reflections, refractions, and shadows |
| Performance | Computationally intensive; requires per-scene training before rendering | Computationally heavy; accelerated by dedicated hardware |
| Flexibility | Adapts well to novel viewpoints of a captured scene | Depends on accurate scene geometry |
| Use Cases | 3D reconstruction, novel view synthesis | Real-time graphics (with hardware acceleration), visual effects |
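The "Data Representation" row is easiest to see in code. In an explicit representation, the scene is stored as data (for example, a sphere's center and radius). In an implicit one, the scene is a function you can only query. The sketch below is a hypothetical toy, not the NeRF architecture. `make_toy_field` builds a tiny untrained multilayer perceptron in pure Python that maps a 3D position and a viewing direction to an RGB color and a density. It mirrors NeRF's split in which density depends only on position and color also depends on direction. The layer sizes, random weights, and the absence of positional encoding and training are all simplifying assumptions.

```python
import math
import random


def make_toy_field(hidden=16, seed=0):
    """Hypothetical toy radiance field: (position, direction) -> (rgb, density).

    Weights are random (untrained); a real NeRF is far larger and is trained
    so that rendered images match photographs of the scene.
    """
    rng = random.Random(seed)

    def layer(n_in, n_out):
        # Dense layer: weight matrix (n_out x n_in) and a zero bias vector.
        weights = [[rng.gauss(0.0, 1.0 / math.sqrt(n_in)) for _ in range(n_in)]
                   for _ in range(n_out)]
        return weights, [0.0] * n_out

    def apply(params, x):
        weights, bias = params
        return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]

    trunk = layer(3, hidden)           # position -> features
    sigma_head = layer(hidden, 1)      # features -> density (position only)
    color_head = layer(hidden + 3, 3)  # features + view direction -> rgb

    def field(position, direction):
        features = [max(0.0, v) for v in apply(trunk, position)]   # ReLU
        sigma = max(0.0, apply(sigma_head, features)[0])            # density >= 0
        logits = apply(color_head, features + list(direction))
        rgb = tuple(1.0 / (1.0 + math.exp(-v)) for v in logits)    # sigmoid -> [0, 1]
        return rgb, sigma

    return field
```

Usage: `field = make_toy_field()` followed by `field((0.1, 0.2, -2.5), (0.0, 0.0, -1.0))` returns a color and a density for that point and direction. Querying the function is the only way to "see" the scene, which is why NeRF rendering needs many network evaluations per pixel.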

Which is better?

Neither technique is better in every case. NeRF excels at capturing the complex lighting and view-dependent appearance of a real scene directly from photographs and synthesizing new views of it, without anyone modeling geometry or materials by hand. The trade-offs are per-scene training, a scene that is typically static, and lighting that is baked in rather than simulated, so it cannot easily be changed. Traditional ray tracing offers physically accurate lighting simulation and detailed reflections by tracing the paths of light rays through an explicitly modeled scene, but high-quality results require substantial processing power and time. For reconstructing and re-viewing captured real-world scenes, NeRF provides a strong balance of realism and effort. Traditional ray tracing remains the standard for offline rendering that demands maximum physical accuracy and full control over geometry, materials, and lights.

Connection

Neural radiance fields (NeRFs) borrow the core idea of ray tracing: both cast a ray from the camera through each pixel and compute the light arriving along it. They differ in what happens along the ray. A ray tracer intersects the ray with explicit surfaces and spawns further rays toward lights and other surfaces to simulate light transport. A NeRF instead samples points along the ray, queries its neural network for density and color at each point, and blends the results with volume rendering. Encoding geometry and appearance in a network makes NeRFs well suited to view synthesis from photographs, combining the ray-based image formation of classic rendering with the learning capabilities of neural networks.
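The step both techniques share, generating a ray through a pixel, can be sketched as follows, together with the step where a classic ray tracer diverges: intersecting that ray with explicit geometry. This is a minimal sketch under assumed conventions. The pinhole camera sits at the origin looking down the -z axis, `fov_deg` is a vertical field of view, and a single sphere stands in for the scene. The function names are hypothetical.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def camera_ray(px, py, width, height, fov_deg=60.0):
    """Ray through the centre of pixel (px, py) for a pinhole camera at the origin looking down -z."""
    aspect = width / height
    scale = math.tan(math.radians(fov_deg) / 2.0)
    # Map the pixel centre to [-1, 1] screen coordinates (y points up).
    x = (2.0 * (px + 0.5) / width - 1.0) * aspect * scale
    y = (1.0 - 2.0 * (py + 0.5) / height) * scale
    return (0.0, 0.0, 0.0), normalize((x, y, -1.0))


def hit_sphere(origin, direction, center, radius):
    """Classic ray tracing step: nearest t > 0 with |o + t*d - c| = r, or None on a miss.

    Assumes `direction` is unit length, so the quadratic reduces to t^2 + 2bt + c_term = 0.
    """
    oc = tuple(o - ce for o, ce in zip(origin, center))
    b = sum(d * v for d, v in zip(direction, oc))
    c_term = sum(v * v for v in oc) - radius * radius
    disc = b * b - c_term
    if disc < 0.0:
        return None  # the ray misses the sphere
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > 1e-6:  # ignore hits behind (or at) the ray origin
            return t
    return None
```

For a 3×3 image, the centre pixel's ray points straight down -z and hits a unit sphere centred at (0, 0, -3) at t = 2, while a corner pixel's ray misses it. A NeRF would take the same ray but, instead of calling `hit_sphere`, sample points along it and query its network at each one.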

Key Terms

Rasterization (Traditional Rendering)

Rasterization is not part of ray tracing but the main alternative to it. Instead of tracing rays through pixels, it projects 3D geometry (usually triangles) onto the 2D screen and determines which pixels each triangle covers, which lets GPUs render complex environments in real time for gaming and simulation. Because it does not trace light paths, rasterization approximates effects such as shadows and reflections with separate techniques (for example, shadow maps), and modern games often combine it with ray tracing for those effects.
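Unlike a ray tracer, which starts from a pixel and asks what its ray hits, a rasterizer starts from a triangle and asks which pixel centers it covers. The sketch below uses the common edge-function coverage test on vertices that are assumed to be already projected to 2D screen coordinates. Depth testing, shading, and tie-breaking rules for pixels exactly on shared edges are omitted, and the function names are illustrative.

```python
def edge(a, b, p):
    """Edge function: positive when p lies to the left of the directed edge a -> b."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def rasterize_triangle(v0, v1, v2, width, height):
    """Return the (x, y) pixels whose centres lie inside the 2D triangle v0, v1, v2."""
    area = edge(v0, v1, v2)
    if area == 0:
        return []  # degenerate triangle covers nothing
    # Only visit pixels inside the triangle's bounding box, clamped to the screen.
    xs = [v[0] for v in (v0, v1, v2)]
    ys = [v[1] for v in (v0, v1, v2)]
    x_min, x_max = max(0, int(min(xs))), min(width - 1, int(max(xs)))
    y_min, y_max = max(0, int(min(ys))), min(height - 1, int(max(ys)))
    covered = []
    for y in range(y_min, y_max + 1):
        for x in range(x_min, x_max + 1):
            p = (x + 0.5, y + 0.5)  # sample at the pixel centre
            w0, w1, w2 = edge(v1, v2, p), edge(v2, v0, p), edge(v0, v1, p)
            # Inside when every edge function has the same sign as the area,
            # which makes the test independent of vertex winding order.
            if all(w * area >= 0 for w in (w0, w1, w2)):
                covered.append((x, y))
    return covered
```

For example, the triangle (0, 0), (4, 0), (0, 4) on a 4×4 screen covers the 10 pixels whose centres satisfy x + y ≤ 4. The three edge values, divided by `area`, are also the barycentric weights a rasterizer uses to interpolate depth and color across the triangle.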

Path Tracing (Traditional Ray Tracing)

Path tracing, a widely used form of ray tracing, simulates light transport by tracing many randomly sampled light paths from the camera through each pixel, bouncing them off surfaces in the scene, and averaging the results. This produces highly realistic images with accurate global illumination and shadows. Neural Radiance Fields (NeRF), in contrast, use deep learning to represent a 3D scene as an optimized volumetric function and render convincing novel views of it. However, they reproduce the lighting captured in their training images rather than simulating light transport, so they lack path tracing's physical accuracy.
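The "randomly sampled paths, averaged" idea is Monte Carlo integration of the rendering equation. The sketch below shows one bounce of it: estimating the light a diffuse (Lambertian) surface point reflects by sampling incoming directions uniformly over the hemisphere. A full path tracer applies the same estimator recursively, obtaining each incoming radiance value from another traced bounce. Here, as simplifying assumptions, incoming light comes from a caller-supplied sky function, the surface normal is fixed at +z, and the function names are hypothetical.

```python
import math
import random


def sample_hemisphere(rng):
    """Uniform direction on the hemisphere around +z (pdf = 1 / (2*pi))."""
    z = rng.random()                      # cos(theta) is uniform on [0, 1)
    r = math.sqrt(max(0.0, 1.0 - z * z))
    phi = 2.0 * math.pi * rng.random()
    return (r * math.cos(phi), r * math.sin(phi), z)


def shade_diffuse(albedo, incoming_radiance, n_samples=100_000, seed=0):
    """Monte Carlo estimate of reflected radiance at a Lambertian point with normal +z.

    Estimates the integral over the hemisphere of (albedo / pi) * L_in(w) * cos(theta) dw
    by averaging f * L_in * cos(theta) / pdf over random directions w.
    """
    rng = random.Random(seed)
    pdf = 1.0 / (2.0 * math.pi)
    brdf = albedo / math.pi
    total = 0.0
    for _ in range(n_samples):
        w = sample_hemisphere(rng)
        cos_theta = w[2]                  # dot(w, normal) with normal = +z
        total += brdf * incoming_radiance(w) * cos_theta / pdf
    return total / n_samples
```

Under a uniform white sky (`lambda w: 1.0`) the exact answer equals the albedo, so `shade_diffuse(0.5, lambda w: 1.0)` lands close to 0.5. The residual error is the noise that path tracers reduce by taking more samples per pixel, which is where their computational cost comes from.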

Volume Rendering (Neural Radiance Fields)

Traditional ray tracing simulates light paths by calculating intersections with surfaces, producing photorealistic images. Volumetric effects like smoke or fog have no surfaces to intersect, so they need additional techniques such as ray marching through participating media, which adds considerable cost. Neural Radiance Fields (NeRFs) represent the whole scene as a continuous volumetric radiance function learned by a deep neural network. They render it by sampling points along each ray and accumulating color weighted by density and transmittance, which captures fine details and view-dependent effects.
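The accumulation step can be sketched with the quadrature used in the original NeRF work: C ≈ Σᵢ Tᵢ · (1 − exp(−σᵢ δᵢ)) · cᵢ, where Tᵢ = exp(−Σⱼ<ᵢ σⱼ δⱼ) is the transmittance up to sample i, σᵢ and cᵢ are the density and color at sample i, and δᵢ is the distance to the next sample. In a real NeRF the densities and colors come from querying the network at the sample points, and because this sum is differentiable, training adjusts the network until rendered pixels match the photographs. In the sketch below, the inputs are supplied directly and `composite` is a hypothetical name.

```python
import math


def composite(sigmas, colors, deltas):
    """Volume-render one ray from per-sample densities, colors, and segment lengths.

    Returns (rgb, accumulated_opacity).
    """
    rgb = [0.0, 0.0, 0.0]
    transmittance = 1.0                        # T_i: light surviving to sample i
    for sigma, color, delta in zip(sigmas, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this segment
        weight = transmittance * alpha          # contribution of sample i
        for k in range(3):
            rgb[k] += weight * color[k]
        transmittance *= 1.0 - alpha            # T_{i+1} = T_i * exp(-sigma_i * delta_i)
    return tuple(rgb), 1.0 - transmittance
```

With a constant density of 1 over 20 segments of length 0.1, the accumulated opacity is 1 − e⁻² ≈ 0.865, exactly what a homogeneous fog of that thickness absorbs. Empty space (density 0) contributes nothing, so the same routine handles solid objects and smoke-like media without any surface intersection.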

dowidth.com